Alper Yilmaz

BetterScene: 3D Scene Synthesis with Representation-Aligned Generative Model

Feb 26, 2026

Abstract: We present BetterScene, an approach to enhance novel view synthesis (NVS) quality for diverse real-world scenes using extremely sparse, unconstrained photos. BetterScene leverages the production-ready Stable Video Diffusion (SVD) model pretrained on billions of frames as a strong backbone, aiming to mitigate artifacts and recover view-consistent details at inference time. Conventional methods have developed similar diffusion-based solutions to address these challenges of novel view synthesis. Despite significant improvements, these methods typically rely on off-the-shelf pretrained diffusion priors and fine-tune only the UNet module while keeping other components frozen, which still leads to inconsistent details and artifacts even when incorporating geometry-aware regularizations like depth or semantic conditions. To address this, we investigate the latent space of the diffusion model and introduce two components: (1) temporal equivariance regularization and (2) vision foundation model-aligned representation, both applied to the variational autoencoder (VAE) module within the SVD pipeline. BetterScene integrates a feed-forward 3D Gaussian Splatting (3DGS) model to render features as inputs for the SVD enhancer and generate continuous, artifact-free, consistent novel views. We evaluate on the challenging DL3DV-10K dataset and demonstrate superior performance compared to state-of-the-art methods.

MotivNet: Evolving Meta-Sapiens into an Emotionally Intelligent Foundation Model

Dec 30, 2025

Abstract: In this paper, we introduce MotivNet, a generalizable facial emotion recognition (FER) model for robust real-world application. Current state-of-the-art FER models tend to generalize poorly when tested on diverse data, leading to deteriorated performance in the real world and hindering FER as a research domain. Though researchers have proposed complex architectures to address this generalization issue, these architectures require cross-domain training to obtain generalizable results, which is inherently contradictory for real-world application. Our model, MotivNet, achieves competitive performance across datasets without cross-domain training by using Meta-Sapiens as a backbone. Sapiens is a human vision foundation model with state-of-the-art generalization in the real world through large-scale pretraining of a Masked Autoencoder. We propose MotivNet as an additional downstream task for Sapiens and define three criteria to evaluate MotivNet's viability as a Sapiens task: benchmark performance, model similarity, and data similarity. Throughout this paper, we describe the components of MotivNet, our training approach, and our results showing that MotivNet is generalizable across domains. We demonstrate that MotivNet can be benchmarked against existing SOTA models and meets the listed criteria, validating MotivNet as a Sapiens downstream task and making FER more attractive for in-the-wild application. The code is available at https://github.com/OSUPCVLab/EmotionFromFaceImages.

Multivariate Gaussian Representation Learning for Medical Action Evaluation

Nov 13, 2025

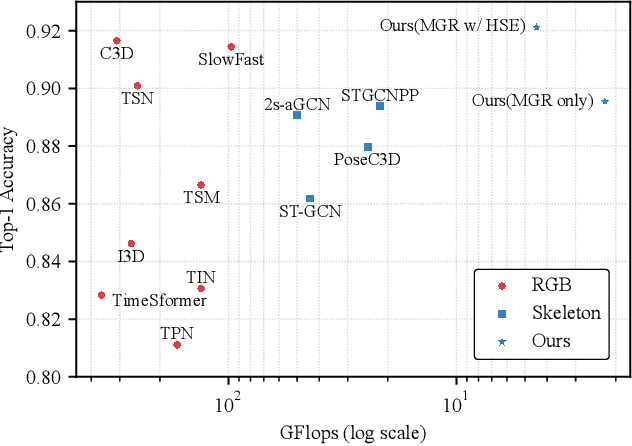

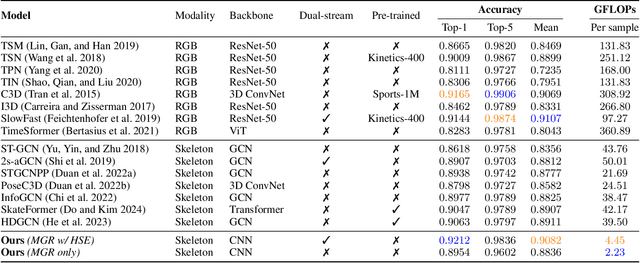

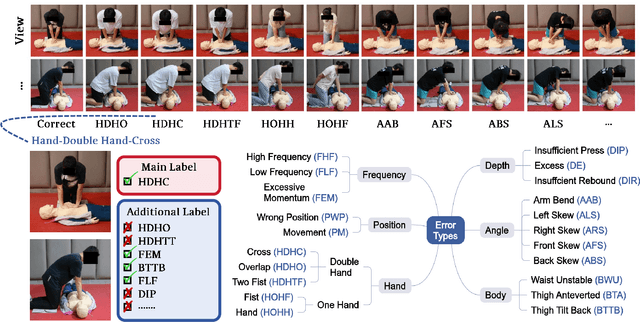

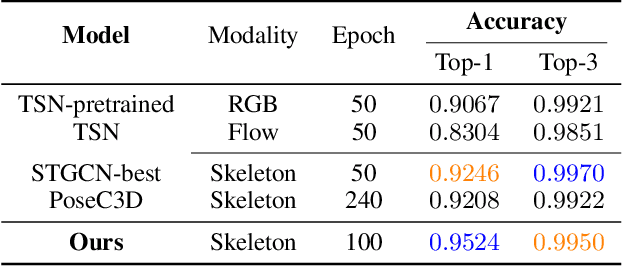

Abstract: Fine-grained action evaluation in medical vision faces unique challenges due to the unavailability of comprehensive datasets, stringent precision requirements, and insufficient spatiotemporal dynamic modeling of very rapid actions. To support development and evaluation, we introduce CPREval-6k, a multi-view, multi-label medical action benchmark containing 6,372 expert-annotated videos with 22 clinical labels. Using this dataset, we present GaussMedAct, a multivariate Gaussian encoding framework, to advance medical motion analysis through adaptive spatiotemporal representation learning. Multivariate Gaussian Representation projects the joint motions to a temporally scaled multi-dimensional space and decomposes actions into adaptive 3D Gaussians that serve as tokens. These tokens preserve motion semantics through anisotropic covariance modeling while maintaining robustness to spatiotemporal noise. Hybrid Spatial Encoding, employing a Cartesian and Vector dual-stream strategy, effectively utilizes skeletal information in the form of joint and bone features. The proposed method achieves 92.1% Top-1 accuracy with real-time inference on the benchmark, outperforming the ST-GCN baseline by +5.9% accuracy with only 10% of the FLOPs. Cross-dataset experiments confirm the superior robustness of our method.
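The joint and bone features mentioned in the abstract can be illustrated with a convention that is common in skeleton-based action recognition: joints are kept as Cartesian coordinates, and each bone is the difference vector from a parent joint to its child. The sketch below assumes that convention; the abstract does not confirm that GaussMedAct computes bones this way, and the 4-joint skeleton, `PARENTS`, and `bone_features` are hypothetical names used only for illustration.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]

# Hypothetical 4-joint skeleton (assumption): child joint index -> parent joint index.
PARENTS: Dict[int, int] = {1: 0, 2: 1, 3: 1}


def bone_features(joints: List[Point], parents: Dict[int, int]) -> Dict[int, Point]:
    """Return one bone vector per child joint: child position minus parent position."""
    bones: Dict[int, Point] = {}
    for child, parent in parents.items():
        cx, cy, cz = joints[child]
        px, py, pz = joints[parent]
        bones[child] = (cx - px, cy - py, cz - pz)
    return bones


# Joint stream: raw Cartesian coordinates for a single frame.
joints = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0), (-1.0, 1.0, 0.5)]

# Bone stream: direction and length of each skeletal segment.
bones = bone_features(joints, PARENTS)
print(bones)
```

The joint stream encodes where each body part is, while the bone stream encodes segment direction and length, which is unchanged when the whole skeleton is translated.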

Lightweight Road Environment Segmentation using Vector Quantization

Apr 19, 2025

Abstract: Road environment segmentation plays a significant role in autonomous driving. Numerous works based on Fully Convolutional Networks (FCNs) and Transformer architectures have been proposed to leverage local and global contextual learning for efficient and accurate semantic segmentation. In both architectures, the encoder often relies heavily on extracting continuous representations from the image, which limits the ability to represent meaningful discrete information. To address this limitation, we propose segmentation of the autonomous driving environment using vector quantization. Vector quantization offers three primary advantages for road environment segmentation. (1) Each continuous feature from the encoder is mapped to a discrete vector from the codebook, helping the model discover distinct features more easily than with complex continuous features. (2) Because the discrete features act as compressed versions of the encoder's continuous features, they also compress noise and outliers, which benefits the image segmentation task. (3) Vector quantization encourages the latent space to form coarse clusters of continuous features, forcing the model to group similar features and making the learned representations more structured for the decoding process. In this work, we combine vector quantization with the lightweight image segmentation model MobileUNETR and use MobileUNETR as the baseline for comparison to demonstrate the efficiency of our approach. Through experiments, we achieve 77.0% mIoU on Cityscapes, outperforming the baseline by 2.9% without increasing the model's initial size or complexity.
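The codebook lookup described in point (1) can be sketched as a nearest-neighbor search: each continuous feature is replaced by the closest codebook vector. This is a minimal illustration of generic vector quantization under assumed toy values, not the paper's implementation; the 2-D `codebook`, the `features`, and the `quantize` helper are hypothetical, and a trained model would learn the codebook rather than fix it by hand.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(features: List[Vector], codebook: List[Vector]) -> Tuple[List[int], List[Vector]]:
    """Map each continuous feature to the index and value of its nearest codebook entry."""
    indices: List[int] = []
    quantized: List[Vector] = []
    for feature in features:
        best = min(range(len(codebook)), key=lambda k: squared_distance(feature, codebook[k]))
        indices.append(best)
        quantized.append(codebook[best])
    return indices, quantized


# Toy codebook with three entries (assumed values, for illustration only).
codebook = [(0.0, 0.0), (1.0, 1.0), (4.0, 0.0)]

# Continuous "encoder outputs"; the second and fourth are noisy variants of the same code.
features = [(0.1, -0.2), (0.9, 1.2), (3.5, 0.3), (1.1, 0.8)]

indices, quantized = quantize(features, codebook)
print(indices)  # [0, 1, 2, 1]
```

The second and fourth features differ slightly but map to the same code, which is the sense in which quantization groups similar features and discards small perturbations, as points (2) and (3) describe.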

UAS Visual Navigation in Large and Unseen Environments via a Meta Agent

Mar 20, 2025

Abstract: The aim of this work is to develop an approach that enables an Unmanned Aerial System (UAS) to efficiently learn to navigate in large-scale urban environments and transfer its acquired expertise to novel environments. To achieve this, we propose a meta-curriculum training scheme. First, meta-training allows the agent to learn a master policy that generalizes across tasks. The resulting model is then fine-tuned on the downstream tasks. We organize the training curriculum in a hierarchical manner such that the agent is guided from coarse to fine towards the target task. In addition, we introduce Incremental Self-Adaptive Reinforcement learning (ISAR), an algorithm that combines the ideas of incremental learning and meta-reinforcement learning (MRL). In contrast to traditional reinforcement learning (RL), which focuses on acquiring a policy for a specific task, MRL aims to learn a policy with fast transfer ability to novel tasks. However, the MRL training process is time-consuming, whereas our proposed ISAR algorithm achieves faster convergence than the conventional MRL algorithm. We evaluate the proposed methodologies in simulated environments and demonstrate that using this training philosophy in conjunction with the ISAR algorithm significantly improves the convergence speed for navigation in large-scale cities and the adaptation proficiency in novel environments.

HyperGLM: HyperGraph for Video Scene Graph Generation and Anticipation

Nov 27, 2024

Abstract: Multimodal LLMs have advanced vision-language tasks but still struggle with understanding video scenes. To bridge this gap, Video Scene Graph Generation (VidSGG) has emerged to capture multi-object relationships across video frames. However, prior methods rely on pairwise connections, limiting their ability to handle complex multi-object interactions and reasoning. To this end, we propose Multimodal LLMs on a Scene HyperGraph (HyperGLM), promoting reasoning about multi-way interactions and higher-order relationships. Our approach uniquely integrates entity scene graphs, which capture spatial relationships between objects, with a procedural graph that models their causal transitions, forming a unified HyperGraph. Significantly, HyperGLM enables reasoning by injecting this unified HyperGraph into LLMs. Additionally, we introduce a new Video Scene Graph Reasoning (VSGR) dataset that features 1.9M frames from third-person, egocentric, and drone views and supports five tasks: Scene Graph Generation, Scene Graph Anticipation, Video Question Answering, Video Captioning, and Relation Reasoning. Empirically, HyperGLM consistently outperforms state-of-the-art methods across the five tasks, effectively modeling and reasoning about complex relationships in diverse video scenes.

DINTR: Tracking via Diffusion-based Interpolation

Oct 14, 2024

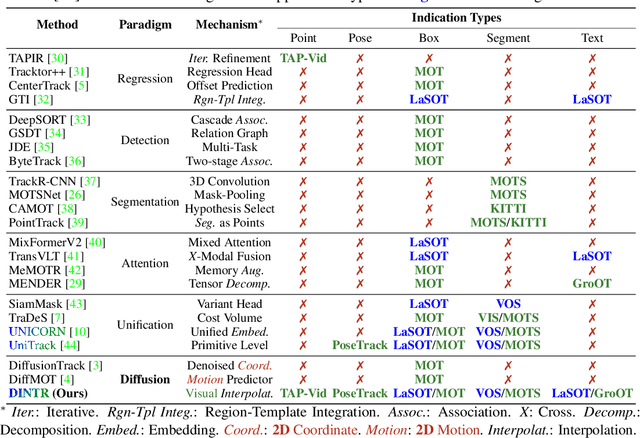

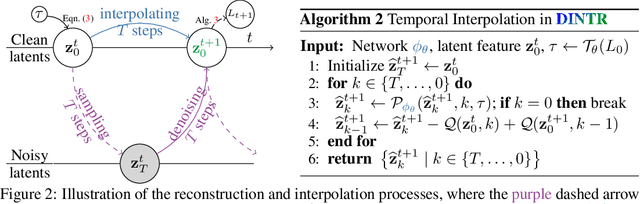

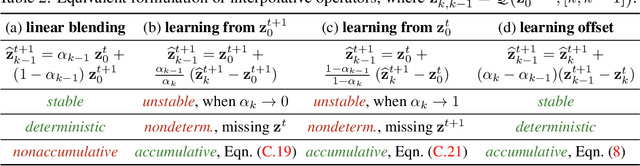

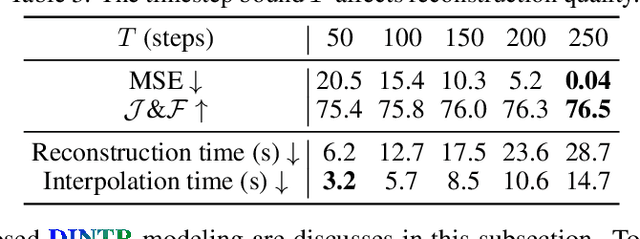

Abstract: Object tracking is a fundamental task in computer vision, requiring the localization of objects of interest across video frames. Diffusion models have shown remarkable capabilities in visual generation, making them well-suited for addressing several requirements of the tracking problem. This work proposes a novel diffusion-based methodology to formulate the tracking task. First, the conditional process of diffusion models allows for injecting indications of the target object into the generation process. Second, diffusion mechanics can be developed to inherently model temporal correspondences, enabling the reconstruction of actual frames in video. However, existing diffusion models rely on extensive and unnecessary mapping to a Gaussian noise domain, which can be replaced by a more efficient and stable interpolation process. Our proposed interpolation mechanism draws inspiration from classic image-processing techniques, offering a more interpretable, stable, and faster approach tailored specifically for the object tracking task. By leveraging the strengths of diffusion models while circumventing their limitations, our Diffusion-based INterpolation TrackeR (DINTR) presents a promising new paradigm and achieves a superior multiplicity on seven benchmarks across five indicator representations.

KnobGen: Controlling the Sophistication of Artwork in Sketch-Based Diffusion Models

Oct 02, 2024

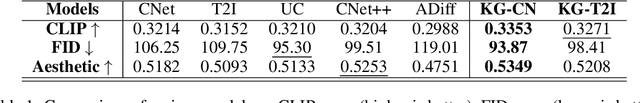

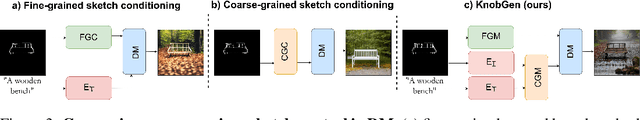

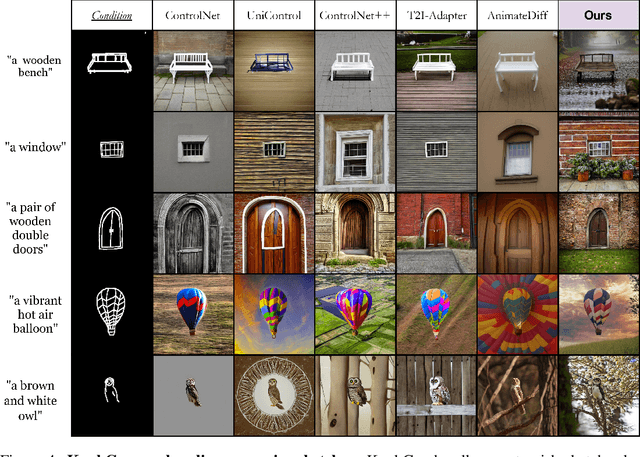

Abstract: Recent advances in diffusion models have significantly improved text-to-image (T2I) generation, but they often struggle to balance fine-grained precision with high-level control. Methods like ControlNet and T2I-Adapter excel at following sketches by seasoned artists but tend to be overly rigid, replicating unintentional flaws in sketches from novice users. Meanwhile, coarse-grained methods, such as sketch-based abstraction frameworks, offer more accessible input handling but lack the precise control needed for detailed, professional use. To address these limitations, we propose KnobGen, a dual-pathway framework that democratizes sketch-based image generation by seamlessly adapting to varying levels of sketch complexity and user skill. KnobGen uses a Coarse-Grained Controller (CGC) module for high-level semantics and a Fine-Grained Controller (FGC) module for detailed refinement. The relative strength of these two modules can be adjusted through our knob inference mechanism to align with the user's specific needs. These mechanisms ensure that KnobGen can flexibly generate images from both novice sketches and those drawn by seasoned artists. This maintains control over the final output while preserving the natural appearance of the image, as evidenced on the MultiGen-20M dataset and a newly collected sketch dataset.

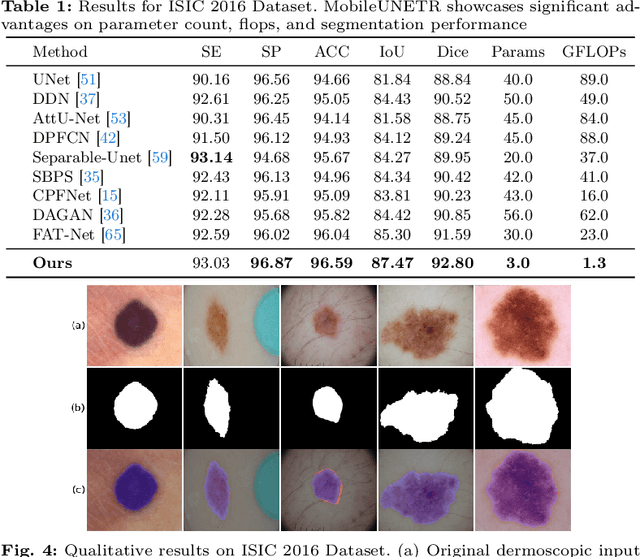

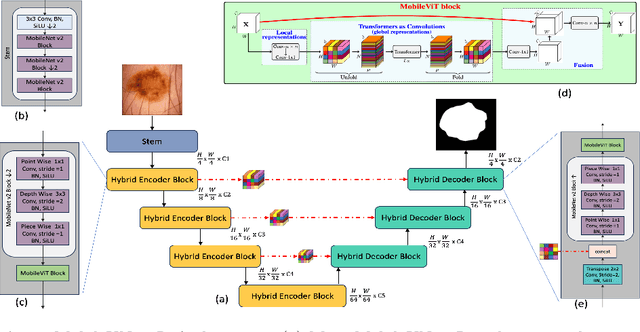

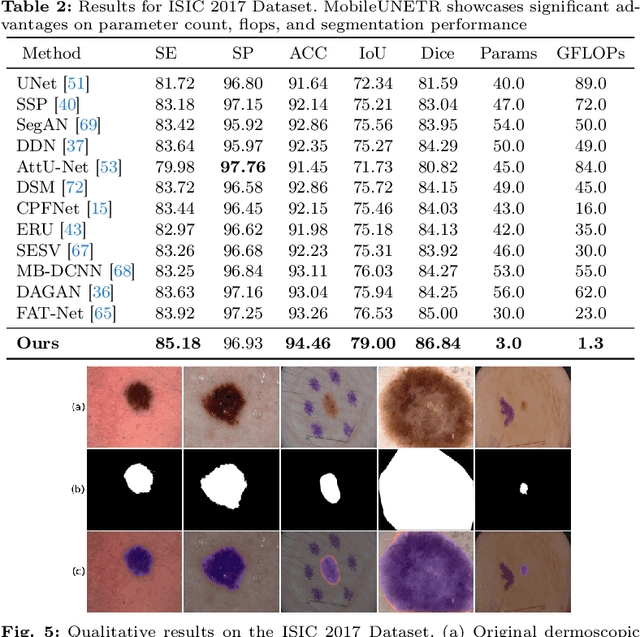

MobileUNETR: A Lightweight End-To-End Hybrid Vision Transformer For Efficient Medical Image Segmentation

Sep 04, 2024

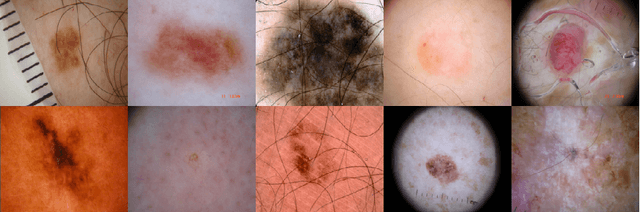

Abstract: Skin cancer segmentation poses a significant challenge in medical image analysis. Numerous existing solutions, predominantly CNN-based, face issues related to a lack of global contextual understanding. Alternatively, some approaches resort to large-scale Transformer models to bridge the global contextual gaps, but at the expense of model size and computational complexity. Finally, many Transformer-based approaches rely primarily on CNN-based decoders, overlooking the benefits of Transformer-based decoding models. Recognizing these limitations, we address the need for efficient lightweight solutions by introducing MobileUNETR, which aims to overcome the performance constraints associated with both CNNs and Transformers while minimizing model size, presenting a promising stride towards efficient image segmentation. MobileUNETR has three main features: 1) a lightweight hybrid CNN-Transformer encoder that balances local and global contextual feature extraction in an efficient manner; 2) a novel hybrid decoder that simultaneously utilizes low-level and global features at different resolutions within the decoding stage for accurate mask generation; 3) performance that surpasses large and complex architectures with 3 million parameters and a computational complexity of 1.3 GFLOP, resulting in 10x and 23x reductions in parameters and FLOPs, respectively. Extensive experiments have been conducted to validate the effectiveness of our proposed method on four publicly available skin lesion segmentation datasets: ISIC 2016, ISIC 2017, ISIC 2018, and PH2. The code will be publicly available at: https://github.com/OSUPCVLab/MobileUNETR.git

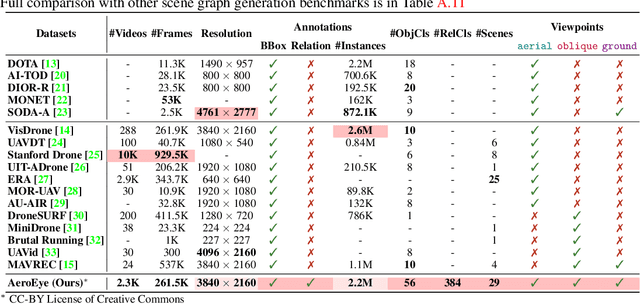

CYCLO: Cyclic Graph Transformer Approach to Multi-Object Relationship Modeling in Aerial Videos

Jun 03, 2024

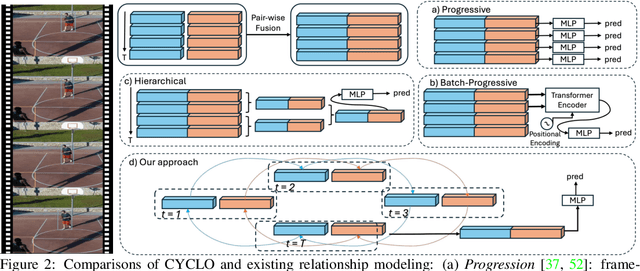

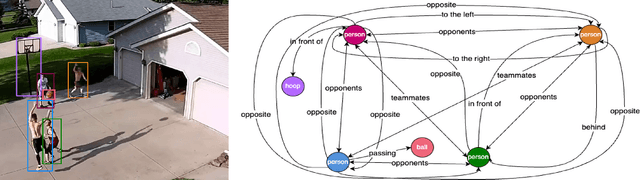

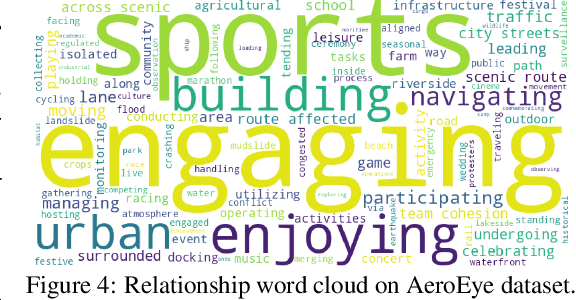

Abstract: Video scene graph generation (VidSGG) has emerged as a transformative approach to capturing and interpreting the intricate relationships among objects and their temporal dynamics in video sequences. In this paper, we introduce the new AeroEye dataset that focuses on multi-object relationship modeling in aerial videos. Our AeroEye dataset features various drone scenes and includes a visually comprehensive and precise collection of predicates that capture the intricate relationships and spatial arrangements among objects. To model these relationships, we propose the novel Cyclic Graph Transformer (CYCLO) approach, which allows the model to capture both direct and long-range temporal dependencies by continuously updating the history of interactions in a circular manner. The proposed approach also handles sequences with inherent cyclical patterns and processes object relationships in the correct sequential order. Therefore, it can effectively capture periodic and overlapping relationships while minimizing information loss. Extensive experiments on the AeroEye dataset demonstrate the effectiveness of the proposed CYCLO model and its potential to perform scene understanding on drone videos. Finally, the CYCLO method consistently achieves State-of-the-Art (SOTA) results on two in-the-wild scene graph generation benchmarks, i.e., PVSG and ASPIRe.
