Khoa Luu

BRAIN: Bias-Mitigation Continual Learning Approach to Vision-Brain Understanding

Aug 25, 2025

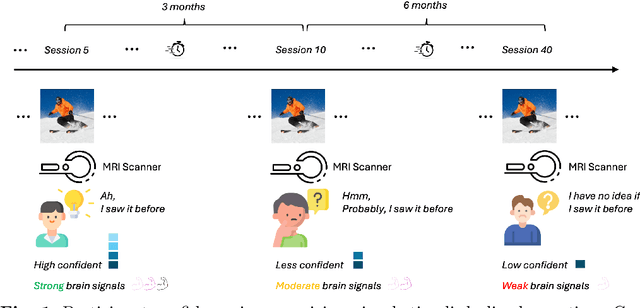

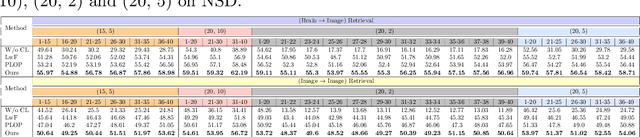

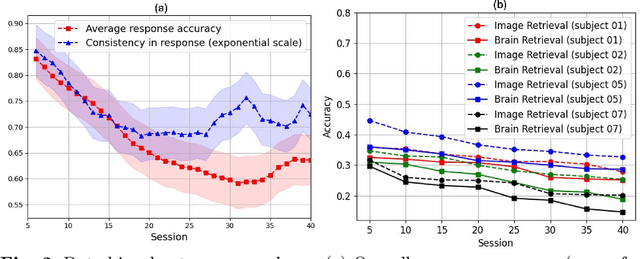

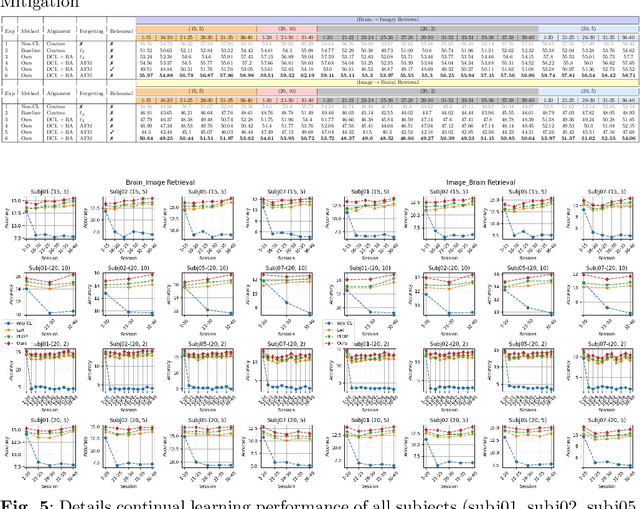

Abstract: Memory decay makes it harder for the human brain to recognize visual objects and retain details. Consequently, recorded brain signals become weaker and more uncertain, and carry poor visual context over time. This paper presents one of the first vision-learning approaches to address this problem. First, we statistically and experimentally demonstrate the existence of inconsistency in brain signals and its impact on the Vision-Brain Understanding (VBU) model. Our findings show that brain signal representations shift over recording sessions, leading to compounding bias, which poses challenges for model learning and degrades performance. Then, we propose a new Bias-Mitigation Continual Learning (BRAIN) approach to address these limitations. In this approach, the model is trained in a continual learning setup and mitigates the growing bias from each learning step. A new loss function named De-bias Contrastive Learning is also introduced to address the bias problem. In addition, to prevent catastrophic forgetting, where the model loses knowledge from previous sessions, a new Angular-based Forgetting Mitigation approach is introduced to preserve learned knowledge in the model. Finally, the empirical experiments demonstrate that our approach achieves State-of-the-Art (SOTA) performance across various benchmarks, surpassing prior and non-continual learning methods.

CLIFF: Continual Learning for Incremental Flake Features in 2D Material Identification

Aug 24, 2025

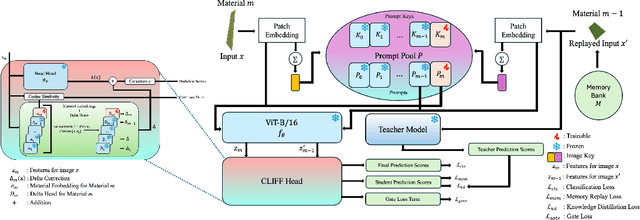

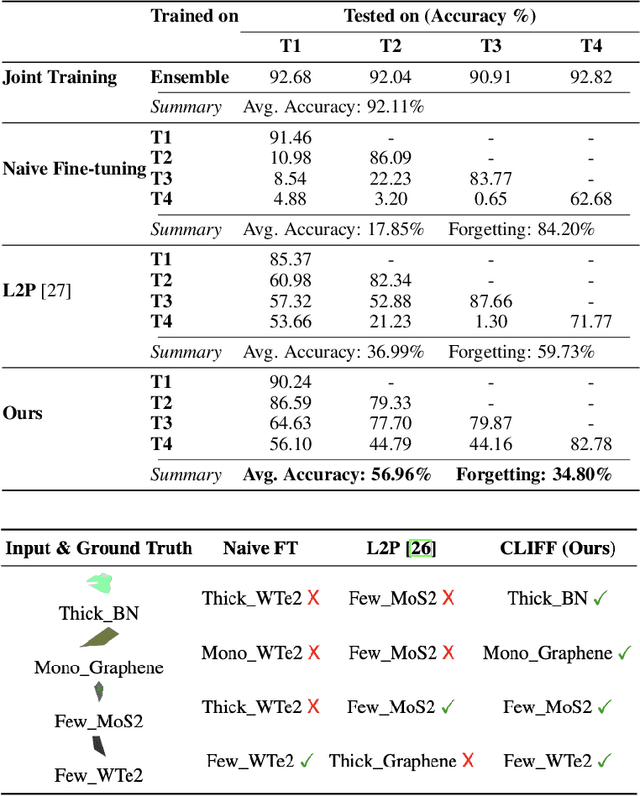

Abstract: Identifying quantum flakes is crucial for scalable quantum hardware; however, automated layer classification from optical microscopy remains challenging due to substantial appearance shifts across different materials. In this paper, we propose a new Continual-Learning Framework for Flake Layer Classification (CLIFF). To our knowledge, this is the first systematic study of continual learning in the domain of two-dimensional (2D) materials. Our method enables the model to differentiate between materials and their physical and optical properties by freezing a backbone and base head trained on a reference material. For each new material, it learns a material-specific prompt, embedding, and a delta head. A prompt pool and a cosine-similarity gate modulate features and compute material-specific corrections. Additionally, we incorporate memory replay with knowledge distillation. CLIFF achieves competitive accuracy with significantly lower forgetting than naive fine-tuning and a prompt-based baseline.
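One way to picture the frozen base head, the cosine-similarity gate, and the delta head working together is the minimal sketch below. It is an illustration under stated assumptions, not the CLIFF implementation: the function names (`cosine`, `linear`, `gated_logits`), the single prompt key per material, the clamping of the gate to non-negative values, and the additive combination of base and delta logits are all hypothetical choices made for this example.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def linear(weights, x):
    """Apply a bias-free linear head given as a list of weight rows."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def gated_logits(feature, base_head, prompt_key, delta_head):
    """Frozen base logits plus a gated, material-specific correction.

    Assumption: the gate is the cosine similarity between the feature
    and the material's prompt key, clamped to be non-negative.
    """
    base = linear(base_head, feature)      # frozen, from the reference material
    gate = max(0.0, cosine(feature, prompt_key))
    delta = linear(delta_head, feature)    # learned per new material
    return [b + gate * d for b, d in zip(base, delta)]

feature = [1.0, 0.0]
base_head = [[1.0, 0.0], [0.0, 1.0]]
delta_head = [[0.0, 0.0], [2.0, 0.0]]

# Prompt key aligned with the feature: the correction is fully applied.
aligned = gated_logits(feature, base_head, [1.0, 0.0], delta_head)
# Prompt key orthogonal to the feature: the gate closes, base logits only.
orthogonal = gated_logits(feature, base_head, [0.0, 1.0], delta_head)
```

In this toy setup, a feature that matches the material's prompt key receives the delta-head correction, while a feature that does not match falls back to the frozen base prediction.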

Directed-Tokens: A Robust Multi-Modality Alignment Approach to Large Language-Vision Models

Aug 19, 2025

Abstract: Large multimodal models (LMMs) have achieved impressive performance due to their outstanding capability in various understanding tasks. However, these models still suffer from some fundamental limitations related to robustness and generalization due to the alignment and correlation between visual and textual features. In this paper, we introduce a simple but efficient learning mechanism for improving the robust alignment between visual and textual modalities by solving shuffling problems. In particular, the proposed approach can improve reasoning capability, visual understanding, and cross-modality alignment by introducing two new tasks, reconstructing the image order and reconstructing the text order, into the LMM's pre-training and fine-tuning phases. In addition, we propose a new directed-token approach to capture visual and textual knowledge, enabling the capability to reconstruct the correct order of visual inputs. Then, we introduce a new Image-to-Response Guided loss to further improve the visual understanding of the LMM in its responses. The proposed approach consistently achieves state-of-the-art (SoTA) performance compared with prior LMMs on academic task-oriented and instruction-following LMM benchmarks.
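The order-reconstruction tasks can be illustrated with a small data-preparation sketch. This is only an assumption about how a shuffling problem might be posed as a training example: the helper names `make_order_task` and `restore`, the seed argument, and the choice of the permutation itself as the target are hypothetical and are not taken from the paper.

```python
import random

def make_order_task(items, seed=0):
    """Shuffle a sequence and return it with the permutation that was applied.

    order[k] is the original position of the element now at position k,
    so a model that predicts `order` has reconstructed the original order.
    """
    rng = random.Random(seed)
    order = list(range(len(items)))
    rng.shuffle(order)
    shuffled = [items[i] for i in order]
    return shuffled, order

def restore(shuffled, order):
    """Invert the shuffle using the (predicted or ground-truth) order."""
    restored = [None] * len(shuffled)
    for k, original_pos in enumerate(order):
        restored[original_pos] = shuffled[k]
    return restored

# Stand-ins for image patches or text segments.
patches = ["p0", "p1", "p2", "p3"]
shuffled, order = make_order_task(patches, seed=1)
recovered = restore(shuffled, order)
```

The same construction applies to either modality, since it only depends on the positions of the elements and not on their content.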

AGP: A Novel Arabidopsis thaliana Genomics-Phenomics Dataset and its HyperGraph Baseline Benchmarking

Aug 19, 2025

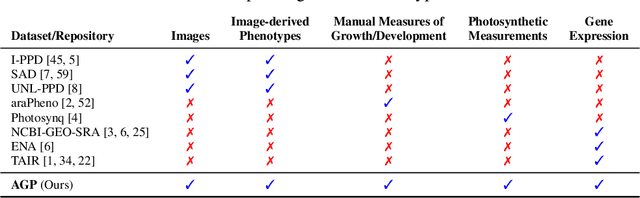

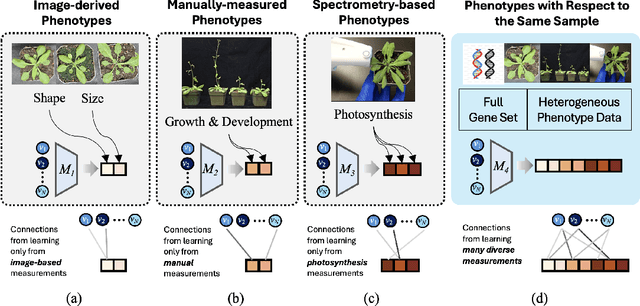

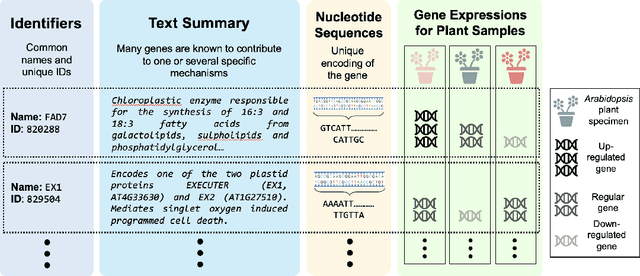

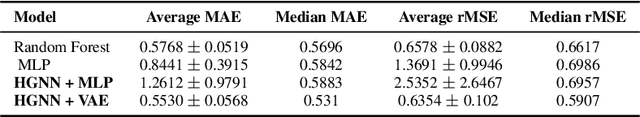

Abstract: Understanding which genes control which traits in an organism remains one of the central challenges in biology. Despite significant advances in data collection technology, our ability to map genes to traits is still limited. This genome-to-phenome (G2P) challenge spans several problem domains, including plant breeding, and requires models capable of reasoning over high-dimensional, heterogeneous, and biologically structured data. Currently, however, many datasets capture only genetic information or only phenotype information. Additionally, phenotype data is very heterogeneous, which many datasets do not fully capture. The critical drawback is that these datasets are not integrated; that is, they do not link with each other to describe the same biological specimens. This limits how much machine learning models can learn about the various aspects of these specimens, narrowing the breadth of correlations learned and therefore reducing prediction accuracy. To address this gap, we present the Arabidopsis Genomics-Phenomics (AGP) Dataset, a curated multi-modal dataset linking gene expression profiles with phenotypic trait measurements in Arabidopsis thaliana, a model organism in plant biology. AGP supports tasks such as phenotype prediction and interpretable graph learning. In addition, we benchmark conventional regression and explanatory baselines, including a biologically-informed hypergraph baseline, to validate gene-trait associations. To the best of our knowledge, this is the first dataset that provides multi-modal gene information and heterogeneous trait or phenotype data for the same Arabidopsis thaliana specimens. With AGP, we aim to help the research community more accurately understand the connection between genotypes and phenotypes using gene information, higher-order gene pairings, and trait data from several sources.

MANGO: Multimodal Attention-based Normalizing Flow Approach to Fusion Learning

Aug 13, 2025

Abstract: Multimodal learning has gained much success in recent years. However, current multimodal fusion methods adopt the attention mechanism of Transformers to implicitly learn the underlying correlation of multimodal features. As a result, the multimodal model cannot capture the essential features of each modality, making it difficult to comprehend complex structures and correlations of multimodal inputs. This paper introduces a novel Multimodal Attention-based Normalizing Flow (MANGO) approach to developing explicit, interpretable, and tractable multimodal fusion learning. In particular, we propose a new Invertible Cross-Attention (ICA) layer to develop the Normalizing Flow-based Model for multimodal data. To efficiently capture the complex, underlying correlations in multimodal data in our proposed invertible cross-attention layer, we propose three new cross-attention mechanisms: Modality-to-Modality Cross-Attention (MMCA), Inter-Modality Cross-Attention (IMCA), and Learnable Inter-Modality Cross-Attention (LICA). Finally, we introduce a new Multimodal Attention-based Normalizing Flow to enable the scalability of our proposed method to high-dimensional multimodal data. Our experimental results on three different multimodal learning tasks, i.e., semantic segmentation, image-to-image translation, and movie genre classification, have illustrated the state-of-the-art (SoTA) performance of the proposed approach. The source code of this work will be publicly available.

BIMA: Bijective Maximum Likelihood Learning Approach to Hallucination Prediction and Mitigation in Large Vision-Language Models

May 30, 2025

Abstract: Large vision-language models have been widely adopted across various domains. However, developing a trustworthy system when large-scale models offer minimal interpretability presents a significant challenge. One of the most prevalent failure modes of these systems is hallucination, where the language model generates a response that does not correspond to the visual content. To mitigate this problem, several approaches have been developed, and one prominent direction is to improve the decoding process. In this paper, we propose a new Bijective Maximum Likelihood Learning (BIMA) approach to hallucination mitigation using normalizing flow theories. The proposed BIMA method can efficiently mitigate the hallucination problem in prevailing vision-language models, resulting in significant improvements. Notably, BIMA achieves an average F1 score of 85.06% on the POPE benchmark and reduces CHAIRS and CHAIRI by 7.6% and 2.6%, respectively. To the best of our knowledge, this is one of the first studies to consider bijective mappings as a means of reducing hallucination induced by large vision-language models.
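For readers unfamiliar with the normalizing-flow background that bijective maximum likelihood learning builds on, the standard change-of-variables identity is sketched below. It is the generic textbook relation, not the specific BIMA objective; the symbols $f$, $x$, $z$, $p_X$, and $p_Z$ are notation assumed for this illustration.

```latex
% A bijection f maps a data point x to a latent z = f(x) with a simple
% prior density p_Z (for example, a standard Gaussian).
\log p_X(x) = \log p_Z\bigl(f(x)\bigr)
            + \log \left| \det \frac{\partial f(x)}{\partial x} \right|

% Maximum likelihood training over N samples maximizes the sum of these terms:
\max_{f} \; \sum_{i=1}^{N} \left[ \log p_Z\bigl(f(x_i)\bigr)
            + \log \left| \det \frac{\partial f(x_i)}{\partial x_i} \right| \right]
```

Because $f$ is invertible, the likelihood is exact and tractable, which is the property that makes a bijective formulation attractive for scoring how well a response agrees with its conditioning input.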

MEX: Memory-efficient Approach to Referring Multi-Object Tracking

Feb 19, 2025

Abstract: Referring Multi-Object Tracking (RMOT) is a relatively new concept that has rapidly gained traction as a promising research direction at the intersection of computer vision and natural language processing. Unlike traditional multi-object tracking, RMOT identifies and tracks objects and incorporates textual descriptions for object class names, making the approach more intuitive. Various techniques have been proposed to address this challenging problem; however, most require the training of the entire network due to their end-to-end nature. Among these methods, iKUN has emerged as a particularly promising solution. Therefore, we further explore its pipeline and enhance its performance. In this paper, we introduce a practical module dubbed Memory-Efficient Cross-modality (MEX). This memory-efficient technique can be directly applied to off-the-shelf trackers like iKUN, resulting in significant architectural improvements. Our method proves effective during inference on a single GPU with 4 GB of memory. Among the various benchmarks, the Refer-KITTI dataset, which offers diverse autonomous driving scenes with relevant language expressions, is particularly useful for studying this problem. Empirically, our method demonstrates effectiveness and efficiency regarding HOTA tracking scores, substantially improving memory allocation and processing speed.

HyperGLM: HyperGraph for Video Scene Graph Generation and Anticipation

Nov 27, 2024

Abstract: Multimodal LLMs have advanced vision-language tasks but still struggle with understanding video scenes. To bridge this gap, Video Scene Graph Generation (VidSGG) has emerged to capture multi-object relationships across video frames. However, prior methods rely on pairwise connections, limiting their ability to handle complex multi-object interactions and reasoning. To this end, we propose Multimodal LLMs on a Scene HyperGraph (HyperGLM), promoting reasoning about multi-way interactions and higher-order relationships. Our approach uniquely integrates entity scene graphs, which capture spatial relationships between objects, with a procedural graph that models their causal transitions, forming a unified HyperGraph. Significantly, HyperGLM enables reasoning by injecting this unified HyperGraph into LLMs. Additionally, we introduce a new Video Scene Graph Reasoning (VSGR) dataset that features 1.9M frames from third-person, egocentric, and drone views and supports five tasks: Scene Graph Generation, Scene Graph Anticipation, Video Question Answering, Video Captioning, and Relation Reasoning. Empirically, HyperGLM consistently outperforms state-of-the-art methods across five tasks, effectively modeling and reasoning about complex relationships in diverse video scenes.
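The difference between pairwise connections and a hyperedge can be made concrete with a small data-structure sketch. The scene contents, the relation names, and the helpers `incident_edges` and `pairwise_expansion` are hypothetical and chosen only for illustration; they do not reflect the HyperGLM or VSGR data format.

```python
from itertools import combinations

# A hypergraph stored as: hyperedge name -> set of participating entities.
# One hyperedge can join any number of entities in a single relation.
hyperedges = {
    "handing_over": {"person_1", "person_2", "cup"},
    "sitting_on": {"person_2", "chair"},
}

def incident_edges(hyperedges, node):
    """Names of all hyperedges that a given entity participates in."""
    return sorted(name for name, nodes in hyperedges.items() if node in nodes)

def pairwise_expansion(nodes):
    """All entity pairs needed to approximate one hyperedge with pairwise edges."""
    return sorted(combinations(sorted(nodes), 2))

edges_of_person_2 = incident_edges(hyperedges, "person_2")
pairs = pairwise_expansion(hyperedges["handing_over"])
```

A three-way interaction such as `handing_over` is one hyperedge here, whereas a pairwise graph needs three separate edges and loses the fact that they belong to the same event.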

COBRA: A Continual Learning Approach to Vision-Brain Understanding

Nov 25, 2024

Abstract: Vision-Brain Understanding (VBU) aims to extract visual information perceived by humans from brain activity recorded through functional Magnetic Resonance Imaging (fMRI). Despite notable advancements in recent years, existing studies in VBU continue to face the challenge of catastrophic forgetting, where models lose knowledge from prior subjects as they adapt to new ones. Addressing continual learning in this field is, therefore, essential. This paper introduces a novel framework called Continual Learning for Vision-Brain (COBRA) to address continual learning in VBU. Our approach includes three novel modules: a Subject Commonality (SC) module, a Prompt-based Subject Specific (PSS) module, and a transformer-based module for fMRI, denoted as the MRIFormer module. The SC module captures shared vision-brain patterns across subjects, preserving this knowledge as the model encounters new subjects, thereby reducing the impact of catastrophic forgetting. On the other hand, the PSS module learns unique vision-brain patterns specific to each subject. Finally, the MRIFormer module contains a transformer encoder and decoder that learns the fMRI features for VBU from common and specific patterns. In a continual learning setup, COBRA trains new PSS and MRIFormer modules for new subjects, leaving the modules of previous subjects unaffected. As a result, COBRA effectively addresses catastrophic forgetting and achieves state-of-the-art performance in both continual learning and vision-brain reconstruction tasks, surpassing previous methods.
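The idea of training new modules for each new subject while leaving earlier modules untouched can be sketched as a simple parameter-isolation scheme. This is a toy illustration, not the COBRA architecture: the class `SubjectModules`, the use of plain lists as parameters, and the gradient-step update are assumptions made for the example, and the shared SC module is omitted.

```python
class SubjectModules:
    """Keeps one independent parameter set per subject (hypothetical design)."""

    def __init__(self):
        self.modules = {}

    def add_subject(self, subject_id, init_params):
        """Allocate fresh parameters when a new subject arrives."""
        if subject_id in self.modules:
            raise ValueError(f"subject {subject_id!r} already registered")
        self.modules[subject_id] = list(init_params)

    def train_step(self, subject_id, grads, lr=0.1):
        """Update only the named subject's parameters; all others stay frozen."""
        params = self.modules[subject_id]
        for i, g in enumerate(grads):
            params[i] -= lr * g

model = SubjectModules()
model.add_subject("subject_1", [1.0, 2.0])
snapshot = list(model.modules["subject_1"])

# A second subject arrives later and is trained in its own module.
model.add_subject("subject_2", [0.5, 0.5])
model.train_step("subject_2", grads=[1.0, -1.0])
```

Since updates for `subject_2` never touch the parameters stored for `subject_1`, knowledge held in the earlier module cannot be overwritten, which is the intuition behind isolating subject-specific modules.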

Quantum-Brain: Quantum-Inspired Neural Network Approach to Vision-Brain Understanding

Nov 20, 2024

Abstract: Vision-brain understanding aims to extract semantic information about brain signals from human perceptions. Existing deep learning methods for vision-brain understanding are usually introduced in a traditional learning paradigm that lacks the ability to learn the connectivities between brain regions. Meanwhile, quantum computing theory offers a new paradigm for designing deep learning models. Motivated by the connectivities in the brain signals and the entanglement properties in quantum computing, we propose a novel Quantum-Brain approach, a quantum-inspired neural network, to tackle the vision-brain understanding problem. To compute the connectivity between areas in brain signals, we introduce a new Quantum-Inspired Voxel-Controlling module to learn the impact of a brain voxel on others represented in the Hilbert space. To effectively learn connectivity, a novel Phase-Shifting module is presented to calibrate the value of the brain signals. Finally, we introduce a new Measurement-like Projection module to project the connectivity information from the Hilbert space into the feature space. The proposed approach can learn to find the connectivities between fMRI voxels and enhance the semantic information obtained from human perceptions. Our experimental results on the Natural Scene Dataset benchmarks illustrate the effectiveness of the proposed method with Top-1 accuracies of 95.1% and 95.6% on image and brain retrieval tasks and an Inception score of 95.3% on the fMRI-to-image reconstruction task. Our proposed quantum-inspired network offers a potential paradigm for solving vision-brain problems via quantum computing theory.
