Marcel Geppert

Infrastructure-based Multi-Camera Calibration using Radial Projections

Jul 30, 2020

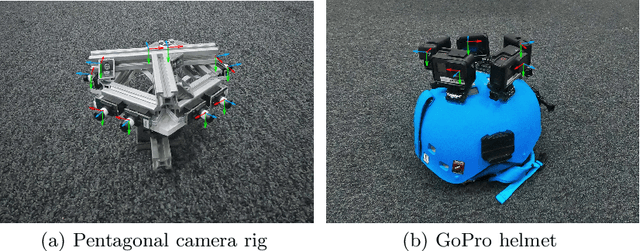

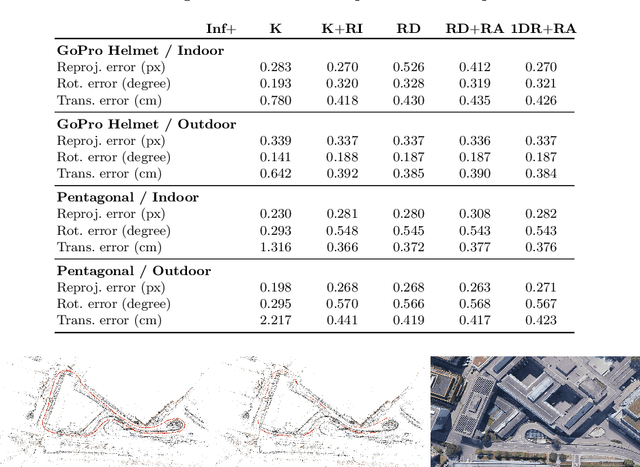

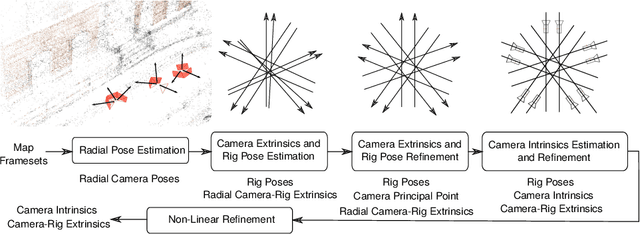

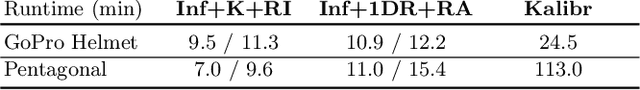

Abstract:Multi-camera systems are an important sensor platform for intelligent systems such as self-driving cars. Pattern-based calibration techniques can be used to calibrate the intrinsics of the cameras individually. However, extrinsic calibration of systems with little to no visual overlap between the cameras is a challenge. Given the camera intrinsics, infrastucture-based calibration techniques are able to estimate the extrinsics using 3D maps pre-built via SLAM or Structure-from-Motion. In this paper, we propose to fully calibrate a multi-camera system from scratch using an infrastructure-based approach. Assuming that the distortion is mainly radial, we introduce a two-stage approach. We first estimate the camera-rig extrinsics up to a single unknown translation component per camera. Next, we solve for both the intrinsic parameters and the missing translation components. Extensive experiments on multiple indoor and outdoor scenes with multiple multi-camera systems show that our calibration method achieves high accuracy and robustness. In particular, our approach is more robust than the naive approach of first estimating intrinsic parameters and pose per camera before refining the extrinsic parameters of the system. The implementation is available at https://github.com/youkely/InfrasCal.

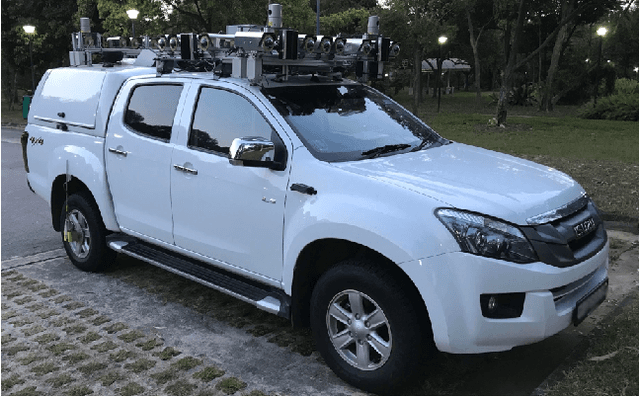

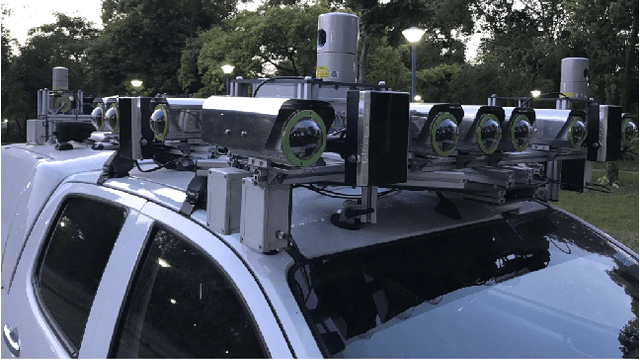

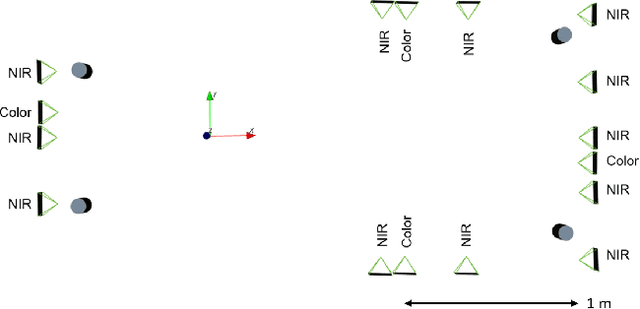

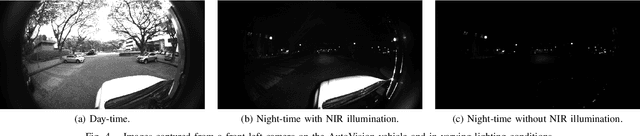

Project AutoVision: Localization and 3D Scene Perception for an Autonomous Vehicle with a Multi-Camera System

Mar 05, 2019

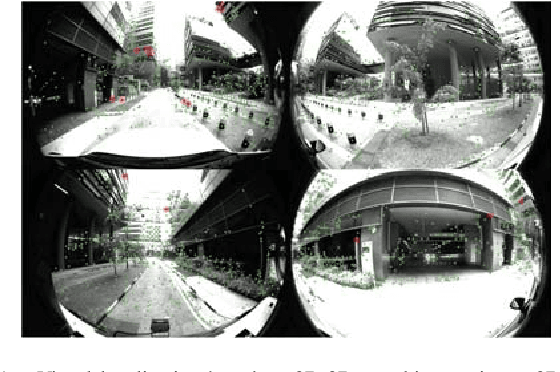

Abstract:Project AutoVision aims to develop localization and 3D scene perception capabilities for a self-driving vehicle. Such capabilities will enable autonomous navigation in urban and rural environments, in day and night, and with cameras as the only exteroceptive sensors. The sensor suite employs many cameras for both 360-degree coverage and accurate multi-view stereo; the use of low-cost cameras keeps the cost of this sensor suite to a minimum. In addition, the project seeks to extend the operating envelope to include GNSS-less conditions which are typical for environments with tall buildings, foliage, and tunnels. Emphasis is placed on leveraging multi-view geometry and deep learning to enable the vehicle to localize and perceive in 3D space. This paper presents an overview of the project, and describes the sensor suite and current progress in the areas of calibration, localization, and perception.

Efficient 2D-3D Matching for Multi-Camera Visual Localization

Sep 17, 2018

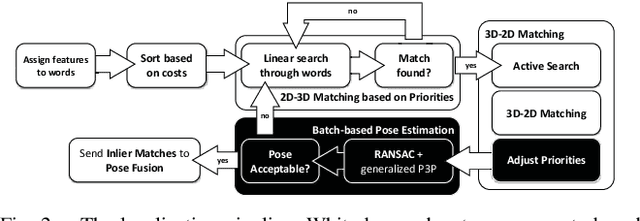

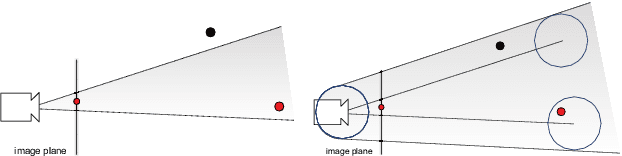

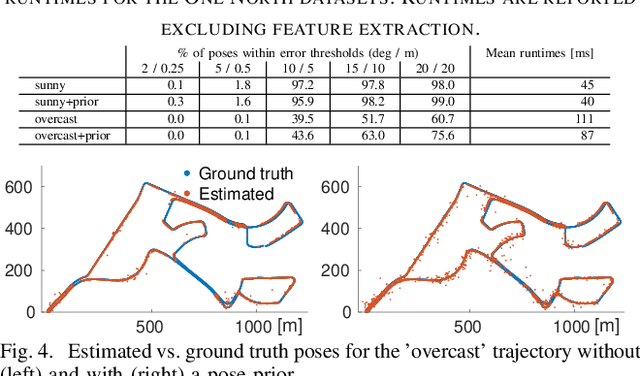

Abstract:Visual localization, i.e., determining the position and orientation of a vehicle with respect to a map, is a key problem in autonomous driving. We present a multi-camera visual inertial localization algorithm for large scale environments. To efficiently and effectively match features against a pre-built global 3D map, we propose a prioritized feature matching scheme for multi-camera systems. In contrast to existing works, designed for monocular cameras, we (1) tailor the prioritization function to the multi-camera setup and (2) run feature matching and pose estimation in parallel. This significantly accelerates the matching and pose estimation stages and allows us to dynamically adapt the matching efforts based on the surrounding environment. In addition, we show how pose priors can be integrated into the localization system to increase efficiency and robustness. Finally, we extend our algorithm by fusing the absolute pose estimates with motion estimates from a multi-camera visual inertial odometry pipeline (VIO). This results in a system that provides reliable and drift-less pose estimations for high speed autonomous driving. Extensive experiments show that our localization runs fast and robust under varying conditions, and that our extended algorithm enables reliable real-time pose estimation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge