Lionel Heng

Continuous-time Radar-inertial Odometry for Automotive Radars

Jan 07, 2022

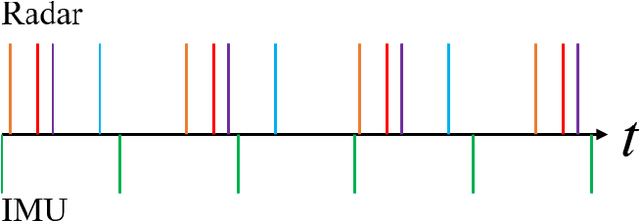

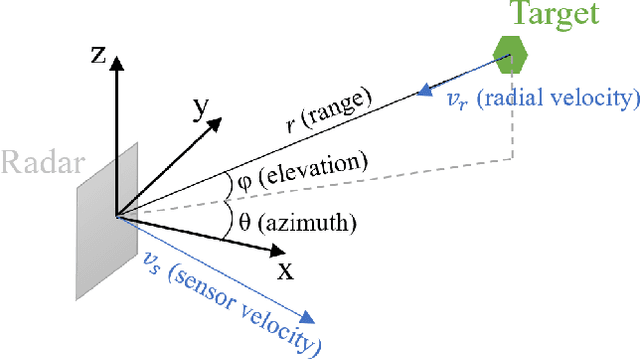

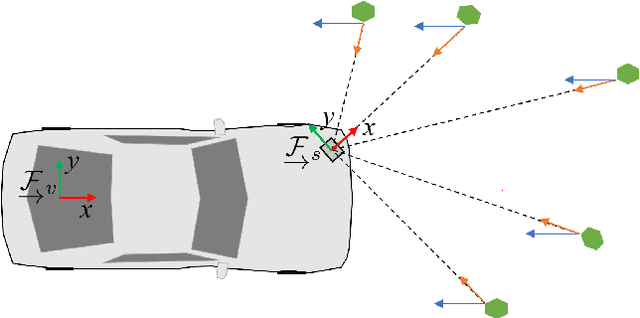

Abstract: We present an approach for radar-inertial odometry which uses a continuous-time framework to fuse measurements from multiple automotive radars and an inertial measurement unit (IMU). Unlike camera and LiDAR sensors, radar sensors are not significantly affected by adverse weather conditions. Radar's robustness in such conditions and the increasing prevalence of radars on passenger vehicles motivate us to look at the use of radar for ego-motion estimation. A continuous-time trajectory representation is applied not only as a framework to enable heterogeneous and asynchronous multi-sensor fusion, but also to facilitate efficient optimization, since poses and their derivatives can be computed in closed form at any given time along the trajectory. We compare our continuous-time estimates to those from a discrete-time radar-inertial odometry approach and show that our continuous-time method outperforms the discrete-time method. To the best of our knowledge, this is the first time a continuous-time framework has been applied to radar-inertial odometry.
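To illustrate what computing a trajectory value and its derivative "in closed form at any given time" can look like, here is a minimal sketch. It assumes a uniform cubic B-spline over 3D positions only, which is one common continuous-time representation; the abstract does not state which representation the paper uses, and the sketch leaves out rotation entirely. The names `eval_spline`, `ctrl`, and `dt` are hypothetical.

```python
import numpy as np

# Standard basis matrix of a uniform cubic B-spline (assumed representation).
M = np.array([[ 1.0,  4.0,  1.0, 0.0],
              [-3.0,  0.0,  3.0, 0.0],
              [ 3.0, -6.0,  3.0, 0.0],
              [-1.0,  3.0, -3.0, 1.0]]) / 6.0

def eval_spline(ctrl, t, dt):
    """Return (position, velocity) at time t.

    ctrl: (N, 3) array of control points, N >= 4 (hypothetical layout).
    dt:   knot spacing in seconds; the spline is defined from t = 0.
    """
    # Index of the segment containing t, clamped to the valid range.
    i = int(np.floor(t / dt))
    i = min(max(i, 0), len(ctrl) - 4)
    u = t / dt - i                      # normalized time within the segment
    P = ctrl[i:i + 4]                   # the four control points in play
    pos = np.array([1.0, u, u**2, u**3]) @ M @ P
    # Derivative of the monomial basis, scaled by 1/dt (chain rule).
    vel = np.array([0.0, 1.0, 2.0 * u, 3.0 * u**2]) @ M @ P / dt
    return pos, vel

# Control points spaced evenly along a line give a constant-velocity path.
ctrl = np.arange(6)[:, None] * np.array([1.0, 2.0, 0.0])
pos, vel = eval_spline(ctrl, t=0.25, dt=0.1)
```

Because any timestamp can be queried, asynchronous measurements from different sensors can each be compared against the trajectory at their own capture time, without interpolating between discrete poses.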

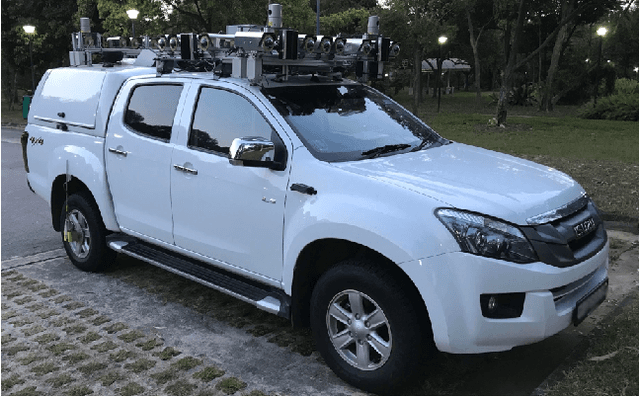

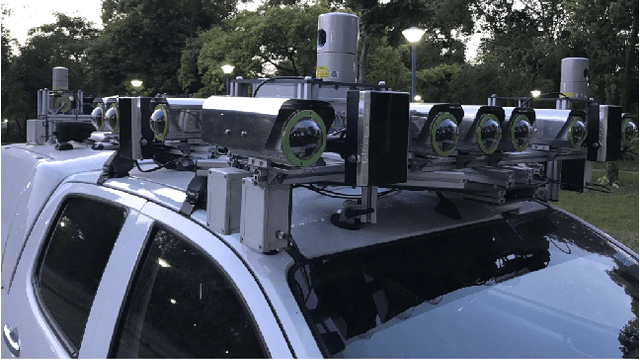

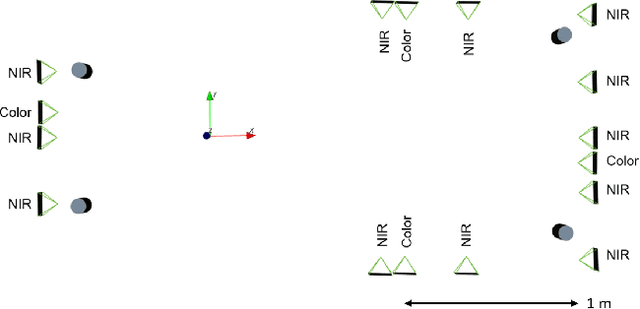

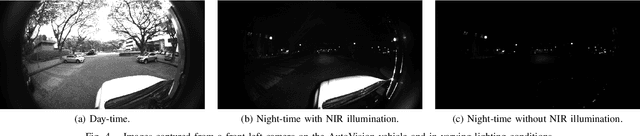

Project AutoVision: Localization and 3D Scene Perception for an Autonomous Vehicle with a Multi-Camera System

Mar 05, 2019

Abstract: Project AutoVision aims to develop localization and 3D scene perception capabilities for a self-driving vehicle. Such capabilities will enable autonomous navigation in urban and rural environments, in day and night, and with cameras as the only exteroceptive sensors. The sensor suite employs many cameras for both 360-degree coverage and accurate multi-view stereo; the use of low-cost cameras keeps the cost of this sensor suite to a minimum. In addition, the project seeks to extend the operating envelope to include GNSS-less conditions, which are typical for environments with tall buildings, foliage, and tunnels. Emphasis is placed on leveraging multi-view geometry and deep learning to enable the vehicle to localize and perceive in 3D space. This paper presents an overview of the project, and describes the sensor suite and current progress in the areas of calibration, localization, and perception.

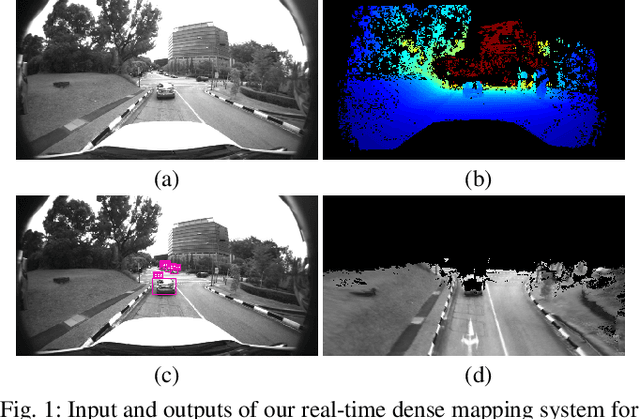

Real-Time Dense Mapping for Self-driving Vehicles using Fisheye Cameras

Sep 17, 2018

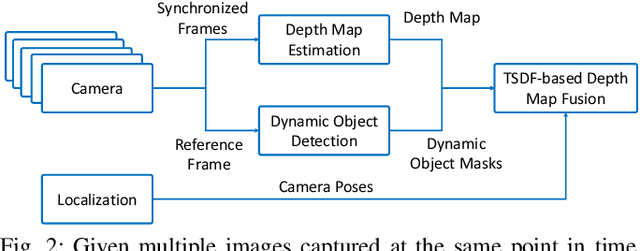

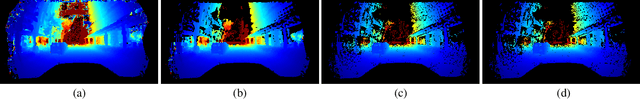

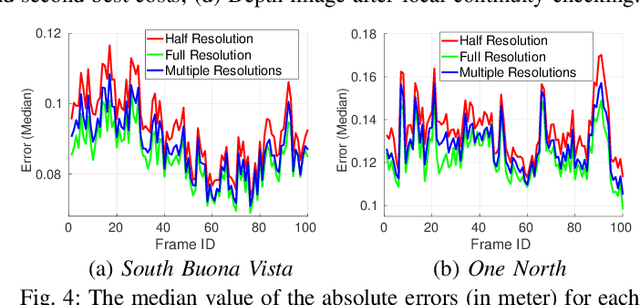

Abstract: We present a real-time dense geometric mapping algorithm for large-scale environments. Unlike existing methods which use pinhole cameras, our implementation is based on fisheye cameras, which have a larger field of view and benefit other tasks including visual-inertial odometry, localization, and object detection around vehicles. Our algorithm runs on in-vehicle PCs at approximately 15 Hz, enabling vision-only 3D scene perception for self-driving vehicles. For each synchronized set of images captured by multiple cameras, we first compute a depth map for a reference camera using plane-sweeping stereo. To maintain both accuracy and efficiency, while accounting for the fact that fisheye images have a rather low resolution, we recover the depths using multiple image resolutions. We adopt the fast object detection framework YOLOv3 to remove potentially dynamic objects. At the end of the pipeline, we fuse the fisheye depth images into the truncated signed distance function (TSDF) volume to obtain a 3D map. We evaluate our method on large-scale urban datasets, and results show that our method works well even in complex environments.
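The final fusion step can be pictured with a minimal sketch of the standard TSDF running-average update, shown here for the voxels along a single camera ray. This is the textbook form of the update under assumed parameters, not the paper's implementation; the function name `tsdf_update`, the truncation distance `trunc`, and the weight cap `max_weight` are all hypothetical.

```python
import numpy as np

def tsdf_update(tsdf, weight, voxel_depths, measured_depth,
                trunc=0.3, max_weight=50.0):
    """Fuse one depth measurement into the voxels along a single ray.

    tsdf, weight:   per-voxel truncated signed distance and fusion weight.
    voxel_depths:   depth of each voxel center along the ray (meters).
    measured_depth: depth observed for this ray (meters).
    """
    # Signed distance to the observed surface: positive in front of it.
    sdf = measured_depth - voxel_depths
    # Voxels more than `trunc` behind the surface are occluded; skip them.
    valid = sdf >= -trunc
    # Truncate and normalize to [-1, 1].
    d = np.clip(sdf / trunc, -1.0, 1.0)
    w_new = 1.0  # assumed constant per-observation weight
    fused = (tsdf * weight + d * w_new) / (weight + w_new)
    tsdf_out = np.where(valid, fused, tsdf)
    weight_out = np.where(valid, np.minimum(weight + w_new, max_weight), weight)
    return tsdf_out, weight_out

# Five voxels along one ray; the surface is observed at 2.0 m.
depths = np.array([1.0, 1.9, 2.0, 2.1, 3.0])
tsdf, weight = tsdf_update(np.ones(5), np.zeros(5), depths, measured_depth=2.0)
```

Averaging over many observations is what lets noisy per-frame depth maps settle into a consistent surface, which lies at the zero crossing of the fused values.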
