Benjamin Choi

Continuous-time Radar-inertial Odometry for Automotive Radars

Jan 07, 2022

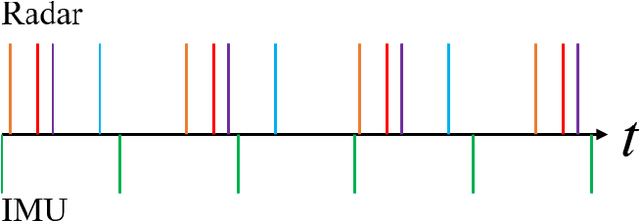

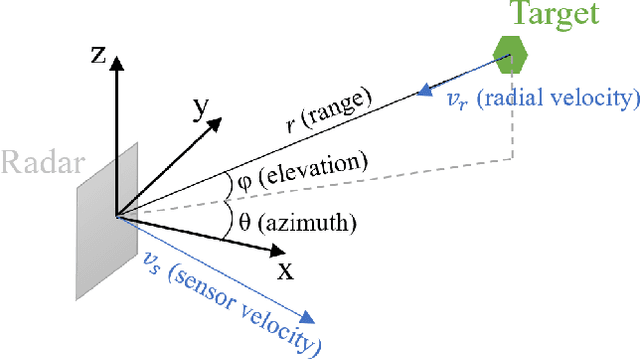

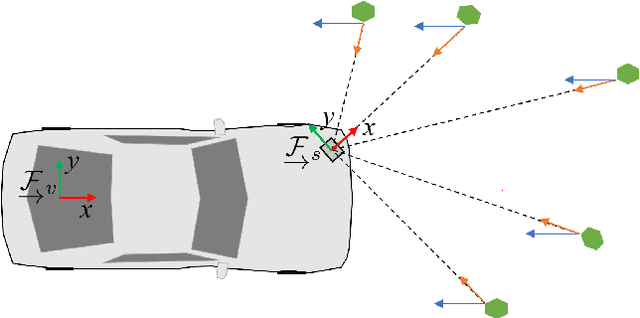

Abstract: We present an approach for radar-inertial odometry which uses a continuous-time framework to fuse measurements from multiple automotive radars and an inertial measurement unit (IMU). Unlike camera and LiDAR sensors, radar sensors are not significantly affected by adverse weather conditions. Radar's robustness in such conditions and the increasing prevalence of radars on passenger vehicles motivate us to look at the use of radar for ego-motion estimation. A continuous-time trajectory representation is applied not only as a framework to enable heterogeneous and asynchronous multi-sensor fusion, but also to facilitate efficient optimization, since poses and their derivatives can be computed in closed form at any given time along the trajectory. We compare our continuous-time estimates to those from a discrete-time radar-inertial odometry approach and show that our continuous-time method outperforms the discrete-time method. To the best of our knowledge, this is the first time a continuous-time framework has been applied to radar-inertial odometry.

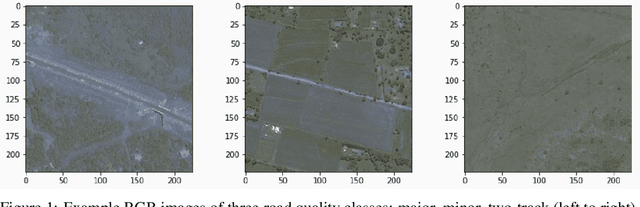

Crowd-Sourced Road Quality Mapping in the Developing World

Dec 01, 2020

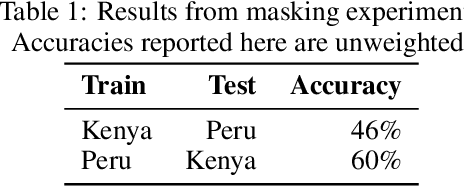

Abstract: Road networks are among the most essential components of a country's infrastructure. By facilitating the movement and exchange of goods, people, and ideas, they support economic and cultural activity both within and across borders. Up-to-date mapping of the geographical distribution of roads and their quality is essential in high-impact applications ranging from land use planning to wilderness conservation. Mapping presents a particularly pressing challenge in developing countries, where documentation is poor and disproportionate amounts of road construction are expected to occur in the coming decades. We present a new crowd-sourced approach capable of assessing road quality, and we identify key challenges and opportunities in the transferability of deep-learning-based methods across domains.

Road Mapping in Low Data Environments with OpenStreetMap

Jun 14, 2020

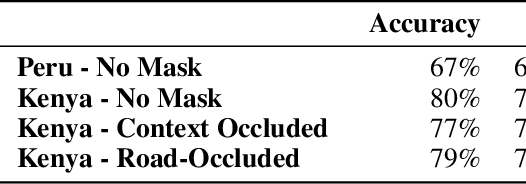

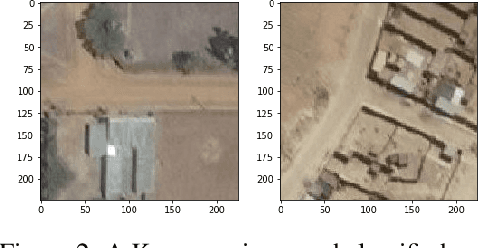

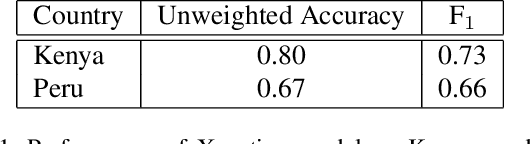

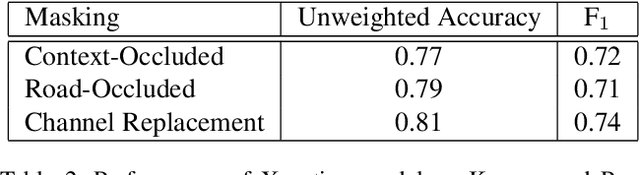

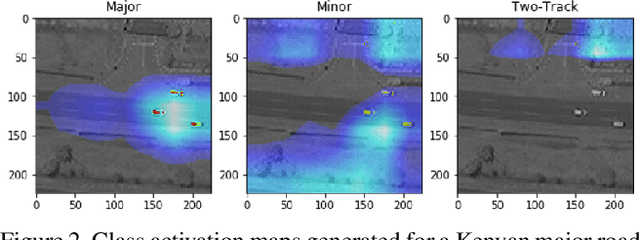

Abstract: Roads are among the most essential components of any country's infrastructure. By facilitating the movement and exchange of people, ideas, and goods, they support economic and cultural activity both within and across local and international borders. A comprehensive, up-to-date mapping of the geographical distribution of roads and their quality thus has the potential to act as an indicator for broader economic development. Such an indicator has a variety of high-impact applications, particularly in the planning of rural development projects where up-to-date infrastructure information is not available. This work investigates the viability of high-resolution satellite imagery and crowd-sourced resources like OpenStreetMap in the construction of such a mapping. We experiment with state-of-the-art deep learning methods to explore the utility of OpenStreetMap data in road classification and segmentation tasks. We also compare the performance of models in different mask occlusion scenarios as well as out-of-country domains. Our comparison highlights important pitfalls to consider in image-based infrastructure classification tasks, and shows the need for local training data specific to regions of interest for reliable performance.

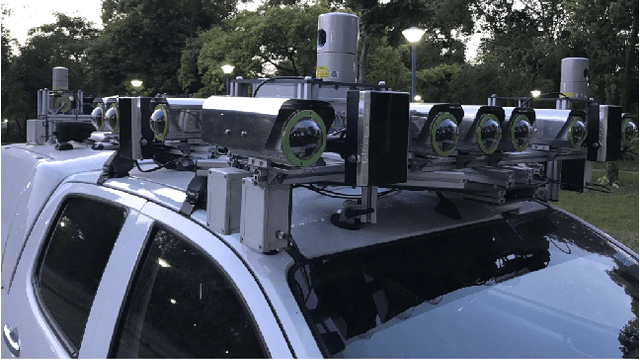

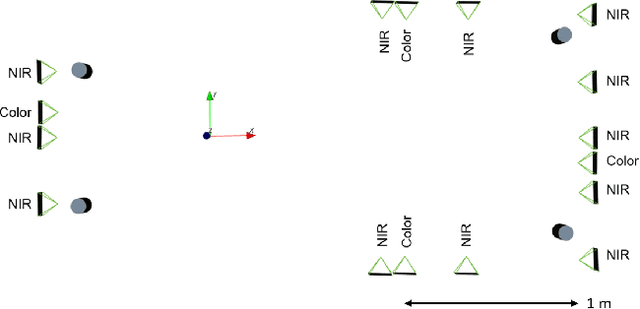

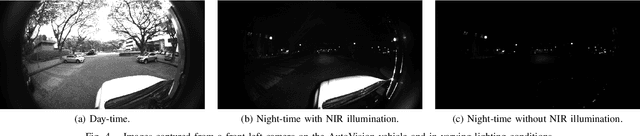

Project AutoVision: Localization and 3D Scene Perception for an Autonomous Vehicle with a Multi-Camera System

Mar 05, 2019

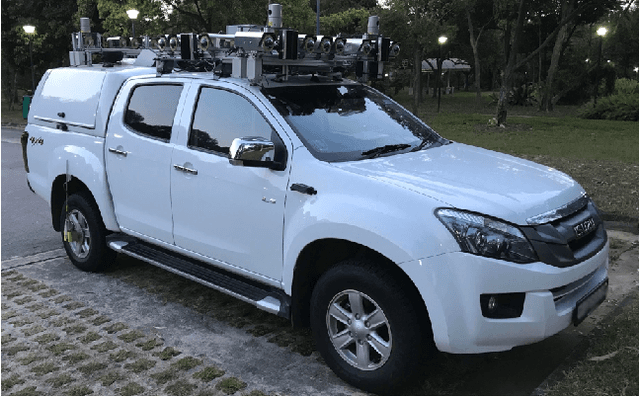

Abstract: Project AutoVision aims to develop localization and 3D scene perception capabilities for a self-driving vehicle. Such capabilities will enable autonomous navigation in urban and rural environments, in day and night, and with cameras as the only exteroceptive sensors. The sensor suite employs many cameras for both 360-degree coverage and accurate multi-view stereo; the use of low-cost cameras keeps the cost of this sensor suite to a minimum. In addition, the project seeks to extend the operating envelope to include GNSS-less conditions, which are typical of environments with tall buildings, foliage, and tunnels. Emphasis is placed on leveraging multi-view geometry and deep learning to enable the vehicle to localize and perceive in 3D space. This paper presents an overview of the project and describes the sensor suite and current progress in the areas of calibration, localization, and perception.
