Yiping Xie

PhysLLM: Harnessing Large Language Models for Cross-Modal Remote Physiological Sensing

May 06, 2025

Abstract:Remote photoplethysmography (rPPG) enables non-contact physiological measurement but remains highly susceptible to illumination changes, motion artifacts, and limited temporal modeling. Large Language Models (LLMs) excel at capturing long-range dependencies, offering a potential solution, but they struggle with the continuous, noise-sensitive nature of rPPG signals due to their text-centric design. To bridge this gap, we introduce PhysLLM, a collaborative optimization framework that synergizes LLMs with domain-specific rPPG components. Specifically, the Text Prototype Guidance (TPG) strategy is proposed to establish cross-modal alignment by projecting hemodynamic features into LLM-interpretable semantic space, effectively bridging the representational gap between physiological signals and linguistic tokens. Besides, a novel Dual-Domain Stationary (DDS) Algorithm is proposed for resolving signal instability through adaptive time-frequency domain feature re-weighting. Finally, rPPG task-specific cues systematically inject physiological priors through physiological statistics, environmental contextual answering, and task description, leveraging cross-modal learning to integrate both visual and textual information, enabling dynamic adaptation to challenging scenarios like variable illumination and subject movements. Evaluated on four benchmark datasets, PhysLLM achieves state-of-the-art accuracy and robustness, demonstrating superior generalization across lighting variations and motion scenarios.

CardiacMamba: A Multimodal RGB-RF Fusion Framework with State Space Models for Remote Physiological Measurement

Feb 19, 2025

Abstract:Heart rate (HR) estimation via remote photoplethysmography (rPPG) offers a non-invasive solution for health monitoring. However, traditional single-modality approaches (RGB or Radio Frequency (RF)) face challenges in balancing robustness and accuracy due to lighting variations, motion artifacts, and skin tone bias. In this paper, we propose CardiacMamba, a multimodal RGB-RF fusion framework that leverages the complementary strengths of both modalities. It introduces the Temporal Difference Mamba Module (TDMM) to capture dynamic changes in RF signals using timing differences between frames, enhancing the extraction of local and global features. Additionally, CardiacMamba employs a Bidirectional SSM for cross-modal alignment and a Channel-wise Fast Fourier Transform (CFFT) to effectively capture and refine the frequency domain characteristics of RGB and RF signals, ultimately improving heart rate estimation accuracy and periodicity detection. Extensive experiments on the EquiPleth dataset demonstrate state-of-the-art performance, achieving marked improvements in accuracy and robustness. CardiacMamba significantly mitigates skin tone bias, reducing performance disparities across demographic groups, and maintains resilience under missing-modality scenarios. By addressing critical challenges in fairness, adaptability, and precision, the framework advances rPPG technology toward reliable real-world deployment in healthcare. The codes are available at: https://github.com/WuZheng42/CardiacMamba.

Semi-rPPG: Semi-Supervised Remote Physiological Measurement with Curriculum Pseudo-Labeling

Feb 06, 2025

Abstract:Remote Photoplethysmography (rPPG) is a promising technique to monitor physiological signals such as heart rate from facial videos. However, labeled facial videos for this research are challenging to collect. Current rPPG research is mainly based on several small public datasets collected in simple environments, which limits the generalization and scale of the AI models. Semi-supervised methods that leverage a small amount of labeled data and abundant unlabeled data can fill this gap for rPPG learning. In this study, a novel semi-supervised learning method named Semi-rPPG that combines curriculum pseudo-labeling and consistency regularization is proposed to extract intrinsic physiological features from unlabeled data without the model being impaired by noise. Specifically, a curriculum pseudo-labeling strategy with signal-to-noise ratio (SNR) criteria is proposed to annotate the unlabeled data while adaptively filtering out the low-quality unlabeled data. Besides, a novel consistency regularization term for quasi-periodic signals is proposed through weak and strong augmented clips. To benefit the research on semi-supervised rPPG measurement, we establish a novel semi-supervised benchmark for rPPG learning through intra-dataset and cross-dataset evaluation on four public datasets. The proposed Semi-rPPG method achieves the best results compared with three classical semi-supervised methods under different protocols. Ablation studies are conducted to prove the effectiveness of the proposed methods.
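The SNR-based filtering of pseudo-labels described in the abstract can be illustrated with a minimal sketch. It is not the Semi-rPPG implementation: the function names, the 0.7 to 3.0 Hz heart-rate band, the 0.1 Hz window around the spectral peak, and the fixed threshold are all assumptions made for illustration, and a real curriculum would presumably adjust the threshold over training.

```python
import cmath
import math


def power_spectrum(signal, fs):
    """Naive DFT power spectrum; returns (freqs_hz, power) for non-negative bins."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        freqs.append(k * fs / n)
        power.append(abs(coeff) ** 2)
    return freqs, power


def snr_db(signal, fs, band=(0.7, 3.0), half_width=0.1):
    """Power near the dominant in-band peak versus the rest of the band, in dB.

    The band and half_width values are assumptions, not taken from the paper.
    """
    freqs, power = power_spectrum(signal, fs)
    in_band = [(f, p) for f, p in zip(freqs, power) if band[0] <= f <= band[1]]
    peak_f = max(in_band, key=lambda fp: fp[1])[0]
    sig = sum(p for f, p in in_band if abs(f - peak_f) <= half_width)
    noise = sum(p for f, p in in_band if abs(f - peak_f) > half_width)
    # Floor the noise power to avoid dividing by zero on a perfectly clean signal.
    return 10 * math.log10(sig / max(noise, 1e-12))


def select_pseudo_labels(clips, fs, threshold_db):
    """Keep only predicted rPPG clips whose SNR reaches the (hypothetical) threshold."""
    return [clip for clip in clips if snr_db(clip, fs) >= threshold_db]
```

A clip whose predicted waveform is dominated by a single frequency passes the filter, while a clip whose power is spread across the band is discarded and would not be used as a pseudo-label.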

Robotic transcatheter tricuspid valve replacement with hybrid enhanced intelligence: a new paradigm and first-in-vivo study

Nov 19, 2024

Abstract:Transcatheter tricuspid valve replacement (TTVR) is the latest treatment for tricuspid regurgitation and is in the early stages of clinical adoption. Intelligent robotic approaches are expected to overcome the challenges of surgical manipulation and widespread dissemination, but systems and protocols with high clinical utility have not yet been reported. In this study, we propose a complete solution that includes a passive stabilizer, robotic drive, detachable delivery catheter and valve manipulation mechanism. Working towards autonomy, a hybrid augmented intelligence approach based on reinforcement learning, Monte Carlo probabilistic maps and human-robot co-piloted control was introduced. Systematic tests in phantom and first-in-vivo animal experiments were performed to verify that the system design met the clinical requirement. Furthermore, the experimental results confirmed the advantages of co-piloted control over conventional master-slave control in terms of time efficiency, control efficiency, autonomy and stability of operation. In conclusion, this study provides a comprehensive pathway for robotic TTVR and, to our knowledge, completes the first animal study that not only successfully demonstrates the application of hybrid enhanced intelligence in interventional robotics, but also provides a solution with high application value for a cutting-edge procedure.

SFDA-rPPG: Source-Free Domain Adaptive Remote Physiological Measurement with Spatio-Temporal Consistency

Sep 18, 2024

Abstract:Remote Photoplethysmography (rPPG) is a non-contact method that uses facial video to predict changes in blood volume, enabling physiological metrics measurement. Traditional rPPG models often struggle with poor generalization capacity in unseen domains. Current solutions to this problem aim to improve generalization in the target domain through Domain Generalization (DG) or Domain Adaptation (DA). However, both approaches require access to source domain and target domain data, which is infeasible in scenarios with limited access to source data, and accessing source domain data also raises privacy concerns. In this paper, we propose the first Source-free Domain Adaptation benchmark for rPPG measurement (SFDA-rPPG), which overcomes these limitations by enabling effective domain adaptation without access to source domain data. Our framework incorporates a Three-Branch Spatio-Temporal Consistency Network (TSTC-Net) to enhance feature consistency across domains. Furthermore, we propose a new rPPG distribution alignment loss based on the Frequency-domain Wasserstein Distance (FWD), which leverages optimal transport to align power spectrum distributions across domains effectively and further enforces the alignment of the three branches. Extensive cross-domain experiments and ablation studies demonstrate the effectiveness of our proposed method in source-free domain adaptation settings. Our findings highlight the significant contribution of the proposed FWD loss for distributional alignment, providing a valuable reference for future research and applications. The source code is available at https://github.com/XieYiping66/SFDA-rPPG
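To make the idea of a Wasserstein distance between power spectra concrete, here is a minimal sketch. It is not the FWD loss from the paper, whose exact formulation is not given in the abstract: it uses the standard closed form of the 1-D Wasserstein-1 distance (the area between two cumulative distributions) on band-limited, normalized power spectra. The function names and the 0.7 to 3.0 Hz band are assumptions, and a trainable loss would need a differentiable implementation.

```python
import cmath
import math


def band_spectrum(signal, fs, band=(0.7, 3.0)):
    """Power spectrum restricted to `band` (Hz), normalized to sum to 1."""
    n = len(signal)
    mean = sum(signal) / n
    power = []
    for k in range(n // 2 + 1):
        if band[0] <= k * fs / n <= band[1]:
            coeff = sum((x - mean) * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(signal))
            power.append(abs(coeff) ** 2)
    total = sum(power)
    if total == 0:
        raise ValueError("signal has no power inside the band")
    return [p / total for p in power]


def frequency_wasserstein(sig_a, sig_b, fs, band=(0.7, 3.0)):
    """Wasserstein-1 distance (in Hz) between two normalized power spectra.

    For 1-D distributions on a shared uniform grid, W1 equals the bin width
    times the summed absolute difference of the cumulative distributions.
    """
    if len(sig_a) != len(sig_b):
        raise ValueError("signals must share a length so the frequency grids match")
    p = band_spectrum(sig_a, fs, band)
    q = band_spectrum(sig_b, fs, band)
    step = fs / len(sig_a)  # frequency resolution in Hz
    cdf_gap, distance = 0.0, 0.0
    for a, b in zip(p, q):
        cdf_gap += a - b
        distance += abs(cdf_gap) * step
    return distance
```

For two pure tones the distance reduces to the gap between their frequencies, which is why this kind of measure penalizes a shifted heart-rate peak in proportion to the size of the shift.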

PhysMamba: Efficient Remote Physiological Measurement with SlowFast Temporal Difference Mamba

Sep 18, 2024

Abstract:Facial-video based remote photoplethysmography (rPPG) aims at measuring physiological signals and monitoring heart activity without any contact, showing significant potential in various applications. Previous deep learning based rPPG measurement methods are primarily based on CNNs and Transformers. However, the limited receptive fields of CNNs restrict their ability to capture long-range spatio-temporal dependencies, while Transformers also struggle with modeling long video sequences with high complexity. Recently, the state space models (SSMs) represented by Mamba have become known for their impressive performance on capturing long-range dependencies from long sequences. In this paper, we propose PhysMamba, a Mamba-based framework, to efficiently represent long-range physiological dependencies from facial videos. Specifically, we introduce the Temporal Difference Mamba block to first enhance local dynamic differences and further model the long-range spatio-temporal context. Moreover, a dual-stream SlowFast architecture is utilized to fuse the multi-scale temporal features. Extensive experiments are conducted on three benchmark datasets to demonstrate the superiority and efficiency of PhysMamba. The codes are available at https://github.com/Chaoqi31/PhysMamba

NeuRSS: Enhancing AUV Localization and Bathymetric Mapping with Neural Rendering for Sidescan SLAM

May 09, 2024

Abstract:Implicit neural representations and neural rendering have gained increasing attention for bathymetry estimation from sidescan sonar (SSS). These methods incorporate multiple observations of the same place from SSS data to constrain the elevation estimate, converging to a globally-consistent bathymetric model. However, the quality and precision of the bathymetric estimate are limited by the positioning accuracy of the autonomous underwater vehicle (AUV) equipped with the sonar. The global positioning estimate of the AUV relying on dead reckoning (DR) has an unbounded error due to the absence of a geo-reference system like GPS underwater. To address this challenge, we propose in this letter a modern and scalable framework, NeuRSS, for SSS SLAM based on DR and loop closures (LCs) over large timescales, with an elevation prior provided by the bathymetric estimate using neural rendering from SSS. This framework is an iterative procedure that improves localization and bathymetric mapping. Initially, the bathymetry estimated from SSS using the DR estimate, though crude, can provide an important elevation prior in the nonlinear least-squares (NLS) optimization that estimates the relative pose between two loop-closure vertices in a pose graph. Subsequently, the global pose estimate from the SLAM component improves the positioning estimate of the vehicle, thus improving the bathymetry estimation. We validate our localization and mapping approach on two large surveys collected with a surface vessel and an AUV, respectively. We evaluate their localization results against the ground truth and compare the bathymetry estimation against data collected with multibeam echo sounders (MBES).

Bathymetric Surveying with Imaging Sonar Using Neural Volume Rendering

Apr 23, 2024

Abstract:This research addresses the challenge of estimating bathymetry from imaging sonars where the state-of-the-art works have primarily relied on either supervised learning with ground-truth labels or surface rendering based on the Lambertian assumption. In this letter, we propose a novel, self-supervised framework based on volume rendering for reconstructing bathymetry using forward-looking sonar (FLS) data collected during standard surveys. We represent the seafloor as a neural heightmap encapsulated with a parametric multi-resolution hash encoding scheme and model the sonar measurements with a differentiable renderer using sonar volumetric rendering employed with hierarchical sampling techniques. Additionally, we model the horizontal and vertical beam patterns and estimate them jointly with the bathymetry. We evaluate the proposed method quantitatively on simulation and field data collected by remotely operated vehicles (ROVs) during low-altitude surveys. Results show that the proposed method outperforms the current state-of-the-art approaches that use imaging sonars for seabed mapping. We also demonstrate that the proposed approach can potentially be used to increase the resolution of a low-resolution prior map with FLS data from low-altitude surveys.

A Dense Subframe-based SLAM Framework with Side-scan Sonar

Dec 21, 2023

Abstract:Side-scan sonar (SSS) is a lightweight acoustic sensor that is commonly deployed on autonomous underwater vehicles (AUVs) to provide high-resolution seafloor images. However, leveraging side-scan images for simultaneous localization and mapping (SLAM) presents a notable challenge, primarily due to the difficulty of establishing a sufficient number of accurate correspondences between these images. To address this, we introduce a novel subframe-based dense SLAM framework utilizing side-scan sonar data, enabling effective dense matching in overlapping regions of paired side-scan images. With each image being evenly divided into subframes, we propose a robust estimation pipeline to estimate the relative pose between each pair of subframes, by using a good inlier set identified from dense correspondences. These relative poses are then integrated as edge constraints in a factor graph to optimize the AUV pose trajectory. The proposed framework is evaluated on three real datasets collected by a Hugin AUV. One of them includes manually-annotated keypoint correspondences as ground truth and is used for evaluation of pose trajectory. We also present a feasible way of evaluating mapping quality against multi-beam echosounder (MBES) data without the influence of pose. Experimental results demonstrate that our approach effectively mitigates drift from the dead-reckoning (DR) system and enables quasi-dense bathymetry reconstruction. An open-source implementation of this work is available.

Evaluation of a Canonical Image Representation for Sidescan Sonar

Apr 18, 2023

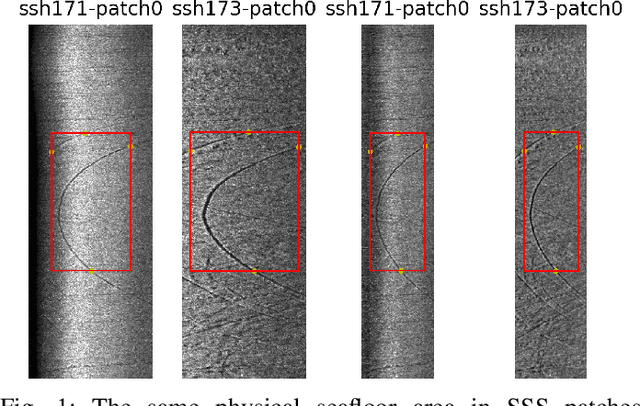

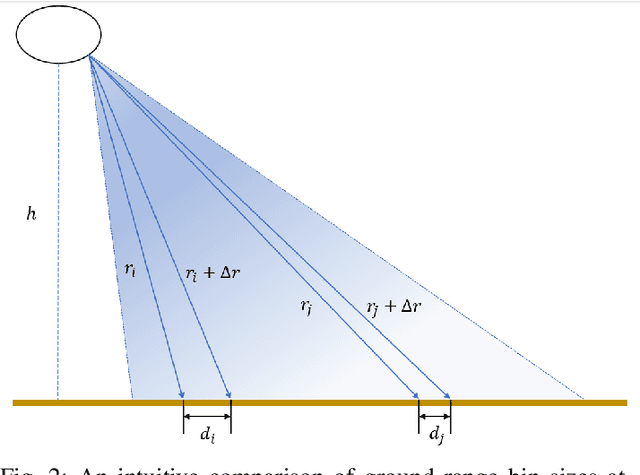

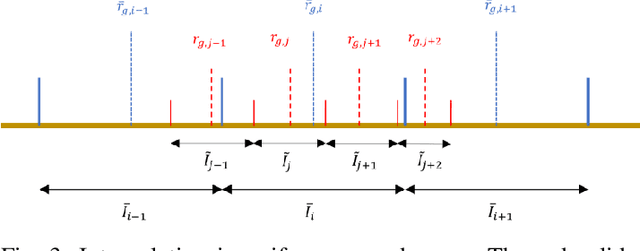

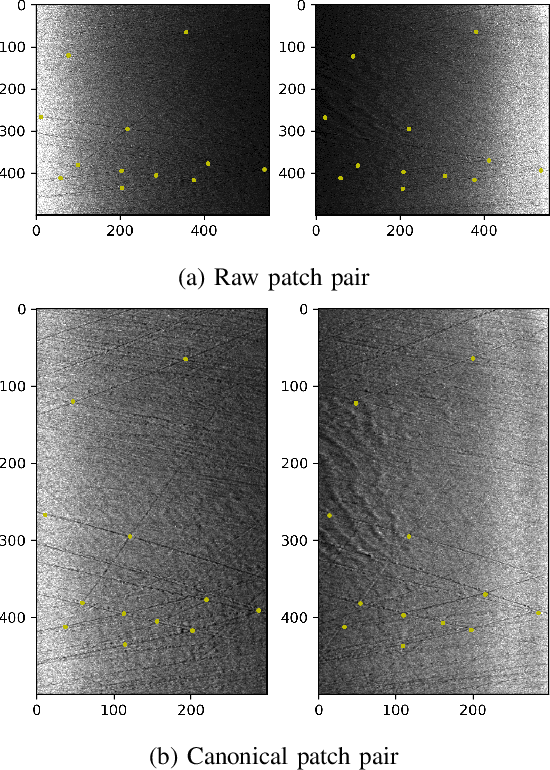

Abstract:Acoustic sensors play an important role in autonomous underwater vehicles (AUVs). Sidescan sonar (SSS) detects a wide range and provides photo-realistic images in high resolution. However, SSS projects the 3D seafloor to 2D images, which are distorted by the AUV's altitude, target's range and sensor's resolution. As a result, the same physical area can show significant visual differences in SSS images from different survey lines, causing difficulties in tasks such as pixel correspondence and template matching. In this paper, a canonical transformation method consisting of intensity correction and slant range correction is proposed to decrease the above distortion. The intensity correction includes beam pattern correction and incident angle correction using three different Lambertian laws (cos, cos², cot), whereas the slant range correction removes the nadir zone and projects the position of SSS elements into equally horizontally spaced, view-point independent bins. The proposed method is evaluated on real data collected by a HUGIN AUV, with manually-annotated pixel correspondence as ground truth reference. Experimental results on patch pairs compare similarity measures and keypoint descriptor matching. The results show that the canonical transformation can improve the patch similarity, as well as SIFT descriptor matching accuracy in different images where the same physical area was ensonified.
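The slant range correction mentioned in the abstract can be sketched in a few lines. This is an illustration under simplifying assumptions rather than the paper's procedure: the seafloor is assumed flat, so ground range follows from slant range and altitude by the Pythagorean relation, and each output bin takes the nearest slant-range sample. The function name and all parameters are hypothetical.

```python
import math


def slant_range_correct(ping, slant_res, altitude, ground_res, n_bins):
    """Resample one side of a sidescan ping into equally spaced ground-range bins.

    ping       : intensities indexed by slant-range sample (index 0 at the sensor)
    slant_res  : metres per slant-range sample
    altitude   : sensor height above the (assumed flat) seafloor, in metres
    ground_res : metres per output bin
    Returns a list of n_bins intensities; bins beyond the recorded range are None.
    """
    corrected = []
    for b in range(n_bins):
        ground = b * ground_res
        # Flat-seafloor geometry: slant^2 = ground^2 + altitude^2.
        slant = math.hypot(ground, altitude)
        idx = int(round(slant / slant_res))
        corrected.append(ping[idx] if idx < len(ping) else None)
    return corrected
```

Because the first output bin maps to a slant range equal to the altitude, samples recorded before the first seafloor return (the nadir zone) are never read, which matches the removal of the nadir zone described above.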
