Evaluation of a Canonical Image Representation for Sidescan Sonar

Apr 18, 2023

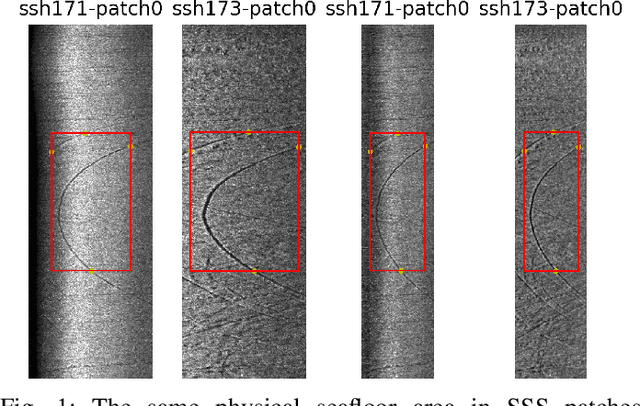

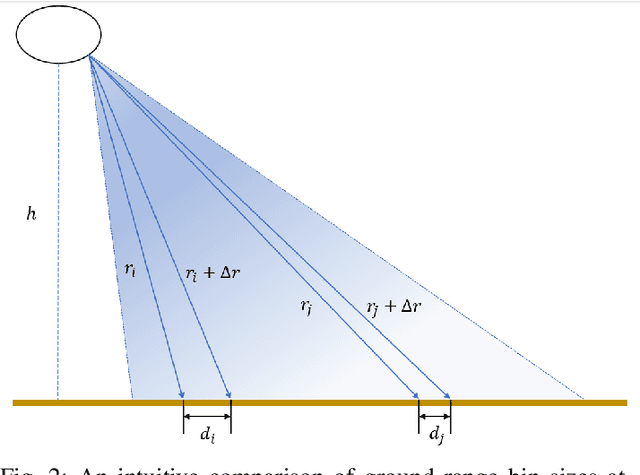

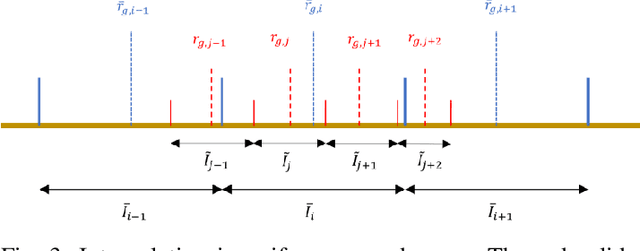

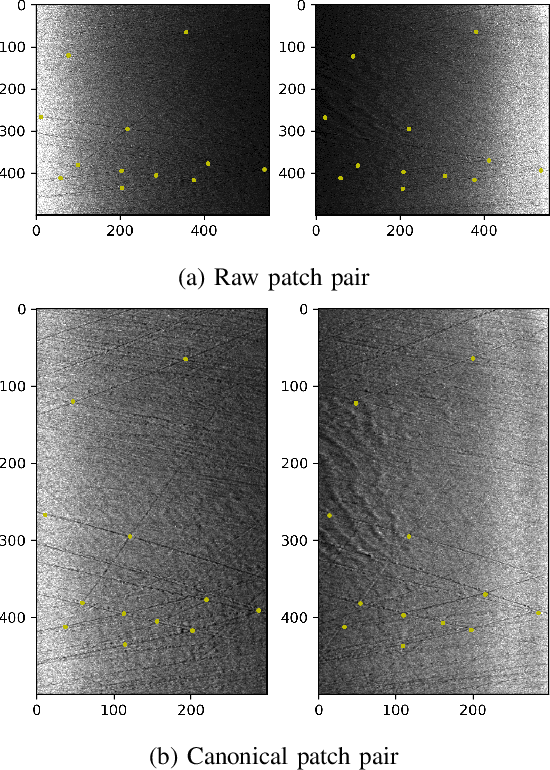

Acoustic sensors play an important role in autonomous underwater vehicles (AUVs). Sidescan sonar (SSS) covers a wide range and provides high-resolution, photo-realistic images. However, SSS projects the 3D seafloor onto 2D images, which are distorted by the AUV's altitude, the target's range and the sensor's resolution. As a result, the same physical area can look significantly different in SSS images from different survey lines, which makes tasks such as pixel correspondence and template matching difficult. In this paper, a canonical transformation method consisting of intensity correction and slant range correction is proposed to reduce these distortions. The intensity correction includes beam pattern correction and incident angle correction using three different Lambertian laws (cos, cos², cot), whereas the slant range correction removes the nadir zone and projects the positions of SSS elements into equally spaced horizontal bins that are independent of the viewpoint. The proposed method is evaluated on real data collected by a HUGIN AUV, with manually annotated pixel correspondences as the ground truth reference. Experiments on patch pairs compare similarity measures and keypoint descriptor matching. The results show that the canonical transformation improves patch similarity, as well as SIFT descriptor matching accuracy, between different images in which the same physical area was ensonified.
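The two corrections named in the abstract can be illustrated with a minimal sketch for a single ping. This is not the authors' implementation. It assumes a locally flat seafloor, so that the incidence angle θ satisfies cos θ = altitude / slant range, and it omits beam pattern correction entirely. The function names `lambert_model` and `canonical_ping` and all parameters are hypothetical, chosen only for illustration.

```python
import numpy as np


def lambert_model(slant_range, altitude, law="cos"):
    """Expected relative intensity per bin under a flat-seafloor assumption.

    cos(theta) = altitude / slant_range, where theta is the incidence angle
    measured from the (vertical) seafloor normal.
    """
    r = np.asarray(slant_range, dtype=float)
    cos_t = np.clip(altitude / r, 0.0, 1.0)
    if law == "cos":
        return cos_t
    if law == "cos2":
        return cos_t ** 2
    if law == "cot":
        sin_t = np.sqrt(1.0 - cos_t ** 2)
        return cos_t / np.maximum(sin_t, 1e-6)
    raise ValueError(f"unknown law: {law!r}")


def canonical_ping(intensity, altitude, slant_res, ground_res,
                   n_ground_bins, law="cos"):
    """Incident angle correction followed by slant range correction.

    intensity     : 1D array, one sample per slant-range bin (one side).
    altitude      : sensor height above the seafloor, in metres.
    slant_res     : size of one slant-range bin, in metres.
    ground_res    : size of one output horizontal bin, in metres.
    n_ground_bins : number of output horizontal bins.
    Returns an array of length n_ground_bins; bins with no data are NaN.
    """
    intensity = np.asarray(intensity, dtype=float)
    r = (np.arange(intensity.size) + 0.5) * slant_res  # bin-centre ranges

    # Drop the nadir zone: samples with slant range <= altitude.
    valid = r > altitude
    if not valid.any():
        return np.full(n_ground_bins, np.nan)
    r_v = r[valid]

    # Incident angle correction: divide by the chosen Lambertian model.
    model = np.maximum(lambert_model(r_v, altitude, law), 1e-6)
    corrected = intensity[valid] / model

    # Slant range correction: horizontal distance, then resample onto
    # equally spaced horizontal bins by linear interpolation.
    ground = np.sqrt(r_v ** 2 - altitude ** 2)
    targets = (np.arange(n_ground_bins) + 0.5) * ground_res
    return np.interp(targets, ground, corrected, left=np.nan, right=np.nan)
```

Under these assumptions, a ping whose raw intensity exactly follows the cos law becomes constant after correction, and output bins closer to nadir than the first valid sample are left as NaN. Real data would additionally need the beam pattern correction and a seafloor model better than a flat plane.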
