Nils Bore

Benchmarking Classical and Learning-Based Multibeam Point Cloud Registration

May 10, 2024

Abstract:Deep learning has shown promising results for multiple 3D point cloud registration datasets. However, in the underwater domain, most registration of multibeam echo-sounder (MBES) point cloud data is still performed using classical methods in the iterative closest point (ICP) family. In this work, we curate and release the DotsonEast Dataset, a semi-synthetic MBES registration dataset constructed from an autonomous underwater vehicle in West Antarctica. Using this dataset, we systematically benchmark the performance of 2 classical and 4 learning-based methods. The experimental results show that the learning-based methods work well for coarse alignment, and are better at recovering rough transforms consistently at high overlap (20-50%). In comparison, GICP (a variant of ICP) performs well for fine alignment and is better across all metrics at extremely low overlap (10%). To the best of our knowledge, this is the first work to benchmark both learning-based and classical registration methods on an AUV-based MBES dataset. To facilitate future research, both the code and data are made available online.

NeuRSS: Enhancing AUV Localization and Bathymetric Mapping with Neural Rendering for Sidescan SLAM

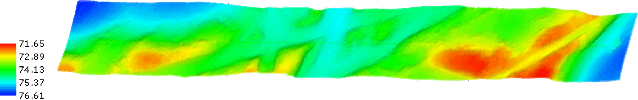

May 09, 2024

Abstract:Implicit neural representations and neural rendering have gained increasing attention for bathymetry estimation from sidescan sonar (SSS). These methods incorporate multiple observations of the same place from SSS data to constrain the elevation estimate, converging to a globally-consistent bathymetric model. However, the quality and precision of the bathymetric estimate are limited by the positioning accuracy of the autonomous underwater vehicle (AUV) equipped with the sonar. The global positioning estimate of the AUV relying on dead reckoning (DR) has an unbounded error due to the absence of a geo-reference system like GPS underwater. To address this challenge, we propose in this letter a modern and scalable framework, NeuRSS, for SSS SLAM based on DR and loop closures (LCs) over large timescales, with an elevation prior provided by the bathymetric estimate using neural rendering from SSS. This framework is an iterative procedure that improves localization and bathymetric mapping. Initially, the bathymetry estimated from SSS using the DR estimate, though crude, can provide an important elevation prior in the nonlinear least-squares (NLS) optimization that estimates the relative pose between two loop-closure vertices in a pose graph. Subsequently, the global pose estimate from the SLAM component improves the positioning estimate of the vehicle, thus improving the bathymetry estimation. We validate our localization and mapping approach on two large surveys collected with a surface vessel and an AUV, respectively. We evaluate their localization results against the ground truth and compare the bathymetry estimation against data collected with multibeam echo sounders (MBES).

Bathymetric Surveying with Imaging Sonar Using Neural Volume Rendering

Apr 23, 2024

Abstract:This research addresses the challenge of estimating bathymetry from imaging sonars where the state-of-the-art works have primarily relied on either supervised learning with ground-truth labels or surface rendering based on the Lambertian assumption. In this letter, we propose a novel, self-supervised framework based on volume rendering for reconstructing bathymetry using forward-looking sonar (FLS) data collected during standard surveys. We represent the seafloor as a neural heightmap encapsulated with a parametric multi-resolution hash encoding scheme and model the sonar measurements with a differentiable renderer using sonar volumetric rendering employed with hierarchical sampling techniques. Additionally, we model the horizontal and vertical beam patterns and estimate them jointly with the bathymetry. We evaluate the proposed method quantitatively on simulation and field data collected by remotely operated vehicles (ROVs) during low-altitude surveys. Results show that the proposed method outperforms the current state-of-the-art approaches that use imaging sonars for seabed mapping. We also demonstrate that the proposed approach can potentially be used to increase the resolution of a low-resolution prior map with FLS data from low-altitude surveys.

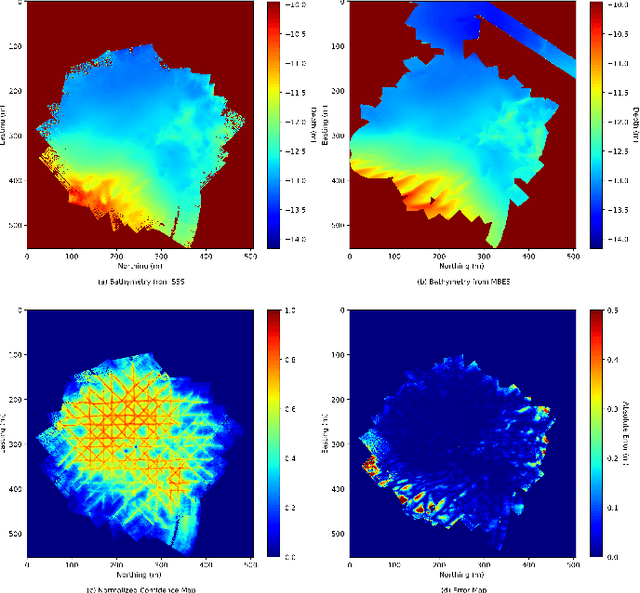

Neural Shape-from-Shading for Survey-Scale Self-Consistent Bathymetry from Sidescan

Jun 18, 2022

Abstract:Sidescan sonar is a small and cost-effective sensing solution that can be easily mounted on most vessels. Historically, it has been used to produce high-definition images that experts may use to identify targets on the seafloor or in the water column. While solutions have been proposed to produce bathymetry solely from sidescan, or in conjunction with multibeam, they have had limited impact. This is partly a result of mostly being limited to single sidescan lines. In this paper, we propose a modern, scalable solution to create high quality survey-scale bathymetry from many sidescan lines. By incorporating multiple observations of the same place, results can be improved as the estimates reinforce each other. Our method is based on sinusoidal representation networks, a recent advance in neural representation learning. We demonstrate the scalability of the approach by producing bathymetry from a large sidescan survey. The resulting quality is demonstrated by comparing to data collected with a high-precision multibeam sensor.

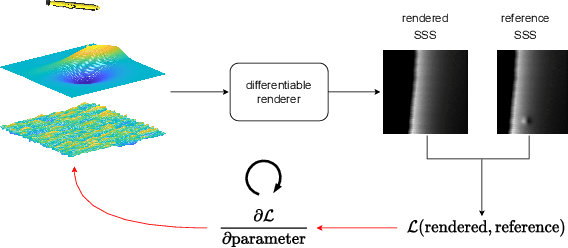

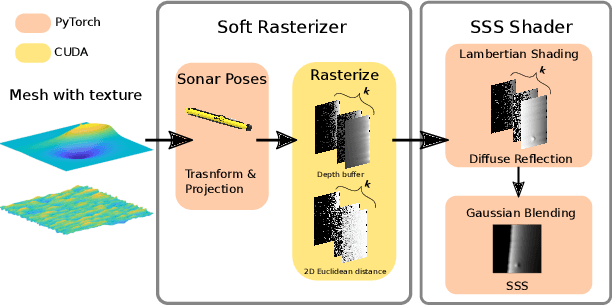

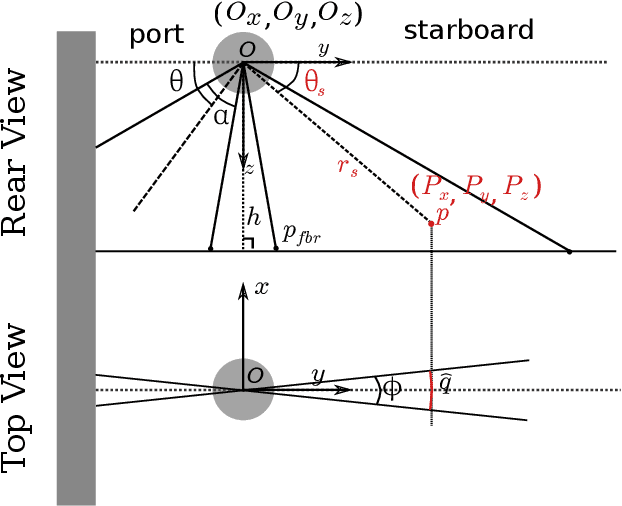

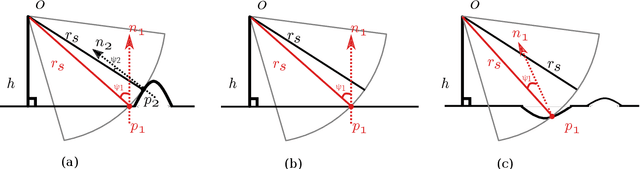

Towards Differentiable Rendering for Sidescan Sonar Imagery

Jun 15, 2022

Abstract:Recent advances in differentiable rendering, which allow calculating the gradients of 2D pixel values with respect to 3D object models, can be applied to estimation of the model parameters by gradient-based optimization with only 2D supervision. It is easy to incorporate deep neural networks into such an optimization pipeline, allowing the leveraging of deep learning techniques. This also largely reduces the requirement for collecting and annotating 3D data, which is very difficult in some applications, for example when constructing geometry from 2D sensors. In this work, we propose a differentiable renderer for sidescan sonar imagery. We further demonstrate its ability to solve the inverse problem of directly reconstructing a 3D seafloor mesh from only 2D sidescan sonar data.

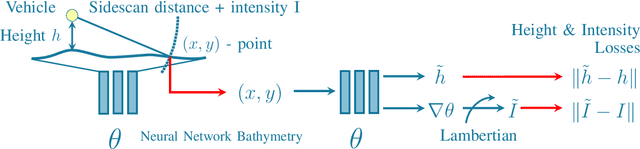

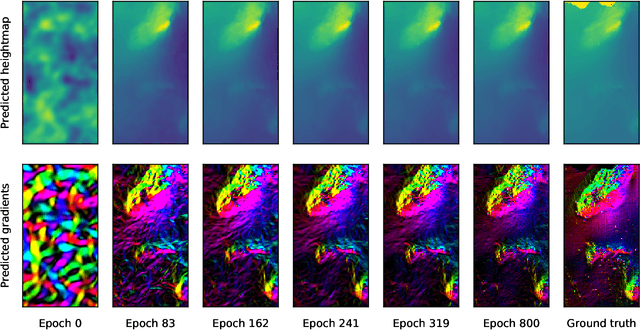

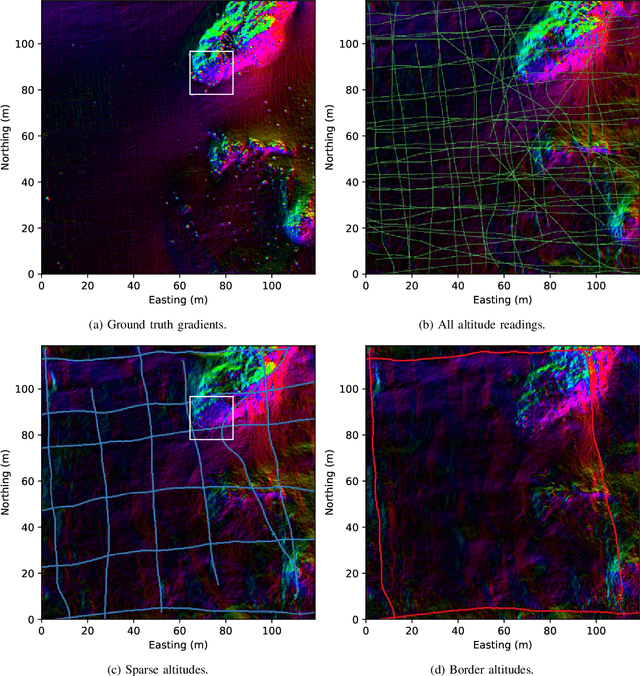

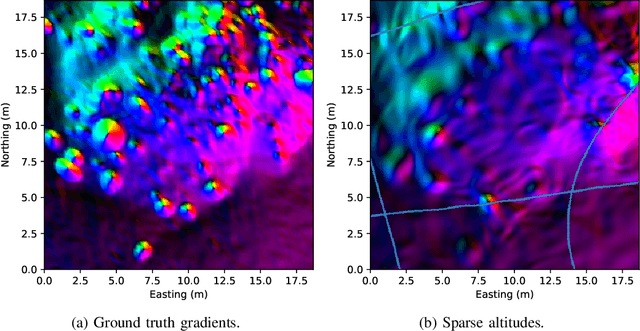

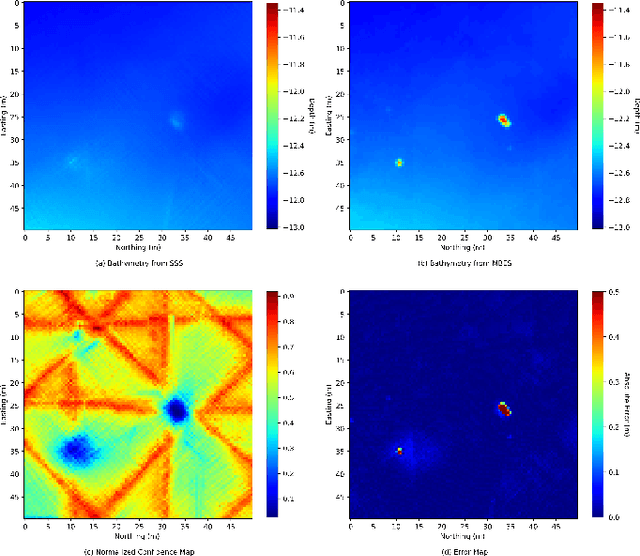

Neural Network Normal Estimation and Bathymetry Reconstruction from Sidescan Sonar

Jun 15, 2022

Abstract:Sidescan sonar intensity encodes information about the changes of surface normal of the seabed. However, other factors such as seabed geometry as well as its material composition also affect the return intensity. One can model these intensity changes in a forward direction, from the surface normals of a bathymetric map and the physical properties to the measured intensity, or alternatively one can use an inverse model which starts from the intensities and models the surface normals. Here we use an inverse model which leverages deep learning's ability to learn from data; a convolutional neural network is used to estimate the surface normal from the sidescan. Thus the internal properties of the seabed are only implicitly learned. Once this information is estimated, a bathymetric map can be reconstructed through an optimization framework that also includes altimeter readings to provide a sparse depth profile as a constraint. Implicit neural representation learning was recently proposed to represent the bathymetric map in such an optimization framework. In this article, we use a neural network to represent the map and optimize it under constraints of altimeter points and estimated surface normal from sidescan. By fusing multiple observations from different angles from several sidescan lines, the estimated results are improved through optimization. We demonstrate the efficiency and scalability of the approach by reconstructing a high-quality bathymetry using sidescan data from a large sidescan survey. We compare the proposed data-driven inverse model approach of modeling a sidescan with a forward Lambertian model. We assess the quality of each reconstruction by comparing it with data constructed from a multibeam sensor. We are thus able to discuss the strengths and weaknesses of each approach.

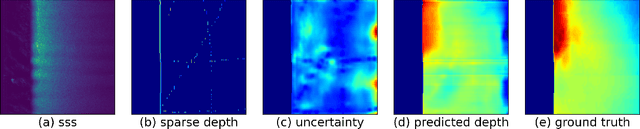

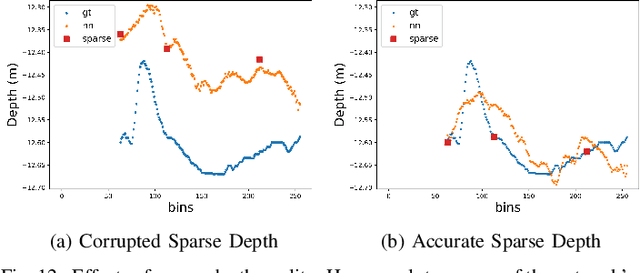

High-Resolution Bathymetric Reconstruction From Sidescan Sonar With Deep Neural Networks

Jun 15, 2022

Abstract:We propose a novel data-driven approach for high-resolution bathymetric reconstruction from sidescan. Sidescan sonar (SSS) intensities as a function of range do contain some information about the slope of the seabed. However, that information must be inferred. Additionally, the navigation system provides the estimated trajectory, and normally the altitude along this trajectory is also available. From these we obtain a very coarse seabed bathymetry as an input. This is then combined with the indirect but high-resolution seabed slope information from the sidescan to estimate the full bathymetry. This sparse depth could be acquired by a single-beam echo sounder, a Doppler Velocity Log (DVL), other bottom-tracking sensors, or a bottom-tracking algorithm applied to the sidescan itself. In our work, a fully convolutional network is used to estimate the depth contour and its aleatoric uncertainty from the sidescan images and sparse depth in an end-to-end fashion. The estimated depth is then used together with the range to calculate the point's 3D location on the seafloor. A high-quality bathymetric map can be reconstructed after fusing the depth predictions and the corresponding confidence measures from the neural networks. We show the improvement of the bathymetric map gained by using sparse depths with sidescan over estimates with sidescan alone. We also show the benefit of confidence weighting when fusing multiple bathymetric estimates into a single map.
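
The confidence-weighted fusion mentioned in this abstract can be illustrated with a minimal sketch. The abstract does not state the fusion rule, so the inverse-variance weighting below is an assumption, and the function name `fuse_depths` and its arguments are hypothetical. It combines several depth predictions for one map cell, each with a predicted variance, into a single estimate.

```python
def fuse_depths(depths, variances):
    """Fuse depth estimates for one map cell by inverse-variance weighting.

    Assumption: each prediction comes with a positive variance, and a lower
    variance means higher confidence. This is an illustrative rule, not
    necessarily the one used in the paper.
    """
    if not depths or len(depths) != len(variances):
        raise ValueError("depths and variances must be non-empty and equal in length")
    if any(v <= 0.0 for v in variances):
        raise ValueError("variances must be positive")

    # Each estimate is weighted by the inverse of its variance.
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)

    fused_depth = sum(w * d for w, d in zip(weights, depths)) / total_weight
    # Variance of the fused estimate under independent Gaussian errors.
    fused_variance = 1.0 / total_weight
    return fused_depth, fused_variance
```

With equal variances this reduces to a plain average; an estimate with a large predicted variance contributes little to the fused cell value.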

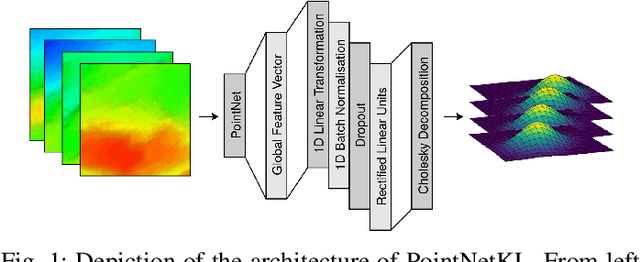

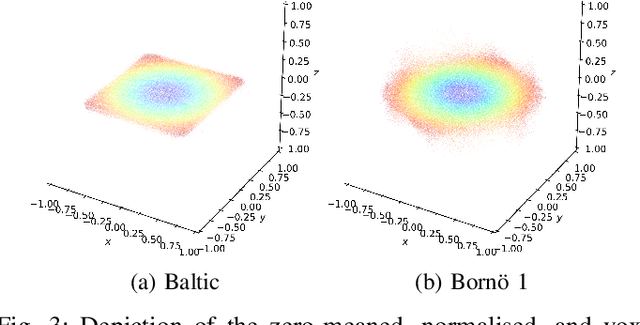

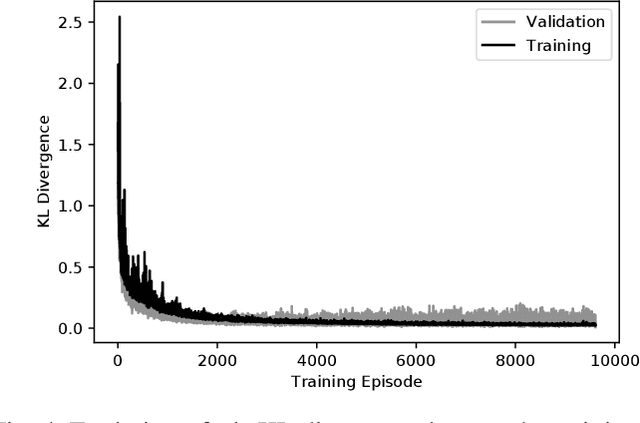

PointNetKL: Deep Inference for GICP Covariance Estimation in Bathymetric SLAM

Mar 24, 2020

Abstract:Registration methods for point clouds have become a key component of many SLAM systems on autonomous vehicles. However, an accurate estimate of the uncertainty of such registration is a key requirement for the consistent fusion of this kind of measurement in a SLAM filter. This estimate, which is normally given as a covariance in the transformation computed between point cloud reference frames, has been modelled following different approaches, among which the most accurate is considered to be the Monte Carlo method. However, a Monte Carlo approximation is cumbersome to use inside a time-critical application such as online SLAM. Efforts have been made to estimate this covariance via machine learning using carefully designed features to abstract the raw point clouds. However, the performance of this approach is sensitive to the features chosen. We argue that it is possible to learn the features along with the covariance by working with the raw data and thus we propose a new approach based on PointNet. In this work, we train this network using the KL divergence between the learned uncertainty distribution and one computed by the Monte Carlo method as the loss. We test the performance of the general model presented by applying it to our target use-case of SLAM with an autonomous underwater vehicle (AUV) restricted to the 2-dimensional registration of 3D bathymetric point clouds.
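
As a rough illustration of the loss described in this abstract, the sketch below evaluates the closed-form KL divergence between two zero-mean 2D Gaussians given their covariance matrices. Several things here are assumptions rather than details from the abstract: that both distributions share the same mean, that the covariance is 2x2, and the direction of the divergence (predicted relative to Monte Carlo). The function name `kl_gaussian_2d` is hypothetical.

```python
import math


def kl_gaussian_2d(cov_p, cov_q):
    """KL(P || Q) for zero-mean 2D Gaussians P = N(0, cov_p), Q = N(0, cov_q).

    Covariances are given as nested sequences [[a, b], [c, d]].
    Closed form: 0.5 * (trace(inv(cov_q) @ cov_p) - 2 + ln(det(cov_q) / det(cov_p))).
    """
    (a, b), (c, d) = cov_p
    (e, f), (g, h) = cov_q

    det_p = a * d - b * c
    det_q = e * h - f * g
    if det_p <= 0.0 or det_q <= 0.0:
        raise ValueError("covariance matrices must be positive definite")

    # inv(cov_q) = (1 / det_q) * [[h, -f], [-g, e]], so the trace of
    # inv(cov_q) @ cov_p can be written out explicitly.
    trace_term = (h * a - f * c - g * b + e * d) / det_q

    return 0.5 * (trace_term - 2.0 + math.log(det_q / det_p))
```

For example, `kl_gaussian_2d(predicted_cov, monte_carlo_cov)` is zero when the two covariances agree and grows as they diverge, which is the property that makes it usable as a training loss.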

Detection and Tracking of General Movable Objects in Large 3D Maps

Jan 30, 2018

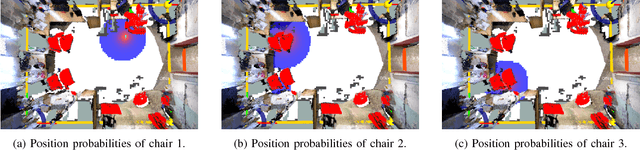

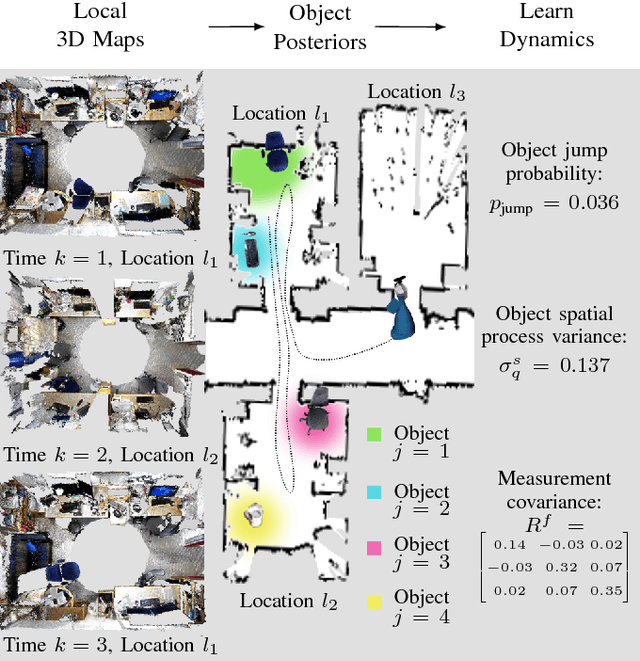

Abstract:This paper studies the problem of detection and tracking of general objects with long-term dynamics, observed by a mobile robot moving in a large environment. A key problem is that due to the environment scale, it can only observe a subset of the objects at any given time. Since some time passes between observations of objects in different places, the objects might be moved when the robot is not there. We propose a model for this movement in which the objects typically only move locally, but with some small probability they jump longer distances, through what we call global motion. For filtering, we decompose the posterior over local and global movements into two linked processes. The posterior over the global movements and measurement associations is sampled, while we track the local movement analytically using Kalman filters. This novel filter is evaluated on point cloud data gathered autonomously by a mobile robot over an extended period of time. We show that tracking jumping objects is feasible, and that the proposed probabilistic treatment outperforms previous methods when applied to real world data. The key to efficient probabilistic tracking in this scenario is focused sampling of the object posteriors.
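
The analytic tracking of local movement described in this abstract can be sketched with a single Kalman filter step. This is a simplified scalar illustration under assumed conditions (a random-walk motion model along one axis, with hypothetical parameter names), not the filter from the paper; the sampled global jumps and measurement associations are not shown.

```python
def kalman_step(mean, var, measurement, process_var, meas_var):
    """One predict-and-update step of a scalar Kalman filter.

    Assumption: the object position follows a random walk, so the prediction
    keeps the mean and inflates the variance by process_var.
    """
    # Predict: local motion only adds uncertainty.
    pred_mean = mean
    pred_var = var + process_var

    # Update: blend prediction and measurement according to their variances.
    gain = pred_var / (pred_var + meas_var)
    new_mean = pred_mean + gain * (measurement - pred_mean)
    new_var = (1.0 - gain) * pred_var
    return new_mean, new_var
```

When an object has not been observed for a long time, repeated prediction steps grow `pred_var`, so the next measurement dominates the updated estimate.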

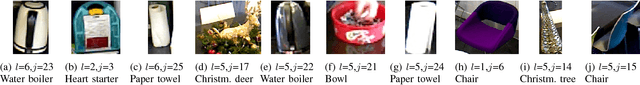

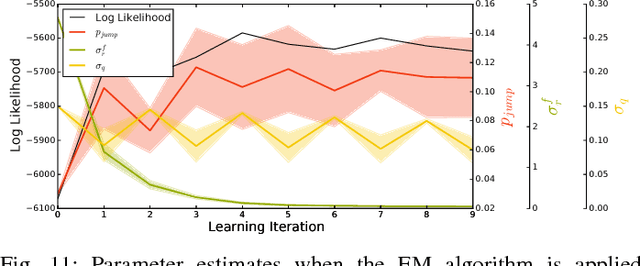

Multiple Object Detection, Tracking and Long-Term Dynamics Learning in Large 3D Maps

Jan 28, 2018

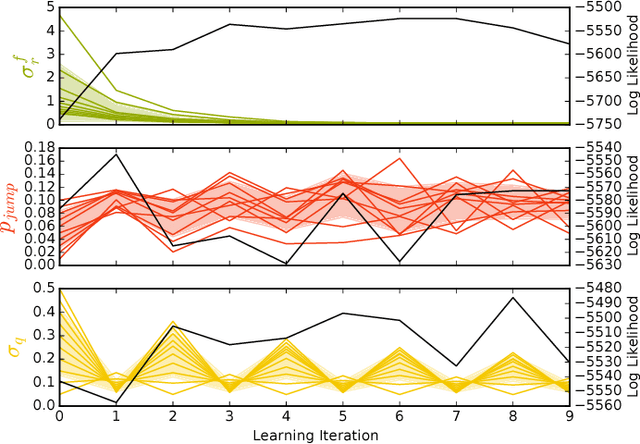

Abstract:In this work, we present a method for tracking and learning the dynamics of all objects in a large scale robot environment. A mobile robot patrols the environment and visits the different locations one by one. Movable objects are discovered by change detection, and tracked throughout the robot deployment. For tracking, we extend the Rao-Blackwellized particle filter of previous work with birth and death processes, enabling the method to handle an arbitrary number of objects. Target births and associations are sampled using Gibbs sampling. The parameters of the system are then learnt using the Expectation Maximization algorithm in an unsupervised fashion. The system therefore enables learning of the dynamics of one particular environment, and of its objects. The algorithm is evaluated on data collected autonomously by a mobile robot in an office environment during a real-world deployment. We show that the algorithm automatically identifies and tracks the moving objects within 3D maps and infers plausible dynamics models, significantly decreasing the modeling bias of our previous work. The proposed method represents an improvement over previous methods for environment dynamics learning as it allows for learning of fine grained processes.
