Yanfeng Sun

Contrastive Learning Meets Pseudo-label-assisted Mixup Augmentation: A Comprehensive Graph Representation Framework from Local to Global

Jan 30, 2025

Abstract: Graph Neural Networks (GNNs) have demonstrated remarkable effectiveness in various graph representation learning tasks. However, most existing GNNs focus primarily on capturing local information through explicit graph convolution, often neglecting global message-passing. This limitation hinders the establishment of a collaborative interaction between global and local information, which is crucial for comprehensively understanding graph data. To address these challenges, we propose a novel framework called Comprehensive Graph Representation Learning (ComGRL). ComGRL integrates local information into global information to derive powerful representations. It achieves this by implicitly smoothing local information through flexible graph contrastive learning, ensuring reliable representations for subsequent global exploration. ComGRL then transfers the locally derived representations to a multi-head self-attention module, enhancing their discriminative ability by uncovering diverse and rich global correlations. To further optimize local information dynamically under the self-supervision of pseudo-labels, ComGRL employs a triple sampling strategy to construct mixed node pairs and applies reliable Mixup augmentation across attributes and structure for local contrastive learning. This approach broadens the receptive field and facilitates coordination between local and global representation learning, enabling them to reinforce each other. Experimental results across six widely used graph datasets demonstrate that ComGRL achieves excellent performance in node classification tasks. The code is available at https://github.com/JinluWang1002/ComGRL.
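To make the phrase "Mixup augmentation across attributes and structure" concrete, here is a minimal sketch of plain Mixup applied to a pair of nodes. It assumes a dense feature matrix `x` and a dense adjacency matrix `adj`; the function name `mixup_nodes` and the fixed mixing weight `lam` are hypothetical, and the pseudo-label-guided triple sampling described in the abstract is not reproduced here.

```python
import numpy as np

def mixup_nodes(x, adj, i, j, lam):
    """Convexly combine the attributes and adjacency rows of nodes i and j.

    Generic Mixup only; how ComGRL picks (i, j) and lam is not modeled.
    """
    x_mix = lam * x[i] + (1.0 - lam) * x[j]        # mixed attribute vector
    a_mix = lam * adj[i] + (1.0 - lam) * adj[j]    # mixed neighborhood row
    return x_mix, a_mix

# Toy graph: a path 0 - 1 - 2 with two-dimensional node attributes.
x = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
adj = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]])
x_mix, a_mix = mixup_nodes(x, adj, 0, 2, lam=0.75)
```

Nodes 0 and 2 share the same single neighbor, so the mixed adjacency row is unchanged while the mixed attributes fall between the two originals.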

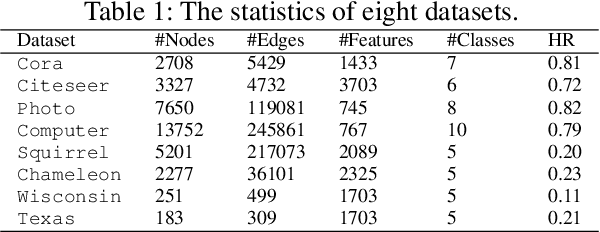

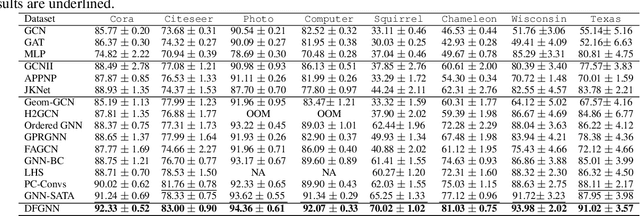

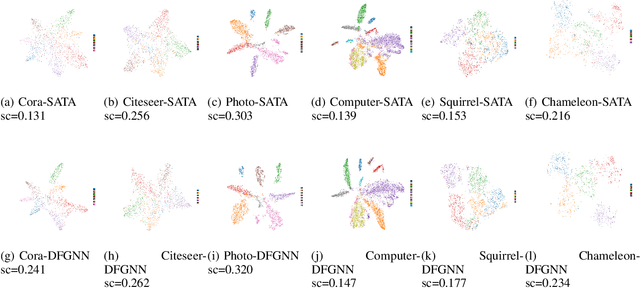

Dual-Frequency Filtering Self-aware Graph Neural Networks for Homophilic and Heterophilic Graphs

Nov 18, 2024

Abstract: Graph Neural Networks (GNNs) have excelled in handling graph-structured data, attracting significant research interest. However, two primary challenges have emerged: interference between topology and attributes distorting node representations, and the low-pass filtering nature of most GNNs leading to the oversight of valuable high-frequency information in graph signals. These issues are particularly pronounced in heterophilic graphs. To address these challenges, we propose Dual-Frequency Filtering Self-aware Graph Neural Networks (DFGNN). DFGNN integrates low-pass and high-pass filters to extract smooth and detailed topological features, using frequency-specific constraints to minimize noise and redundancy in the respective frequency bands. The model dynamically adjusts filtering ratios to accommodate both homophilic and heterophilic graphs. Furthermore, DFGNN mitigates interference by aligning topological and attribute representations through dynamic correspondences between their respective frequency bands, enhancing overall model performance and expressiveness. Extensive experiments conducted on benchmark datasets demonstrate that DFGNN outperforms state-of-the-art methods in classification performance, highlighting its effectiveness in handling both homophilic and heterophilic graphs.
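The contrast between low-pass and high-pass graph filtering can be illustrated with a small sketch. It assumes the common GCN-style normalized adjacency with self-loops as the low-pass operator and its complement as the high-pass operator; the function names and the fixed blending ratio `alpha` are hypothetical and are not taken from DFGNN, which learns its filtering ratios dynamically.

```python
import numpy as np

def normalized_adjacency(adj):
    """Symmetric normalization of A + I, as used in GCN-style propagation."""
    a_tilde = np.asarray(adj, dtype=float) + np.eye(len(adj))
    inv_sqrt_deg = a_tilde.sum(axis=1) ** -0.5
    return inv_sqrt_deg[:, None] * a_tilde * inv_sqrt_deg[None, :]

def dual_frequency_filter(adj, x, alpha):
    """Blend a low-pass and a high-pass view of the graph signal x."""
    a_hat = normalized_adjacency(adj)
    low = a_hat @ x          # low-pass: averages over the neighborhood
    high = x - a_hat @ x     # high-pass: keeps differences between neighbors
    return alpha * low + (1.0 - alpha) * high, low, high

# Two connected nodes: a constant signal is purely low-frequency,
# an alternating signal is purely high-frequency.
adj = np.array([[0, 1], [1, 0]])
smooth = np.array([[1.0], [1.0]])
rough = np.array([[1.0], [-1.0]])
_, low_s, high_s = dual_frequency_filter(adj, smooth, alpha=0.5)
_, low_r, high_r = dual_frequency_filter(adj, rough, alpha=0.5)
```

On this toy graph the low-pass branch passes the constant signal unchanged and erases the alternating one, while the high-pass branch does the opposite. This is why a purely low-pass model loses information when neighboring nodes tend to differ, as in heterophilic graphs.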

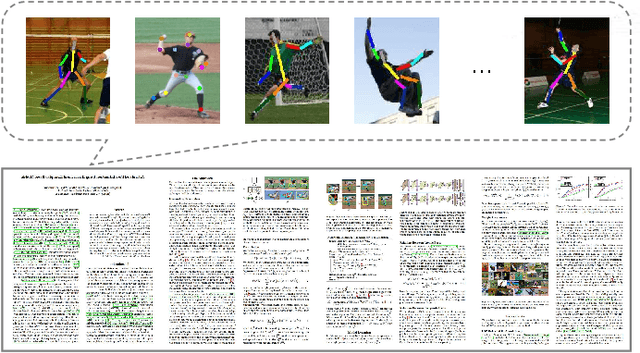

Hierarchical Multi-modal Transformer for Cross-modal Long Document Classification

Jul 14, 2024

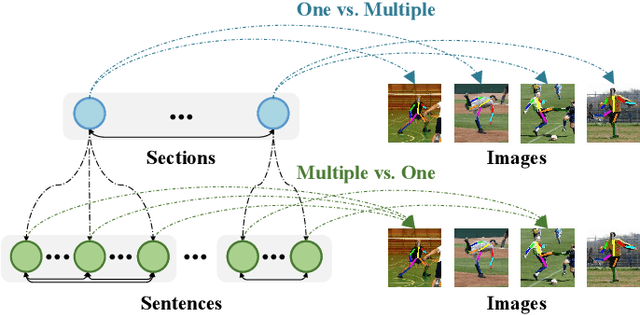

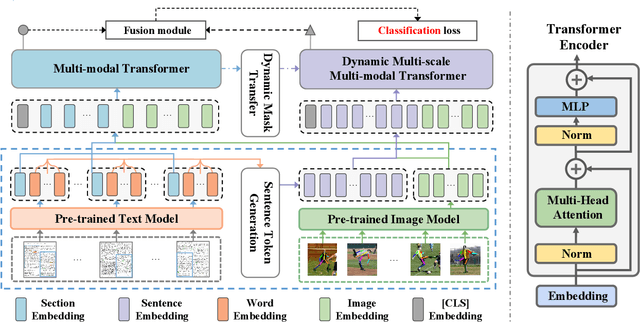

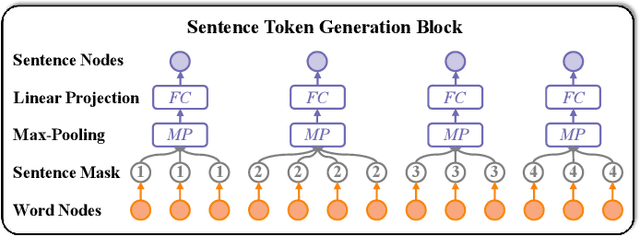

Abstract: Long Document Classification (LDC) has gained significant attention recently. However, multi-modal data in long documents, such as texts and images, are not being effectively utilized. Prior studies in this area have attempted to integrate texts and images in document-related tasks, but they have only focused on short text sequences and images of pages. How to classify long documents with hierarchically structured texts and embedded images is a new problem and faces multi-modal representation difficulties. In this paper, we propose a novel approach called Hierarchical Multi-modal Transformer (HMT) for cross-modal long document classification. The HMT conducts multi-modal feature interaction and fusion between images and texts in a hierarchical manner. Our approach uses a multi-modal transformer and a dynamic multi-scale multi-modal transformer to model the complex relationships between image features and the section and sentence features. Furthermore, we introduce a new interaction strategy called the dynamic mask transfer module to integrate these two transformers by propagating features between them. To validate our approach, we conduct cross-modal LDC experiments on two newly created and two publicly available multi-modal long document datasets, and the results show that the proposed HMT outperforms state-of-the-art single-modality and multi-modality methods.

DGNN: Decoupled Graph Neural Networks with Structural Consistency between Attribute and Graph Embedding Representations

Jan 28, 2024

Abstract: Graph neural networks (GNNs) demonstrate a robust capability for representation learning on graphs with complex structures, showcasing superior performance in various applications. The majority of existing GNNs employ a graph convolution operation by using both attribute and structure information through coupled learning. In essence, GNNs, from an optimization perspective, seek to learn a consensus and compromise embedding representation that balances attribute and graph information, selectively exploring and retaining valid information. To obtain a more comprehensive embedding representation of nodes, a novel GNN framework, dubbed Decoupled Graph Neural Networks (DGNN), is introduced. DGNN explores distinctive embedding representations from the attribute and graph spaces by decoupled terms. Considering that the semantic graph, constructed from the attribute feature space, contains different node connection information and enhances the topological graph, both topological and semantic graphs are combined for embedding representation learning. Further, structural consistency between the attribute embedding and the graph embeddings is promoted to effectively remove redundant information and establish a soft connection. This involves promoting factor sharing for adjacency reconstruction matrices, facilitating the exploration of a consensus and high-level correlation. Finally, a more powerful and complete representation is achieved through the concatenation of these embeddings. Experimental results on several graph benchmark datasets verify its superiority in node classification tasks.

Adversarial Privacy-preserving Filter

Aug 04, 2020

Abstract: While widely adopted in practical applications, face recognition has been critically discussed regarding the malicious use of face images and the potential privacy problems, e.g., deceiving payment systems and causing personal sabotage. Online photo sharing services unintentionally act as the main repository for malicious crawlers and face recognition applications. This work aims to develop a privacy-preserving solution, called Adversarial Privacy-preserving Filter (APF), to protect online shared face images from being maliciously used. We propose an end-cloud collaborated adversarial attack solution to satisfy the requirements of privacy, utility and nonaccessibility. Specifically, the solution consists of three modules: (1) image-specific gradient generation, to extract the image-specific gradient on the user end with a compressed probe model; (2) adversarial gradient transfer, to fine-tune the image-specific gradient in the server cloud; and (3) universal adversarial perturbation enhancement, to append an image-independent perturbation to derive the final adversarial noise. Extensive experiments on three datasets validate the effectiveness and efficiency of the proposed solution. A prototype application is also released for further evaluation. We hope the end-cloud collaborated attack framework could shed light on addressing privacy preservation in online multimedia sharing from the user side.

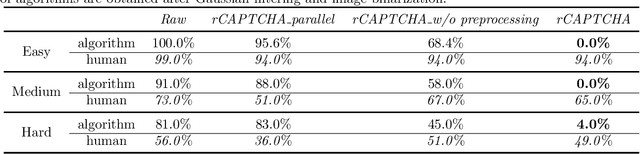

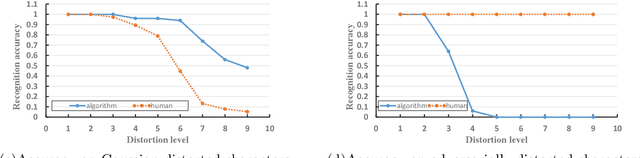

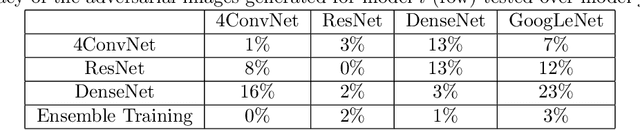

Blessing in Disguise: Designing Robust Turing Test by Employing Algorithm Unrobustness

Apr 22, 2019

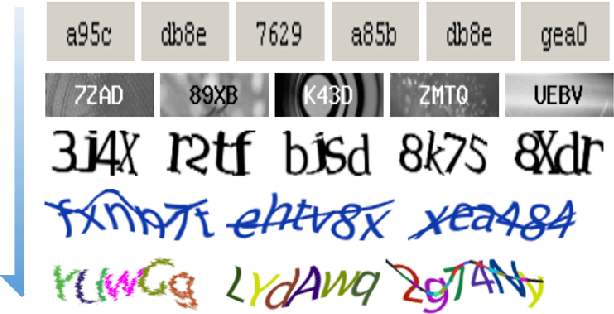

Abstract: The Turing test was originally proposed to examine whether a machine's behavior is indistinguishable from a human's. The most popular and practical Turing test is CAPTCHA, which discriminates algorithms from humans by posing recognition-like questions. The recent development of deep learning has significantly advanced the capability of algorithms in solving CAPTCHA questions, forcing CAPTCHA designers to increase question complexity. Instead of designing questions that are difficult for both algorithms and humans, this study attempts to exploit the limitations of algorithms to design robust CAPTCHA questions that are easily solvable by humans. Specifically, our data analysis observes that humans and algorithms demonstrate different vulnerability to visual distortions: adversarial perturbation is significantly disruptive to algorithms yet friendly to humans. We are motivated to employ adversarially perturbed images for robust CAPTCHA design in the context of character-based questions. Three modules of multi-target attack, ensemble adversarial training, and image preprocessing differentiable approximation are proposed to address the characteristics of character-based CAPTCHA cracking. Qualitative and quantitative experimental results demonstrate the effectiveness of the proposed solution. We hope this study can lead to discussions around adversarial attack/defense in CAPTCHA design and also inspire future attempts at employing algorithm limitations for practical usage.

Attention, Please! Adversarial Defense via Attention Rectification and Preservation

Nov 24, 2018

Abstract: This study provides a new understanding of the adversarial attack problem by examining the correlation between adversarial attack and visual attention change. In particular, we observed that: (1) images with incomplete attention regions are more vulnerable to adversarial attacks; and (2) successful adversarial attacks lead to deviated and scattered attention maps. Accordingly, an attention-based adversarial defense framework is designed to simultaneously rectify the attention map for prediction and preserve the attention area between adversarial and original images. The problem of adding iteratively attacked samples is also discussed in the context of visual attention change. We hope the attention-related data analysis and defense solution in this study will shed some light on the mechanism behind the adversarial attack and also facilitate future adversarial defense/attack model design.

Vectorial Dimension Reduction for Tensors Based on Bayesian Inference

Jul 03, 2017

Abstract: Dimensionality reduction for high-order tensors is a challenging problem. In conventional approaches, higher-order tensors are either "vectorized" or reduced via Tucker decomposition to obtain lower-order tensors. This destroys the inherent high-order structures or results in undesired tensors, respectively. This paper introduces a probabilistic vectorial dimensionality reduction model for tensorial data. The model represents a tensor as a linear combination of same-order basis tensors, and thus offers a mechanism to directly reduce a tensor to a vector. Under this expression, the projection base of the model is based on the tensor CandeComp/PARAFAC (CP) decomposition, and the number of free parameters in the model grows only linearly with the number of modes rather than exponentially. Bayesian inference is established via the variational EM approach. A criterion for setting the parameters (the factor number of the CP decomposition and the number of extracted features) is given empirically. The model outperforms several existing PCA-based methods and CP decomposition on several publicly available databases in terms of classification and clustering accuracy.
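The linear-versus-exponential claim can be checked with simple arithmetic. The sketch below counts only the entries of the CP factor matrices for one rank-R basis tensor and compares them with a dense basis tensor of the same shape; the function names and the example shape are hypothetical, and any further parameters of the paper's probabilistic model (noise terms, priors) are not counted.

```python
from math import prod

def cp_parameter_count(dims, rank):
    """Entries in the CP factor matrices: one (dim x rank) matrix per mode."""
    return rank * sum(dims)

def dense_parameter_count(dims):
    """Entries in an unstructured tensor of the same shape."""
    return prod(dims)

# Hypothetical 4-mode tensor shape, e.g. small image patches over time.
dims = (32, 32, 3, 8)
cp_count = cp_parameter_count(dims, rank=5)
dense_count = dense_parameter_count(dims)
```

Here the CP form needs 5 × (32 + 32 + 3 + 8) = 375 values against 32 × 32 × 3 × 8 = 24576 for the dense tensor. Adding a mode adds one term to the sum in the CP count but multiplies the dense count.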

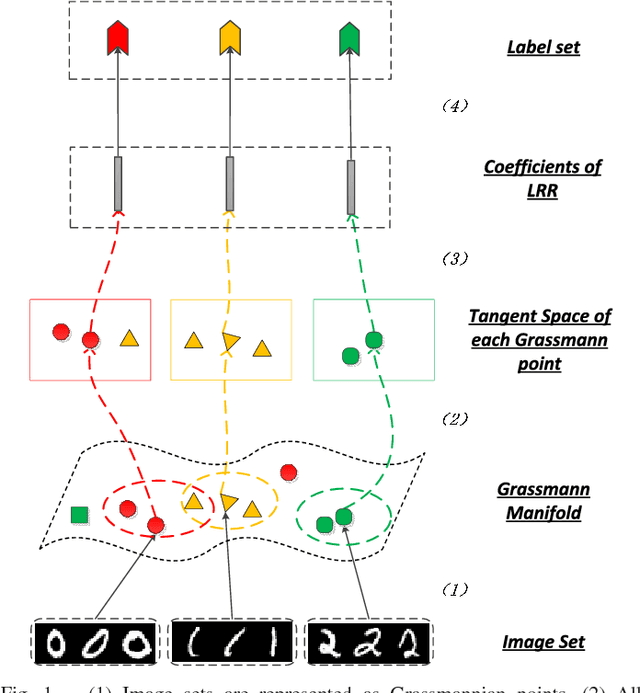

Localized LRR on Grassmann Manifolds: An Extrinsic View

May 17, 2017

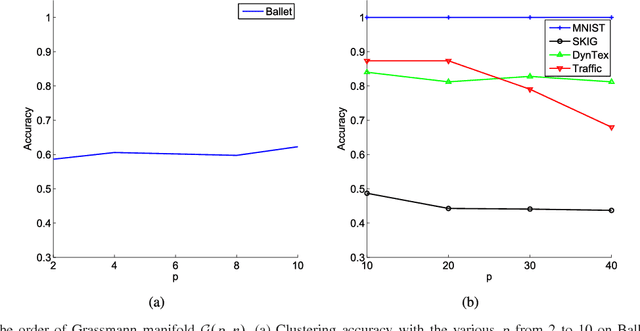

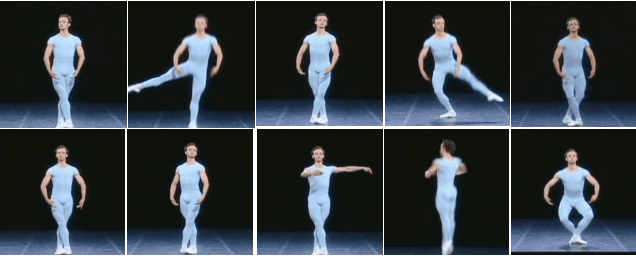

Abstract: Subspace data representation has recently become a common practice in many computer vision tasks. It demands generalizing classical machine learning algorithms for subspace data. Low-Rank Representation (LRR) is one of the most successful models for clustering vectorial data according to their subspace structures. This paper explores the possibility of extending LRR for subspace data on Grassmann manifolds. Rather than directly embedding the Grassmann manifolds into the symmetric matrix space, an extrinsic view is taken to build the LRR self-representation in the local area of the tangent space at each Grassmannian point, resulting in a localized LRR method on Grassmann manifolds. A novel algorithm for solving the proposed model is investigated and implemented. The performance of the new clustering algorithm is assessed through experiments on several real-world datasets, including MNIST handwritten digits, ballet video clips, SKIG action clips, the DynTex++ dataset and highway traffic video clips. The experimental results show that the new method outperforms a number of state-of-the-art clustering methods.

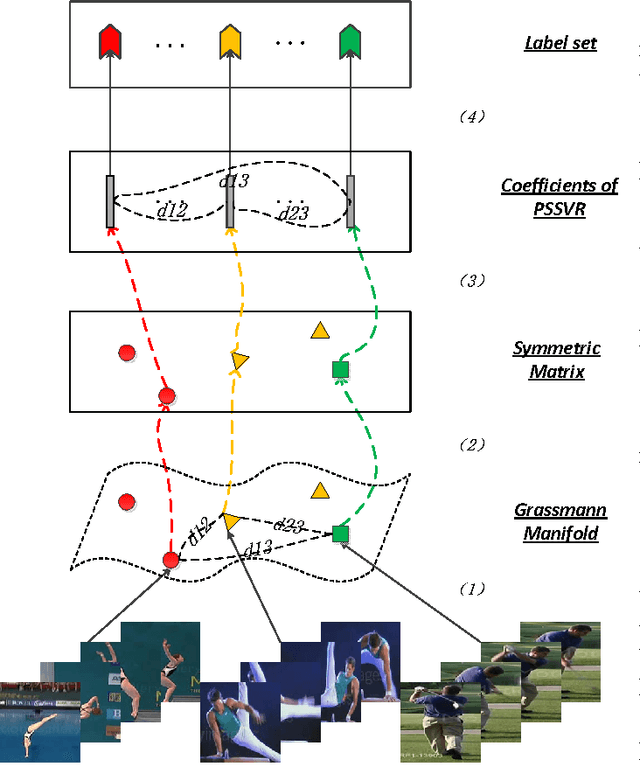

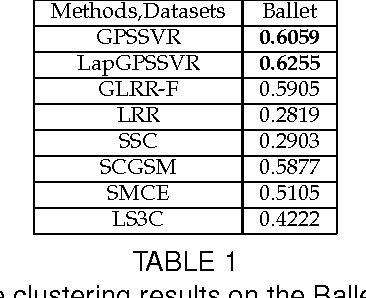

Partial Sum Minimization of Singular Values Representation on Grassmann Manifolds

Apr 28, 2017

Abstract: As a significant subspace clustering method, low rank representation (LRR) has attracted great attention in recent years. To further improve the performance of LRR and extend its applications, there are several issues to be resolved. The nuclear norm in LRR does not sufficiently use the prior knowledge of the rank, which is known in many practical problems. LRR is designed for vectorial data from linear spaces and is thus not suitable for high-dimensional data with intrinsic non-linear manifold structure. This paper proposes an extended LRR model for manifold-valued Grassmann data which incorporates prior knowledge by minimizing the partial sum of singular values instead of the nuclear norm, namely Partial Sum minimization of Singular Values Representation (GPSSVR). The new model not only enforces the global low-rank structure of the data, but also retains important information by minimizing only the smaller singular values. To further maintain the local structures among Grassmann points, we also integrate the Laplacian penalty with GPSSVR. An effective algorithm is proposed to solve the optimization problem based on the GPSSVR model. The proposed model and algorithms are assessed on some widely used human action video datasets and a real scenery dataset. The experimental results show that the proposed methods clearly outperform other state-of-the-art methods.
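The difference between the nuclear norm and the partial sum of singular values is easy to see numerically. The following sketch assumes the target rank `rank` is known in advance and simply skips the largest `rank` singular values; the function name is hypothetical, and the Grassmann-manifold embedding and the optimization algorithm of GPSSVR are not modeled.

```python
import numpy as np

def partial_sum_singular_values(mat, rank):
    """Sum of all singular values except the `rank` largest ones.

    With rank=0 this is the ordinary nuclear norm.
    """
    singular_values = np.linalg.svd(mat, compute_uv=False)  # descending order
    return singular_values[rank:].sum()

mat = np.diag([3.0, 2.0, 1.0])
nuclear_norm = partial_sum_singular_values(mat, rank=0)
partial_sum = partial_sum_singular_values(mat, rank=1)
```

For this diagonal matrix the nuclear norm is 3 + 2 + 1 = 6, while the partial sum with a known rank of 1 is 2 + 1 = 3. Minimizing the latter leaves the dominant singular value unpenalized, which matches the abstract's point about retaining important information.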
