Xun Gong

Bagpiper: Solving Open-Ended Audio Tasks via Rich Captions

Feb 05, 2026

Abstract:Current audio foundation models typically rely on rigid, task-specific supervision, addressing isolated factors of audio rather than the whole. In contrast, human intelligence processes audio holistically, seamlessly bridging physical signals with abstract cognitive concepts to execute complex tasks. Grounded in this philosophy, we introduce Bagpiper, an 8B audio foundation model that interprets physical audio via rich captions, i.e., comprehensive natural language descriptions that encapsulate the critical cognitive concepts inherent in the signal (e.g., transcription, audio events). By pre-training on a massive corpus of 600B tokens, the model establishes a robust bidirectional mapping between raw audio and this high-level conceptual space. During fine-tuning, Bagpiper adopts a caption-then-process workflow, simulating an intermediate cognitive reasoning step to solve diverse tasks without task-specific priors. Experimentally, Bagpiper outperforms Qwen-2.5-Omni on MMAU and AIRBench for audio understanding and surpasses CosyVoice3 and TangoFlux in generation quality, and it can synthesize arbitrary compositions of speech, music, and sound effects. To the best of our knowledge, Bagpiper is among the first works to achieve unified understanding and generation for general audio. Model, data, and code are available at Bagpiper Home Page.

From Failures to Fixes: LLM-Driven Scenario Repair for Self-Evolving Autonomous Driving

May 28, 2025

Abstract:Ensuring robust and generalizable autonomous driving requires not only broad scenario coverage but also efficient repair of failure cases, particularly those related to challenging and safety-critical scenarios. However, existing scenario generation and selection methods often lack adaptivity and semantic relevance, limiting their impact on performance improvement. In this paper, we propose SERA, an LLM-powered framework that enables autonomous driving systems to self-evolve by repairing failure cases through targeted scenario recommendation. By analyzing performance logs, SERA identifies failure patterns and dynamically retrieves semantically aligned scenarios from a structured bank. An LLM-based reflection mechanism further refines these recommendations to maximize relevance and diversity. The selected scenarios are used for few-shot fine-tuning, enabling targeted adaptation with minimal data. Experiments on the benchmark show that SERA consistently improves key metrics across multiple autonomous driving baselines, demonstrating its effectiveness and generalizability under safety-critical conditions.

BR-ASR: Efficient and Scalable Bias Retrieval Framework for Contextual Biasing ASR in Speech LLM

May 25, 2025

Abstract:While speech large language models (SpeechLLMs) have advanced standard automatic speech recognition (ASR), contextual biasing for named entities and rare words remains challenging, especially at scale. To address this, we propose BR-ASR: a Bias Retrieval framework for large-scale contextual biasing (up to 200k entries) via two innovations: (1) speech-and-bias contrastive learning to retrieve semantically relevant candidates; (2) dynamic curriculum learning that mitigates homophone confusion, which degrades final performance. It is a general framework that allows seamless integration of the retrieved candidates into diverse ASR systems without fine-tuning. Experiments on LibriSpeech test-clean/-other achieve state-of-the-art (SOTA) biased word error rates (B-WER) of 2.8%/7.1% with 2000 bias words, delivering 45% relative improvement over prior methods. BR-ASR also demonstrates high scalability: when the bias list is expanded to 200k entries, where traditional methods generally fail, it incurs only 0.3 / 2.9% absolute WER / B-WER degradation with a 99.99% pruning rate and only 20ms latency per query on test-other.

RepFace: Refining Closed-Set Noise with Progressive Label Correction for Face Recognition

Dec 16, 2024

Abstract:Face recognition has made remarkable strides, driven by the expanding scale of datasets and advances in backbones and discriminative losses. However, face recognition performance is heavily affected by label noise, especially closed-set noise. While numerous studies have focused on handling label noise, addressing closed-set noise still poses challenges. This paper attributes the challenge to two issues: training is not robust to noise in its early stages, and low-confidence samples, which are often misclassified as closed-set noise in later training phases, need an appropriate learning strategy. To address these issues, we propose a new framework that stabilizes training at early stages and splits the samples into clean, ambiguous and noisy groups, each handled with a separate training strategy. Initially, we employ generated auxiliary closed-set noisy samples to enable the model to identify noisy data at the early stages of training. Subsequently, we split samples into clean, ambiguous and noisy groups according to their similarity to the positive and nearest negative centers. Then we perform label fusion for ambiguous samples by incorporating accumulated model predictions. Finally, we apply label smoothing within the closed set, adjusting the label to a point between the nearest negative class and the initially assigned label. Extensive experiments validate the effectiveness of our method on mainstream face datasets, achieving state-of-the-art results. The code will be released upon acceptance.

CLIP-based Camera-Agnostic Feature Learning for Intra-camera Person Re-Identification

Sep 29, 2024

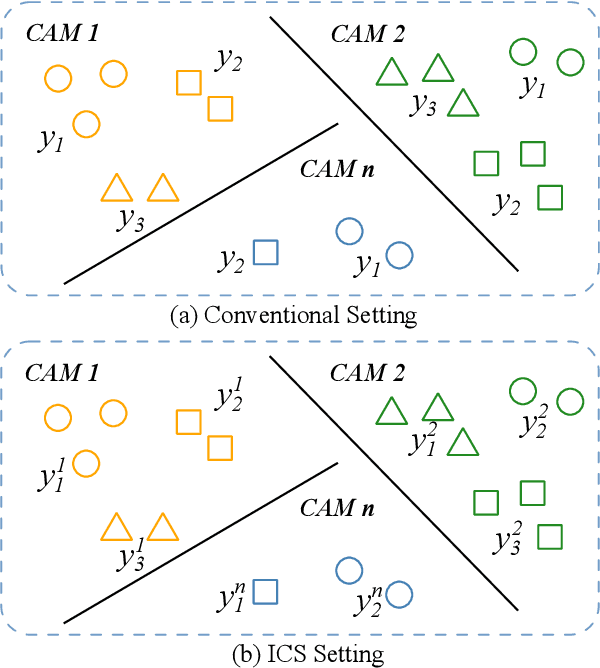

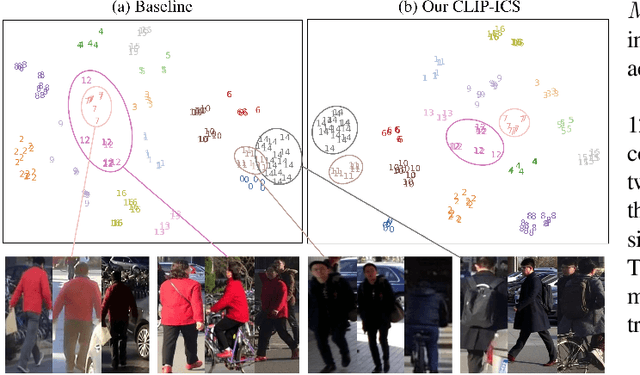

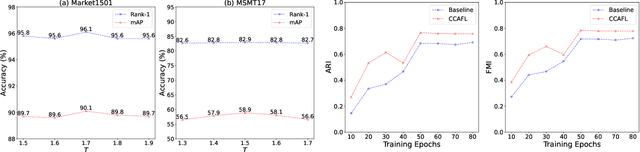

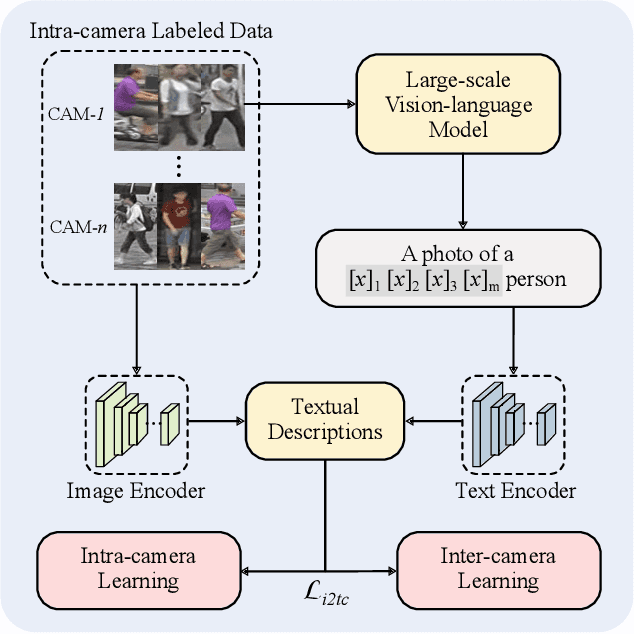

Abstract:The Contrastive Language-Image Pre-Training (CLIP) model excels in traditional person re-identification (ReID) tasks due to its inherent advantage in generating textual descriptions for pedestrian images. However, applying CLIP directly to intra-camera supervised person re-identification (ICS ReID) presents challenges. ICS ReID requires independent identity labeling within each camera, without associations across cameras. This limits the effectiveness of text-based enhancements. To address this, we propose a novel framework called CLIP-based Camera-Agnostic Feature Learning (CCAFL) for ICS ReID. Accordingly, two custom modules are designed to guide the model to actively learn camera-agnostic pedestrian features: Intra-Camera Discriminative Learning (ICDL) and Inter-Camera Adversarial Learning (ICAL). Specifically, we first establish learnable textual prompts for intra-camera pedestrian images to obtain crucial semantic supervision signals for subsequent intra- and inter-camera learning. Then, we design ICDL to increase inter-class variation by considering the hard positive and hard negative samples within each camera, thereby learning finer-grained intra-camera pedestrian features. Additionally, we propose ICAL to reduce inter-camera pedestrian feature discrepancies by penalizing the model's ability to predict the camera from which a pedestrian image originates, thus enhancing the model's capability to recognize pedestrians from different viewpoints. Extensive experiments on popular ReID datasets demonstrate the effectiveness of our approach. In particular, on the challenging MSMT17 dataset, we achieve 58.9% mAP, surpassing state-of-the-art methods by 7.6%. Code will be available at: https://github.com/Trangle12/CCAFL.

LCE: A Framework for Explainability of DNNs for Ultrasound Image Based on Concept Discovery

Aug 19, 2024

Abstract:Explaining the decisions of Deep Neural Networks (DNNs) for medical images has become increasingly important. Existing attribution methods have difficulty explaining the meaning of pixels while existing concept-based methods are limited by additional annotations or specific model structures that are difficult to apply to ultrasound images. In this paper, we propose the Lesion Concept Explainer (LCE) framework, which combines attribution methods with concept-based methods. We introduce the Segment Anything Model (SAM), fine-tuned on a large number of medical images, for concept discovery to enable a meaningful explanation of ultrasound image DNNs. The proposed framework is evaluated in terms of both faithfulness and understandability. We point out deficiencies in the popular faithfulness evaluation metrics and propose a new evaluation metric. Our evaluation of public and private breast ultrasound datasets (BUSI and FG-US-B) shows that LCE performs well compared to commonly-used explainability methods. Finally, we also validate that LCE can consistently provide reliable explanations for more meaningful fine-grained diagnostic tasks in breast ultrasound.

HcNet: Image Modeling with Heat Conduction Equation

Aug 13, 2024

Abstract:Foundation models, such as CNNs and ViTs, have powered the development of image modeling. However, general guidance to model architecture design is still missing. The design of many modern model architectures, such as residual structures, multiplicative gating signal, and feed-forward networks, can be interpreted in terms of the heat conduction equation. This finding inspired us to model images by the heat conduction equation, where the essential idea is to conceptualize image features as temperatures and model their information interaction as the diffusion of thermal energy. We can take advantage of the rich knowledge in the heat conduction equation to guide us in designing new and more interpretable models. As an example, we propose Heat Conduction Layer and Refine Approximation Layer inspired by solving the heat conduction equation using Finite Difference Method and Fourier series, respectively. This paper does not aim to present a state-of-the-art model; instead, it seeks to integrate the overall architectural design of the model into the heat conduction theory framework. Nevertheless, our Heat Conduction Network (HcNet) still shows competitive performance. Code available at https://github.com/ZheminZhang1/HcNet.
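
As a rough illustration of the finite-difference view mentioned in the HcNet abstract, the sketch below applies one explicit finite-difference step of the 2D heat equation to a small grid of "temperatures". It is a textbook discretization, not the paper's Heat Conduction Layer; the function name `heat_step`, the coefficient `alpha`, and the zero-flux border handling are assumptions made for this example.

```python
def heat_step(u, alpha=0.2):
    """One explicit finite-difference step of the 2D heat equation.

    u is a list of rows of floats (the "temperatures"). Border cells reuse
    their own value for any missing neighbour (zero-flux boundary).
    alpha stands for k * dt / dx**2 and should be <= 0.25 for this
    explicit scheme to remain stable. (All names here are hypothetical.)
    """
    h, w = len(u), len(u[0])
    out = [row[:] for row in u]  # copy so the input grid is not modified
    for i in range(h):
        for j in range(w):
            up = u[max(i - 1, 0)][j]
            down = u[min(i + 1, h - 1)][j]
            left = u[i][max(j - 1, 0)]
            right = u[i][min(j + 1, w - 1)]
            # Five-point stencil approximating the Laplacian at (i, j).
            laplacian = up + down + left + right - 4.0 * u[i][j]
            # Heat flows from hot cells to cooler neighbours.
            out[i][j] = u[i][j] + alpha * laplacian
    return out


# Usage: a single hot cell in the centre spreads to its four neighbours.
grid = [[0.0, 0.0, 0.0],
        [0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0]]
next_grid = heat_step(grid)
```

Each step has the form `u + alpha * laplacian(u)`, so it reads like a residual update, which is the kind of correspondence the abstract alludes to.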

Tri-VQA: Triangular Reasoning Medical Visual Question Answering for Multi-Attribute Analysis

Jun 21, 2024

Abstract:Medical Visual Question Answering (Med-VQA) is a challenging research topic with advantages including patient engagement and clinical expert involvement for second opinions. However, existing Med-VQA methods based on joint embedding fail to explain whether their provided results are based on correct reasoning or coincidental answers, which undermines the credibility of VQA answers. In this paper, we investigate the construction of a more cohesive and stable Med-VQA structure. Motivated by causal effect, we propose a novel Triangular Reasoning VQA (Tri-VQA) framework, which constructs reverse causal questions from the perspective of "Why this answer?" to elucidate the source of the answer and stimulate more reasonable forward reasoning processes. We evaluate our method on the Endoscopic Ultrasound (EUS) multi-attribute annotated dataset from five centers, and test it on medical VQA datasets. Experimental results demonstrate the superiority of our approach over existing methods. Our code and pre-trained models are available at https://anonymous.4open.science/r/Tri_VQA.

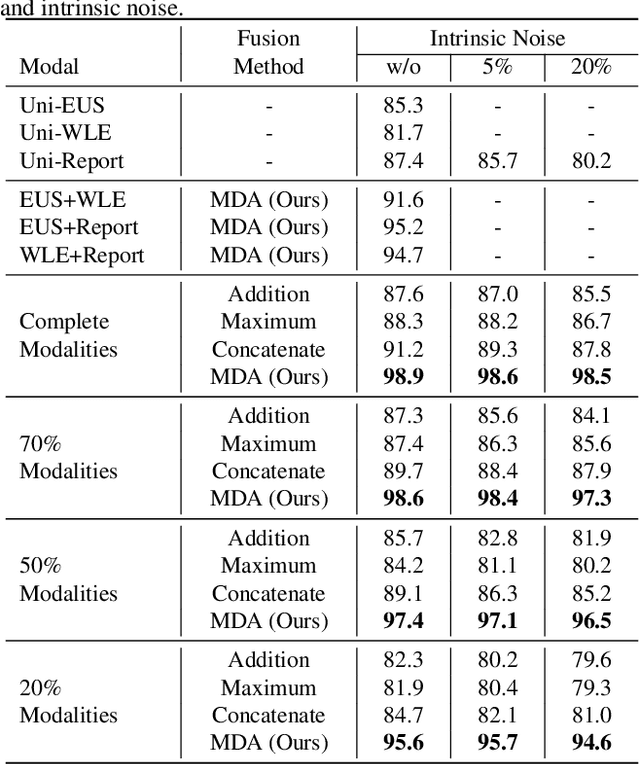

MDA: An Interpretable Multi-Modal Fusion with Missing Modalities and Intrinsic Noise

Jun 15, 2024

Abstract:Multi-modal fusion is crucial in medical data research, enabling a comprehensive understanding of diseases and improving diagnostic performance by combining diverse modalities. However, multi-modal fusion faces challenges, including capturing interactions between modalities, addressing missing modalities, handling erroneous modal information, and ensuring interpretability. Many existing studies design separate solutions for these problems, often overlooking the commonalities among them. This paper proposes a novel multi-modal fusion framework that adaptively adjusts the weight of each modality by introducing Modal-Domain Attention (MDA). It aims to facilitate the fusion of multi-modal information while allowing for missing modalities or intrinsic noise, thereby enhancing the representation of multi-modal data. We provide visualizations of accuracy changes and MDA weights by observing the process of modal fusion, offering a comprehensive analysis of its interpretability. In extensive experiments on various gastrointestinal disease benchmarks, the proposed MDA maintains high accuracy even in the presence of missing modalities and intrinsic noise. Notably, the visualization of MDA is highly consistent with the conclusions of existing clinical studies on the dependence of different diseases on various modalities. Code and dataset will be made available.

Advanced Long-Content Speech Recognition With Factorized Neural Transducer

Mar 20, 2024

Abstract:In this paper, we propose two novel approaches, which integrate long-content information into the factorized neural transducer (FNT) based architecture in both non-streaming (referred to as LongFNT) and streaming (referred to as SLongFNT) scenarios. We first investigate whether long-content transcriptions can improve the vanilla conformer transducer (C-T) models. Our experiments indicate that the vanilla C-T models do not exhibit improved performance when utilizing long-content transcriptions, possibly due to the predictor network of C-T models not functioning as a pure language model. Instead, FNT shows its potential in utilizing long-content information, where we propose the LongFNT model and explore the impact of long-content information in both text (LongFNT-Text) and speech (LongFNT-Speech). The proposed LongFNT-Text and LongFNT-Speech models further complement each other to achieve better performance, with transcription history proving more valuable to the model. The effectiveness of our LongFNT approach is evaluated on LibriSpeech and GigaSpeech corpora, and obtains relative 19% and 12% word error rate reduction, respectively. Furthermore, we extend the LongFNT model to the streaming scenario, which is named SLongFNT, consisting of SLongFNT-Text and SLongFNT-Speech approaches to utilize long-content text and speech information. Experiments show that the proposed SLongFNT model achieves relative 26% and 17% WER reduction on LibriSpeech and GigaSpeech respectively while keeping a good latency, compared to the FNT baseline. Overall, our proposed LongFNT and SLongFNT highlight the significance of considering long-content speech and transcription knowledge for improving both non-streaming and streaming speech recognition systems.

* Accepted by TASLP 2024
