Xingyu Ji

TARGET: Benchmarking Table Retrieval for Generative Tasks

May 14, 2025

Abstract:The data landscape is rich with structured data, often of high value to organizations, driving important applications in data analysis and machine learning. Recent progress in representation learning and generative models for such data has led to the development of natural language interfaces to structured data, including those leveraging text-to-SQL. Contextualizing interactions, either through conversational interfaces or agentic components, in structured data through retrieval-augmented generation can provide substantial benefits in the form of freshness, accuracy, and comprehensiveness of answers. The key question is: how do we retrieve the right table(s) for the analytical query or task at hand? To this end, we introduce TARGET: a benchmark for evaluating TAble Retrieval for GEnerative Tasks. With TARGET we analyze the retrieval performance of different retrievers in isolation, as well as their impact on downstream tasks. We find that dense embedding-based retrievers far outperform a BM25 baseline, which is less effective here than it is for retrieval over unstructured text. We also surface the sensitivity of retrievers to various metadata (e.g., missing table titles), and demonstrate a stark variation of retrieval performance across datasets and tasks. TARGET is available at https://target-benchmark.github.io.
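As a rough illustration of what evaluating a retriever "in isolation" can look like, the sketch below computes recall@k over ranked table lists. The abstract does not say which metrics TARGET reports, so recall@k is an assumption here, and the function name, query ids, and table ids are all hypothetical.

```python
from typing import Dict, List, Set


def recall_at_k(retrieved: Dict[str, List[str]],
                gold: Dict[str, Set[str]],
                k: int) -> float:
    """Average fraction of gold tables found in the top-k retrieved tables."""
    scores = []
    for query_id, gold_tables in gold.items():
        if not gold_tables:
            continue  # skip queries without annotated gold tables
        top_k = set(retrieved.get(query_id, [])[:k])
        scores.append(len(top_k & gold_tables) / len(gold_tables))
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical toy data: query id -> ranked table ids, and query id -> gold table ids.
retrieved = {"q1": ["sales", "users", "orders"], "q2": ["logs", "users"]}
gold = {"q1": {"orders"}, "q2": {"users", "events"}}

print(recall_at_k(retrieved, gold, k=2))
print(recall_at_k(retrieved, gold, k=3))
```

Averaging per query rather than pooling all tables keeps queries with many gold tables from dominating the score.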

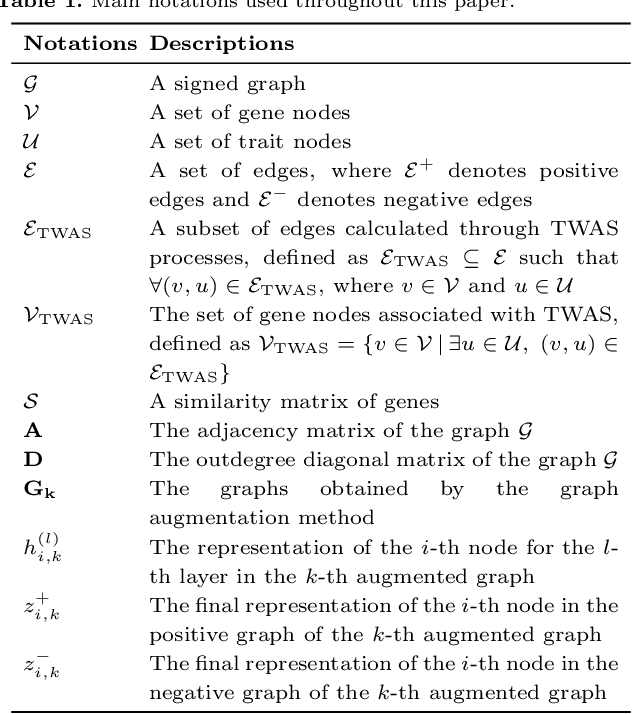

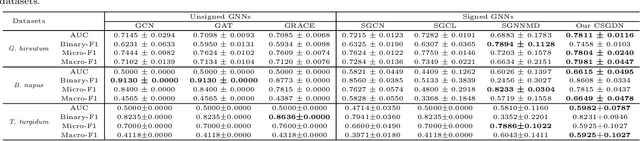

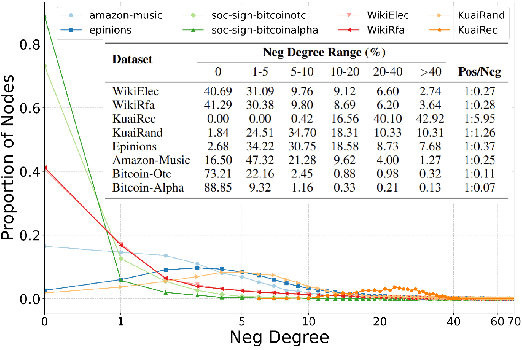

CSGDN: Contrastive Signed Graph Diffusion Network for Predicting Crop Gene-Trait Associations

Oct 10, 2024

Abstract:Predicting positive and negative associations between genes and traits helps researchers study how crops perform complex physiological functions. The transcription and regulation activity of specific genes is adjusted across different cell types, developmental stages, and physiological states to meet the needs of the organism. Determining gene-trait associations can reveal the mechanism of trait formation and benefit the improvement of crop yield and quality. Two problems arise in obtaining the positive/negative associations between genes and traits: 1) high-throughput DNA/RNA sequencing and trait data collection are expensive and time-consuming due to the need to process large sample sizes; 2) experiments introduce both random and systematic errors, and calculations or predictions using software or models may introduce additional noise. To address these two issues, we propose a Contrastive Signed Graph Diffusion Network, CSGDN, to learn robust node representations with fewer training samples and achieve higher link prediction accuracy. CSGDN employs a signed graph diffusion method to uncover the underlying regulatory associations between genes and traits. Then, stochastic perturbation strategies are used to create two views for both the original and diffusive graphs. Finally, a multi-view contrastive learning loss is designed to unify the node representations learned from the two views to resist interference and reduce noise. We conduct experiments to validate the performance of CSGDN on three crop datasets: Gossypium hirsutum, Brassica napus, and Triticum turgidum. The results demonstrate that the proposed model outperforms state-of-the-art methods by up to 9.28% AUC for link sign prediction on the G. hirsutum dataset.

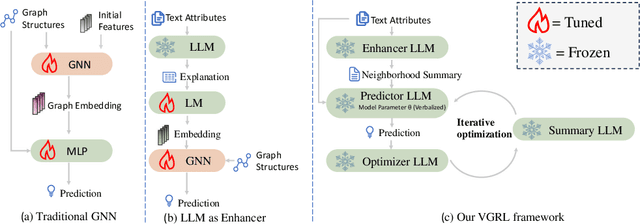

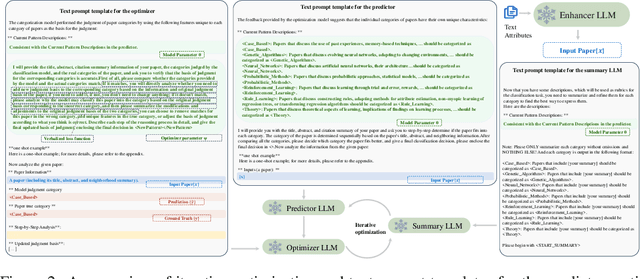

Verbalized Graph Representation Learning: A Fully Interpretable Graph Model Based on Large Language Models Throughout the Entire Process

Oct 02, 2024

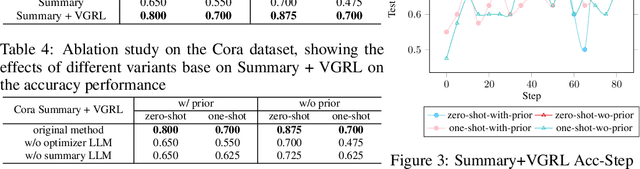

Abstract:Representation learning on text-attributed graphs (TAGs) has attracted significant interest due to its wide-ranging real-world applications, particularly through Graph Neural Networks (GNNs). Traditional GNN methods focus on encoding the structural information of graphs, often using shallow text embeddings for node or edge attributes. This limits the model's ability to understand the rich semantic information in the data and to reason about complex downstream tasks, and it also leaves the model without interpretability. With the rise of large language models (LLMs), an increasing number of studies are combining them with GNNs for graph representation learning and downstream tasks. While these approaches effectively leverage the rich semantic information in TAG datasets, their main drawback is that they are only partially interpretable, which limits their application in critical fields. In this paper, we propose a verbalized graph representation learning (VGRL) method which is fully interpretable. In contrast to traditional graph machine learning models, which are usually optimized within a continuous parameter space, VGRL constrains this parameter space to be text descriptions, which ensures complete interpretability throughout the entire process and makes it easier for users to understand and trust the decisions of the model. We conduct several studies to empirically evaluate the effectiveness of VGRL, and we believe this method can serve as a stepping stone in graph representation learning.

SGBA: Semantic Gaussian Mixture Model-Based LiDAR Bundle Adjustment

Oct 02, 2024

Abstract:LiDAR bundle adjustment (BA) is an effective approach to reduce drift in the pose estimates produced by the front-end. Existing works on LiDAR BA usually rely on predefined geometric features for landmark representation. This reliance restricts generalizability, as the system will inevitably deteriorate in environments where these specific features are absent. To address this issue, we propose SGBA, a LiDAR BA scheme that models the environment as a semantic Gaussian mixture model (GMM) without predefined feature types. This approach encodes both geometric and semantic information, offering a comprehensive and general representation adaptable to various environments. Additionally, to limit computational complexity while ensuring generalizability, we propose an adaptive semantic selection framework that selects the most informative semantic clusters for optimization by evaluating the condition number of the cost function. Lastly, we introduce a probabilistic feature association scheme that considers the entire probability density of assignments, which can manage uncertainties in measurement and initial pose estimation. We have conducted various experiments, and the results demonstrate that SGBA can achieve accurate and robust pose refinement even in challenging scenarios with low-quality initial pose estimation and limited geometric features. We plan to open-source the work for the benefit of the community at https://github.com/Ji1Xinyu/SGBA.

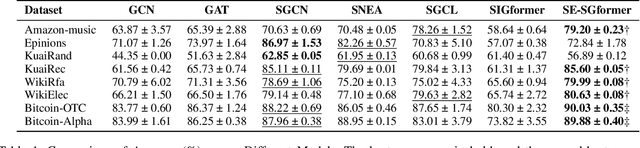

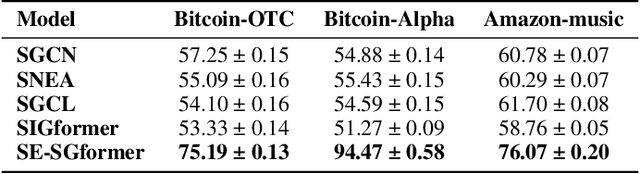

SE-SGformer: A Self-Explainable Signed Graph Transformer for Link Sign Prediction

Aug 16, 2024

Abstract:Signed Graph Neural Networks (SGNNs) have been shown to be effective in analyzing complex patterns in real-world situations where positive and negative links coexist. However, SGNN models suffer from poor explainability, which limits their adoption in critical scenarios that require understanding the rationale behind predictions. To the best of our knowledge, there is currently no research work on the explainability of SGNN models. Our goal is to address the explainability of decision-making for the downstream task of link sign prediction specific to signed graph neural networks. Since post-hoc explanations are not derived directly from the models, they may be biased and misrepresent the true explanations. Therefore, in this paper we introduce a Self-Explainable Signed Graph transformer (SE-SGformer) framework, which outputs explainable information while ensuring high prediction accuracy. Specifically, we propose a new Transformer architecture for signed graphs and theoretically demonstrate that using positional encoding based on signed random walks has greater expressive power than current SGNN methods and other positional encoding graph Transformer-based approaches. We construct a novel explainable decision process by discovering the $K$-nearest (farthest) positive (negative) neighbors of a node to replace the neural network-based decoder for predicting edge signs. These $K$ positive (negative) neighbors represent crucial information about the formation of positive (negative) edges between nodes and thus can serve as important explanatory information in the decision-making process. We conducted experiments on several real-world datasets to validate the effectiveness of SE-SGformer, which outperforms the state-of-the-art methods, improving prediction accuracy by 2.2% and explainability accuracy by 73.1% in the best-case scenario.

Reliable Spatial-Temporal Voxels For Multi-Modal Test-Time Adaptation

Mar 15, 2024

Abstract:Multi-modal test-time adaptation (MM-TTA) is proposed to adapt models to an unlabeled target domain by leveraging the complementary multi-modal inputs in an online manner. Previous MM-TTA methods rely on predictions of cross-modal information in each input frame, but they ignore the fact that predictions of geometric neighborhoods within consecutive frames are highly correlated, leading to unstable predictions across time. To fill this gap, we propose ReLiable Spatial-temporal Voxels (Latte), an MM-TTA method that leverages reliable cross-modal spatial-temporal correspondences for multi-modal 3D segmentation. Motivated by the fact that reliable predictions should be consistent with their spatial-temporal correspondences, Latte aggregates consecutive frames in a sliding-window manner and constructs spatial-temporal (ST) voxels to capture temporally local prediction consistency for each modality. After filtering out ST voxels with high ST entropy, Latte conducts cross-modal learning for each point and pixel by attending to those with reliable and consistent predictions among both spatial and temporal neighborhoods. Experimental results show that Latte achieves state-of-the-art performance on three different MM-TTA benchmarks compared to previous MM-TTA or TTA methods.

Multipath Time-delay Estimation with Impulsive Noise via Bayesian Compressive Sensing

Jul 05, 2023

Abstract:Multipath time-delay estimation is commonly encountered in radar and sonar signal processing. In some real-life environments, impulsive noise is ubiquitous and significantly degrades estimation performance. Here, we propose a Bayesian approach that tailors Bayesian Compressive Sensing (BCS) to mitigate impulsive noise. In particular, a heavy-tailed Laplacian distribution is used as a statistical model for the impulsive noise, while a Laplacian prior is used for sparse multipath modeling. The Bayesian learning problem comprises hyperparameter learning and parameter estimation, both solved under the BCS inference framework. The performance of our proposed method is compared with benchmark methods, including compressive sensing (CS), BCS, and Laplacian-prior BCS (L-BCS). The simulation results show that our proposed method estimates the multipath parameters more accurately and has a lower root mean squared estimation error (RMSE) in intensely impulsive noise.

LIO-GVM: an Accurate, Tightly-Coupled Lidar-Inertial Odometry with Gaussian Voxel Map

Jun 30, 2023

Abstract:This letter presents an accurate and robust Lidar Inertial Odometry framework. We fuse LiDAR scans with IMU data using a tightly-coupled iterative error state Kalman filter for robust and fast localization. To achieve robust correspondence matching, we represent the points as a set of Gaussian distributions and evaluate the divergence in variance for outlier rejection. Based on the fitted distributions, a new residual metric is proposed for the filter-based Lidar inertial odometry. It moves from merely quantifying distance to also incorporating variance disparity, which makes the residual metric more comprehensive and accurate. Building on this residual metric, we propose a simple yet effective voxel-only mapping scheme, which only requires maintaining one centroid and one covariance matrix for each voxel. Experiments on different datasets demonstrate the robustness and accuracy of our framework for various data inputs and environments. For the benefit of the robotics community, we open source the code at https://github.com/Ji1Xingyu/lio_gvm.
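To make the "one centroid and one covariance matrix per voxel" idea concrete, here is a minimal sketch of a voxel that updates both statistics as points arrive, without storing the points themselves. The abstract does not describe how LIO-GVM updates its voxels, so the Welford-style update and the `Voxel` class below are assumptions for illustration only.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Voxel:
    """Running statistics for the 3D points that fall into one voxel."""
    n: int = 0
    centroid: List[float] = field(default_factory=lambda: [0.0] * 3)
    # Sum of outer products of deviations (the scatter matrix).
    scatter: List[List[float]] = field(
        default_factory=lambda: [[0.0] * 3 for _ in range(3)])

    def add_point(self, p: List[float]) -> None:
        """Welford-style update: past points do not need to be stored."""
        self.n += 1
        delta_old = [p[i] - self.centroid[i] for i in range(3)]
        for i in range(3):
            self.centroid[i] += delta_old[i] / self.n
        delta_new = [p[i] - self.centroid[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                self.scatter[i][j] += delta_old[i] * delta_new[j]

    def covariance(self) -> List[List[float]]:
        """Sample covariance; requires at least two points."""
        if self.n < 2:
            raise ValueError("covariance needs at least two points")
        return [[s / (self.n - 1) for s in row] for row in self.scatter]


# Hypothetical usage: three points landing in the same voxel.
voxel = Voxel()
for point in ([0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [1.0, 3.0, 0.0]):
    voxel.add_point(point)
print(voxel.centroid, voxel.covariance())
```

Each voxel then costs constant memory regardless of how many points it has absorbed, which is what makes a map built only from such voxels cheap to maintain.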

Segregator: Global Point Cloud Registration with Semantic and Geometric Cues

Jan 18, 2023

Abstract:This paper presents Segregator, a global point cloud registration framework that exploits both semantic information and geometric distribution to efficiently build up outlier-robust correspondences and search for inliers. Current state-of-the-art algorithms rely on point features to set up putative correspondences and refine them by employing pair-wise distance consistency checks. However, such a scheme suffers from degenerate cases, where the descriptive capability of local point features degrades, and unconstrained cases, where length-preserving (l-TRIMs)-based checks cannot sufficiently constrain whether the current observation is consistent with others, resulting in a more complex NP-complete problem to solve. To tackle these problems, on the one hand, we propose a novel degeneracy-robust and efficient correspondence procedure consisting of both instance-level semantic clusters and geometric-level point features. On the other hand, Gaussian distribution-based translation and rotation invariant measurements (G-TRIMs) are proposed to conduct the consistency check and further constrain the problem size. We validated our proposed algorithm through extensive experiments on real-world data. The code is available at https://github.com/Pamphlett/Segregator.

Tensor-based Basis Function Learning for Three-dimensional Sound Speed Fields

Jan 21, 2022

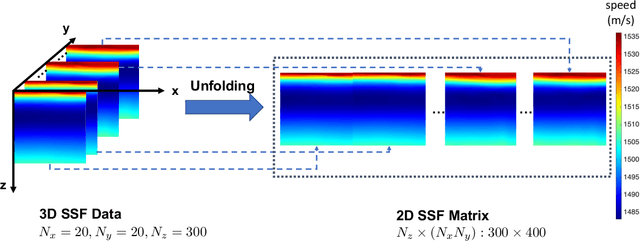

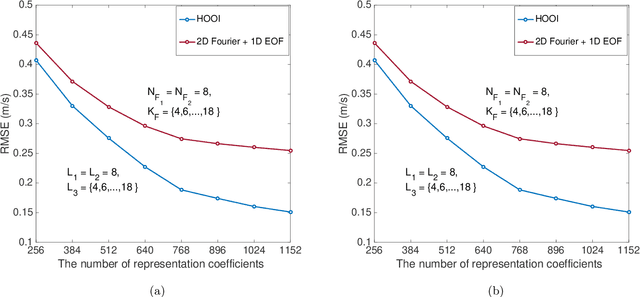

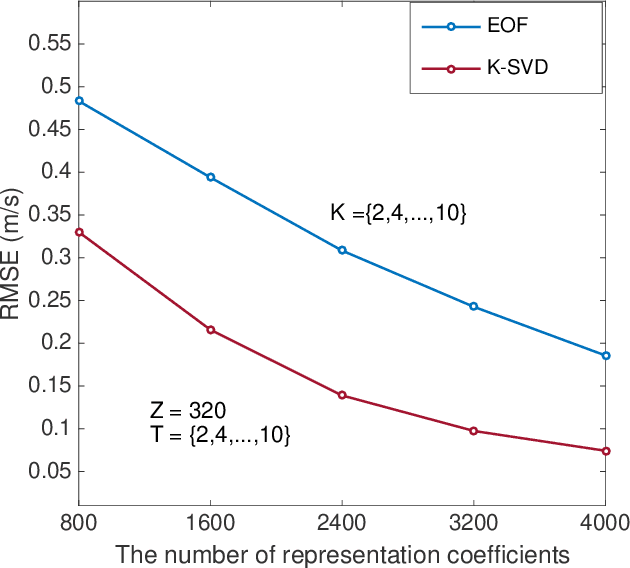

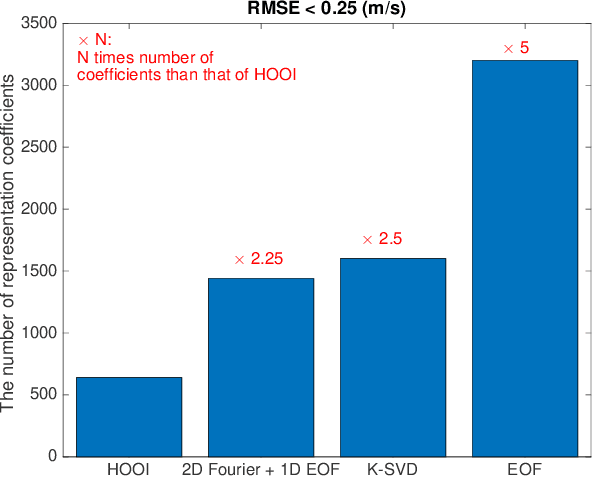

Abstract:Basis function learning is the stepping stone towards effective three-dimensional (3D) sound speed field (SSF) inversion for various acoustic signal processing tasks, including ocean acoustic tomography, underwater target localization/tracking, and underwater communications. Classical basis functions include the empirical orthogonal functions (EOFs), Fourier basis functions, and their combinations. Unsupervised machine learning methods, e.g., the K-SVD algorithm, have recently been applied to basis function design, showing better representation performance than the EOFs. However, existing methods do not consider basis function learning approaches that treat 3D SSF data as a third-order tensor, and thus cannot fully utilize the 3D interactions/correlations therein. To overcome this drawback, this paper links basis function learning to tensor decomposition, the primary driver of recent multi-dimensional data mining. In particular, a tensor-based basis function learning framework is proposed, which can include the classical basis functions (using EOFs and/or Fourier basis functions) as its special cases. This provides a unified tensor perspective for understanding and representing 3D SSFs. Numerical results using the South China Sea 3D SSF data have demonstrated the excellent performance of the tensor-based basis functions.
