Xiao Song

SocialMOIF: Multi-Order Intention Fusion for Pedestrian Trajectory Prediction

Apr 22, 2025

Abstract:The analysis and prediction of agent trajectories are crucial for decision-making processes in intelligent systems, with precise short-term trajectory forecasting being highly significant across a range of applications. Agents and their social interactions have been quantified and modeled by researchers from various perspectives; however, substantial limitations exist in the current work due to the inherent high uncertainty of agent intentions and the complex higher-order influences among neighboring groups. SocialMOIF is proposed to tackle these challenges, concentrating on the higher-order intention interactions among neighboring groups while reinforcing the primary role of first-order intention interactions between neighbors and the target agent. This method develops a multi-order intention fusion model to achieve a more comprehensive understanding of both direct and indirect intention information. Within SocialMOIF, a trajectory distribution approximator is designed to guide the trajectories toward values that align more closely with the actual data, thereby enhancing model interpretability. Furthermore, a global trajectory optimizer is introduced to enable more accurate and efficient parallel predictions. By incorporating a novel loss function that accounts for distance and direction during training, experimental results demonstrate that the model outperforms previous state-of-the-art baselines across multiple metrics in both dynamic and static datasets.
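
The abstract mentions a loss that accounts for both distance and direction but does not give its form. The following is only a rough sketch of what such a loss could look like, assuming a mean point-wise Euclidean distance plus a mean (1 - cosine similarity) penalty between per-step displacement vectors. The function name `distance_direction_loss` and the weight `lam` are hypothetical and are not taken from the paper.

```python
import math


def distance_direction_loss(pred, truth, lam=1.0, eps=1e-8):
    """Illustrative loss over two equal-length lists of (x, y) points.

    Assumed form (not the paper's): mean Euclidean distance between
    matching points, plus lam times the mean (1 - cosine similarity)
    between matching per-step displacement vectors.
    """
    if len(pred) != len(truth) or len(pred) < 2:
        raise ValueError("trajectories must have equal length >= 2")

    # Distance term: average point-wise Euclidean error.
    distance = sum(
        math.hypot(px - tx, py - ty)
        for (px, py), (tx, ty) in zip(pred, truth)
    ) / len(pred)

    # Direction term: compare the heading of each step.
    penalties = []
    for i in range(1, len(pred)):
        pdx, pdy = pred[i][0] - pred[i - 1][0], pred[i][1] - pred[i - 1][1]
        tdx, tdy = truth[i][0] - truth[i - 1][0], truth[i][1] - truth[i - 1][1]
        p_norm, t_norm = math.hypot(pdx, pdy), math.hypot(tdx, tdy)
        if p_norm < eps or t_norm < eps:
            continue  # a stationary step has no defined heading; skip it
        cosine = (pdx * tdx + pdy * tdy) / (p_norm * t_norm)
        penalties.append(1.0 - cosine)
    direction = sum(penalties) / len(penalties) if penalties else 0.0

    return distance + lam * direction


truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
reversed_pred = [(0.0, 0.0), (-1.0, 0.0), (-2.0, 0.0)]
loss_same = distance_direction_loss(truth, truth)
loss_reversed = distance_direction_loss(reversed_pred, truth)
```

In this sketch a prediction that heads the opposite way is penalized twice: once for the growing positional error and once for the reversed heading.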

TacoDepth: Towards Efficient Radar-Camera Depth Estimation with One-stage Fusion

Apr 16, 2025

Abstract:Radar-Camera depth estimation aims to predict dense and accurate metric depth by fusing input images and Radar data. Model efficiency is crucial for this task in pursuit of real-time processing on autonomous vehicles and robotic platforms. However, due to the sparsity of Radar returns, the prevailing methods adopt multi-stage frameworks with intermediate quasi-dense depth, which are time-consuming and not robust. To address these challenges, we propose TacoDepth, an efficient and accurate Radar-Camera depth estimation model with one-stage fusion. Specifically, the graph-based Radar structure extractor and the pyramid-based Radar fusion module are designed to capture and integrate the graph structures of Radar point clouds, delivering superior model efficiency and robustness without relying on the intermediate depth results. Moreover, TacoDepth is flexible across different inference modes, providing a better balance of speed and accuracy. Extensive experiments are conducted to demonstrate the efficacy of our method. Compared with the previous state-of-the-art approach, TacoDepth improves depth accuracy and processing speed by 12.8% and 91.8%, respectively. Our work provides a new perspective on efficient Radar-Camera depth estimation.

SparseLIF: High-Performance Sparse LiDAR-Camera Fusion for 3D Object Detection

Mar 12, 2024

Abstract:Sparse 3D detectors have received significant attention since the query-based paradigm embraces low latency without explicit dense BEV feature construction. However, these detectors achieve worse performance than their dense counterparts. In this paper, we find the key to bridging the performance gap is to enhance the awareness of rich representations in two modalities. Here, we present a high-performance fully sparse detector for end-to-end multi-modality 3D object detection. The detector, termed SparseLIF, contains three key designs, which are (1) Perspective-Aware Query Generation (PAQG) to generate high-quality 3D queries with perspective priors, (2) RoI-Aware Sampling (RIAS) to further refine prior queries by sampling RoI features from each modality, (3) Uncertainty-Aware Fusion (UAF) to precisely quantify the uncertainty of each sensor modality and adaptively conduct final multi-modality fusion, thus achieving great robustness against sensor noises. By the time of submission (2024/03/08), SparseLIF achieves state-of-the-art performance on the nuScenes dataset, ranking 1st on both validation set and test benchmark, outperforming all state-of-the-art 3D object detectors by a notable margin. The source code will be released upon acceptance.

Rethinking Radiology Report Generation via Causal Reasoning and Counterfactual Augmentation

Dec 05, 2023

Abstract:Radiology Report Generation (RRG) draws attention as an interaction between vision and language fields. Previous works inherited the ideology of vision-to-language generation tasks, aiming to generate paragraphs with high consistency as reports. However, one unique characteristic of RRG, the independence between diseases, was neglected, leading to the injection of disease co-occurrence as a confounder that affects the results through a backdoor path. Unfortunately, this confounder further confuses the process of report generation because of the biased RRG data distribution. In this paper, to rethink this issue thoroughly, we reason about its causes and effects from a novel perspective of statistics and causality, where the Joint Vision Coupling and the Conditional Sentence Coherence Coupling are two aspects prone to implicitly decrease the accuracy of reports. Then, a counterfactual augmentation strategy that contains the Counterfactual Sample Synthesis and the Counterfactual Report Reconstruction sub-methods is proposed to break these two aspects of spurious effects. Experimental results and further analyses on two widely used datasets justify our reasoning and proposed methods.

Filter and evolve: progressive pseudo label refining for semi-supervised automatic speech recognition

Oct 28, 2022

Abstract:Fine-tuning self-supervised pretrained models using pseudo labels can effectively improve speech recognition performance. However, low-quality pseudo labels can misguide decision boundaries and degrade performance. We propose a simple yet effective strategy to filter low-quality pseudo labels to alleviate this problem. Specifically, pseudo labels are produced over the entire training set and filtered via average probability scores calculated from the model output. Subsequently, an optimal percentage of utterances with high probability scores is considered reliable training data with trustworthy labels. The model is iteratively updated to correct the unreliable pseudo labels to minimize the effect of noisy labels. The process above is repeated until unreliable pseudo labels have been adequately corrected. Extensive experiments on LibriSpeech show that these filtered samples enable the refined model to yield more correct predictions, leading to better ASR performance under various experimental settings.
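
The filtering step described in this abstract can be sketched roughly as follows, assuming each utterance carries a list of per-token probabilities from the model output. The helper names `average_confidence` and `filter_pseudo_labels`, the tuple layout, and the `keep_fraction` parameter are hypothetical; the abstract does not specify how the scores are computed or how the percentage is chosen.

```python
def average_confidence(token_probs):
    """Mean of the per-token probabilities for one utterance."""
    if not token_probs:
        return 0.0  # treat an empty hypothesis as least reliable
    return sum(token_probs) / len(token_probs)


def filter_pseudo_labels(utterances, keep_fraction):
    """Split pseudo-labeled utterances into reliable and unreliable sets.

    utterances: list of (utt_id, pseudo_label, token_probs) tuples.
    keep_fraction: share of utterances (0..1) kept as reliable,
    taken from the top of the average-probability ranking.
    """
    if not 0.0 <= keep_fraction <= 1.0:
        raise ValueError("keep_fraction must lie in [0, 1]")
    ranked = sorted(
        utterances,
        key=lambda utt: average_confidence(utt[2]),
        reverse=True,
    )
    n_keep = int(len(ranked) * keep_fraction)
    return ranked[:n_keep], ranked[n_keep:]


batch = [
    ("a", "hello world", [0.9, 0.9]),
    ("b", "helo word", [0.6, 0.4]),
    ("c", "good morning", [0.7, 0.9]),
    ("d", "god mourning", [0.2, 0.4]),
]
reliable, unreliable = filter_pseudo_labels(batch, keep_fraction=0.5)
reliable_ids = [utt[0] for utt in reliable]
unreliable_ids = [utt[0] for utt in unreliable]
```

In the iterative scheme the abstract describes, the reliable set would be used to update the model, the pseudo labels would be regenerated, and the split repeated.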

AdaStereo: An Efficient Domain-Adaptive Stereo Matching Approach

Dec 09, 2021

Abstract:Recently, records on stereo matching benchmarks have been constantly broken by end-to-end disparity networks. However, the domain adaptation ability of these deep models is quite limited. To address this problem, we present a novel domain-adaptive approach called AdaStereo that aims to align multi-level representations for deep stereo matching networks. Compared to previous methods, our AdaStereo realizes a more standard, complete and effective domain adaptation pipeline. Firstly, we propose a non-adversarial progressive color transfer algorithm for input image-level alignment. Secondly, we design an efficient parameter-free cost normalization layer for internal feature-level alignment. Lastly, a highly related auxiliary task, self-supervised occlusion-aware reconstruction, is presented to narrow the gaps in output space. We perform intensive ablation studies and break-down comparisons to validate the effectiveness of each proposed module. With no extra inference overhead and only a slight increase in training complexity, our AdaStereo models achieve state-of-the-art cross-domain performance on multiple benchmarks, including KITTI, Middlebury, ETH3D and DrivingStereo, even outperforming some state-of-the-art disparity networks finetuned with target-domain ground-truths. Moreover, based on two additional evaluation metrics, the superiority of our domain-adaptive stereo matching pipeline is further uncovered from more perspectives. Finally, we demonstrate that our method is robust to various domain adaptation settings, and can be easily integrated into quick adaptation application scenarios and real-world deployments.

LIF-Seg: LiDAR and Camera Image Fusion for 3D LiDAR Semantic Segmentation

Aug 17, 2021

Abstract:Camera and 3D LiDAR sensors have become indispensable devices in modern autonomous driving vehicles, where the camera provides fine-grained texture and color information in 2D space and LiDAR captures more precise and farther-away distance measurements of the surrounding environments. The complementary information from these two sensors makes two-modality fusion a desirable option. However, two major issues of the fusion between camera and LiDAR hinder its performance, i.e., how to effectively fuse these two modalities and how to precisely align them (suffering from the weak spatiotemporal synchronization problem). In this paper, we propose a coarse-to-fine LiDAR and camera fusion-based network (termed as LIF-Seg) for LiDAR segmentation. For the first issue, unlike previous works that fuse the point cloud and image information in a one-to-one manner, the proposed method fully utilizes the contextual information of images and introduces a simple but effective early-fusion strategy. Second, due to the weak spatiotemporal synchronization problem, an offset rectification approach is designed to align these two-modality features. The cooperation of these two components leads to the success of the effective camera-LiDAR fusion. Experimental results on the nuScenes dataset show the superiority of the proposed LIF-Seg over existing methods by a large margin. Ablation studies and analyses demonstrate that our proposed LIF-Seg can effectively tackle the weak spatiotemporal synchronization problem.

Temporal-Channel Transformer for 3D Lidar-Based Video Object Detection in Autonomous Driving

Nov 27, 2020

Abstract:The strong demand for autonomous driving in the industry has led to strong interest in 3D object detection and resulted in many excellent 3D object detection algorithms. However, the vast majority of algorithms only model single-frame data, ignoring the temporal information of the sequence of data. In this work, we propose a new transformer, called Temporal-Channel Transformer, to model the spatial-temporal domain and channel domain relationships for video object detection from Lidar data. As a special design of this transformer, the information encoded in the encoder is different from that in the decoder, i.e., the encoder encodes temporal-channel information of multiple frames while the decoder decodes the spatial-channel information for the current frame in a voxel-wise manner. Specifically, the temporal-channel encoder of the transformer is designed to encode the information of different channels and frames by utilizing the correlation among features from different channels and frames. On the other hand, the spatial decoder of the transformer will decode the information for each location of the current frame. Before conducting the object detection with the detection head, the gate mechanism is deployed for re-calibrating the features of the current frame, which filters out the object-irrelevant information by repeatedly refining the representation of the target frame along with the up-sampling process. Experimental results show that we achieve state-of-the-art performance in grid voxel-based 3D object detection on the nuScenes benchmark.

Cylinder3D: An Effective 3D Framework for Driving-scene LiDAR Semantic Segmentation

Aug 04, 2020

Abstract:State-of-the-art methods for large-scale driving-scene LiDAR semantic segmentation often project and process the point clouds in the 2D space. The projection methods include spherical projection, bird's-eye view projection, etc. Although this process makes the point cloud suitable for the 2D CNN-based networks, it inevitably alters and abandons the 3D topology and geometric relations. A straightforward solution to tackle the issue of 3D-to-2D projection is to keep the 3D representation and process the points in the 3D space. In this work, we first perform an in-depth analysis for different representations and backbones in 2D and 3D spaces, and reveal the effectiveness of 3D representations and networks on LiDAR segmentation. Then, we develop a 3D cylinder partition and a 3D cylinder convolution based framework, termed as Cylinder3D, which exploits the 3D topology relations and structures of driving-scene point clouds. Moreover, a dimension-decomposition based context modeling module is introduced to explore the high-rank context information in point clouds in a progressive manner. We evaluate the proposed model on a large-scale driving-scene dataset, i.e., SemanticKITTI. Our method achieves state-of-the-art performance and outperforms existing methods by 6% in terms of mIoU.
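
To make the idea of a cylinder partition concrete, here is a minimal sketch that maps a Cartesian LiDAR point to a voxel index on a cylindrical grid (radius, azimuth, height). The function name `cylinder_voxel_index`, the grid resolution, and the range limits are illustrative assumptions and are not values taken from the paper.

```python
import math


def cylinder_voxel_index(point, grid=(10, 8, 4), rho_max=50.0,
                         z_range=(-4.0, 4.0)):
    """Map an (x, y, z) point to (i_rho, i_phi, i_z) on a cylindrical grid.

    grid: number of bins along radius, azimuth and height (assumed values).
    Returns None for points outside the radial or vertical range.
    """
    x, y, z = point
    rho = math.hypot(x, y)       # distance from the sensor's vertical axis
    phi = math.atan2(y, x)       # azimuth angle in [-pi, pi]
    z_min, z_max = z_range
    if rho >= rho_max or not (z_min <= z < z_max):
        return None

    i_rho = int(rho / rho_max * grid[0])
    # Shift the azimuth to [0, 2*pi) and wrap phi == pi back to bin 0.
    i_phi = int((phi + math.pi) / (2.0 * math.pi) * grid[1]) % grid[1]
    i_z = int((z - z_min) / (z_max - z_min) * grid[2])
    return i_rho, i_phi, i_z


near = cylinder_voxel_index((12.0, 1.0, 0.5))
behind = cylinder_voxel_index((-1.0, -12.0, -3.0))
too_far = cylinder_voxel_index((100.0, 0.0, 0.0))
```

With equal angular bins, cells grow wider as the radius increases, so a distant cell covers more area than a nearby one.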

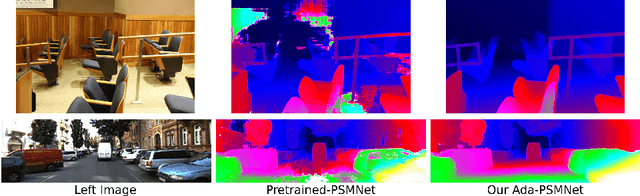

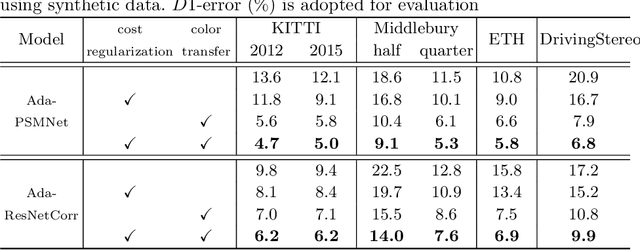

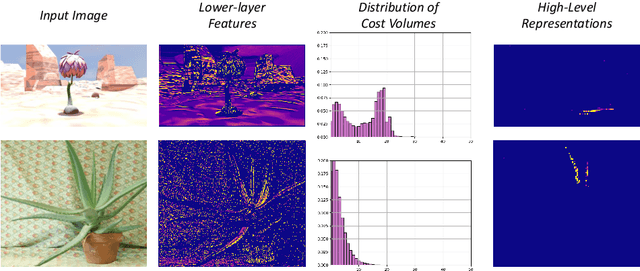

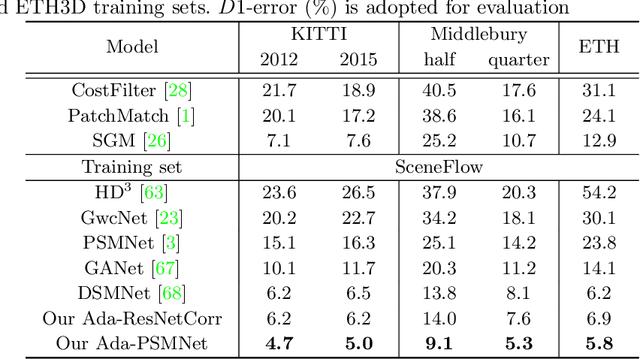

AdaStereo: A Simple and Efficient Approach for Adaptive Stereo Matching

Apr 09, 2020

Abstract:In this paper, we attempt to solve the domain adaptation problem for deep stereo matching networks. Instead of resorting to black-box structures or layers to find implicit connections across domains, we focus on investigating adaptation gaps for stereo matching. By visual inspections and extensive experiments, we conclude that low-level aligning is crucial for adaptive stereo matching, since the main gaps across domains lie in the inconsistent input color and cost volume distributions. Correspondingly, we design a bottom-up domain adaptation method, in which two particular approaches are proposed, i.e., color transfer and cost regularization, that can be easily integrated into existing stereo matching models. The color transfer enables transferring a large amount of synthetic data to the same color spaces as the target domains during training. The cost regularization can further constrain both the lower-layer features and cost volumes to domain-invariant distributions. Although our proposed strategies are simple and have no parameters to learn, they do improve the generalization ability of existing disparity networks by a large margin. We conduct experiments across multiple datasets, including Scene Flow, KITTI, Middlebury, ETH3D and DrivingStereo. Without bells and whistles, our synthetic-data pretrained models achieve state-of-the-art cross-domain performance compared to previous domain-invariant methods, even outperforming state-of-the-art disparity networks fine-tuned with target domain ground-truths on multiple stereo matching benchmarks.
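
As a rough illustration of parameter-free color transfer, the sketch below matches the per-channel mean and standard deviation of a source image to those of a target image. This statistics-matching form is a common technique and is assumed here for illustration; the abstract does not state the exact algorithm or color space the authors use, and the names `channel_stats` and `transfer_color` are hypothetical.

```python
from statistics import fmean, pstdev


def channel_stats(pixels):
    """Per-channel (mean, population std) for a list of pixel tuples."""
    return [(fmean(channel), pstdev(channel)) for channel in zip(*pixels)]


def transfer_color(source_pixels, target_pixels, eps=1e-8):
    """Shift and scale each source channel to the target's statistics.

    Pixels are tuples of channel values in any color space; there are
    no learned parameters, only statistics computed from the inputs.
    """
    src_stats = channel_stats(source_pixels)
    tgt_stats = channel_stats(target_pixels)
    transferred = []
    for pixel in source_pixels:
        transferred.append(tuple(
            (value - s_mean) / (s_std + eps) * t_std + t_mean
            for value, (s_mean, s_std), (t_mean, t_std)
            in zip(pixel, src_stats, tgt_stats)
        ))
    return transferred


synthetic = [(0.0, 0.0, 0.0), (2.0, 2.0, 2.0)]
real = [(10.0, 10.0, 10.0), (14.0, 14.0, 14.0)]
aligned = transfer_color(synthetic, real)
aligned_stats = channel_stats(aligned)
```

After the transfer, each channel of the synthetic image has approximately the mean and spread of the corresponding target channel.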
