Werner Ritter

The Radar Ghost Dataset -- An Evaluation of Ghost Objects in Automotive Radar Data

Apr 01, 2024

Abstract:Radar sensors have a long tradition in advanced driver assistance systems (ADAS) and also play a major role in current concepts for autonomous vehicles. Their importance stems from their high robustness against meteorological effects, such as rain, snow, or fog, and the radar's ability to measure relative radial velocity differences via the Doppler effect. The cause of these advantages, namely the large wavelength, is also one of the drawbacks of radar sensors. Compared to camera or lidar sensors, many more surfaces in a typical traffic scenario appear flat relative to the radar's emitted signal. This results in multi-path reflections, or so-called ghost detections, in the radar signal. Ghost objects are a major source of potential false positive detections in a vehicle's perception pipeline. Therefore, it is important to be able to separate multi-path reflections from direct ones. In this article, we present a dataset with detailed manual annotations for different kinds of ghost detections. Moreover, two different approaches for identifying these kinds of objects are evaluated. We hope that our dataset encourages more researchers to engage in the fields of multi-path object suppression or exploitation.

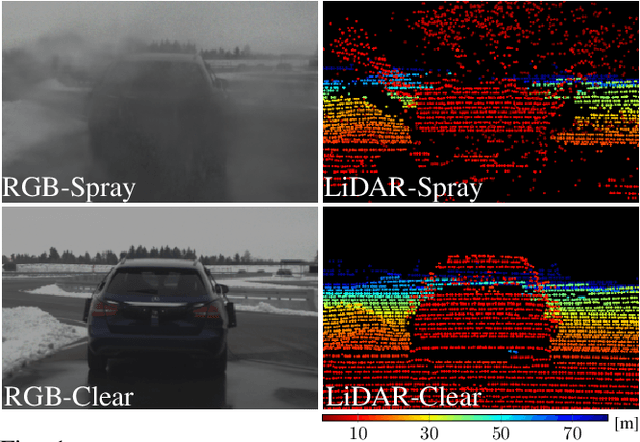

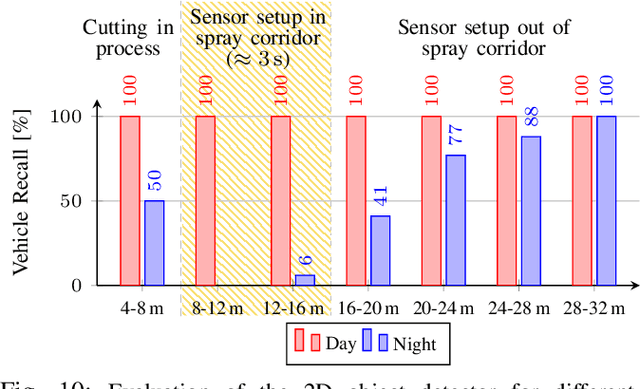

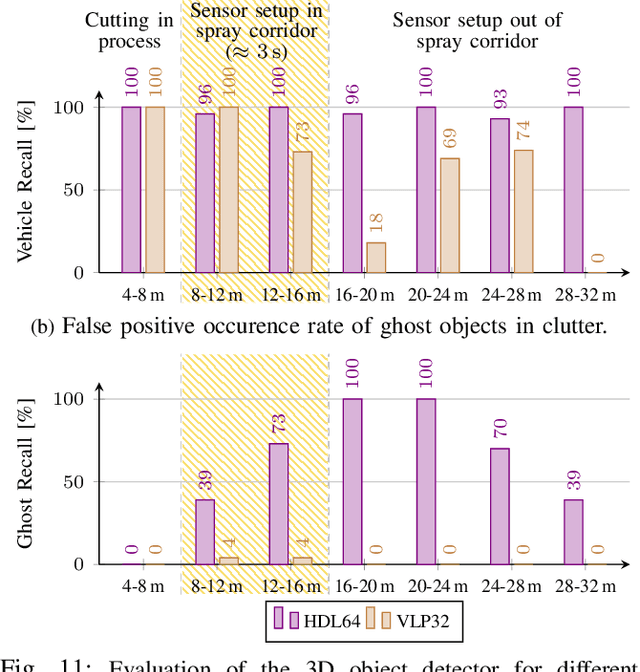

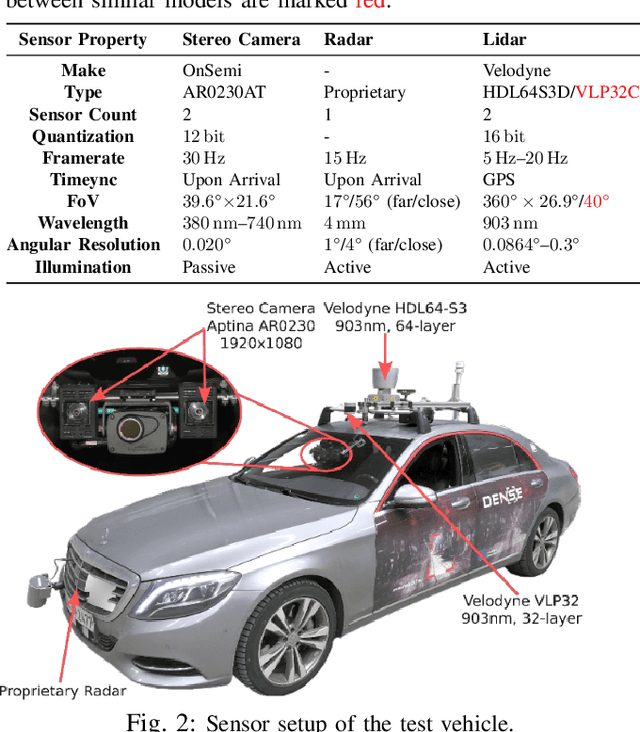

Simulating Road Spray Effects in Automotive Lidar Sensor Models

Dec 16, 2022

Abstract:Modeling perception sensors is key for simulation-based testing of automated driving functions. Beyond weather conditions themselves, sensors are also subjected to object-dependent environmental influences like tire spray caused by vehicles moving on wet pavement. In this work, a novel modeling approach for spray in lidar data is introduced. The model conforms to the Open Simulation Interface (OSI) standard and is based on the formation of detection clusters within a spray plume. The detections are rendered with a simple custom ray casting algorithm without the need for a fluid dynamics simulation or physics engine. The model is subsequently used to generate training data for object detection algorithms. It is shown that the model helps to improve detection in real-world spray scenarios significantly. Furthermore, a systematic real-world data set is recorded and published for analysis, model calibration and validation of spray effects in active perception sensors. Experiments are conducted on a test track by driving over artificially watered pavement with varying vehicle speeds, vehicle types and levels of pavement wetness. All models and data of this work are available open source.

Gated2Gated: Self-Supervised Depth Estimation from Gated Images

Dec 04, 2021

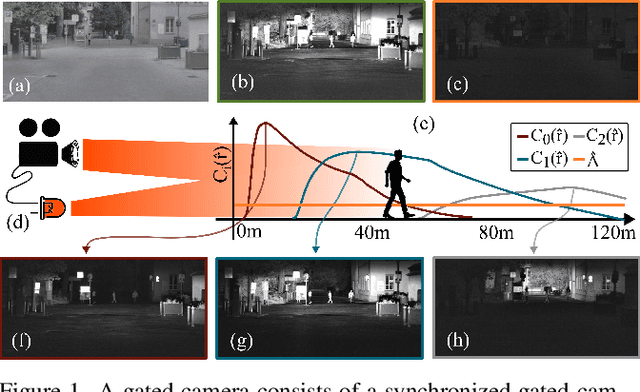

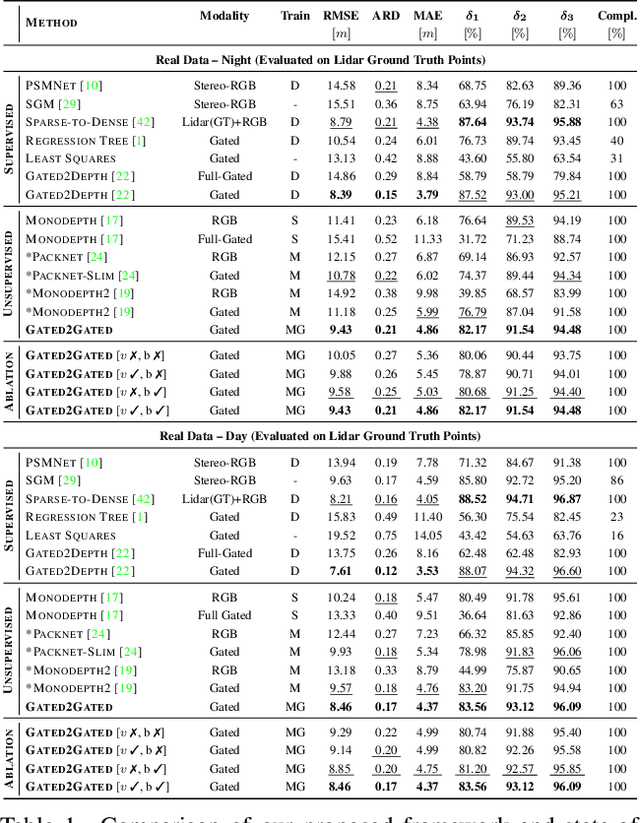

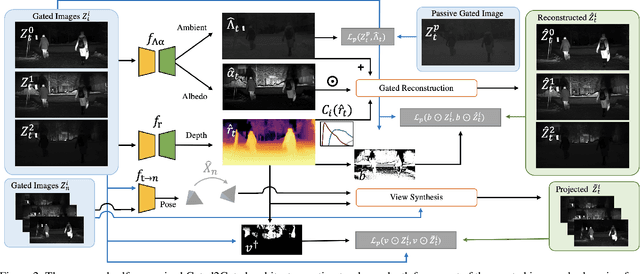

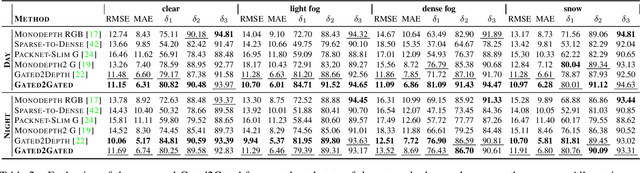

Abstract:Gated cameras hold promise as an alternative to scanning LiDAR sensors with high-resolution 3D depth that is robust to back-scatter in fog, snow, and rain. Instead of sequentially scanning a scene and directly recording depth via the photon time-of-flight, as in pulsed LiDAR sensors, gated imagers encode depth in the relative intensity of a handful of gated slices, captured at megapixel resolution. Although existing methods have shown that it is possible to decode high-resolution depth from such measurements, these methods require synchronized and calibrated LiDAR to supervise the gated depth decoder -- prohibiting fast adoption across geographies, training on large unpaired datasets, and exploring alternative applications outside of automotive use cases. In this work, we fill this gap and propose an entirely self-supervised depth estimation method that uses gated intensity profiles and temporal consistency as a training signal. The proposed model is trained end-to-end from gated video sequences, does not require LiDAR or RGB data, and learns to estimate absolute depth values. We take gated slices as input and disentangle the estimation of the scene albedo, depth, and ambient light, which are then used to learn to reconstruct the input slices through a cyclic loss. We rely on temporal consistency between a given frame and neighboring gated slices to estimate depth in regions with shadows and reflections. We experimentally validate that the proposed approach outperforms existing supervised and self-supervised depth estimation methods based on monocular RGB and stereo images, as well as supervised methods based on gated images.

A Benchmark for Spray from Nearby Cutting Vehicles

Aug 24, 2021

Abstract:Current driver assistance systems and autonomous driving stacks are limited to well-defined environment conditions and geofenced areas. To increase driving safety in adverse weather conditions, broadening the application spectrum of autonomous driving and driver assistance systems is necessary. In order to enable this development, reproducible benchmarking methods are required to quantify the expected distortions. In this publication, a testing methodology for disturbances from spray is presented. It introduces a novel lightweight and configurable spray setup alongside an evaluation scheme to assess the disturbances caused by spray. The analysis covers an automotive RGB camera and two different LiDAR systems, as well as downstream detection algorithms based on YOLOv3 and PV-RCNN. In a common scenario of a closely cutting vehicle, the distortions severely affect the perception stack for up to four seconds, which shows the necessity of benchmarking the influence of spray.

ZeroScatter: Domain Transfer for Long Distance Imaging and Vision through Scattering Media

Feb 11, 2021

Abstract:Adverse weather conditions, including snow, rain, and fog, pose a challenge for both human and computer vision in outdoor scenarios. Handling these environmental conditions is essential for safe decision making, especially in autonomous vehicles, robotics, and drones. Most of today's supervised imaging and vision approaches, however, rely on training data collected in the real world that is biased towards good weather conditions, with dense fog, snow, and heavy rain as outliers in these datasets. Without training data, let alone paired data, existing autonomous vehicles often limit themselves to good conditions and stop when dense fog or snow is detected. In this work, we tackle the lack of supervised training data by combining synthetic and indirect supervision. We present ZeroScatter, a domain transfer method for converting RGB-only captures taken in adverse weather into clear daytime scenes. ZeroScatter exploits model-based, temporal, multi-view, multi-modal, and adversarial cues in a joint fashion, allowing us to train on unpaired, biased data. We assess the proposed method using real-world captures, and the proposed method outperforms existing monocular de-scattering approaches by 2.8 dB PSNR on controlled fog chamber measurements.
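For readers unfamiliar with the metric, the following is a minimal sketch of how peak signal-to-noise ratio (PSNR) is commonly computed and what a gain of 2.8 dB corresponds to in terms of mean squared error. It is not taken from the ZeroScatter paper: the function names, the flat pixel lists, and the 8-bit peak value of 255 are assumptions made for illustration only.

```python
import math


def mse(reference, estimate):
    """Mean squared error between two equally sized flat pixel sequences."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)


def psnr(reference, estimate, peak=255.0):
    """PSNR in dB; `peak` is the maximum pixel value (assumed 8-bit here)."""
    err = mse(reference, estimate)
    if err == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / err)


def mse_ratio_for_gain(gain_db):
    """Factor by which MSE shrinks for a given PSNR gain in dB."""
    return 10.0 ** (gain_db / 10.0)


if __name__ == "__main__":
    # Hypothetical pixel values, not measurements from the paper.
    clear = [120, 130, 140, 150]
    restored = [118, 133, 139, 152]
    print(f"PSNR: {psnr(clear, restored):.2f} dB")
    print(f"MSE ratio for +2.8 dB: {mse_ratio_for_gain(2.8):.3f}")
```

Under the standard definition, a 2.8 dB PSNR improvement at a fixed peak value means the mean squared error is lower by a factor of about 1.9.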

Using Machine Learning to Detect Ghost Images in Automotive Radar

Jul 10, 2020

Abstract:Radar sensors are an important part of driver assistance systems and intelligent vehicles due to their robustness against all kinds of adverse conditions, e.g., fog, snow, rain, or even direct sunlight. This robustness is achieved by a substantially larger wavelength compared to light-based sensors such as cameras or lidars. As a side effect, many surfaces act like mirrors at this wavelength, resulting in unwanted ghost detections. In this article, we present a novel approach to detect these ghost objects by applying data-driven machine learning algorithms. For this purpose, we use a large-scale automotive data set with annotated ghost objects. We show that we can use a state-of-the-art automotive radar classifier in order to detect ghost objects alongside real objects. Furthermore, we are able to reduce the amount of false positive detections caused by ghost images in some settings.

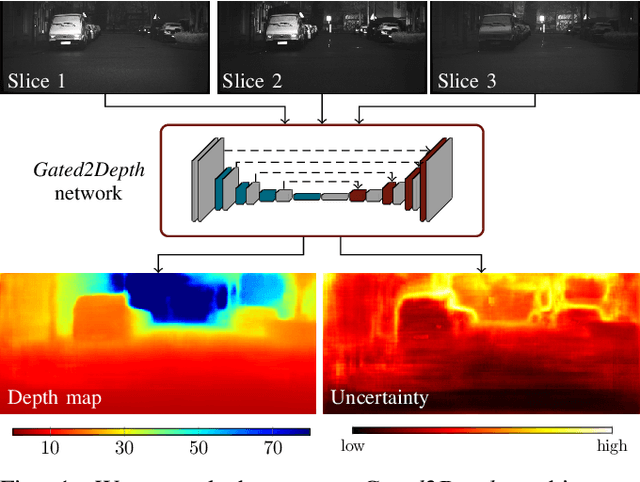

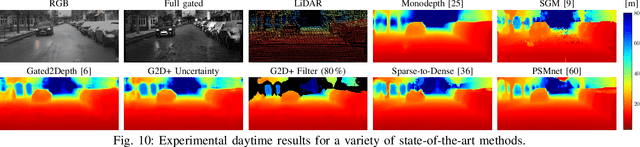

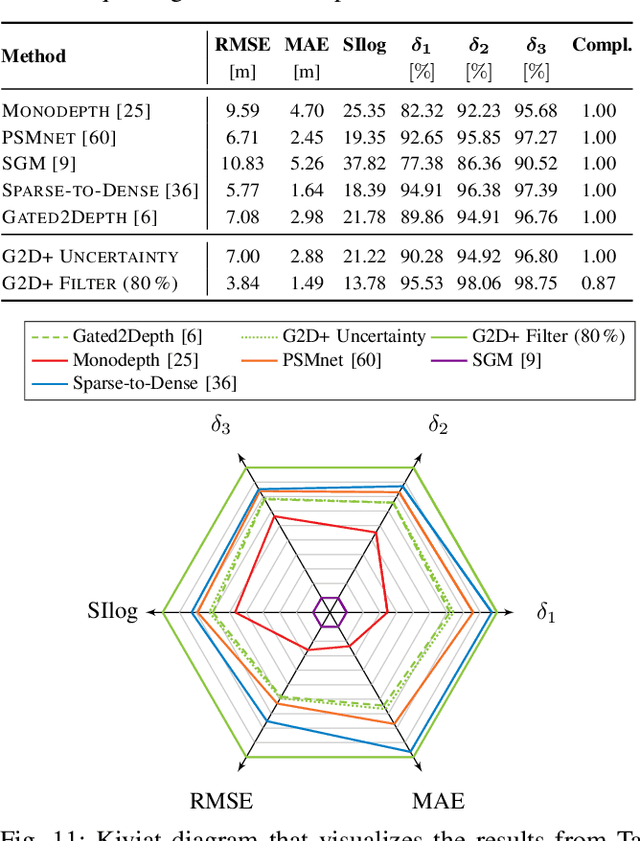

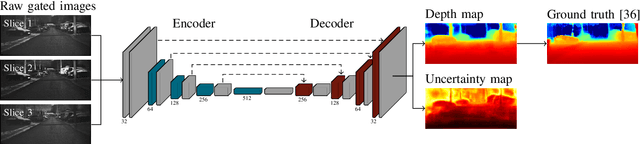

Uncertainty depth estimation with gated images for 3D reconstruction

Mar 11, 2020

Abstract:Gated imaging is an emerging sensor technology for self-driving cars that provides high-contrast images even under adverse weather influence. It has been shown that this technology can even generate high-fidelity dense depth maps with accuracy comparable to scanning LiDAR systems. In this work, we extend the recent Gated2Depth framework with aleatoric uncertainty providing an additional confidence measure for the depth estimates. This confidence can help to filter out uncertain estimations in regions without any illumination. Moreover, we show that training on dense depth maps generated by LiDAR depth completion algorithms can further improve the performance.

Seeing Around Street Corners: Non-Line-of-Sight Detection and Tracking In-the-Wild Using Doppler Radar

Dec 13, 2019

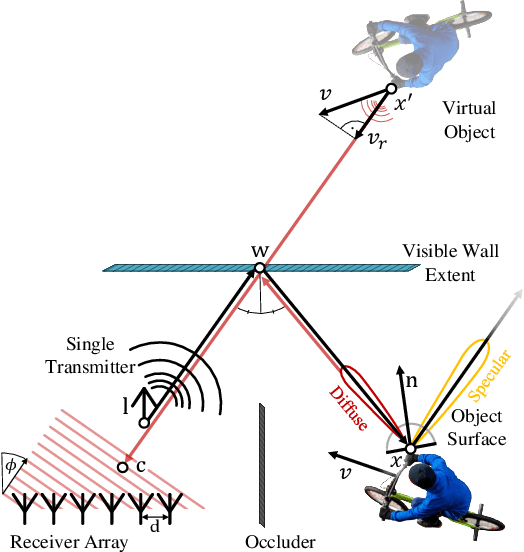

Abstract:Conventional sensor systems record information about directly visible objects, whereas occluded scene components are considered lost in the measurement process. Non-line-of-sight (NLOS) methods try to recover such hidden objects from their indirect reflections, faint signal components that are traditionally treated as measurement noise. Existing NLOS approaches struggle to record these low-signal components outside the lab, and do not scale to large-scale outdoor scenes and high-speed motion, typical in automotive scenarios. Optical NLOS in particular is fundamentally limited by the quartic intensity falloff of diffuse indirect reflections. In this work, we depart from visible-wavelength approaches and demonstrate detection, classification, and tracking of hidden objects in large-scale dynamic scenes using a Doppler radar which can be foreseen as a low-cost serial product in the near future. To untangle noisy indirect and direct reflections, we learn from temporal sequences of Doppler velocity and position measurements, which we fuse in a joint NLOS detection and tracking network over time. We validate the approach on in-the-wild automotive scenes, including sequences of parked cars or house facades as indirect reflectors, and demonstrate low-cost, real-time NLOS in dynamic automotive environments.

A Benchmark for Lidar Sensors in Fog: Is Detection Breaking Down?

Dec 06, 2019

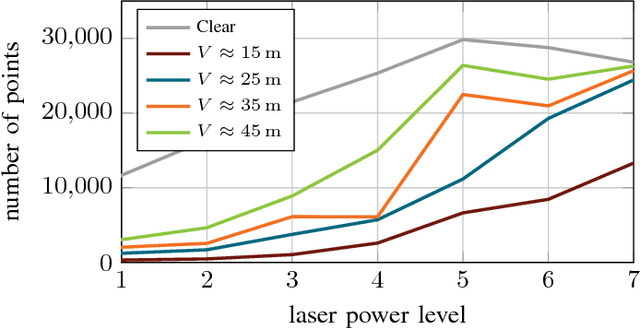

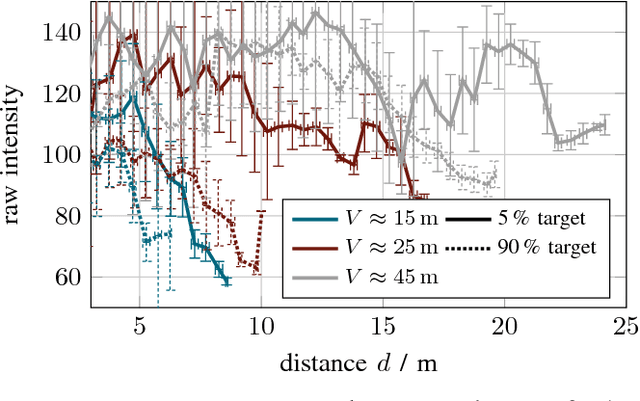

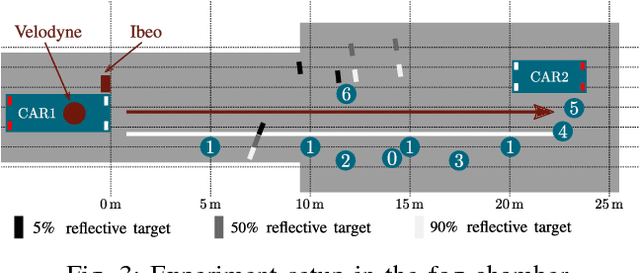

Abstract:Autonomous driving at level five does not only mean self-driving in the sunshine. Adverse weather is especially critical because fog, rain, and snow degrade the perception of the environment. In this work, current state-of-the-art light detection and ranging (lidar) sensors are tested in controlled conditions in a fog chamber. We present current problems and disturbance patterns for four different state-of-the-art lidar systems. Moreover, we investigate how tuning internal parameters can improve their performance in bad weather situations. This is of great importance because most state-of-the-art detection algorithms are based on undisturbed lidar data.

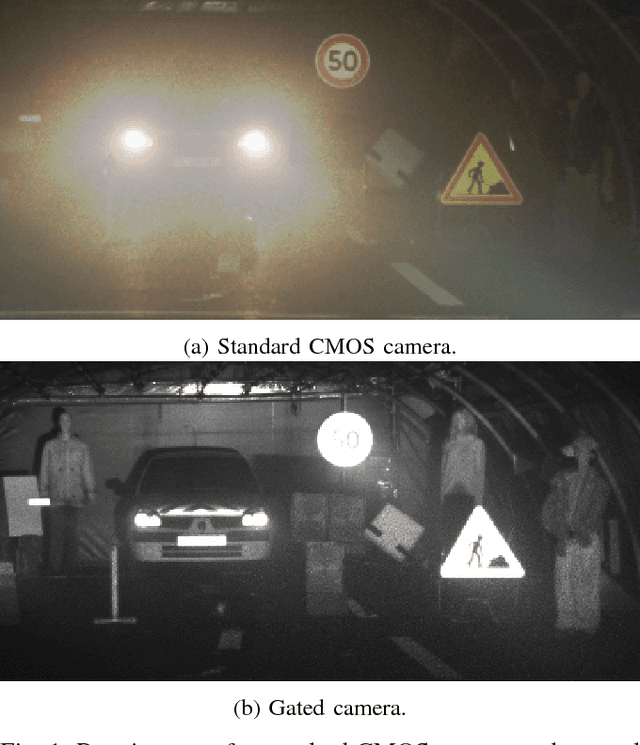

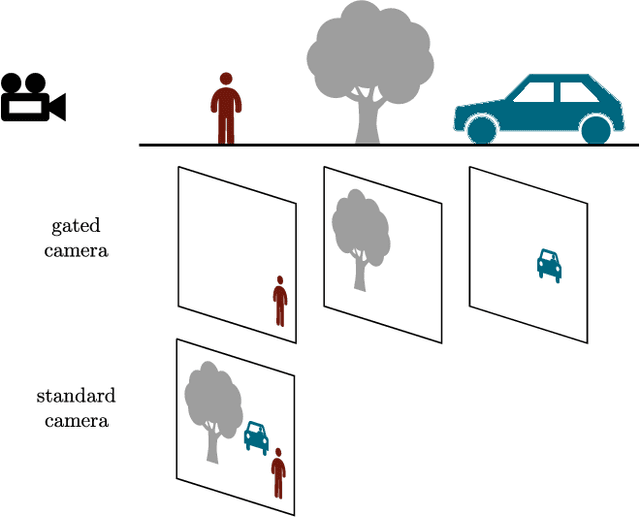

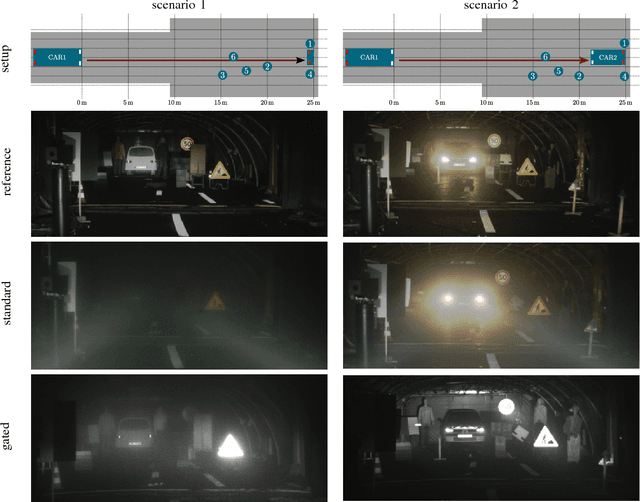

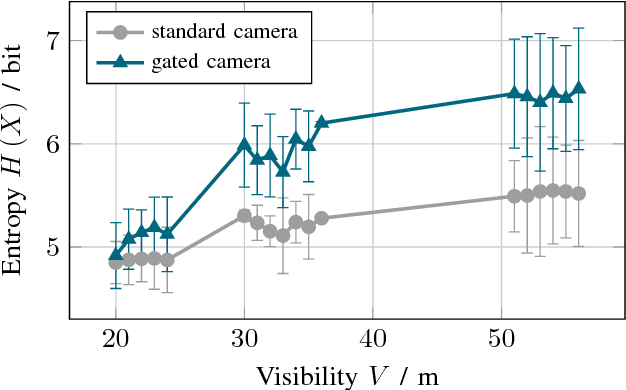

Benchmarking Image Sensors Under Adverse Weather Conditions for Autonomous Driving

Dec 06, 2019

Abstract:Adverse weather conditions are very challenging for autonomous driving because most of the state-of-the-art sensors stop working reliably under these conditions. In order to develop robust sensors and algorithms, tests with current sensors in defined weather conditions are crucial for determining the impact of bad weather for each sensor. This work describes a testing and evaluation methodology that helps to benchmark novel sensor technologies and compare them to state-of-the-art sensors. As an example, gated imaging is compared to standard imaging under foggy conditions. It is shown that gated imaging outperforms state-of-the-art standard passive imaging due to time-synchronized active illumination.
