Jürgen Dickmann

Landmark-based Vehicle Self-Localization Using Automotive Polarimetric Radars

Aug 11, 2024

Abstract:Automotive self-localization is an essential task for any automated driving function. This means that the vehicle has to reliably know its position and orientation with an accuracy of a few centimeters and degrees, respectively. This paper presents a radar-based approach to self-localization, which exploits fully polarimetric scattering information for robust landmark detection. The proposed method requires no input from sensors other than radar during localization for a given map. By association of landmark observations with map landmarks, the vehicle's position is inferred. Abstract point- and line-shaped landmarks allow for compact map sizes and, in combination with the factor graph formulation used, for an efficient implementation. Evaluation of extensive real-world experiments in diverse environments shows a promising overall localization performance of $0.12\,\text{m}$ RMS absolute trajectory and $0.43^\circ$ RMS heading error by leveraging the polarimetric information. A comparison of the performance of different levels of polarimetric information proves the advantage in challenging scenarios.
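To make the two reported figures concrete, the following is a minimal sketch of how an RMS position error and an RMS heading error could be computed from an estimated and a reference trajectory. The function names, the `(x, y, heading_deg)` pose layout, and the absence of any trajectory alignment step are assumptions made for illustration; the abstract does not describe the evaluation protocol actually used.

```python
import math


def rms(values):
    """Root mean square of a sequence of numbers."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def wrap_angle_deg(angle):
    """Wrap an angle difference into the interval [-180, 180) degrees."""
    return (angle + 180.0) % 360.0 - 180.0


def localization_errors(estimated, reference):
    """Return (RMS position error, RMS heading error in degrees).

    Both inputs are equally long lists of (x, y, heading_deg) poses that
    are assumed to be time-synchronized and in the same map frame.
    """
    position_errors = [
        math.hypot(xe - xr, ye - yr)
        for (xe, ye, _), (xr, yr, _) in zip(estimated, reference)
    ]
    heading_errors = [
        wrap_angle_deg(he - hr)
        for (_, _, he), (_, _, hr) in zip(estimated, reference)
    ]
    return rms(position_errors), rms(heading_errors)


if __name__ == "__main__":
    est = [(0.3, 0.4, 359.0), (1.0, 0.0, 10.0)]
    ref = [(0.0, 0.0, 1.0), (1.0, 0.0, 10.0)]
    print(localization_errors(est, ref))
```

Wrapping the heading difference matters near the 0°/360° boundary: in the example, 359° versus 1° counts as a 2° error rather than 358°.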

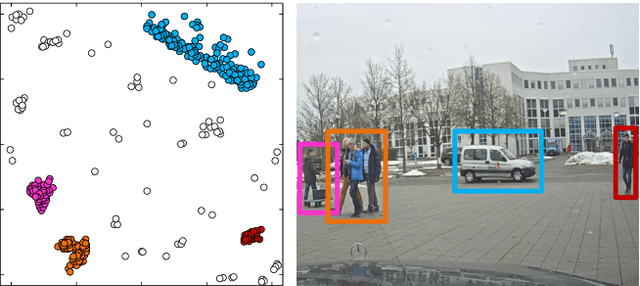

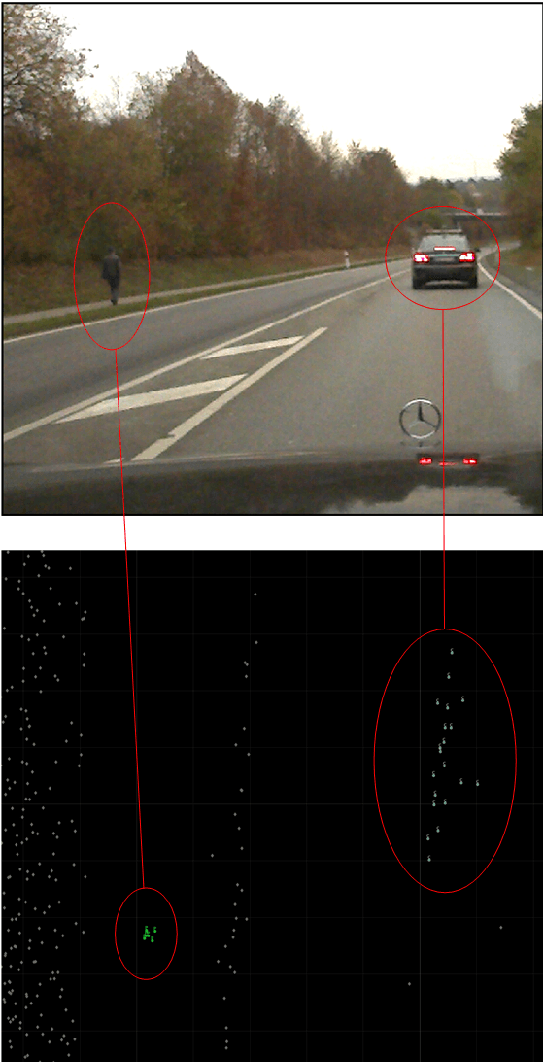

RadarScenes: A Real-World Radar Point Cloud Data Set for Automotive Applications

Apr 06, 2021

Abstract:A new automotive radar data set with measurements and point-wise annotations from more than four hours of driving is presented. Data provided by four series radar sensors mounted on one test vehicle were recorded, and the individual detections of dynamic objects were afterwards manually grouped into clusters and labeled. The purpose of this data set is to enable the development of novel (machine learning-based) radar perception algorithms with a focus on moving road users. Images of the recorded sequences were captured using a documentary camera. For the evaluation of future object detection and classification algorithms, proposals for score calculation are made so that researchers can evaluate their algorithms on a common basis. Additional information as well as download instructions can be found on the website of the data set: www.radar-scenes.com.

Motion Classification and Height Estimation of Pedestrians Using Sparse Radar Data

Mar 03, 2021

Abstract:A complete overview of the surrounding vehicle environment is important for driver assistance systems and highly autonomous driving. Fusing results of multiple sensor types like camera, radar and lidar is crucial for increasing the robustness. The detection and classification of objects like cars, bicycles or pedestrians has been analyzed in the past for many sensor types. Beyond that, it is also helpful to refine these classes and distinguish for example between different pedestrian types or activities. This task is usually performed on camera data, though recent developments are based on radar spectrograms. However, for most automotive radar systems, it is only possible to obtain radar targets instead of the original spectrograms. This work demonstrates that it is possible to estimate the body height of walking pedestrians using 2D radar targets. Furthermore, different pedestrian motion types are classified.

* 6 pages, 6 figures, 1 table

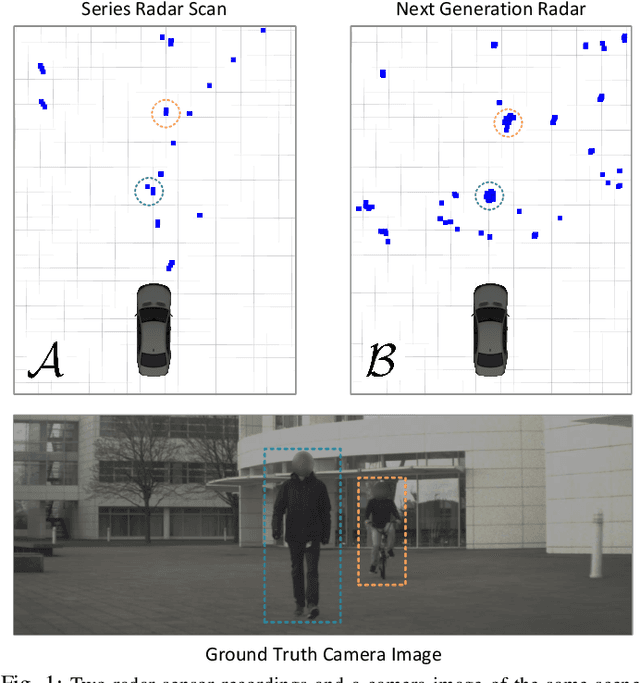

Off-the-shelf sensor vs. experimental radar -- How much resolution is necessary in automotive radar classification?

Jun 09, 2020

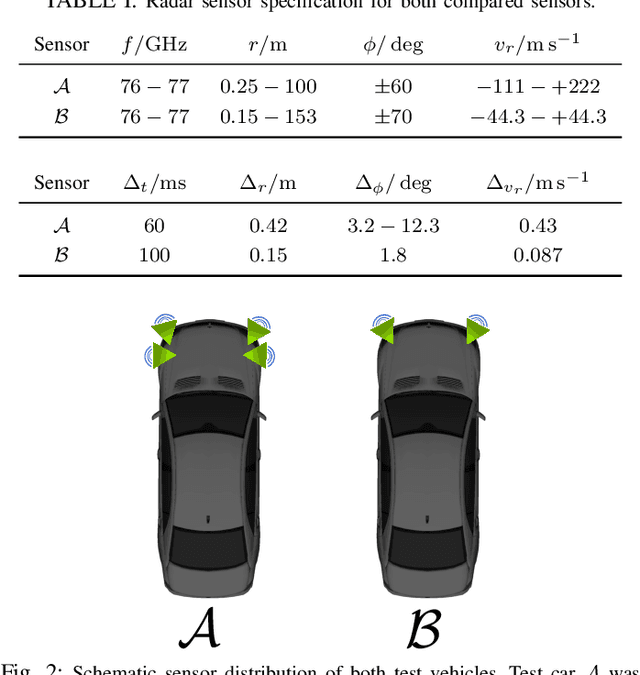

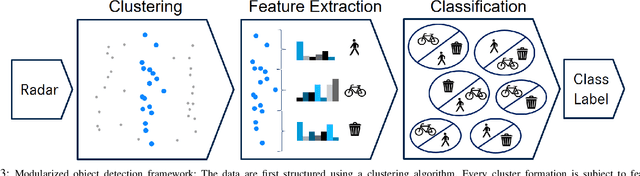

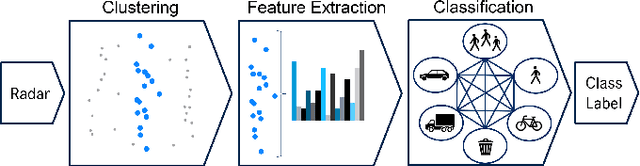

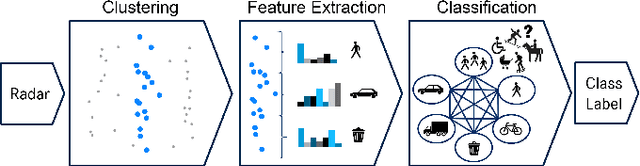

Abstract:Radar-based road user detection is an important topic in the context of autonomous driving applications. The resolution of conventional automotive radar sensors results in a sparse data representation which is tough to refine during subsequent signal processing. On the other hand, a new sensor generation is waiting in the wings for its application in this challenging field. In this article, two sensors of different radar generations are evaluated against each other. The evaluation criterion is the performance on moving road user object detection and classification tasks. To this end, two data sets originating from an off-the-shelf radar and a high-resolution next-generation radar are compared. Special attention is paid to how the two data sets are assembled in order to make them comparable. The utilized object detector consists of a clustering algorithm, a feature extraction module, and a recurrent neural network ensemble for classification. For the assessment, all components are evaluated both individually and, for the first time, as a whole. This makes it possible to indicate where in the pipeline overall performance improvements originate. Furthermore, the generalization capabilities of both data sets are evaluated and important comparison metrics for automotive radar object detection are discussed. Results show clear benefits of the next-generation radar. Interestingly, those benefits do not actually occur due to better performance at the classification stage, but rather because of the vast improvements at the clustering stage.

Seeing Around Street Corners: Non-Line-of-Sight Detection and Tracking In-the-Wild Using Doppler Radar

Dec 13, 2019

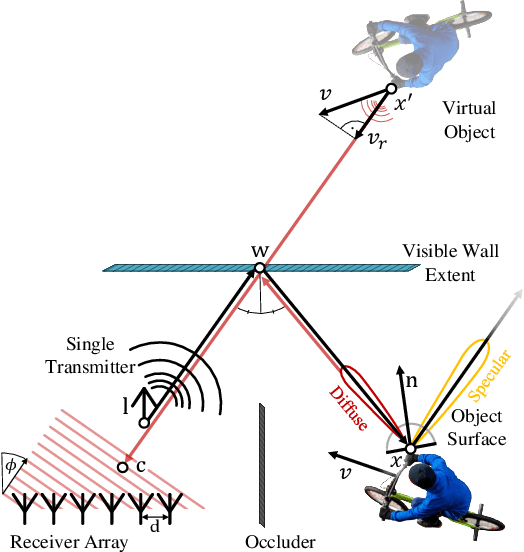

Abstract:Conventional sensor systems record information about directly visible objects, whereas occluded scene components are considered lost in the measurement process. Non-line-of-sight (NLOS) methods try to recover such hidden objects from their indirect reflections - faint signal components, traditionally treated as measurement noise. Existing NLOS approaches struggle to record these low-signal components outside the lab, and do not scale to large-scale outdoor scenes and high-speed motion, typical in automotive scenarios. Especially optical NLOS is fundamentally limited by the quartic intensity falloff of diffuse indirect reflections. In this work, we depart from visible-wavelength approaches and demonstrate detection, classification, and tracking of hidden objects in large-scale dynamic scenes using a Doppler radar which can be foreseen as a low-cost serial product in the near future. To untangle noisy indirect and direct reflections, we learn from temporal sequences of Doppler velocity and position measurements, which we fuse in a joint NLOS detection and tracking network over time. We validate the approach on in-the-wild automotive scenes, including sequences of parked cars or house facades as indirect reflectors, and demonstrate low-cost, real-time NLOS in dynamic automotive environments.

A Multi-Stage Clustering Framework for Automotive Radar Data

Jul 08, 2019

Abstract:Radar sensors provide a unique method for executing environmental perception tasks towards autonomous driving. Especially their capability to perform well in adverse weather conditions often makes them superior to other sensors such as cameras or lidar. Nevertheless, the high sparsity and low dimensionality of the commonly used detection data level is a major challenge for subsequent signal processing. Therefore, the data points are often merged in order to form larger entities from which more information can be gathered. The merging process is often implemented in the form of a clustering algorithm. This article describes a novel approach for first filtering out static background data before applying a two-stage clustering approach. The two-stage clustering follows the same paradigm as the idea for data association itself: first, clustering what ought to belong together in a low-dimensional parameter space; then, extracting additional features from the newly created clusters in order to perform a final clustering step. Parameters are optimized for filtering and both clustering steps. All techniques are assessed both individually and as a whole in order to demonstrate their effectiveness. Final results indicate clear benefits of the first two methods and also of the cluster merging process under specific circumstances.

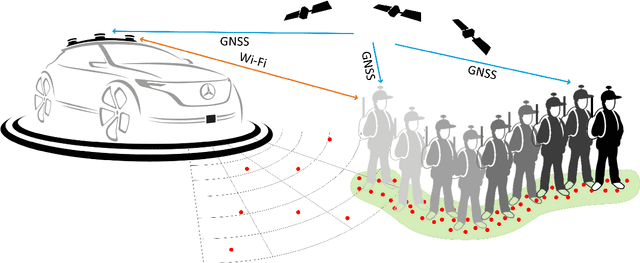

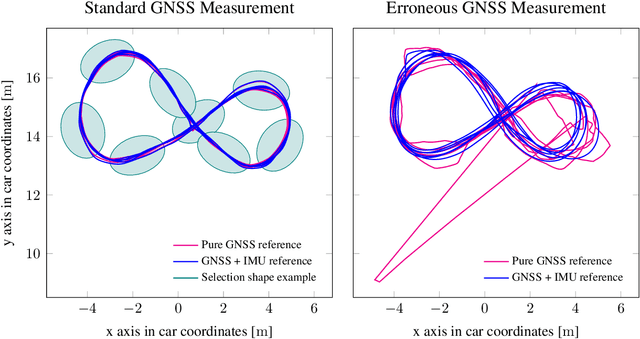

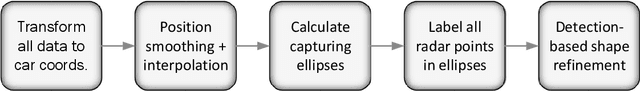

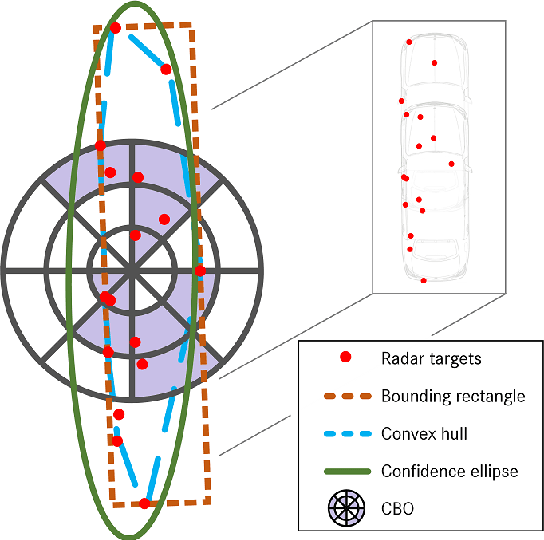

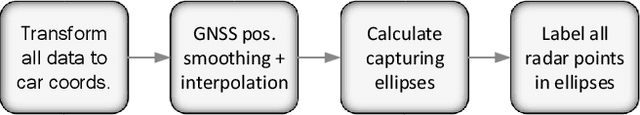

Automated Ground Truth Estimation For Automotive Radar Tracking Applications With Portable GNSS And IMU Devices

Jun 03, 2019

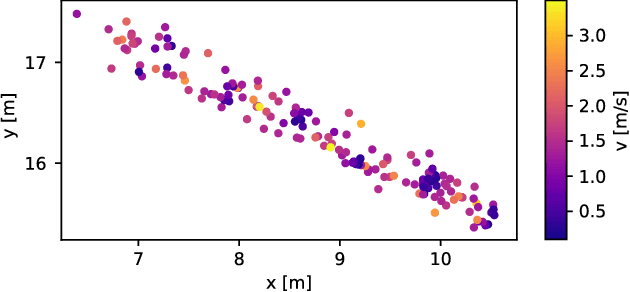

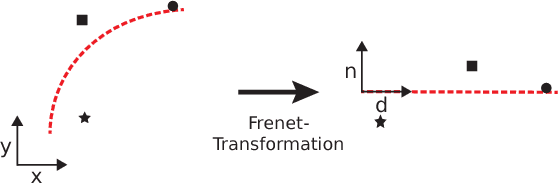

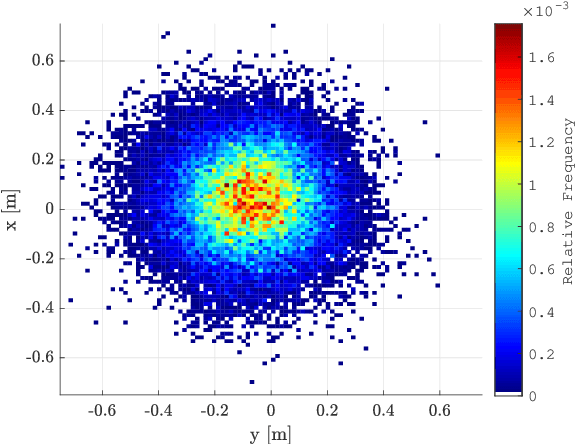

Abstract:Baseline generation for tracking applications is a difficult task when working with real-world radar data. Data sparsity usually only allows an indirect way of estimating the original tracks, as most objects' centers are not represented in the data. This article proposes an automated way of acquiring reference trajectories by using a highly accurate hand-held global navigation satellite system (GNSS). An embedded inertial measurement unit (IMU) is used for estimating orientation and motion behavior. This article contains two major contributions. First, a method for associating radar data to vulnerable road user (VRU) tracks is described. It is evaluated how accurately the system performs under different GNSS reception conditions and how carrying a reference system alters radar measurements. Second, the system is used to track pedestrians and cyclists over many measurement cycles in order to generate object-centered occupancy grid maps. The reference system allows real-world radar data distributions of VRUs to be generated much more precisely than with conventional methods. Hereby, an important step towards radar-based VRU tracking is accomplished.

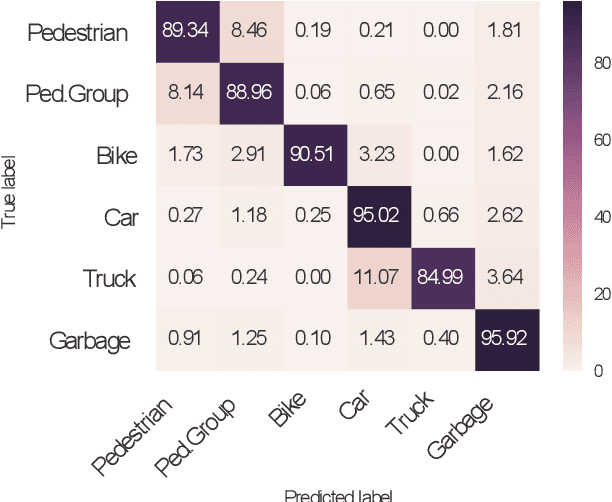

Radar-based Road User Classification and Novelty Detection with Recurrent Neural Network Ensembles

May 28, 2019

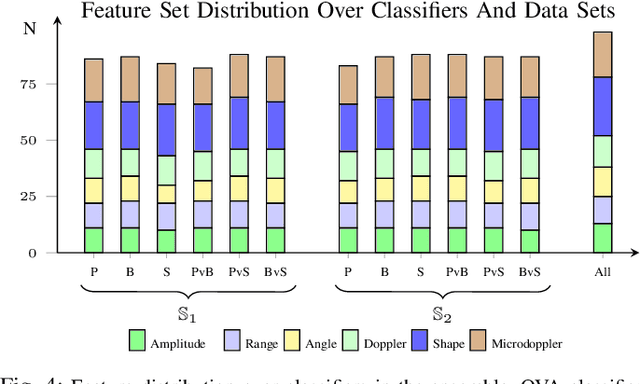

Abstract:Radar-based road user classification is an important yet still challenging task towards autonomous driving applications. The resolution of conventional automotive radar sensors results in a sparse data representation which is tough to recover by subsequent signal processing. In this article, classifier ensembles originating from a one-vs-one binarization paradigm are enriched by one-vs-all correction classifiers. They are utilized to efficiently classify individual traffic participants and also identify hidden object classes which have not been presented to the classifiers during training. For each classifier of the ensemble, an individual feature set is determined from a total set of 98 features. Thereby, the overall classification performance can be improved when compared to previous methods and, additionally, novel classes can be identified much more accurately. Furthermore, the proposed structure provides new insights into the importance of features for the recognition of individual classes, which is crucial for the development of new algorithms and sensor requirements.
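As a rough illustration of how one-vs-one votes might be combined with one-vs-all correction scores, the sketch below weights each pairwise vote by the one-vs-all score of the class it favors and flags a sample as novel when no one-vs-all classifier is confident. The weighting rule, the `novelty_threshold` parameter, and all names are assumptions chosen for illustration; the abstract does not specify the combination scheme, and this is not the paper's implementation.

```python
from itertools import combinations


def combine_ovo_ova(classes, ovo_prob, ova_prob, novelty_threshold=0.5):
    """Combine pairwise (one-vs-one) and one-vs-all classifier outputs.

    ovo_prob[(a, b)] is the probability of class a given that the sample
    is either a or b, keyed in the order the classes appear in `classes`.
    ova_prob[c] is the probability of class c against all other classes.
    Returns (label, scores); label is "novel" if no class is plausible.
    """
    scores = {c: 0.0 for c in classes}
    for a, b in combinations(classes, 2):
        p_a = ovo_prob[(a, b)]
        # A pairwise vote only counts as much as the one-vs-all
        # classifier believes the sample belongs to that class at all.
        scores[a] += p_a * ova_prob[a]
        scores[b] += (1.0 - p_a) * ova_prob[b]

    # Hypothetical novelty rule: reject if every one-vs-all score is low.
    if max(ova_prob.values()) < novelty_threshold:
        return "novel", scores
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    classes = ["car", "pedestrian", "cyclist"]
    ovo = {("car", "pedestrian"): 0.9,
           ("car", "cyclist"): 0.8,
           ("pedestrian", "cyclist"): 0.4}
    ova = {"car": 0.9, "pedestrian": 0.1, "cyclist": 0.2}
    print(combine_ovo_ova(classes, ovo, ova)[0])
```

The point of the correction step is that a pairwise classifier must pick one of its two classes even when the sample belongs to neither; the one-vs-all scores damp such uninformed votes.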

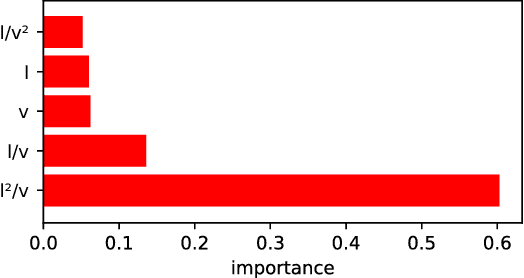

Radar-based Feature Design and Multiclass Classification for Road User Recognition

May 27, 2019

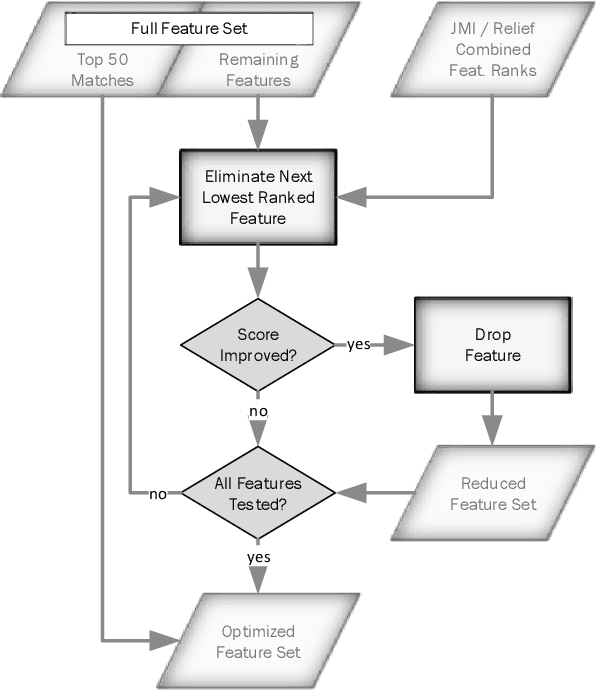

Abstract:The classification of individual traffic participants is a complex task, especially for challenging scenarios with multiple road users or under bad weather conditions. Radar sensors provide a way of measuring such scenes that is orthogonal to well-established camera systems. In order to obtain accurate classification results, 50 different features are extracted from the measurement data and tested on their performance. From these features, a suitable subset is chosen and passed to random forest and long short-term memory (LSTM) classifiers to obtain class predictions for the radar input. Moreover, it is shown why data imbalance is an inherent problem in automotive radar classification when the dataset is not sufficiently large. To overcome this issue, classifier binarization is used among other techniques in order to better account for underrepresented classes. A new method to couple the resulting probabilities is proposed and compared to others with great success. Final results show substantial improvements when compared to ordinary multiclass classification.

* 8 pages, 6 figures

Automated Ground Truth Estimation of Vulnerable Road Users in Automotive Radar Data Using GNSS

May 27, 2019

Abstract:Annotating automotive radar data is a difficult task. This article presents an automated way of acquiring data labels which uses a highly accurate and portable global navigation satellite system (GNSS). The proposed system is discussed alongside a review of other label acquisition techniques and a problem description of manual data annotation. The article concludes with a systematic comparison of conventional hand labeling and automatic data acquisition. The results show clear advantages of the proposed method without a relevant loss in labeling accuracy. Minor changes can be observed in the measured radar data, but the bias thus introduced by the GNSS reference is clearly outweighed by the indisputable time savings. Besides data annotation, the proposed system can also provide a ground truth for validating object tracking or other automated driving system applications.

* 5 pages, 5 figures
