Markus Horn

User Feedback and Sample Weighting for Ill-Conditioned Hand-Eye Calibration

Aug 11, 2023

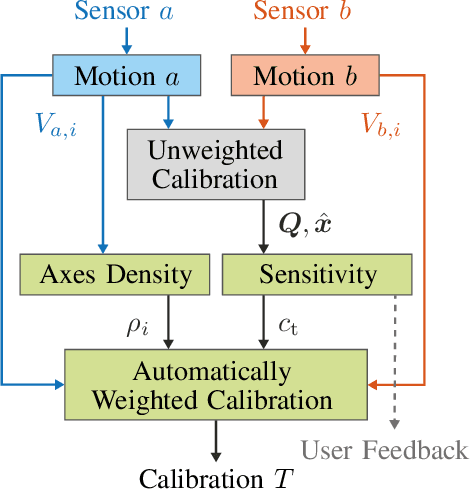

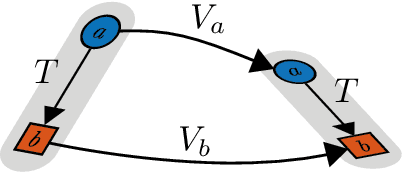

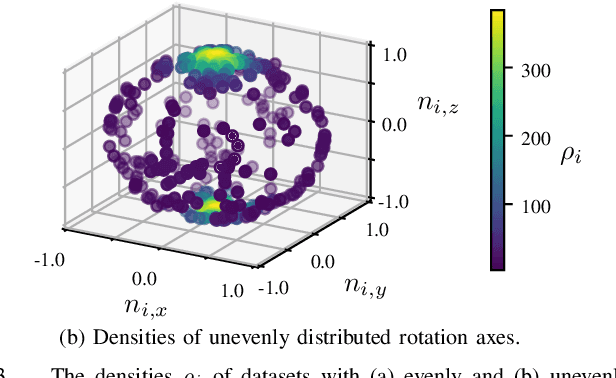

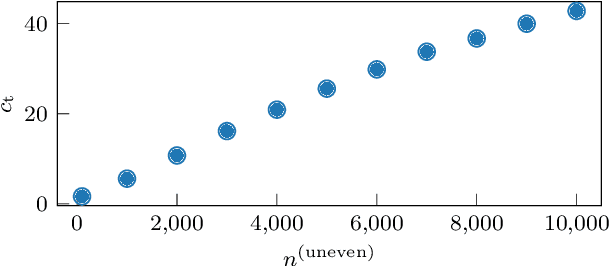

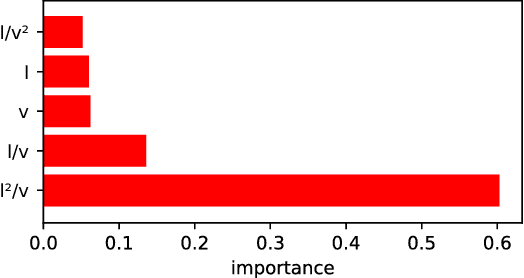

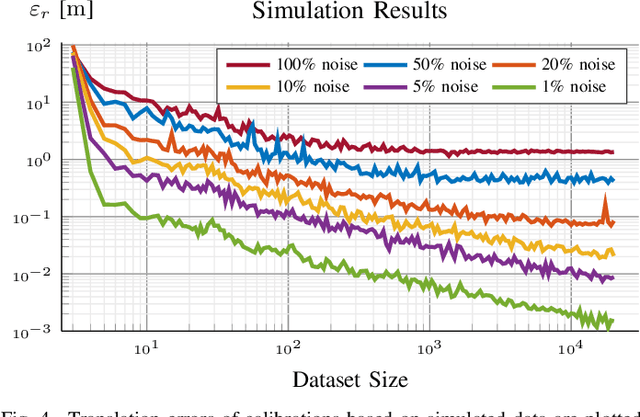

Abstract: Hand-eye calibration is an important and extensively researched method for calibrating rigidly coupled sensors, solely based on estimates of their motion. Due to the geometric structure of this problem, at least two motion estimates with non-parallel rotation axes are required for a unique solution. If the majority of rotation axes are almost parallel, the resulting optimization problem is ill-conditioned. In this paper, we propose an approach to automatically weight the motion samples of such an ill-conditioned optimization problem for improving the conditioning. The sample weights are chosen in relation to the local density of all available rotation axes. Furthermore, we present an approach for estimating the sensitivity and conditioning of the cost function, separated into the translation and the rotation part. This information can be employed as user feedback when recording the calibration data to prevent ill-conditioning in advance. We evaluate and compare our approach on artificially augmented data from the KITTI odometry dataset.
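To make the idea of density-based sample weighting concrete, here is a minimal sketch. It is not the weighting scheme from the paper, which the abstract does not specify; it assumes a Gaussian kernel over the angle between rotation axes and weights each sample by the inverse of the resulting density. The function name `axis_density_weights` and the `bandwidth_deg` parameter are hypothetical.

```python
import math


def axis_density_weights(axes, bandwidth_deg=10.0):
    """Return normalized weights inversely proportional to the local
    density of rotation axes (assumed Gaussian kernel, not the paper's scheme)."""
    # Normalize every axis to unit length.
    unit = []
    for x, y, z in axes:
        n = math.sqrt(x * x + y * y + z * z)
        unit.append((x / n, y / n, z / n))

    bandwidth = math.radians(bandwidth_deg)
    raw = []
    for a in unit:
        density = 0.0
        for b in unit:
            # |cos| treats an axis and its negation as parallel.
            cos_abs = min(1.0, abs(a[0] * b[0] + a[1] * b[1] + a[2] * b[2]))
            angle = math.acos(cos_abs)
            # Each sample contributes to its own density, so density >= ~1.
            density += math.exp(-0.5 * (angle / bandwidth) ** 2)
        raw.append(1.0 / density)

    total = sum(raw)
    return [w / total for w in raw]


# Three nearly parallel axes (typical for planar driving) and one distinct axis.
weights = axis_density_weights(
    [(0.0, 0.0, 1.0), (0.0, 0.01, 1.0), (0.0, -0.01, 1.0), (1.0, 0.0, 0.0)]
)
```

In this example the three almost parallel axes share roughly the weight that the single distinct axis receives on its own, so the rare rotation direction is no longer dominated by the frequent one.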

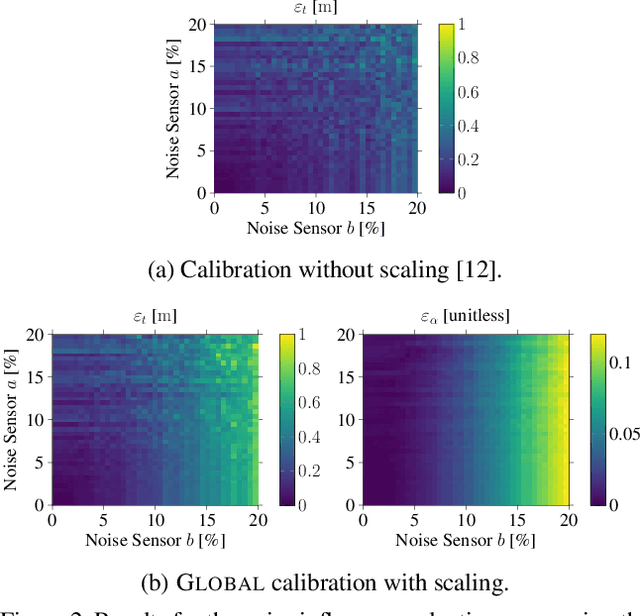

Extrinsic Infrastructure Calibration Using the Hand-Eye Robot-World Formulation

May 02, 2023

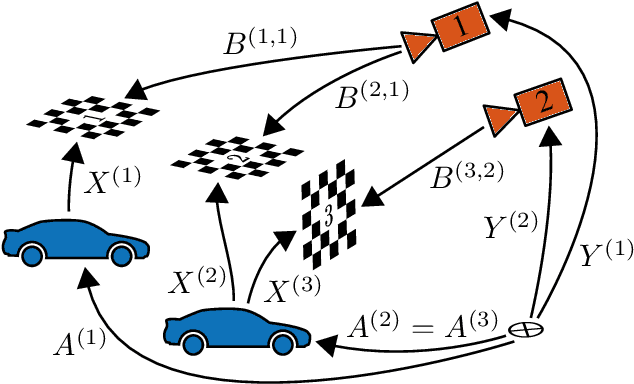

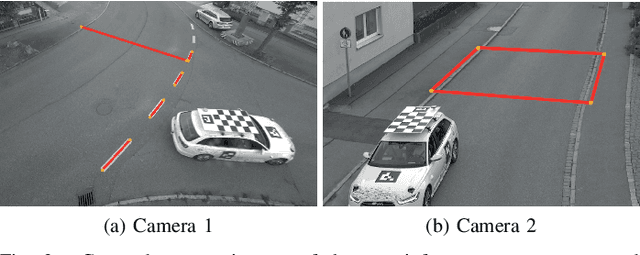

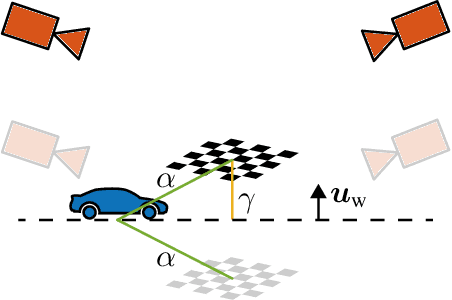

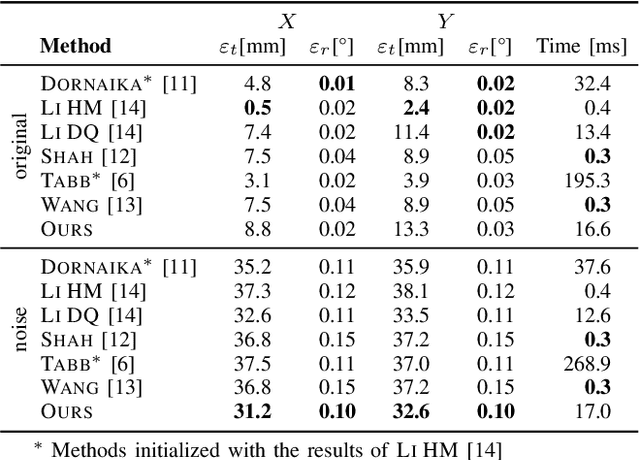

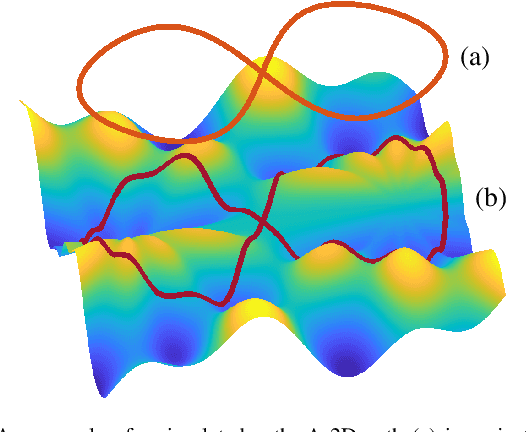

Abstract: We propose a certifiably globally optimal approach for solving the hand-eye robot-world problem supporting multiple sensors and targets at once. Further, we leverage this formulation for estimating a geo-referenced calibration of infrastructure sensors. Since vehicle motion recorded by infrastructure sensors is mostly planar, obtaining a unique solution for the respective hand-eye robot-world problem is infeasible without incorporating additional knowledge. Hence, we extend our proposed method to include a priori knowledge, i.e., the translation norm of calibration targets, to yield a unique solution. Our approach achieves state-of-the-art results on simulated and real-world data. Especially on real-world intersection data, our approach utilizing the translation norm is the only method providing accurate results.

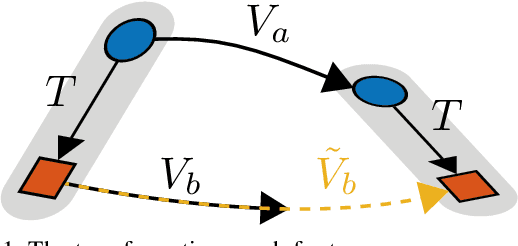

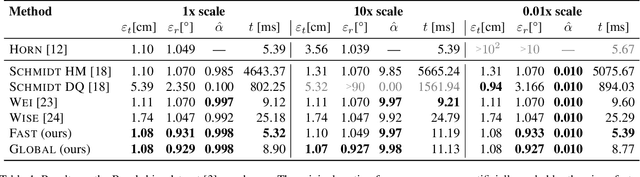

Globally Optimal Multi-Scale Monocular Hand-Eye Calibration Using Dual Quaternions

Jan 12, 2022

Abstract: In this work, we present an approach for monocular hand-eye calibration from per-sensor ego-motion based on dual quaternions. Due to non-metrically scaled translations of monocular odometry, a scaling factor has to be estimated in addition to the rotation and translation calibration. For this, we derive a quadratically constrained quadratic program that allows a combined estimation of all extrinsic calibration parameters. Using dual quaternions leads to low run-times due to their compact representation. Our problem formulation further allows estimating multiple scalings simultaneously for different sequences of the same sensor setup. Based on our problem formulation, we derive both a fast local and a globally optimal solving approach. Finally, our algorithms are evaluated and compared to state-of-the-art approaches on simulated and real-world data, e.g., the EuRoC MAV dataset.

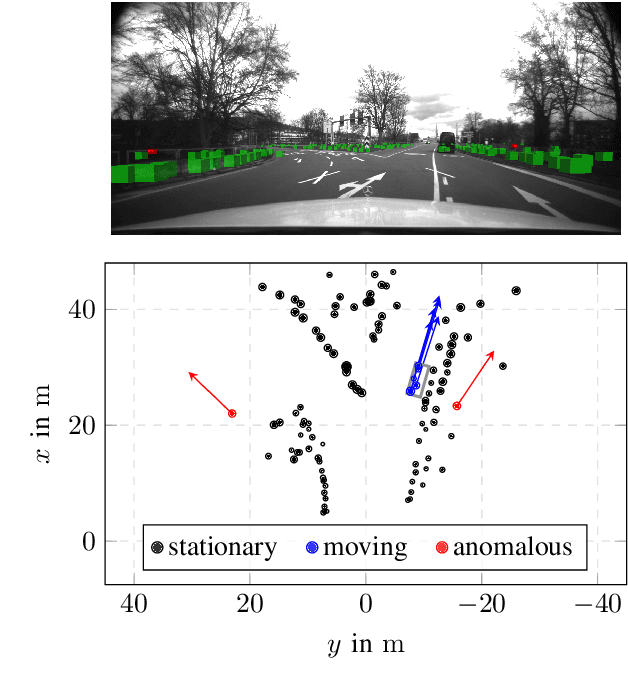

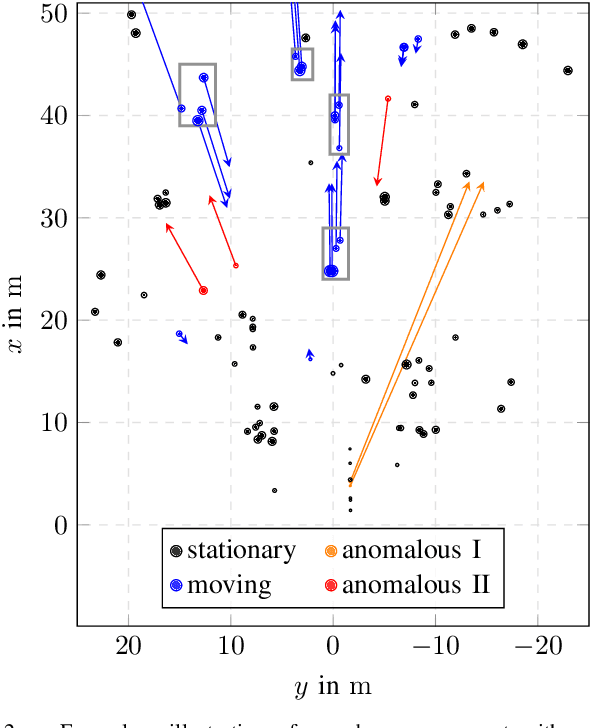

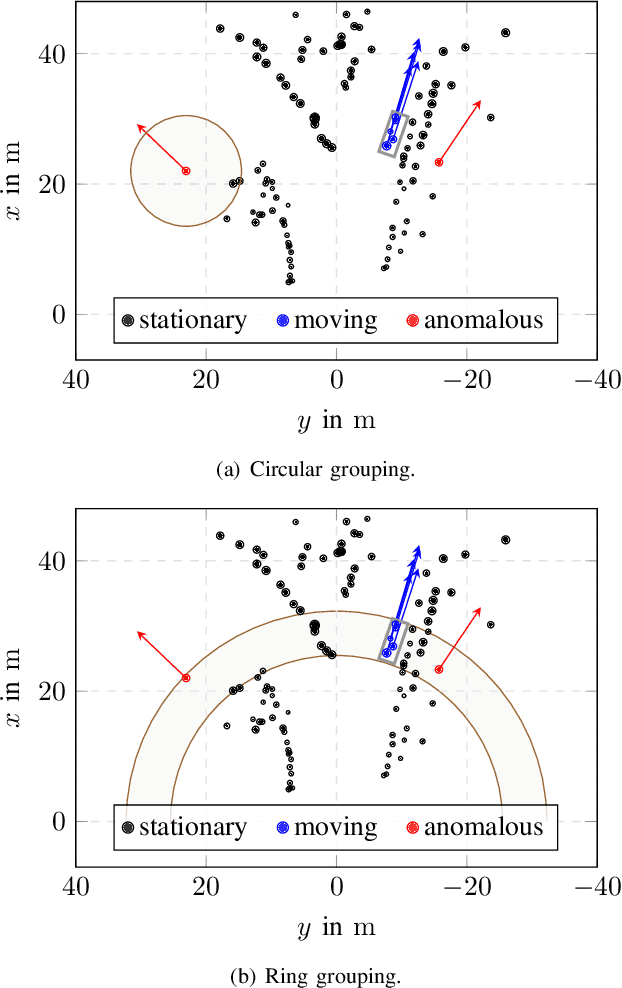

Anomaly Detection in Radar Data Using PointNets

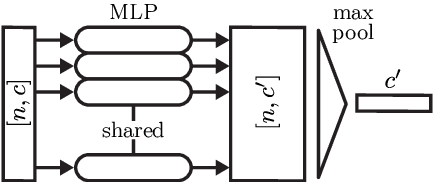

Sep 20, 2021

Abstract: For autonomous driving, radar is an important sensor type. On the one hand, radar offers a direct measurement of the radial velocity of targets in the environment. On the other hand, in literature, radar sensors are known for their robustness against several kinds of adverse weather conditions. However, on the downside, radar is susceptible to ghost targets or clutter, which can have several causes, e.g., reflective surfaces in the environment. Ghost targets, for instance, can result in erroneous object detections. Therefore, it is desirable to identify anomalous targets as early as possible in radar data. In this work, we present an approach based on PointNets to detect anomalous radar targets. Modifying the PointNet architecture driven by our task, we developed a novel grouping variant which contributes to a multi-form grouping module. Our method is evaluated on a real-world dataset in urban scenarios and shows promising results for the detection of anomalous radar targets.

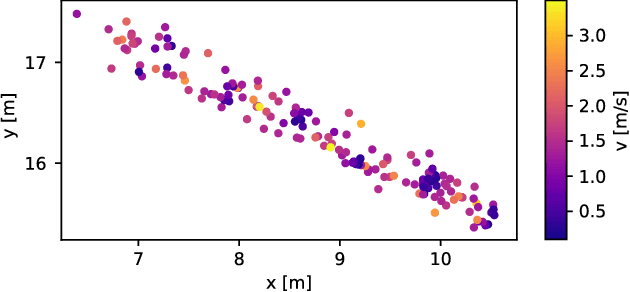

Motion Classification and Height Estimation of Pedestrians Using Sparse Radar Data

Mar 03, 2021

Abstract: A complete overview of the surrounding vehicle environment is important for driver assistance systems and highly autonomous driving. Fusing results of multiple sensor types like camera, radar and lidar is crucial for increasing the robustness. The detection and classification of objects like cars, bicycles or pedestrians has been analyzed in the past for many sensor types. Beyond that, it is also helpful to refine these classes and distinguish for example between different pedestrian types or activities. This task is usually performed on camera data, though recent developments are based on radar spectrograms. However, for most automotive radar systems, it is only possible to obtain radar targets instead of the original spectrograms. This work demonstrates that it is possible to estimate the body height of walking pedestrians using 2D radar targets. Furthermore, different pedestrian motion types are classified.

* 6 pages, 6 figures, 1 table

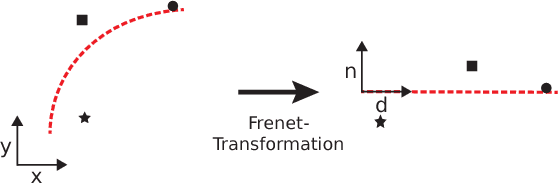

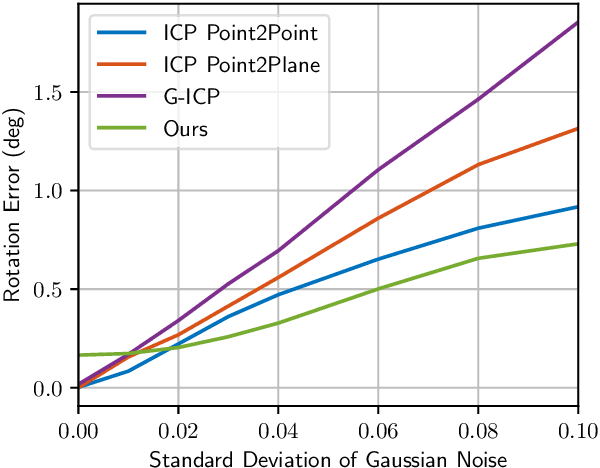

Online Extrinsic Calibration based on Per-Sensor Ego-Motion Using Dual Quaternions

Jan 27, 2021

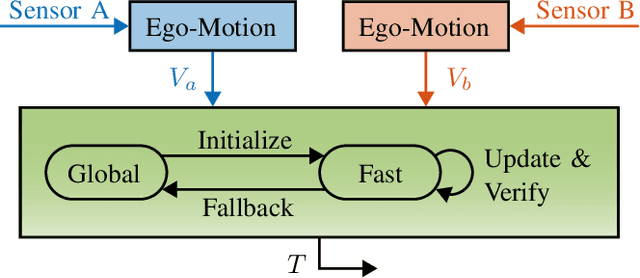

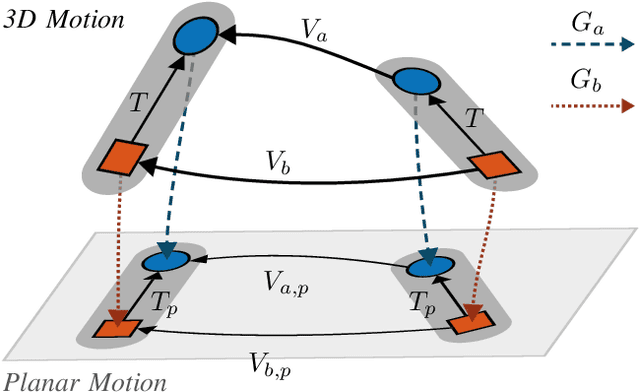

Abstract: In this work, we propose an approach for extrinsic sensor calibration from per-sensor ego-motion estimates. Our problem formulation is based on dual quaternions, enabling two different online capable solving approaches. We provide a certifiable globally optimal and a fast local approach along with a method to verify the globality of the local approach. Additionally, means for integrating prior knowledge, for example, a common ground plane for planar sensor motion, are described. Our algorithms are evaluated on simulated data and on a publicly available dataset containing RGB-D camera images. Further, our online calibration approach is tested on the KITTI odometry dataset, which provides data of a lidar and two stereo camera systems mounted on a vehicle. Our evaluation confirms the short run time, state-of-the-art accuracy, as well as online capability of our approach while retaining the global optimality of the solution at any time.
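As background for the dual-quaternion formulation mentioned above, the following sketch checks the standard hand-eye constraint A X = X B with unit dual quaternions. It is not the solver from the paper, only an illustration of the constraint that such solvers minimize. All function names are hypothetical, quaternions are stored as `(w, x, y, z)` tuples, and a dual quaternion is a `(real, dual)` pair with `dual = 0.5 * (0, t) * real`.

```python
import math


def qmul(p, q):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def qconj(q):
    """Quaternion conjugate."""
    return (q[0], -q[1], -q[2], -q[3])


def dq_from_pose(axis, angle, t):
    """Unit dual quaternion for a rotation about a unit axis plus translation t."""
    s = math.sin(angle / 2.0)
    real = (math.cos(angle / 2.0), axis[0] * s, axis[1] * s, axis[2] * s)
    dual = tuple(0.5 * c for c in qmul((0.0, *t), real))
    return real, dual


def dq_mul(a, b):
    """Dual quaternion product: (ar * br, ar * bd + ad * br)."""
    real = qmul(a[0], b[0])
    dual = tuple(u + v for u, v in zip(qmul(a[0], b[1]), qmul(a[1], b[0])))
    return real, dual


def dq_conj(a):
    """Quaternion conjugate of both parts; the inverse of a unit dual quaternion."""
    return qconj(a[0]), qconj(a[1])


def hand_eye_residual(a, b, x):
    """Largest absolute coefficient of A*X - X*B."""
    lhs, rhs = dq_mul(a, x), dq_mul(x, b)
    return max(abs(u - v) for pl, pr in zip(lhs, rhs) for u, v in zip(pl, pr))


# Synthetic example: choose a calibration X and a motion B, then derive A = X B X^-1.
x_true = dq_from_pose((0.0, 0.0, 1.0), 0.3, (0.5, -0.2, 1.0))
motion_b = dq_from_pose((1.0, 0.0, 0.0), 0.7, (1.0, 0.0, 0.2))
motion_a = dq_mul(dq_mul(x_true, motion_b), dq_conj(x_true))

x_wrong = dq_from_pose((0.0, 1.0, 0.0), 0.3, (0.0, 0.0, 0.0))
res_true = hand_eye_residual(motion_a, motion_b, x_true)
res_wrong = hand_eye_residual(motion_a, motion_b, x_wrong)
```

The residual vanishes up to floating-point error for the true calibration and is clearly nonzero for the wrong one. A calibration method would stack such residuals over many motion pairs and minimize them over X.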

DeepCLR: Correspondence-Less Architecture for Deep End-to-End Point Cloud Registration

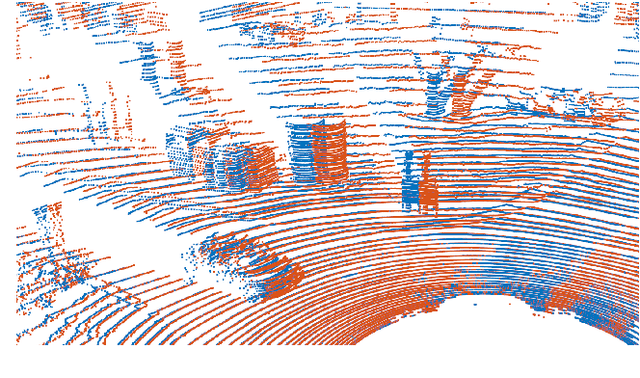

Jul 22, 2020

Abstract: This work addresses the problem of point cloud registration using deep neural networks. We propose an approach to predict the alignment between two point clouds with overlapping data content, but displaced origins. Such point clouds originate, for example, from consecutive measurements of a LiDAR mounted on a moving platform. The main difficulty in deep registration of raw point clouds is the fusion of template and source point cloud. Our proposed architecture applies flow embedding to tackle this problem, which generates features that describe the motion of each template point. These features are then used to predict the alignment in an end-to-end fashion without extracting explicit point correspondences between both input clouds. We rely on the KITTI odometry and ModelNet40 datasets for evaluating our method on various point distributions. Our approach achieves state-of-the-art accuracy and the lowest run-time of the compared methods.

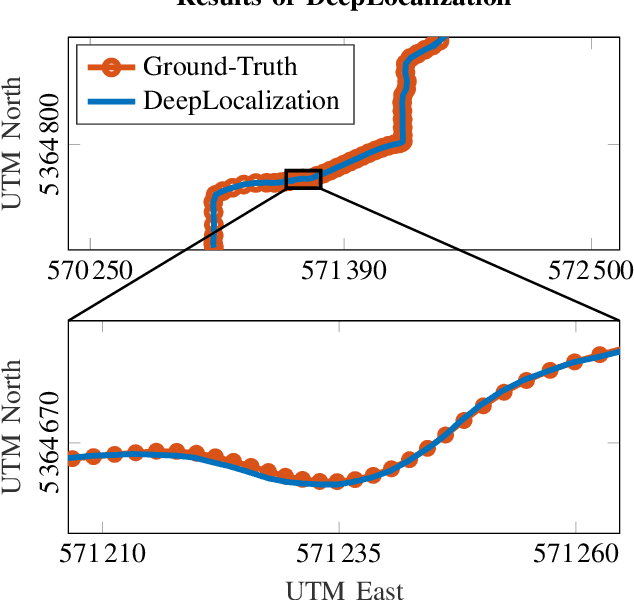

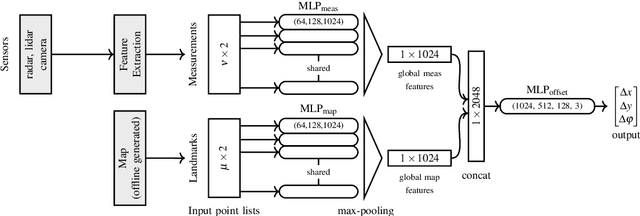

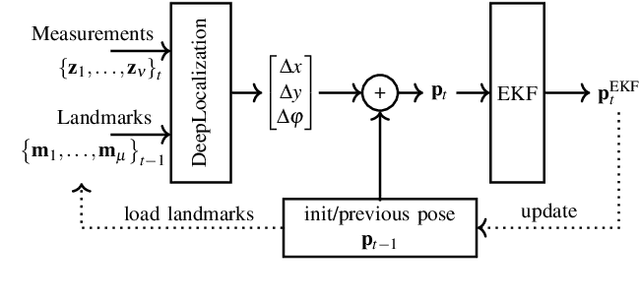

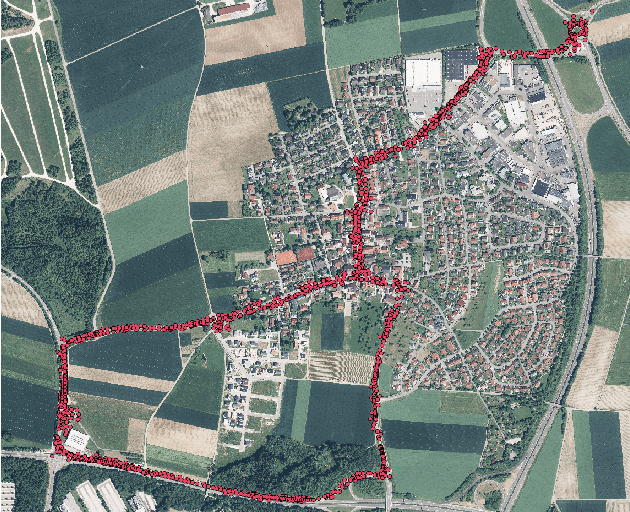

DeepLocalization: Landmark-based Self-Localization with Deep Neural Networks

Apr 18, 2019

Abstract: We address the problem of landmark-based vehicle self-localization by relying on multi-modal sensory information. Our goal is to determine the autonomous vehicle's pose based on landmark measurements and map landmarks. The map is built offline by extracting landmarks from the vehicle's field of view, while the measurements are collected in the same way during inference. To map the measurements and map landmarks to the vehicle's pose, we propose DeepLocalization, a deep neural network that copes with dynamic input. Our network is robust to missing landmarks that occur due to the dynamic environment and handles unordered and adaptive input. In real-world experiments, we evaluate two inference approaches to show that DeepLocalization can be combined with GPS sensors and is complementary to filtering approaches such as an extended Kalman filter. We show that our approach achieves state-of-the-art accuracy and is about ten times faster than the related work.
