Michael Buchholz

Toward Fully Autonomous Driving: AI, Challenges, Opportunities, and Needs

Jan 30, 2026

Abstract:Automated driving (AD) is promising, but the transition to fully autonomous driving is, among other things, subject to the real, ever-changing open world and the resulting challenges. However, research in the field of AD demonstrates the ability of artificial intelligence (AI) to outperform classical approaches, handle higher complexities, and reach a new level of autonomy. At the same time, the use of AI raises further questions of safety and transferability. To identify the challenges and opportunities arising from AI concerning autonomous driving functionalities, we have analyzed the current state of AD, outlined limitations, and identified foreseeable technological possibilities. Thereby, various further challenges are examined in the context of prospective developments. In this way, this article reconsiders fully autonomous driving with respect to advancements in the field of AI and carves out the respective needs and resulting research questions.

* Published in IEEE Access, 29 January 2026

Generalized Coordination of Partially Cooperative Urban Traffic

May 27, 2025

Abstract:Vehicle-to-anything connectivity, especially for autonomous vehicles, promises to increase passenger comfort and safety of road traffic, for example, by sharing perception and driving intention. Cooperative maneuver planning uses connectivity to enhance traffic efficiency, which has, so far, been mainly considered for automated intersection management. In this article, we present a novel cooperative maneuver planning approach that is generalized to various situations found in urban traffic. Our framework handles challenging mixed traffic, that is, traffic comprising both cooperative connected vehicles and other vehicles at any distribution. Our solution is based on an optimization approach accompanied by an efficient heuristic method for high-load scenarios. We extensively evaluate the proposed planner in a distinctly realistic simulation framework and show significant efficiency gains already at a cooperation rate of 40%. Traffic throughput increases, while the average waiting time and the number of stopped vehicles are reduced, without impacting traffic safety.

Dynamic Objective MPC for Motion Planning of Seamless Docking Maneuvers

Apr 04, 2025

Abstract:Automated vehicles and logistics robots must often position themselves in narrow environments with high precision in front of a specific target, such as a package or their charging station. Often, these docking scenarios are solved in two steps: path following and rough positioning, followed by a high-precision motion planning algorithm. This can generate suboptimal trajectories caused by bad positioning in the first phase and, therefore, prolong the time it takes to reach the goal. In this work, we propose a unified approach based on Model Predictive Control (MPC) that combines the advantages of Model Predictive Contouring Control (MPCC) with a Cartesian MPC to reach a specific goal pose. The paper's main contributions are the adaptation of the dynamic weight allocation method to reach path ends and goal poses inside driving corridors, and the development of the so-called dynamic objective MPC. The latter is an improvement of the dynamic weight allocation method that can inherently switch, depending on the state, from an MPCC to a Cartesian MPC. It thereby solves the path-following problem and the high-precision positioning task seamlessly in one algorithm, independently of the location of the goal pose. This leads to foresighted, feasible, and safe motion plans, which can decrease the mission time and result in smoother trajectories.

The ADUULM-360 Dataset -- A Multi-Modal Dataset for Depth Estimation in Adverse Weather

Nov 18, 2024

Abstract:Depth estimation is an essential task toward full scene understanding since it allows the projection of rich semantic information captured by cameras into 3D space. While the field has gained much attention recently, datasets for depth estimation lack scene diversity or sensor modalities. This work presents the ADUULM-360 dataset, a novel multi-modal dataset for depth estimation. The ADUULM-360 dataset covers all established autonomous driving sensor modalities: cameras, lidars, and radars. It comprises a frontal-facing stereo setup, six surround cameras covering the full 360 degrees, two high-resolution long-range lidar sensors, and five long-range radar sensors. It is also the first depth estimation dataset that contains diverse scenes in good and adverse weather conditions. We conduct extensive experiments using state-of-the-art self-supervised depth estimation methods under different training tasks, such as monocular training, stereo training, and full surround training. Discussing these results, we demonstrate common limitations of state-of-the-art methods, especially in adverse weather conditions, which hopefully will inspire future research in this area. Our dataset, development kit, and trained baselines are available at https://github.com/uulm-mrm/aduulm_360_dataset.

MGNiceNet: Unified Monocular Geometric Scene Understanding

Nov 18, 2024

Abstract:Monocular geometric scene understanding combines panoptic segmentation and self-supervised depth estimation, focusing on real-time application in autonomous vehicles. We introduce MGNiceNet, a unified approach that uses a linked kernel formulation for panoptic segmentation and self-supervised depth estimation. MGNiceNet is based on the state-of-the-art real-time panoptic segmentation method RT-K-Net and extends the architecture to cover both panoptic segmentation and self-supervised monocular depth estimation. To this end, we introduce a tightly coupled self-supervised depth estimation predictor that explicitly uses information from the panoptic path for depth prediction. Furthermore, we introduce a panoptic-guided motion masking method to improve depth estimation without relying on video panoptic segmentation annotations. We evaluate our method on two popular autonomous driving datasets, Cityscapes and KITTI. Our model shows state-of-the-art results compared to other real-time methods and closes the gap to computationally more demanding methods. Source code and trained models are available at https://github.com/markusschoen/MGNiceNet.

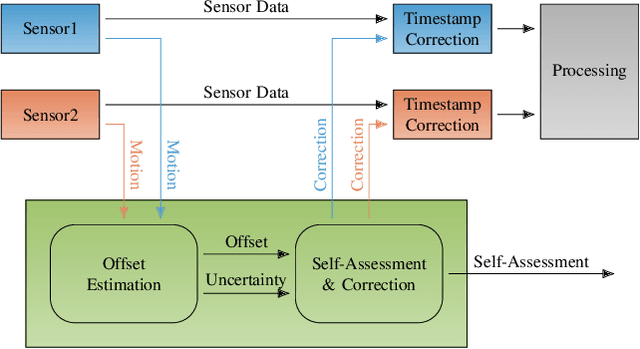

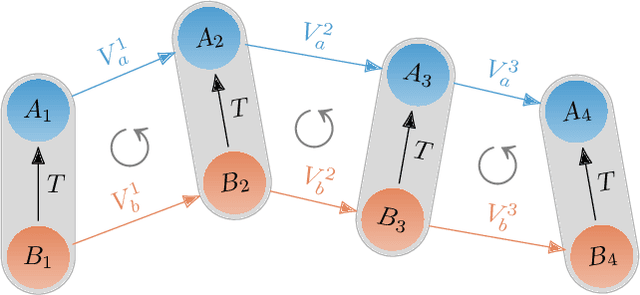

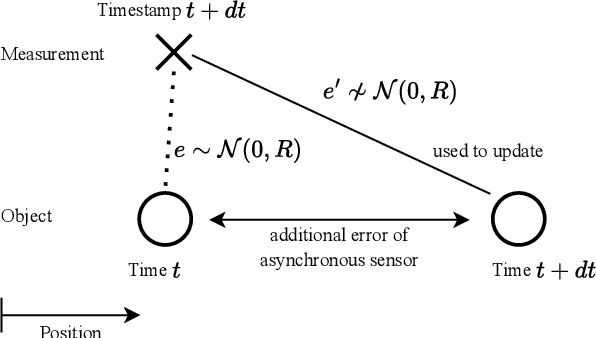

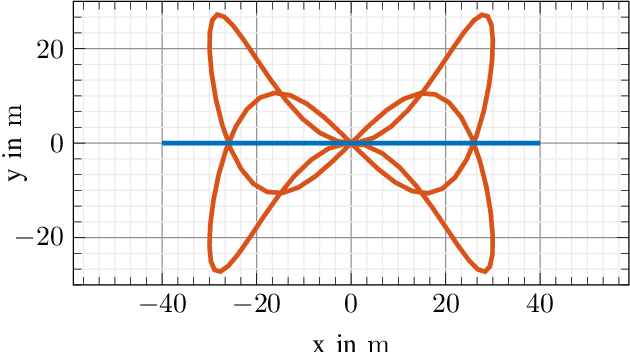

Self-Assessment and Correction of Sensor Synchronization

Sep 30, 2024

Abstract:We propose an approach to assess the synchronization of rigidly mounted sensors based on their rotational motion. Using function similarity measures combined with a sliding window approach, our approach is capable of estimating time-varying time offsets. Further, the estimated offset allows the correction of erroneously assigned time stamps on measurements. This mitigates the effect of synchronization issues on subsequent modules in autonomous software stacks, such as tracking systems that heavily rely on accurate measurement time stamps. Additionally, a self-assessment based on an uncertainty measure is derived, and correction strategies are described. Our approach is evaluated with Monte Carlo experiments containing different error patterns. The results show that our approach accurately estimates time offsets and, thus, is able to detect and assess synchronization issues. To further underline the importance of our approach for autonomous systems, we investigate the effect of synchronization inconsistencies in tracking systems in more detail and demonstrate the beneficial effect of our proposed offset correction.

Self-Assessment of Evidential Grid Map Fusion for Robust Motion Planning

Sep 30, 2024

Abstract:Conflicting sensor measurements pose a huge problem for the environment representation of an autonomous robot. Therefore, in this paper, we address the self-assessment of an evidential grid map in which data from conflicting LiDAR sensor measurements are fused, followed by methods for robust motion planning under these circumstances. First, conflicting measurements aggregated in Subjective-Logic-based evidential grid maps are classified. Then, a self-assessment framework evaluates these conflicts and estimates their severity for the overall system by calculating a degradation score. This enables the detection of calibration errors and insufficient sensor setups. In contrast to other motion planning approaches, the information gained from the evidential grid maps is further used inside our proposed path-planning algorithm. Here, the impact of conflicting measurements on the current motion plan is evaluated, and a robust and curious path-planning strategy is derived to plan paths under the influence of conflicting data. This ensures that the system integrity is maintained in severely degraded environment representations, which can prevent the unnecessary abortion of planning tasks.

Globally Optimal GNSS Multi-Antenna Lever Arm Calibration

Jun 14, 2024

Abstract:Sensor calibration is crucial for autonomous driving, providing the basis for accurate localization and consistent data fusion. Enabling the use of high-accuracy GNSS sensors, this work focuses on the antenna lever arm calibration. We propose a globally optimal multi-antenna lever arm calibration approach based on motion measurements. For this, we derive an optimization method that further allows the integration of a-priori knowledge. Globally optimal solutions are obtained by leveraging the Lagrangian dual problem and a primal recovery strategy. Generally, motion-based calibration for autonomous vehicles is known to be difficult due to cars' predominantly planar motion. Therefore, we first describe the motion requirements for a unique solution and then propose a planar motion extension to overcome this issue and enable a calibration based on the restricted motion of autonomous vehicles. Lastly, we present and discuss the results of our thorough evaluation. Using simulated and augmented real-world data, we achieve accurate calibration results and fast run times that allow online deployment.

Towards Cooperative Maneuver Planning in Mixed Traffic at Urban Intersections

Mar 25, 2024

Abstract:Connected automated driving promises a significant improvement of traffic efficiency and safety on highways and in urban areas. Apart from sharing of awareness and perception information over wireless communication links, cooperative maneuver planning may facilitate active guidance of connected automated vehicles at urban intersections. Research in automatic intersection management has put forth a large body of work that mostly employs rule-based or optimization-based approaches, primarily in fully automated simulated environments. In this work, we present two cooperative planning approaches that are capable of handling mixed traffic, i.e., the road being shared by automated vehicles and regular vehicles driven by humans. Firstly, we propose an optimization-based planner trained on real driving data that cyclically selects the most efficient out of multiple predicted coordinated maneuvers. Additionally, we present a cooperative planning approach based on graph-based reinforcement learning, which overcomes the lack of ground truth data for cooperative maneuvers. We present evaluation results of both cooperative planners in high-fidelity simulation and real-world traffic. Simulative experiments in fully automated traffic and mixed traffic show that cooperative maneuver planning leads to less delay due to interaction and a reduced number of stops. In real-world experiments with three prototype connected automated vehicles in public traffic, both planners demonstrate their ability to perform efficient cooperative maneuvers.

Fast Long-Term Multi-Scenario Prediction for Maneuver Planning at Unsignalized Intersections

Jan 26, 2024

Abstract:Motion prediction for intelligent vehicles typically focuses on estimating the most probable future evolutions of a traffic scenario. Estimating the gap acceptance, i.e., whether a vehicle merges or crosses before another vehicle with the right of way, is often handled implicitly in the prediction. However, an infrastructure-based maneuver planning can assign artificial priorities between cooperative vehicles, so it needs to evaluate many more potential scenarios. Additionally, the prediction horizon has to be long enough to assess the impact of a maneuver. We, therefore, present a novel long-term prediction approach handling the gap acceptance estimation and the velocity prediction in two separate stages. Thereby, the behavior of regular vehicles as well as priority assignments of cooperative vehicles can be considered. We train both stages on real-world traffic observations to achieve realistic prediction results. Our method has a competitive accuracy and is fast enough to predict a multitude of scenarios in a short time, making it suitable to be used in a maneuver planning framework.
