Jan Strohbeck

Fast Long-Term Multi-Scenario Prediction for Maneuver Planning at Unsignalized Intersections

Jan 26, 2024

Abstract: Motion prediction for intelligent vehicles typically focuses on estimating the most probable future evolutions of a traffic scenario. Estimating the gap acceptance, i.e., whether a vehicle merges or crosses before another vehicle with the right of way, is often handled implicitly in the prediction. However, infrastructure-based maneuver planning can assign artificial priorities between cooperative vehicles, so it needs to evaluate many more potential scenarios. Additionally, the prediction horizon has to be long enough to assess the impact of a maneuver. We therefore present a novel long-term prediction approach that handles the gap acceptance estimation and the velocity prediction in two separate stages. Thereby, the behavior of regular vehicles as well as priority assignments of cooperative vehicles can be considered. We train both stages on real-world traffic observations to achieve realistic prediction results. Our method achieves competitive accuracy and is fast enough to predict a multitude of scenarios in a short time, making it suitable for use in a maneuver planning framework.

Graph-based Trajectory Prediction with Cooperative Information

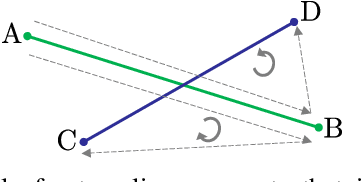

Oct 24, 2023

Abstract: For automated driving, predicting the future trajectories of other road users in complex traffic situations is a hard problem. Modern neural networks use the past trajectories of traffic participants as well as map data to gather hints about the possible driver intention and likely maneuvers. With increasing connectivity between cars and other traffic actors, cooperative information is another source of data that can be used as inputs for trajectory prediction algorithms. Connected actors might transmit their intended path or even complete planned trajectories to other actors, which simplifies the prediction problem due to the imposed constraints. In this work, we outline the benefits of using this source of data for trajectory prediction and propose a graph-based neural network architecture that can leverage this additional data. We show that the network performance increases substantially if cooperative data is present. Also, our proposed training scheme improves the network's performance even for cases where no cooperative information is available. We also show that the network can deal with inaccurate cooperative data, which allows it to be used in real automated driving environments.

Automated Static Camera Calibration with Intelligent Vehicles

Apr 21, 2023

Abstract: Connected and cooperative driving requires precise calibration of the roadside infrastructure to obtain a reliable perception system. To meet this requirement in an automated manner, we present a robust extrinsic calibration method for automated geo-referenced camera calibration. Our method requires a calibration vehicle equipped with a combined GNSS/RTK receiver and an inertial measurement unit (IMU) for self-localization. In order to remove any requirements for the target's appearance and the local traffic conditions, we propose a novel approach using hypothesis filtering. Our method does not require any human interaction with the information recorded by both the infrastructure and the vehicle. Furthermore, we do not limit road access for other road users during calibration. We demonstrate the feasibility and accuracy of our approach by evaluating it on synthetic datasets as well as a real-world connected intersection, and by deploying the calibration on real infrastructure. Our source code is publicly available.

Extrinsic Camera Calibration with Semantic Segmentation

Aug 08, 2022

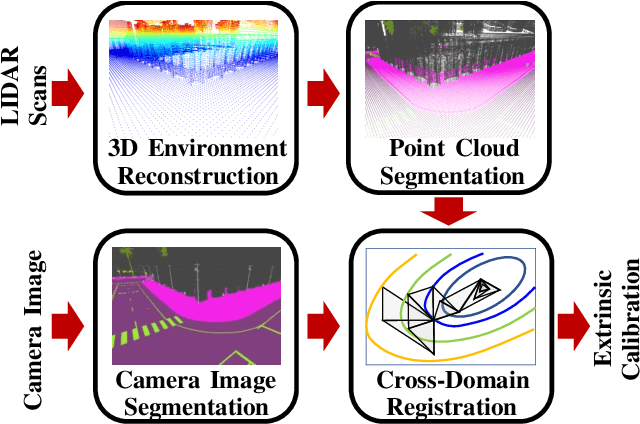

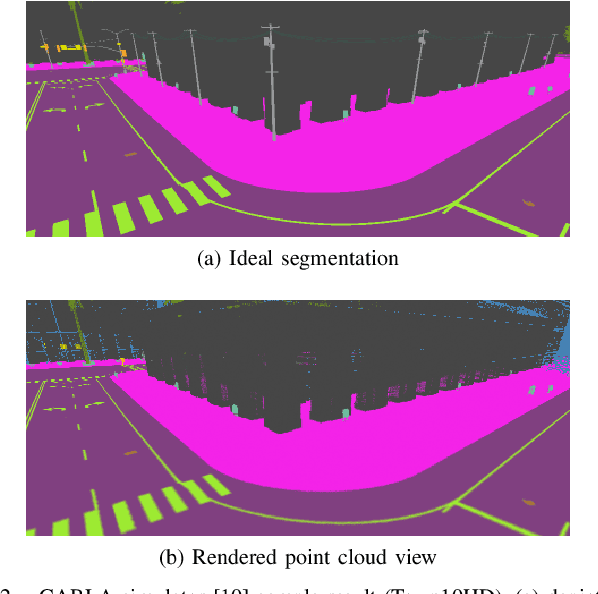

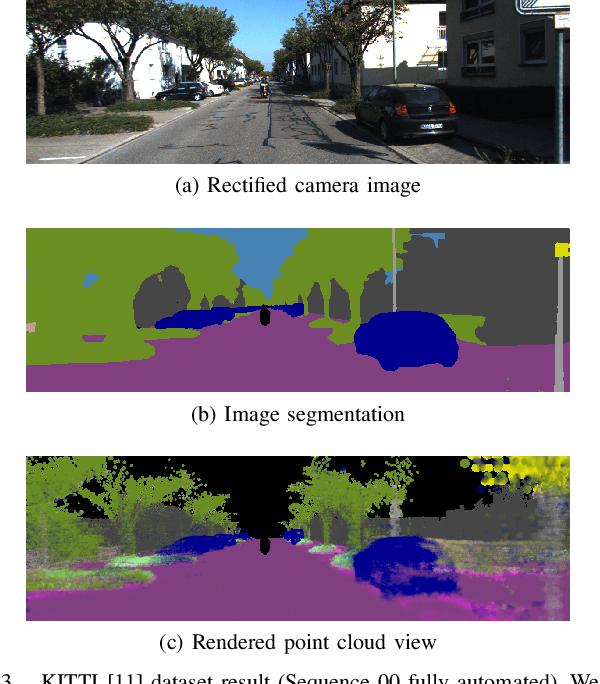

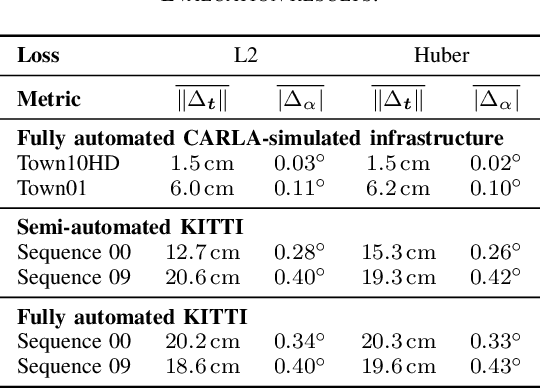

Abstract: Monocular camera sensors are vital to intelligent vehicle operation and automated driving assistance and are also heavily employed in traffic control infrastructure. Calibrating a monocular camera, though, is time-consuming and often requires significant manual intervention. In this work, we present an extrinsic camera calibration approach that automates the parameter estimation by utilizing semantic segmentation information from images and point clouds. Our approach relies on a coarse initial measurement of the camera pose and builds on lidar sensors mounted on a vehicle with high-precision localization to capture a point cloud of the camera environment. Afterward, a mapping between the camera and world coordinate spaces is obtained by performing a lidar-to-camera registration of the semantically segmented sensor data. We evaluate our method on simulated and real-world data to demonstrate low error measurements in the calibration results. Our approach is suitable for infrastructure sensors as well as vehicle sensors, and it does not require motion of the camera platform.

Identification of Threat Regions From a Dynamic Occupancy Grid Map for Situation-Aware Environment Perception

Jul 11, 2022

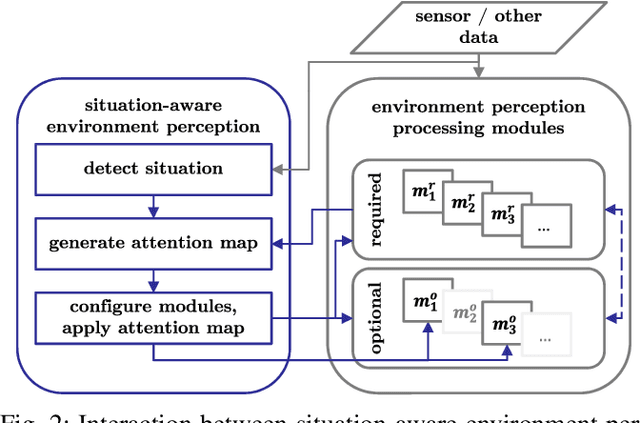

Abstract: The advance towards higher levels of automation within the field of automated driving is accompanied by increasing requirements for the operational safety of vehicles. Induced by the limitation of computational resources, trade-offs between the computational complexity of algorithms and their potential to ensure safe operation of automated vehicles are often encountered. Situation-aware environment perception presents one promising example, where computational resources are distributed to regions within the perception area that are relevant for the task of the automated vehicle. While prior map knowledge is often leveraged to identify relevant regions, in this work, we present a lightweight identification of safety-relevant regions that relies solely on online information. We show that our approach enables safe vehicle operation in critical scenarios, while retaining the benefits of non-uniformly distributed resources within the environment perception.

Motion Planning for Connected Automated Vehicles at Occluded Intersections With Infrastructure Sensors

Oct 21, 2021

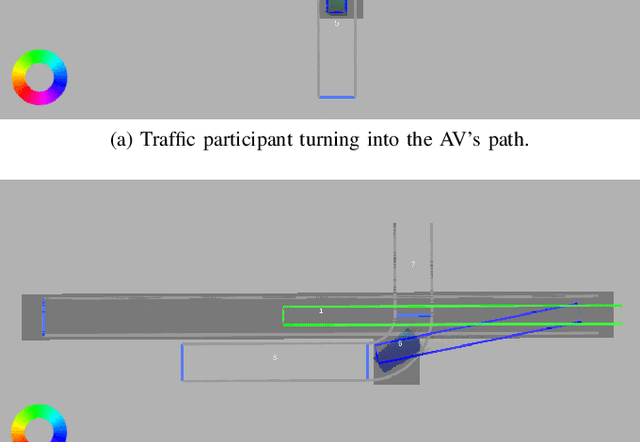

Abstract: Motion planning at urban intersections that accounts for the situation context, handles occlusions, and deals with measurement and prediction uncertainty is a major challenge on the way to urban automated driving. In this work, we address this challenge with a sampling-based optimization approach. For this, we formulate an optimal control problem that optimizes for low risk and high passenger comfort. The risk is calculated on the basis of the perception information and the respective uncertainty using a risk model. The risk model combines set-based methods and probabilistic approaches. Thus, the approach provides safety guarantees in a probabilistic sense, while for a vanishing risk, the formal safety guarantees of the set-based methods are inherited. By exploring all available behavior options, our approach solves decision making and longitudinal trajectory planning in one step. The available behavior options are provided by a formal representation of the situation context, which is also used to reduce the computational effort. Occlusions are resolved using the external perception of infrastructure-mounted sensors. Yet, instead of merging external and ego perception with track-to-track fusion, the information is used in parallel. The motion planning scheme is validated through real-world experiments.

LMB Filter Based Tracking Allowing for Multiple Hypotheses in Object Reference Point Association

Nov 11, 2020

Abstract: Autonomous vehicles need precise knowledge of dynamic objects in their surroundings. Especially in urban areas with many objects and possible occlusions, an infrastructure system based on a multi-sensor setup can provide the required environment model for the vehicles. Previously, we have published a concept of object reference points (e.g. the corners of an object), which allows for generic sensor "plug and play" interfaces and relatively cheap sensors. This paper describes a novel method to additionally incorporate multiple hypotheses for fusing the measurements of the object reference points using an extension to the previously presented Labeled Multi-Bernoulli (LMB) filter. In contrast to the previous work, this approach improves the tracking quality in cases where the correct association of the measurement and the object reference point is unknown. Furthermore, this paper identifies options based on physical models to sort out inconsistent and infeasible associations at an early stage in order to keep the method computationally tractable for real-time applications. The method is evaluated in simulations as well as on real scenarios. Compared to similar methods, the proposed approach shows a considerable performance increase; in particular, the number of non-continuous tracks decreases significantly.

LACI: Low-effort Automatic Calibration of Infrastructure Sensors

Nov 05, 2019

Abstract: Sensor calibration is usually a time-consuming yet important task. While classical approaches are sensor-specific and often need calibration targets as well as a widely overlapping field of view (FOV), within this work, a cooperative intelligent vehicle is used as the calibration target. The vehicle is detected in the sensor frame and then matched with the information received from the cooperative awareness messages sent by the cooperative intelligent vehicle. The presented algorithm is fully automated as well as sensor-independent, relying only on a very common set of assumptions. Due to the direct registration on the world frame, no overlapping FOV is necessary. The algorithm is evaluated through experiments for four laser scanners as well as one pair of stereo cameras, showing a repetition error within the measurement uncertainty of the sensors. A plausibility check rules out systematic errors that might not have been covered by evaluating the repetition error.
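
As a rough illustration of what registering sensor-frame detections directly on the world frame can look like, the sketch below fits a planar rigid transform (rotation plus translation) to matched vehicle positions by least squares. It is not the LACI algorithm from the paper. The function names `estimate_rigid_2d` and `apply_rigid_2d` are hypothetical, and the sketch assumes that the detections and the positions received from the vehicle are already matched pairwise and expressed in metric planar coordinates.

```python
import math


def estimate_rigid_2d(sensor_pts, world_pts):
    """Least-squares planar rigid transform from sensor frame to world frame.

    Assumption: sensor_pts[i] and world_pts[i] describe the same vehicle
    position, given as (x, y) tuples. Returns (theta, tx, ty) such that
    world ~= R(theta) * sensor + (tx, ty).
    """
    if len(sensor_pts) != len(world_pts) or len(sensor_pts) < 2:
        raise ValueError("need at least two matched point pairs")
    n = len(sensor_pts)

    # Centroids of both point sets.
    sx = sum(p[0] for p in sensor_pts) / n
    sy = sum(p[1] for p in sensor_pts) / n
    wx = sum(q[0] for q in world_pts) / n
    wy = sum(q[1] for q in world_pts) / n

    # Accumulate cross and dot products of the centered pairs; the optimal
    # rotation angle is the atan2 of these two sums.
    cross = 0.0
    dot = 0.0
    for (px, py), (qx, qy) in zip(sensor_pts, world_pts):
        ax, ay = px - sx, py - sy
        bx, by = qx - wx, qy - wy
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    theta = math.atan2(cross, dot)

    # The translation maps the rotated sensor centroid onto the world centroid.
    c, s = math.cos(theta), math.sin(theta)
    tx = wx - (c * sx - s * sy)
    ty = wy - (s * sx + c * sy)
    return theta, tx, ty


def apply_rigid_2d(theta, tx, ty, pt):
    """Map a single (x, y) point from the sensor frame into the world frame."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * pt[0] - s * pt[1] + tx, s * pt[0] + c * pt[1] + ty)
```

Because each sensor is aligned to the world frame independently in such a scheme, no two sensors need to observe the same area, which is consistent with the abstract's remark that no overlapping FOV is necessary.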
