Jona Ruof

The ADUULM-360 Dataset -- A Multi-Modal Dataset for Depth Estimation in Adverse Weather

Nov 18, 2024

Abstract:Depth estimation is an essential task toward full scene understanding since it allows the projection of rich semantic information captured by cameras into 3D space. While the field has gained much attention recently, datasets for depth estimation lack scene diversity or sensor modalities. This work presents the ADUULM-360 dataset, a novel multi-modal dataset for depth estimation. The ADUULM-360 dataset covers all established autonomous driving sensor modalities: cameras, lidars, and radars. It covers a frontal-facing stereo setup, six surround cameras covering the full 360 degrees, two high-resolution long-range lidar sensors, and five long-range radar sensors. It is also the first depth estimation dataset that contains diverse scenes in good and adverse weather conditions. We conduct extensive experiments using state-of-the-art self-supervised depth estimation methods under different training tasks, such as monocular training, stereo training, and full surround training. Discussing these results, we demonstrate common limitations of state-of-the-art methods, especially in adverse weather conditions, which hopefully will inspire future research in this area. Our dataset, development kit, and trained baselines are available at https://github.com/uulm-mrm/aduulm_360_dataset.

Fast Long-Term Multi-Scenario Prediction for Maneuver Planning at Unsignalized Intersections

Jan 26, 2024

Abstract:Motion prediction for intelligent vehicles typically focuses on estimating the most probable future evolutions of a traffic scenario. Estimating the gap acceptance, i.e., whether a vehicle merges or crosses before another vehicle with the right of way, is often handled implicitly in the prediction. However, an infrastructure-based maneuver planning can assign artificial priorities between cooperative vehicles, so it needs to evaluate many more potential scenarios. Additionally, the prediction horizon has to be long enough to assess the impact of a maneuver. We, therefore, present a novel long-term prediction approach handling the gap acceptance estimation and the velocity prediction in two separate stages. Thereby, the behavior of regular vehicles as well as priority assignments of cooperative vehicles can be considered. We train both stages on real-world traffic observations to achieve realistic prediction results. Our method has a competitive accuracy and is fast enough to predict a multitude of scenarios in a short time, making it suitable to be used in a maneuver planning framework.

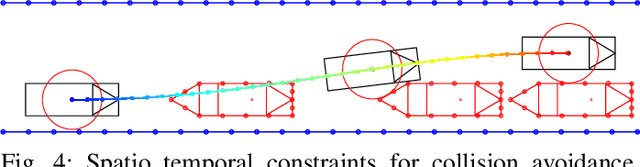

Real-Time Spatial Trajectory Planning for Urban Environments Using Dynamic Optimization

May 04, 2023

Abstract:Planning trajectories for automated vehicles in urban environments requires methods with high generality, long planning horizons, and fast update rates. Using a path-velocity decomposition, we contribute a novel planning framework, which generates foresighted trajectories and can handle a wide variety of state and control constraints effectively. In contrast to related work, the proposed optimal control problems are formulated over space rather than time. This spatial formulation decouples environmental constraints from the optimization variables, which allows the application of simple, yet efficient shooting methods. To this end, we present a tailored solution strategy based on ILQR, in the Augmented Lagrangian framework, to rapidly minimize the trajectory objective costs, even under infeasible initial solutions. Evaluations in simulation and on a full-sized automated vehicle in real-world urban traffic show the real-time capability and versatility of the proposed approach.

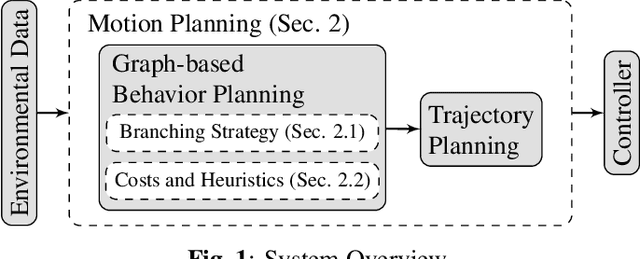

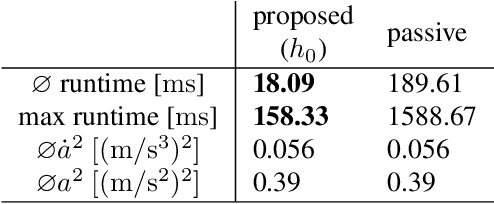

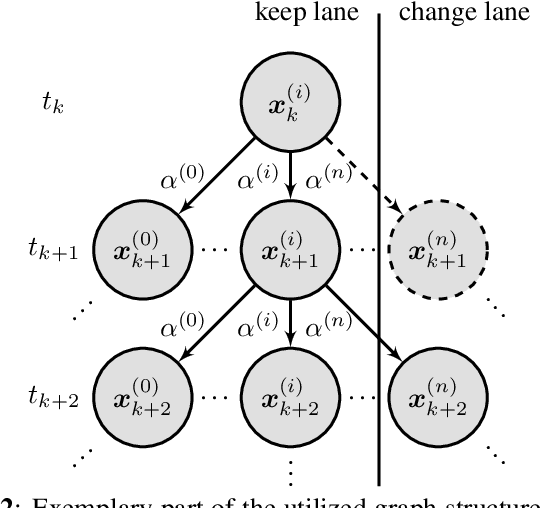

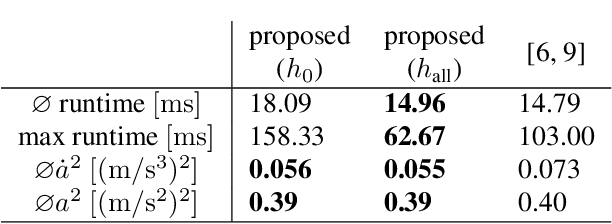

Graph-based Motion Planning for Automated Vehicles using Multi-model Branching and Admissible Heuristics

Feb 15, 2021

Abstract:Automated driving in urban scenarios requires efficient planning algorithms able to handle complex situations in real-time. A popular approach is to use graph-based planning methods in order to obtain a rough trajectory which is subsequently optimized. A key aspect is the generation of trajectories implementing comfortable and safe behavior already during graph-search while keeping computation times low. To capture this aspect, this work first presents a branching strategy that leads to better performance in terms of quality of resulting trajectories and runtime. Second, admissible heuristics are shown which guide the graph-search efficiently while the solution remains optimal.

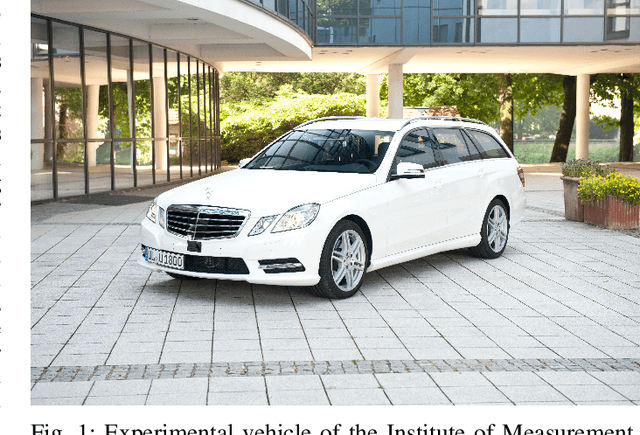

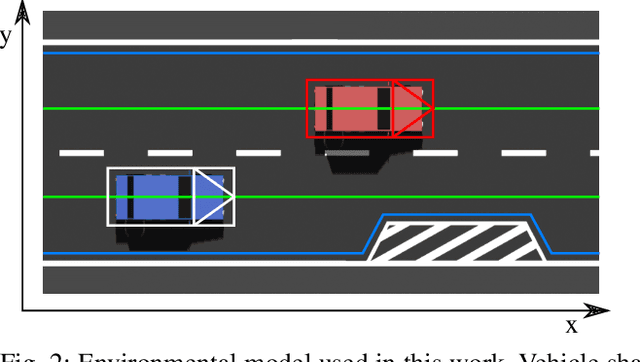

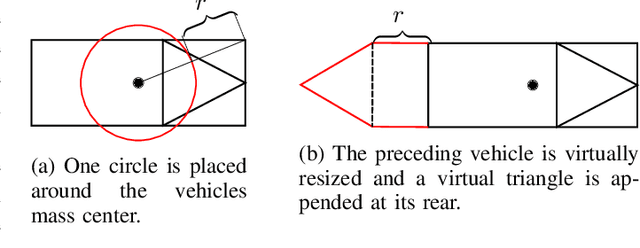

On-Road Motion Planning for Automated Vehicles at Ulm University

Dec 07, 2020

Abstract:The Institute of Measurement, Control and Microtechnology of the University of Ulm has investigated advanced driver assistance systems for decades and concentrates in large part on autonomous driving. It is well known that motion planning is a key technology for autonomous driving. It is first and foremost responsible for the safety of the vehicle passengers as well as of all surrounding traffic participants. However, a further task consists in providing smooth and comfortable driving behavior. In Ulm, we have the fortunate opportunity to test our algorithms under real conditions in public traffic and diverse scenarios. In this paper, we give readers an insight into our work, the vehicle, and the test track, as well as the related problems, challenges, and solutions. To this end, we describe the motion planning system and explain the implemented functionalities. Furthermore, we show how our vehicle moves through public road traffic and how it deals with challenging scenarios such as driving through roundabouts and intersections.
