Thomas Wodtko

The ADUULM-360 Dataset -- A Multi-Modal Dataset for Depth Estimation in Adverse Weather

Nov 18, 2024

Abstract:Depth estimation is an essential task toward full scene understanding since it allows the projection of rich semantic information captured by cameras into 3D space. While the field has gained much attention recently, datasets for depth estimation lack scene diversity or sensor modalities. This work presents the ADUULM-360 dataset, a novel multi-modal dataset for depth estimation. The ADUULM-360 dataset covers all established autonomous driving sensor modalities: cameras, lidars, and radars. It comprises a frontal-facing stereo setup, six surround cameras covering the full 360 degrees, two high-resolution long-range lidar sensors, and five long-range radar sensors. It is also the first depth estimation dataset that contains diverse scenes in good and adverse weather conditions. We conduct extensive experiments using state-of-the-art self-supervised depth estimation methods under different training tasks, such as monocular training, stereo training, and full surround training. Discussing these results, we demonstrate common limitations of state-of-the-art methods, especially in adverse weather conditions, which hopefully will inspire future research in this area. Our dataset, development kit, and trained baselines are available at https://github.com/uulm-mrm/aduulm_360_dataset.
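The projection of per-pixel information into 3D space mentioned in the abstract can be illustrated with a pinhole camera model. The following is a minimal sketch, not code from the dataset's development kit: the function name `backproject`, the intrinsic matrix `K`, and the tiny constant depth map are assumptions chosen purely for illustration.

```python
import numpy as np

def backproject(depth, K):
    """Lift a depth map of shape (H, W) to camera-frame points of shape (H, W, 3).

    Assumes an ideal pinhole camera with intrinsics K and depth measured
    along the optical (z) axis.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    # Homogeneous pixel coordinates (u, v, 1) for every pixel.
    pixels = np.stack([u, v, np.ones_like(u)], axis=-1).astype(float)
    # Viewing rays with unit z component: K^{-1} [u, v, 1]^T.
    rays = pixels @ np.linalg.inv(K).T
    # Scale each ray by its depth to obtain the 3D point.
    return rays * depth[..., None]

# Hypothetical intrinsics: focal length 500 px, principal point at (2, 1).
K = np.array([[500.0, 0.0, 2.0],
              [0.0, 500.0, 1.0],
              [0.0, 0.0, 1.0]])
depth = np.full((3, 5), 10.0)  # every pixel is 10 m away along z
points = backproject(depth, K)
```

Any per-pixel label (for example a semantic class) can then be attached to the corresponding row of `points`, which is the sense in which a depth estimate carries camera semantics into 3D.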

Self-Assessment of Evidential Grid Map Fusion for Robust Motion Planning

Sep 30, 2024

Abstract:Conflicting sensor measurements pose a major problem for the environment representation of an autonomous robot. Therefore, in this paper, we address the self-assessment of an evidential grid map in which data from conflicting LiDAR sensor measurements are fused, followed by methods for robust motion planning under these circumstances. First, conflicting measurements aggregated in Subjective-Logic-based evidential grid maps are classified. Then, a self-assessment framework evaluates these conflicts and estimates their severity for the overall system by calculating a degradation score. This enables the detection of calibration errors and insufficient sensor setups. In contrast to other motion planning approaches, the information gained from the evidential grid maps is further used inside our proposed path-planning algorithm. Here, the impact of conflicting measurements on the current motion plan is evaluated, and a robust and curious path-planning strategy is derived to plan paths under the influence of conflicting data. This ensures that the system integrity is maintained in severely degraded environment representations, which can prevent the unnecessary abortion of planning tasks.
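To make the notion of conflicting evidence in a grid cell concrete, the sketch below builds binomial Subjective Logic opinions from evidence counts and scores how strongly two of them disagree. It is an illustration under stated assumptions only: the names `Opinion`, `opinion_from_evidence`, and `conflict` are hypothetical, the conflict term used here is the simple belief-versus-disbelief mass product, and the paper's own conflict classification and degradation score are not reproduced.

```python
from dataclasses import dataclass

@dataclass
class Opinion:
    belief: float       # evidence that the cell is occupied
    disbelief: float    # evidence that the cell is free
    uncertainty: float  # remaining lack of evidence

def opinion_from_evidence(occupied_hits, free_hits, prior_weight=2.0):
    """Map evidence counts to a binomial opinion (belief + disbelief + uncertainty = 1)."""
    total = occupied_hits + free_hits + prior_weight
    return Opinion(occupied_hits / total, free_hits / total, prior_weight / total)

def conflict(a, b):
    """Mass that one opinion assigns to 'occupied' while the other assigns it to 'free'."""
    return a.belief * b.disbelief + a.disbelief * b.belief

# Two hypothetical lidars observing the same cell with opposite results.
lidar_a = opinion_from_evidence(occupied_hits=8, free_hits=0)
lidar_b = opinion_from_evidence(occupied_hits=0, free_hits=8)
cell_conflict = conflict(lidar_a, lidar_b)
self_conflict = conflict(lidar_a, lidar_a)
```

A value near zero means the sensors agree or at least one of them is mostly uncertain; a large value flags a cell that a self-assessment stage would want to inspect.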

Self-Assessment and Correction of Sensor Synchronization

Sep 30, 2024

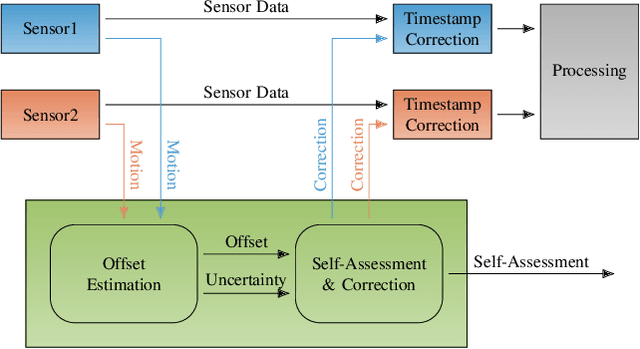

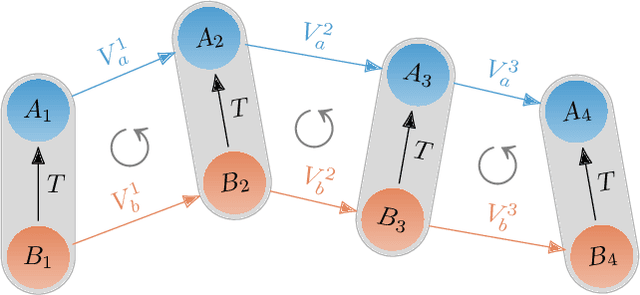

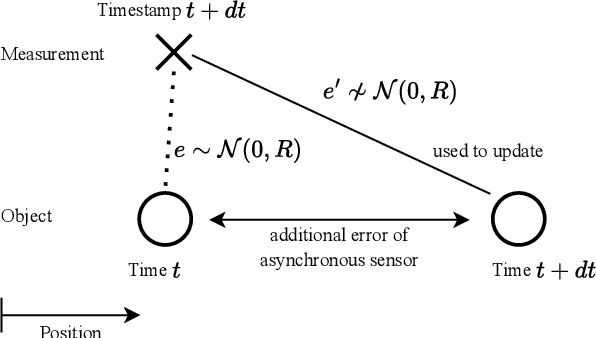

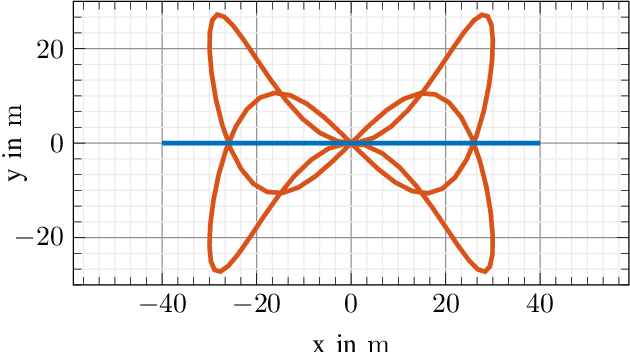

Abstract:We propose an approach to assess the synchronization of rigidly mounted sensors based on their rotational motion. Using function similarity measures combined with a sliding window, our approach is capable of estimating time-varying time offsets. Further, the estimated offset allows the correction of erroneously assigned time stamps on measurements. This mitigates the effect of synchronization issues on subsequent modules in autonomous software stacks, such as tracking systems that heavily rely on accurate measurement time stamps. Additionally, a self-assessment based on an uncertainty measure is derived, and correction strategies are described. Our approach is evaluated with Monte Carlo experiments containing different error patterns. The results show that our approach accurately estimates time offsets and, thus, is able to detect and assess synchronization issues. To further underline the importance of our approach for autonomous systems, we investigate the effect of synchronization inconsistencies in tracking systems in more detail and demonstrate the beneficial effect of our proposed offset correction.
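The idea of estimating a time offset from rotational motion with a similarity measure and a sliding window can be sketched as follows. This is a simplified illustration, not the authors' method: it assumes both sensors report angular speed at the same fixed rate, uses the Pearson correlation as the similarity measure, and searches integer sample lags only. All function names and the synthetic signal are hypothetical.

```python
import numpy as np

def estimate_offset(ref, other, dt, max_lag):
    """Delay of `other` relative to `ref` in seconds (positive: `other` lags behind)."""
    n = len(ref)
    best_lag, best_score = 0, -np.inf
    for lag in range(-max_lag, max_lag + 1):
        # Compare ref[i] with other[i + lag] on the overlapping index range.
        lo, hi = max(0, -lag), min(n, n - lag)
        score = np.corrcoef(ref[lo:hi], other[lo + lag:hi + lag])[0, 1]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag * dt

def sliding_offsets(ref, other, dt, window, step, max_lag):
    """Apply the estimator on overlapping windows to follow a time-varying offset."""
    starts = range(0, len(ref) - window + 1, step)
    return [estimate_offset(ref[s:s + window], other[s:s + window], dt, max_lag)
            for s in starts]

def angular_speed(t):
    """Synthetic angular speed profile used only for this example."""
    return np.sin(1.3 * t) + 0.5 * np.sin(0.37 * t)

dt = 0.01
t = np.arange(2000) * dt
ref = angular_speed(t)
other = angular_speed(t - 0.05)  # second sensor is time-stamped 50 ms late
offsets = sliding_offsets(ref, other, dt, window=500, step=250, max_lag=20)
```

Subtracting the per-window estimate from the second sensor's time stamps is the kind of correction the abstract refers to; with noisy real data, the sharpness of the correlation peak could serve as a rough confidence indicator.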

Globally Optimal GNSS Multi-Antenna Lever Arm Calibration

Jun 14, 2024

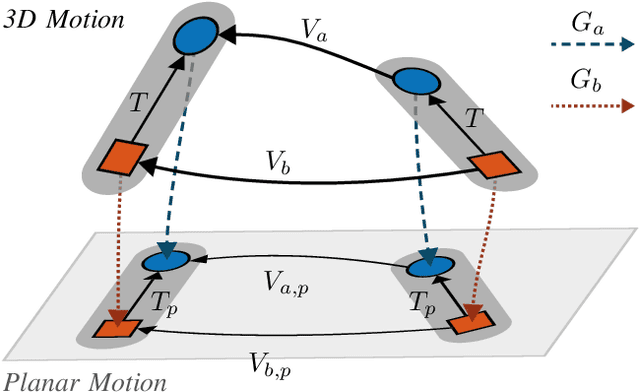

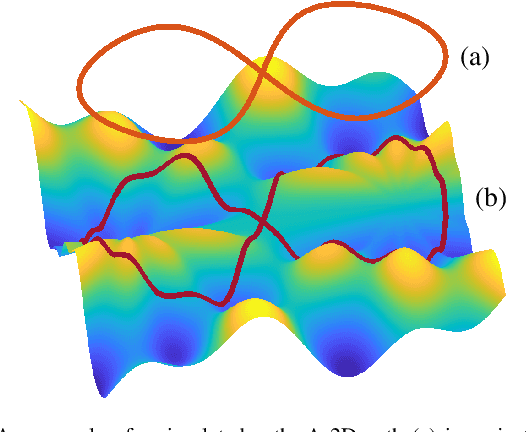

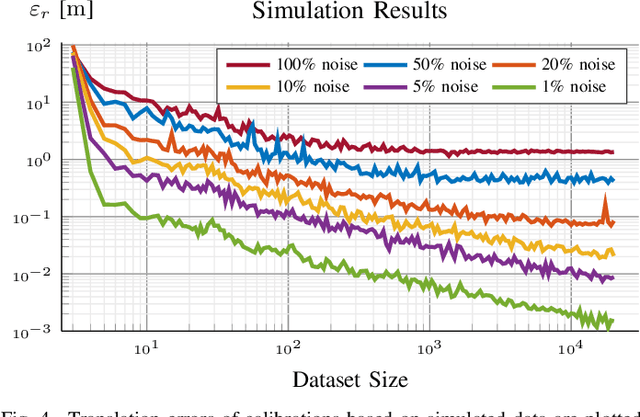

Abstract:Sensor calibration is crucial for autonomous driving, providing the basis for accurate localization and consistent data fusion. Enabling the use of high-accuracy GNSS sensors, this work focuses on the antenna lever arm calibration. We propose a globally optimal multi-antenna lever arm calibration approach based on motion measurements. For this, we derive an optimization method that further allows the integration of a-priori knowledge. Globally optimal solutions are obtained by leveraging the Lagrangian dual problem and a primal recovery strategy. Generally, motion-based calibration for autonomous vehicles is known to be difficult due to cars' predominantly planar motion. Therefore, we first describe the motion requirements for a unique solution and then propose a planar motion extension to overcome this issue and enable a calibration based on the restricted motion of autonomous vehicles. Lastly, we present and discuss the results of our thorough evaluation. Using simulated and augmented real-world data, we achieve accurate calibration results and fast run times that allow online deployment.

User Feedback and Sample Weighting for Ill-Conditioned Hand-Eye Calibration

Aug 11, 2023

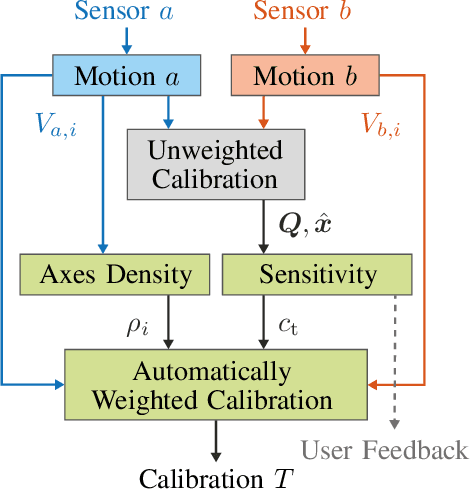

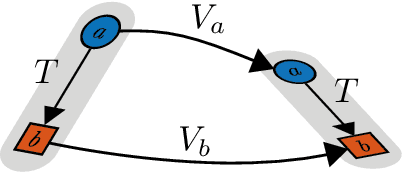

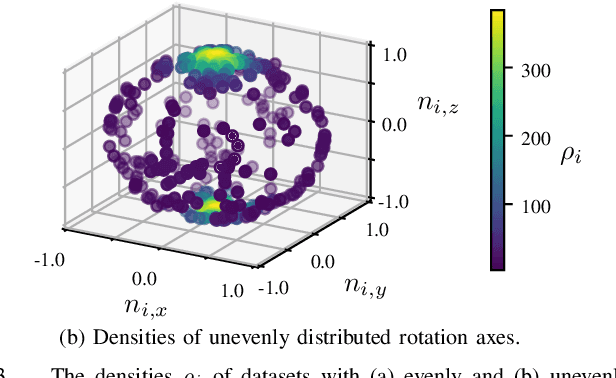

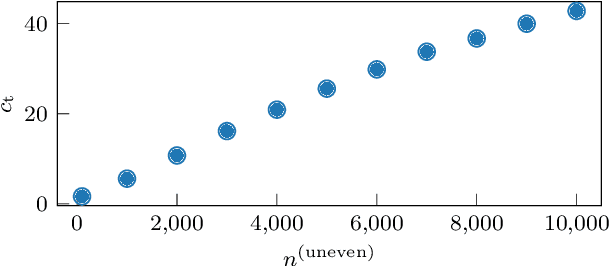

Abstract:Hand-eye calibration is an important and extensively researched method for calibrating rigidly coupled sensors, solely based on estimates of their motion. Due to the geometric structure of this problem, at least two motion estimates with non-parallel rotation axes are required for a unique solution. If the majority of rotation axes are almost parallel, the resulting optimization problem is ill-conditioned. In this paper, we propose an approach to automatically weight the motion samples of such an ill-conditioned optimization problem for improving the conditioning. The sample weights are chosen in relation to the local density of all available rotation axes. Furthermore, we present an approach for estimating the sensitivity and conditioning of the cost function, separated into the translation and the rotation part. This information can be employed as user feedback when recording the calibration data to prevent ill-conditioning in advance. We evaluate and compare our approach on artificially augmented data from the KITTI odometry dataset.
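Weighting motion samples by the local density of their rotation axes can be sketched as below. This is a minimal illustration, not the paper's weighting scheme: the Gaussian kernel, the `bandwidth_deg` parameter, the inverse-density rule, and the treatment of an axis and its negation as the same direction are all assumptions made for the example.

```python
import numpy as np

def density_weights(axes, bandwidth_deg=10.0):
    """Down-weight rotation axes that lie in densely populated directions.

    axes: array of shape (N, 3), one rotation axis per motion sample.
    Returns N weights that sum to one.
    """
    axes = axes / np.linalg.norm(axes, axis=1, keepdims=True)
    # Assumption: a and -a span the same line, so compare via |cos(angle)|.
    cos = np.clip(np.abs(axes @ axes.T), 0.0, 1.0)
    angles = np.degrees(np.arccos(cos))
    # Kernel density estimate over pairwise axis angles (includes the sample itself).
    density = np.exp(-0.5 * (angles / bandwidth_deg) ** 2).sum(axis=1)
    weights = 1.0 / density
    return weights / weights.sum()

# Nine samples rotating about z (typical for a car) and one about x.
axes = np.array([[0.0, 0.0, 1.0]] * 9 + [[1.0, 0.0, 0.0]])
weights = density_weights(axes)
```

In this example the single x-axis sample receives as much total weight as the nine z-axis samples combined, so the rare non-parallel motion is no longer drowned out in a weighted least-squares cost.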

Adaptive Patched Grid Mapping

Aug 07, 2023

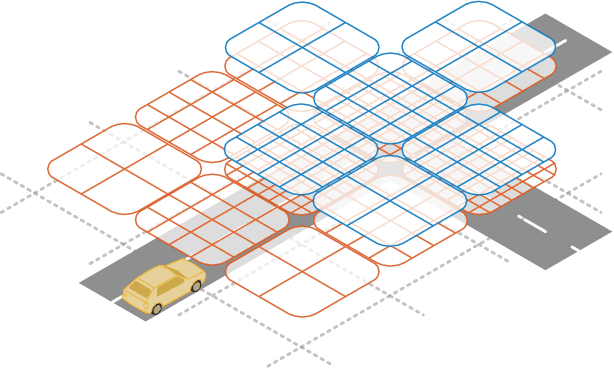

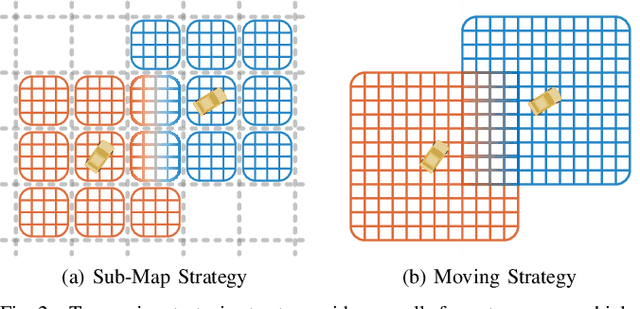

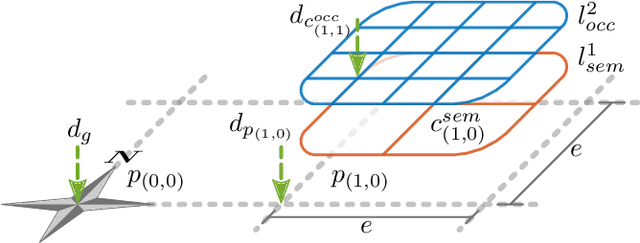

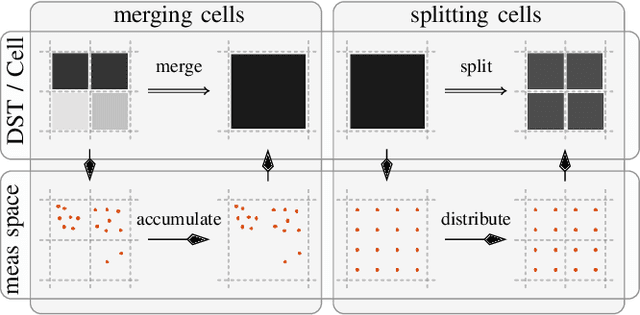

Abstract:In this work, we propose a novel adaptive grid mapping approach, the Adaptive Patched Grid Map, which enables situation-aware, grid-based perception for autonomous vehicles. Its structure allows a flexible representation of the surrounding unstructured environment. By splitting types of information into separate layers, less memory is allocated when data is unevenly or sporadically available. However, layers must be resampled during the fusion process to cope with dynamically changing cell sizes. Therefore, we propose a novel spatial cell fusion approach. Together with the proposed fusion framework, dynamically changing external requirements, such as cell resolution specifications and horizon targets, are considered. For our evaluation, real-world data were recorded from an autonomous vehicle driving through various traffic situations. Based on this, the memory efficiency is compared to other approaches, and fusion execution times are determined. The results confirm the adaptation to requirement changes and a significant memory usage reduction.

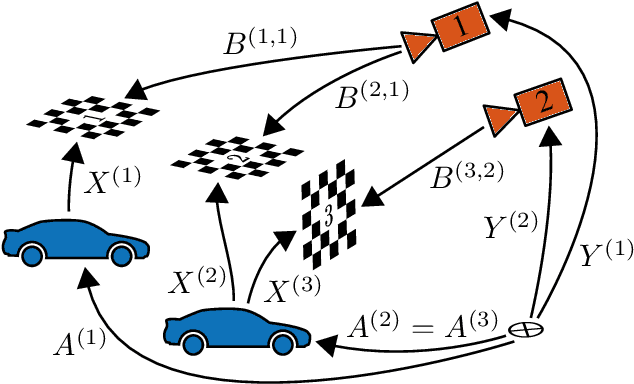

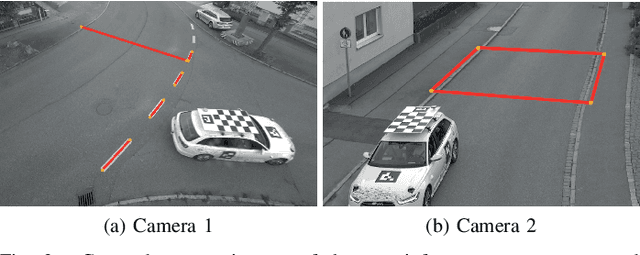

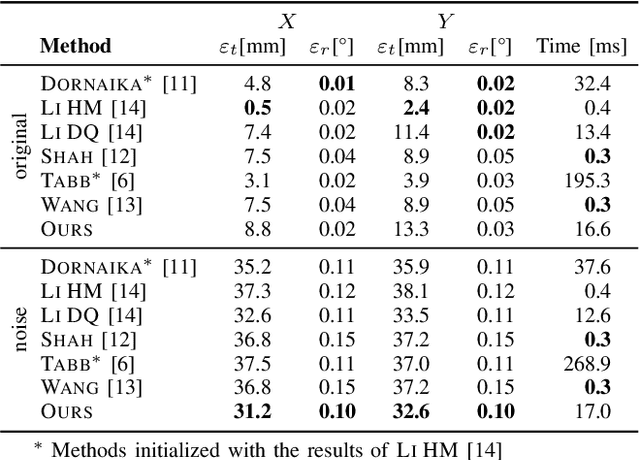

Extrinsic Infrastructure Calibration Using the Hand-Eye Robot-World Formulation

May 02, 2023

Abstract:We propose a certifiably globally optimal approach for solving the hand-eye robot-world problem supporting multiple sensors and targets at once. Further, we leverage this formulation for estimating a geo-referenced calibration of infrastructure sensors. Since vehicle motion recorded by infrastructure sensors is mostly planar, obtaining a unique solution for the respective hand-eye robot-world problem is unfeasible without incorporating additional knowledge. Hence, we extend our proposed method to include a-priori knowledge, i.e., the translation norm of calibration targets, to yield a unique solution. Our approach achieves state-of-the-art results on simulated and real-world data. Especially on real-world intersection data, our approach utilizing the translation norm is the only method providing accurate results.

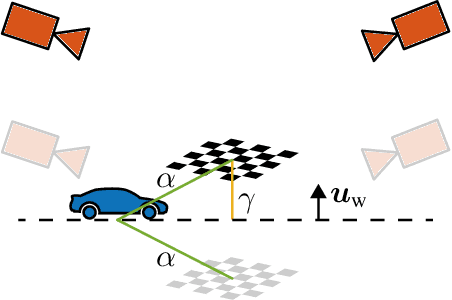

Globally Optimal Multi-Scale Monocular Hand-Eye Calibration Using Dual Quaternions

Jan 12, 2022

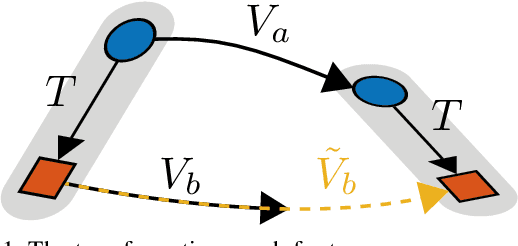

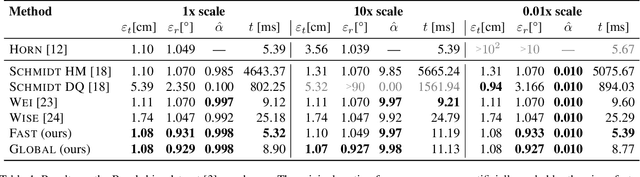

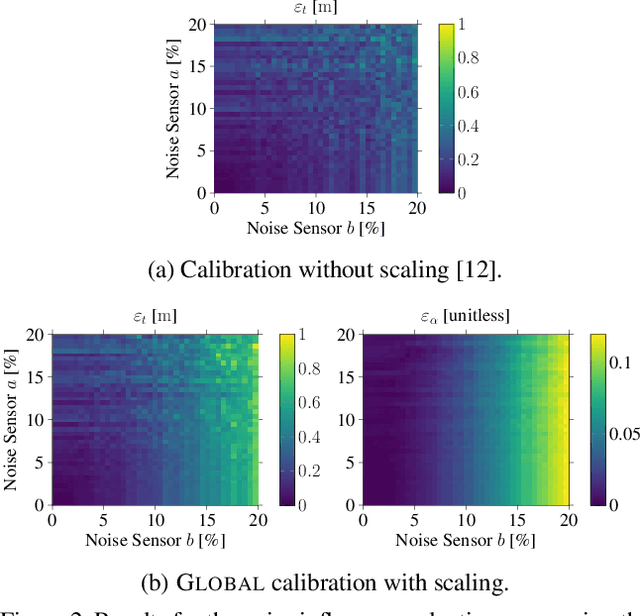

Abstract:In this work, we present an approach for monocular hand-eye calibration from per-sensor ego-motion based on dual quaternions. Due to non-metrically scaled translations of monocular odometry, a scaling factor has to be estimated in addition to the rotation and translation calibration. For this, we derive a quadratically constrained quadratic program that allows a combined estimation of all extrinsic calibration parameters. Using dual quaternions leads to low run times due to their compact representation. Our problem formulation further allows estimating multiple scalings simultaneously for different sequences of the same sensor setup. Based on our problem formulation, we derive both a fast local and a globally optimal solving approach. Finally, our algorithms are evaluated and compared to state-of-the-art approaches on simulated and real-world data, e.g., the EuRoC MAV dataset.
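For orientation, the role of the scaling factor can be written out in the classical rotation-and-translation form of the hand-eye problem. The notation is an assumption made for illustration and does not follow the paper's dual quaternion formulation: $(R_{A_i}, t_{A_i})$ and $(R_{B_i}, \tilde{t}_{B_i})$ denote the $i$-th motion of the two sensors, $(R_X, t_X)$ the unknown extrinsic calibration, and $\alpha$ the unknown scale of the monocular translations $\tilde{t}_{B_i}$.

```latex
\begin{align}
  % Rotation part of A_i X = X B_i, unaffected by the scale
  R_{A_i} R_X &= R_X R_{B_i}, \\
  % Translation part with the monocular translation scaled by alpha
  R_{A_i} t_X + t_{A_i} &= \alpha\, R_X \tilde{t}_{B_i} + t_X
  \;\;\Longleftrightarrow\;\;
  (R_{A_i} - I)\, t_X = \alpha\, R_X \tilde{t}_{B_i} - t_{A_i}.
\end{align}
```

Only the translation equation involves $\alpha$, which is why the scale has to be estimated jointly with $t_X$, and why several sequences of the same setup can share $(R_X, t_X)$ while each has its own scale.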

Online Extrinsic Calibration based on Per-Sensor Ego-Motion Using Dual Quaternions

Jan 27, 2021

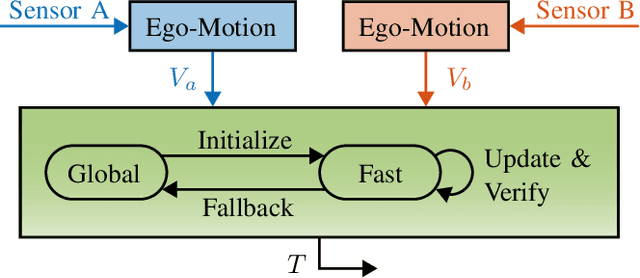

Abstract:In this work, we propose an approach for extrinsic sensor calibration from per-sensor ego-motion estimates. Our problem formulation is based on dual quaternions, enabling two different online capable solving approaches. We provide a certifiable globally optimal and a fast local approach along with a method to verify the globality of the local approach. Additionally, means for integrating previous knowledge, for example, a common ground plane for planar sensor motion, are described. Our algorithms are evaluated on simulated data and on a publicly available dataset containing RGB-D camera images. Further, our online calibration approach is tested on the KITTI odometry dataset, which provides data of a lidar and two stereo camera systems mounted on a vehicle. Our evaluation confirms the short run time, state-of-the-art accuracy, as well as online capability of our approach while retaining the global optimality of the solution at any time.
