Matti Henning

Advancing Frame-Dropping in Multi-Object Tracking-by-Detection Systems Through Event-Based Detection Triggering

Aug 01, 2023

Abstract: With rising computational requirements, modern automated vehicles (AVs) often consider trade-offs between energy consumption and perception performance, potentially jeopardizing their safe operation. Frame-dropping in tracking-by-detection perception systems presents a promising approach, although it may cause traffic participants to be detected late. In this paper, we extend our previous work on frame-dropping in tracking-by-detection perception systems. We introduce an additional event-based triggering mechanism using camera object detections to increase both the system's efficiency and its safety. Evaluating both single- and multi-modal tracking methods, we show that late object detections are mitigated while the potential for reduced energy consumption is significantly increased, reaching nearly 60 W per point of HOTA score lost.
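The abstract combines a fixed frame-dropping schedule with an event-based trigger driven by camera detections, and reports efficiency as power saved per HOTA point lost. The sketch below illustrates both ideas under loudly stated assumptions: the trigger rule (camera reports more objects than there are confirmed tracks), `TriggerConfig`, `keyframe_interval`, `should_run_detector` and `watts_per_hota_point` are all hypothetical and not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class TriggerConfig:
    # Hypothetical parameter: run the full detector at least every N frames.
    keyframe_interval: int


def should_run_detector(frame_idx: int, cfg: TriggerConfig,
                        num_camera_detections: int, num_confirmed_tracks: int) -> bool:
    """Decide whether the expensive detector runs on this frame.

    Scheduled part: a fixed frame-dropping pattern (every keyframe).
    Event part (assumed rule): the cheap camera detector reports more objects
    than the tracker currently covers, hinting at a newly appeared participant.
    """
    scheduled = frame_idx % cfg.keyframe_interval == 0
    event = num_camera_detections > num_confirmed_tracks
    return scheduled or event


def watts_per_hota_point(power_baseline_w: float, power_reduced_w: float,
                         hota_baseline: float, hota_reduced: float) -> float:
    """Power saved per HOTA point lost; higher means a more favourable trade-off."""
    hota_drop = hota_baseline - hota_reduced
    if hota_drop <= 0:
        raise ValueError("ratio is only defined when HOTA decreases")
    return (power_baseline_w - power_reduced_w) / hota_drop


if __name__ == "__main__":
    cfg = TriggerConfig(keyframe_interval=4)
    camera_counts = [2, 2, 3, 3, 3, 3, 3, 3]  # toy per-frame camera detections
    track_counts = [2, 2, 2, 3, 3, 3, 3, 3]   # toy per-frame confirmed tracks
    decisions = [should_run_detector(i, cfg, c, t)
                 for i, (c, t) in enumerate(zip(camera_counts, track_counts))]
    # Frames 0 and 4 run by schedule, frame 2 by the event trigger.
    print(decisions)  # [True, False, True, False, True, False, False, False]
```

The event trigger only adds detector runs, so in this sketch it can shorten the delay before a new object is detected without changing the baseline schedule.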

The Impact of Frame-Dropping on Performance and Energy Consumption for Multi-Object Tracking

Apr 17, 2023

Abstract: The safety of automated vehicles (AVs) relies on the representation of their environment. Consequently, state-of-the-art AVs employ potent sensor systems to achieve the best possible environment representation at all times. Although these high-performing systems achieve impressive results, they place significant demands on the processing capabilities of an AV's computational hardware and increase its energy consumption. To enable a dynamic adaptation of such perception systems to the situational perception requirements, we introduce a model-agnostic method for the scalable employment of single-frame object detection models using frame-dropping in tracking-by-detection systems. We evaluate our approach on the KITTI 3D Tracking Benchmark, showing that significant energy savings can be achieved at acceptable performance degradation, with up to a 28% reduction in energy consumption at a 6.6% decline in HOTA score.
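The frame-dropping idea can be illustrated with a minimal sketch, assuming a single object, one 2D position per detection, and a constant-velocity track that extrapolates through dropped frames. The detector is an arbitrary callable to mirror the model-agnostic claim; `ConstantVelocityTrack`, `run_with_frame_dropping` and `keep_every` are hypothetical names, and a real tracker would use a proper filter and data association.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]


class ConstantVelocityTrack:
    """Single-object track that extrapolates with constant velocity between detections."""

    def __init__(self, position: Point, t: float):
        self.position = position
        self.velocity = (0.0, 0.0)
        self._last_meas = position
        self._last_meas_t = t

    def predict(self, dt: float) -> None:
        # Dropped frame: no detection, so propagate the state forward in time.
        self.position = (self.position[0] + self.velocity[0] * dt,
                         self.position[1] + self.velocity[1] * dt)

    def update(self, meas: Point, t: float) -> None:
        # Detection frame: re-estimate velocity from the previous detection and snap to it.
        elapsed = t - self._last_meas_t
        if elapsed > 0:
            self.velocity = ((meas[0] - self._last_meas[0]) / elapsed,
                             (meas[1] - self._last_meas[1]) / elapsed)
        self.position = meas
        self._last_meas, self._last_meas_t = meas, t


def run_with_frame_dropping(detector: Callable[[int], Point], num_frames: int,
                            keep_every: int, dt: float) -> Tuple[List[Point], int]:
    """Run `detector` only on every `keep_every`-th frame; return track states and detector calls."""
    track = ConstantVelocityTrack(detector(0), 0.0)
    calls = 1
    states = [track.position]
    for k in range(1, num_frames):
        if k % keep_every == 0:
            track.update(detector(k), k * dt)
            calls += 1
        else:
            track.predict(dt)
        states.append(track.position)
    return states, calls
```

With a toy detector `lambda k: (2.0 * k, 1.0)`, `keep_every=2` and six frames, the detector is called three times instead of six. How much of that compute reduction turns into energy savings depends on the hardware.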

Identification of Threat Regions From a Dynamic Occupancy Grid Map for Situation-Aware Environment Perception

Jul 11, 2022

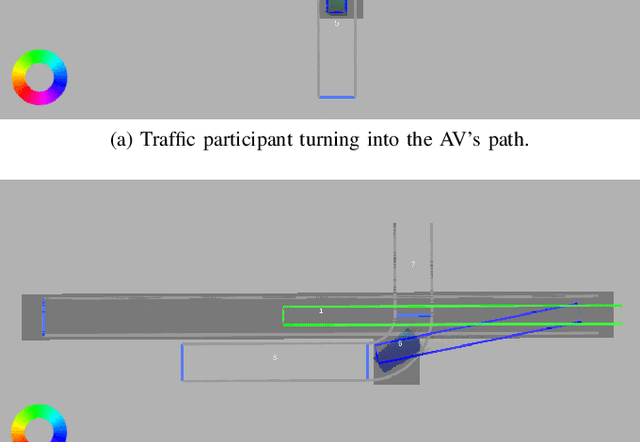

Abstract: The advance towards higher levels of automation within the field of automated driving is accompanied by increasing requirements for the operational safety of vehicles. Because computational resources are limited, trade-offs between the computational complexity of algorithms and their potential to ensure safe operation of automated vehicles are often necessary. Situation-aware environment perception is one promising example, where computational resources are distributed to regions within the perception area that are relevant for the task of the automated vehicle. While prior map knowledge is often leveraged to identify relevant regions, in this work, we present a lightweight identification of safety-relevant regions that relies solely on online information. We show that our approach enables safe vehicle operation in critical scenarios, while retaining the benefits of non-uniformly distributed resources within the environment perception.
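The abstract does not describe how threat regions are derived from the dynamic occupancy grid map. As a purely hypothetical illustration of "online information only", the sketch below flags occupied cells whose constant-velocity extrapolation enters an ego-vehicle bounding box within a time horizon. The cell format, the occupancy threshold `p_min`, the box dimensions and the horizon are all assumptions, not the authors' method.

```python
from typing import List, Sequence, Tuple

# Hypothetical cell format: (x_m, y_m, vx_mps, vy_mps, p_occupied), ego-centred coordinates.
Cell = Tuple[float, float, float, float, float]


def reaches_ego_box(x: float, y: float, vx: float, vy: float,
                    half_length: float, half_width: float, horizon_s: float) -> bool:
    """Slab test: does the constant-velocity path of (x, y) enter the ego box within horizon_s?"""
    t_enter, t_exit = 0.0, horizon_s
    for p, v, h in ((x, vx, half_length), (y, vy, half_width)):
        if v == 0.0:
            if abs(p) > h:  # never inside this slab, so never inside the box
                return False
        else:
            t1, t2 = (-h - p) / v, (h - p) / v
            t_enter = max(t_enter, min(t1, t2))
            t_exit = min(t_exit, max(t1, t2))
    return t_enter <= t_exit


def threat_cells(cells: Sequence[Cell], p_min: float = 0.5, horizon_s: float = 3.0,
                 half_length: float = 2.5, half_width: float = 1.0) -> List[int]:
    """Indices of occupied cells whose extrapolated motion reaches the ego vehicle."""
    return [i for i, (x, y, vx, vy, p) in enumerate(cells)
            if p >= p_min
            and reaches_ego_box(x, y, vx, vy, half_length, half_width, horizon_s)]
```

A cell 10 m ahead approaching at 5 m/s is flagged with a 3 s horizon but not with a 1 s horizon, so the horizon directly controls how large the resulting threat region becomes.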

Situation-Aware Environment Perception for Decentralized Automation Architectures

Jul 05, 2022

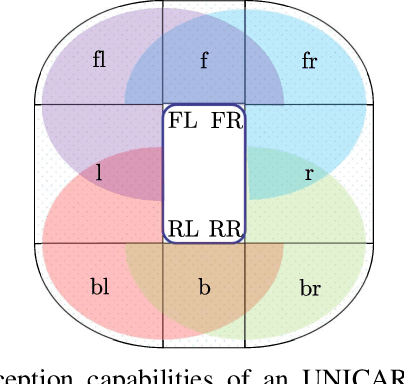

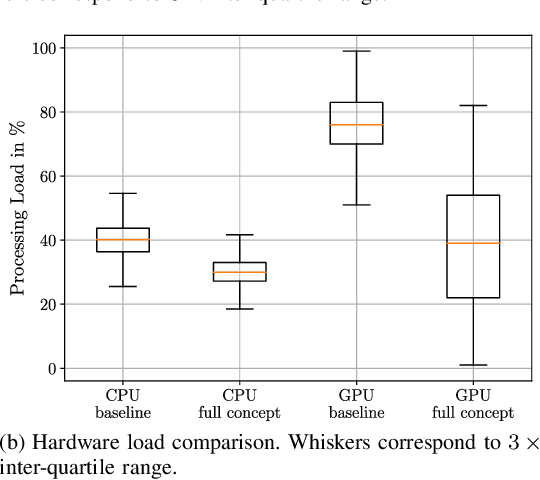

Abstract: Advances in the field of environment perception for automated agents have resulted in an ongoing increase in generated sensor data. The computational resources available to process these data are bound to become insufficient for real-time applications. Reducing the amount of data to be processed by identifying the most relevant data based on the agent's situation, often referred to as situation-awareness, has gained increasing research interest, and the importance of complementary approaches is expected to increase further in the near future. In this work, we extend the applicability range of our recently introduced concept for situation-aware environment perception to the decentralized automation architecture of the UNICARagil project. Considering the specific driving capabilities of the vehicle and using real-world data on target hardware in a post-processing manner, we estimate that the accumulated daily reduction in power consumption amounts to 36.2%. While achieving these promising results, we additionally show that scalability in data processing must be considered both in the design of software modules and in the design of functional systems if the benefits of situation-awareness are to be fully leveraged.
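The abstract reports a daily reduction in power consumption without giving the aggregation formula. One natural reading, assumed here rather than taken from the paper, is a duration-weighted energy ratio: each driving situation contributes its situation-aware power times its duration, compared against a constant full-perception baseline. The function name and inputs are hypothetical, and the test values are illustrative only, not results from the paper.

```python
from typing import Iterable, Tuple


def daily_relative_reduction(baseline_power_w: float,
                             segments: Iterable[Tuple[float, float]]) -> float:
    """Relative energy reduction over a day of operation.

    baseline_power_w: power of the always-full perception system (assumed constant).
    segments: (duration_h, situation_aware_power_w) for each driving situation.
    """
    segments = list(segments)
    total_h = sum(d for d, _ in segments)
    if total_h <= 0:
        raise ValueError("need a positive total duration")
    energy_baseline_wh = baseline_power_w * total_h
    energy_aware_wh = sum(d * p for d, p in segments)
    return 1.0 - energy_aware_wh / energy_baseline_wh
```

Weighting by duration matters because a large saving in a rarely encountered situation contributes little to the daily figure.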

Situation-Aware Environment Perception Using a Multi-Layer Attention Map

Dec 02, 2021

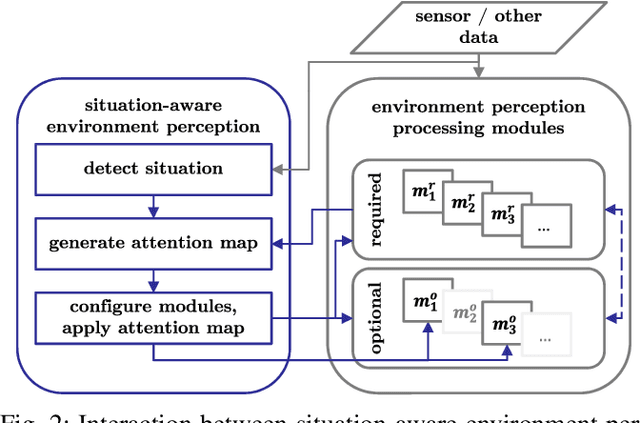

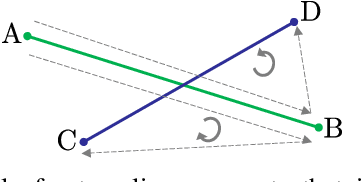

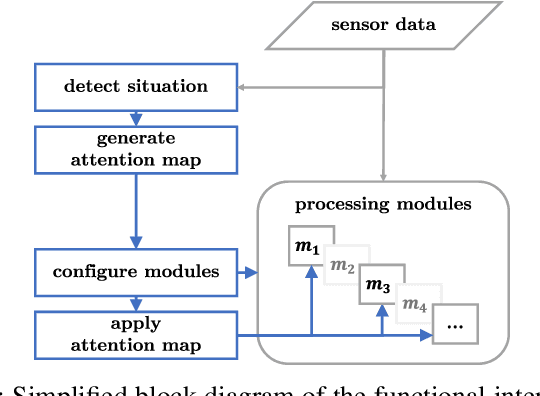

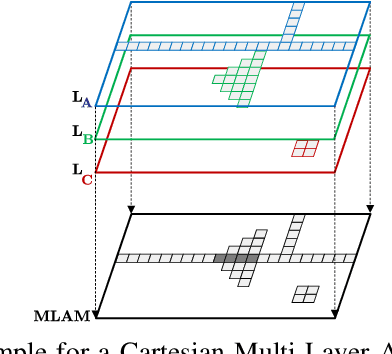

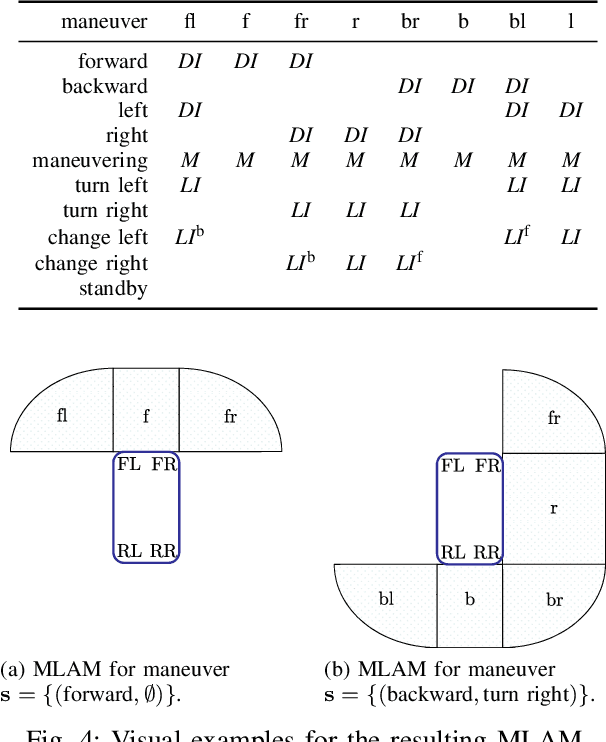

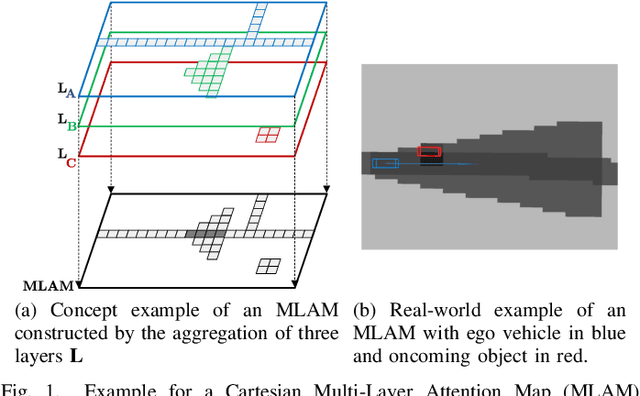

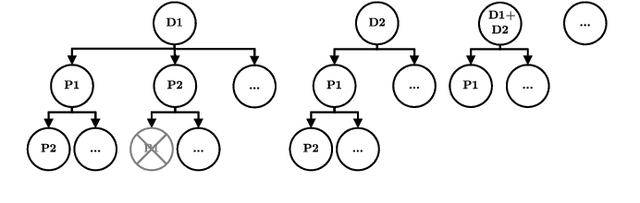

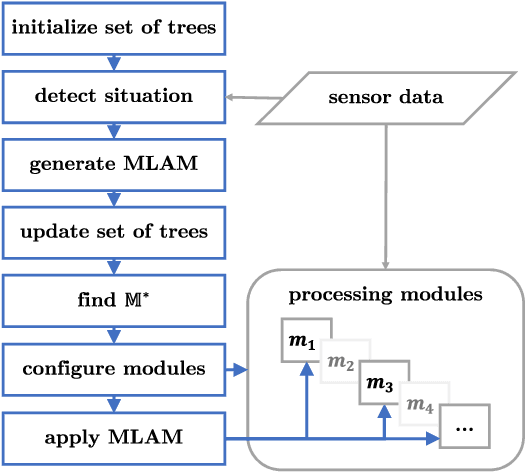

Abstract: Within the field of automated driving, a clear trend in environment perception is towards more sensors, higher redundancy, and an overall increase in computational power. This is mainly driven by the paradigm of perceiving the entire environment as well as possible at all times. However, due to the ongoing rise in functional complexity, compromises have to be considered to ensure real-time capabilities of the perception system. In this work, we introduce a concept for situation-aware environment perception that directs resource allocation towards processing relevant areas within the data and towards employing only a subset of functional modules for environment perception, if sufficient for the current driving task. Specifically, we propose to evaluate the context of an automated vehicle to derive a multi-layer attention map (MLAM) that defines relevant areas. Using this MLAM, the optimal set of active functional modules is configured dynamically, and intra-module processing of only relevant data is enforced. We outline the feasibility of applying our concept using real-world data in a straightforward implementation for our system at hand. While retaining overall functionality, we achieve a 59% reduction in accumulated processing time.
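The two uses of the MLAM described above, activating only the modules that are needed and restricting each active module to relevant data, can be sketched as follows. This is a hypothetical illustration: the layer names, the module-to-layer mapping, the grid-cell representation and both function names are assumptions, not the authors' implementation.

```python
from typing import Dict, Iterable, List, Set, Tuple

Cell = Tuple[int, int]


def configure_modules(mlam: Dict[str, Set[Cell]],
                      module_layers: Dict[str, List[str]]) -> Dict[str, Set[Cell]]:
    """Activate a module only if one of its attention layers marks any cell as relevant."""
    active: Dict[str, Set[Cell]] = {}
    for module, layers in module_layers.items():
        # Union of the relevant cells over all layers this module depends on.
        cells = set().union(*(mlam.get(layer, set()) for layer in layers))
        if cells:
            active[module] = cells
    return active


def filter_points(points: Iterable[Tuple[float, float]], cells: Set[Cell],
                  cell_size_m: float) -> List[Tuple[float, float]]:
    """Intra-module filtering: keep only measurements that fall into relevant cells."""
    return [p for p in points
            if (int(p[0] // cell_size_m), int(p[1] // cell_size_m)) in cells]


if __name__ == "__main__":
    mlam = {"road": {(0, 0), (0, 1)}, "crosswalk": set(), "intersection": {(1, 1)}}
    module_layers = {"vehicle_detection": ["road", "intersection"],
                     "pedestrian_detection": ["crosswalk"]}
    # Only vehicle_detection is active; pedestrian_detection has no relevant cells.
    print(sorted(configure_modules(mlam, module_layers)))  # ['vehicle_detection']
```

Both savings from the abstract appear here in miniature: inactive modules are skipped entirely, and active ones only see the measurements inside their relevant cells.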

Anomaly Detection in Radar Data Using PointNets

Sep 20, 2021

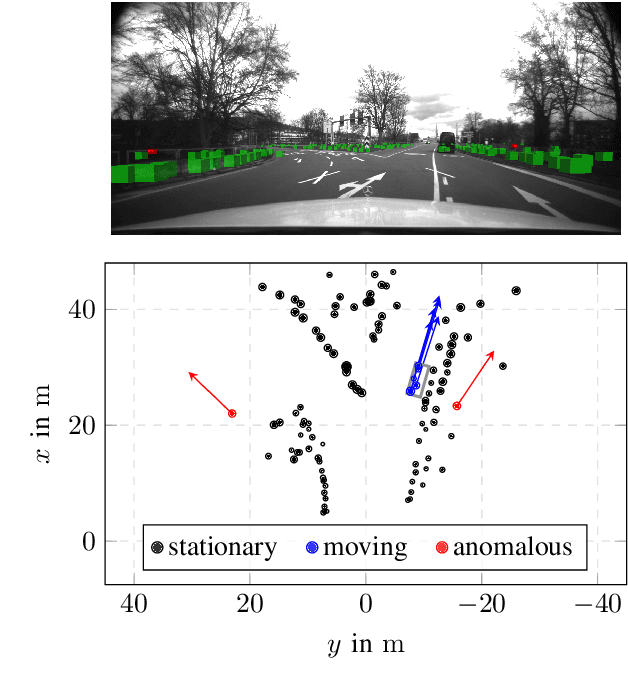

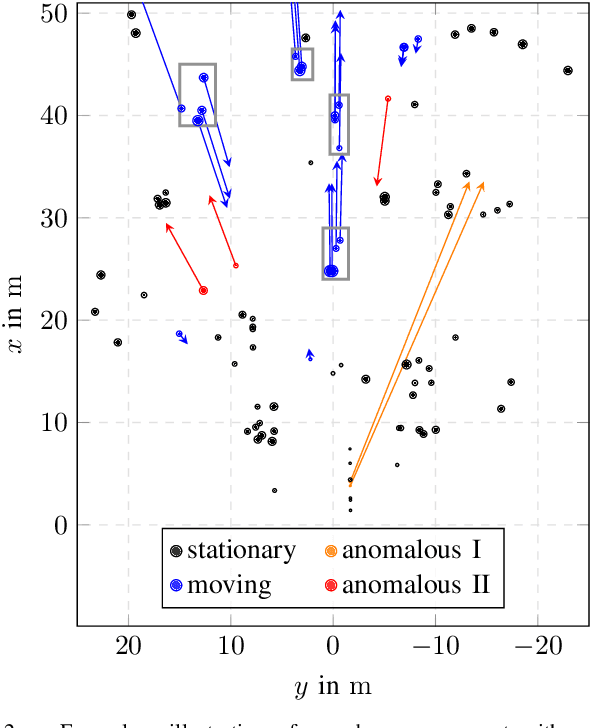

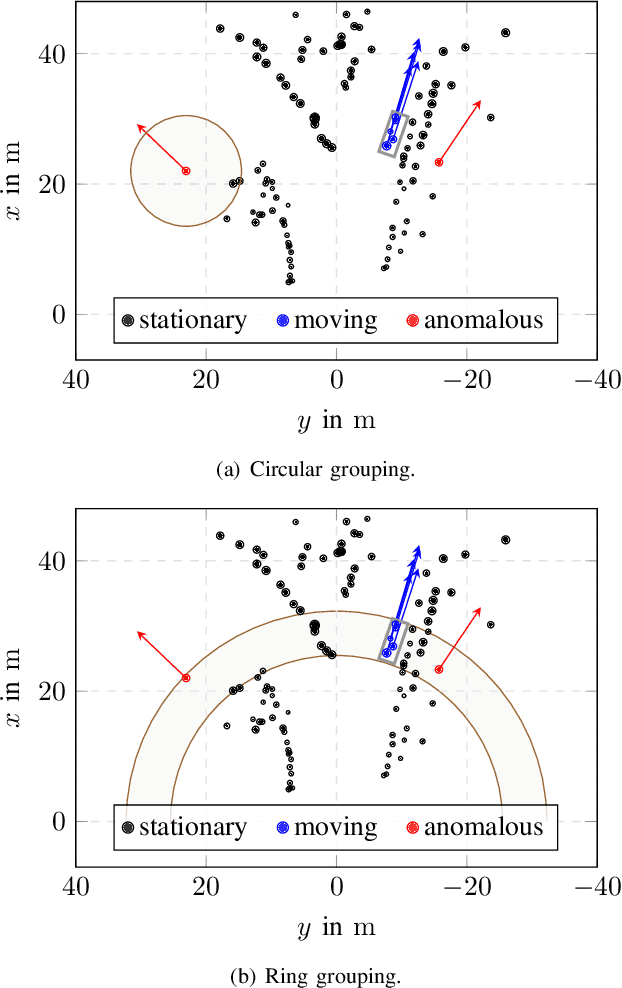

Abstract: For autonomous driving, radar is an important sensor type. On the one hand, radar offers a direct measurement of the radial velocity of targets in the environment. On the other hand, radar sensors are known in the literature for their robustness against several kinds of adverse weather conditions. However, radar is susceptible to ghost targets and clutter, which can arise from various sources, e.g., reflective surfaces in the environment. Ghost targets, for instance, can lead to erroneous object detections. It is therefore desirable to identify anomalous targets in radar data as early as possible. In this work, we present an approach based on PointNets to detect anomalous radar targets. Modifying the PointNet architecture for our task, we develop a novel grouping variant that contributes to a multi-form grouping module. Our method is evaluated on a real-world dataset in urban scenarios and shows promising results for the detection of anomalous radar targets.
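The abstract does not detail the novel grouping variant or the multi-form grouping module, so the sketch below shows only the standard PointNet++-style ball-query grouping that such modules typically build on; it is not the authors' variant. It assumes 2D radar target positions, and `ball_query`, `radius` and `max_samples` are illustrative names. Short groups are padded by repeating the first found index, as in the PointNet++ reference implementation.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def ball_query(points: Sequence[Point], centroids: Sequence[Point],
               radius: float, max_samples: int) -> List[List[int]]:
    """For each centroid, return up to max_samples indices of points within radius."""
    r2 = radius * radius
    groups: List[List[int]] = []
    for cx, cy in centroids:
        idx = [i for i, (x, y) in enumerate(points)
               if (x - cx) ** 2 + (y - cy) ** 2 <= r2][:max_samples]
        if idx:
            # Pad to a fixed group size by repeating the first neighbour.
            idx += [idx[0]] * (max_samples - len(idx))
        groups.append(idx)
    return groups
```

Fixed-size groups let a shared per-point network process every neighbourhood in one batch. In PointNet++ the centroids are sampled from the points themselves, so groups are never empty in practice.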
