Marvin Klimke

Towards Cooperative Maneuver Planning in Mixed Traffic at Urban Intersections

Mar 25, 2024

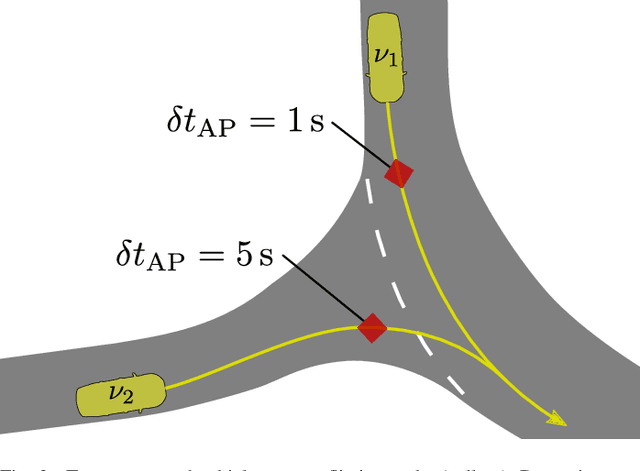

Abstract: Connected automated driving promises a significant improvement of traffic efficiency and safety on highways and in urban areas. Apart from sharing of awareness and perception information over wireless communication links, cooperative maneuver planning may facilitate active guidance of connected automated vehicles at urban intersections. Research in automatic intersection management has put forth a large body of work that mostly employs rule-based or optimization-based approaches, primarily in fully automated simulated environments. In this work, we present two cooperative planning approaches that are capable of handling mixed traffic, i.e., the road being shared by automated vehicles and regular vehicles driven by humans. Firstly, we propose an optimization-based planner trained on real driving data that cyclically selects the most efficient out of multiple predicted coordinated maneuvers. Additionally, we present a cooperative planning approach based on graph-based reinforcement learning, which overcomes the lack of ground truth data for cooperative maneuvers. We present evaluation results of both cooperative planners in high-fidelity simulation and real-world traffic. Simulative experiments in fully automated traffic and mixed traffic show that cooperative maneuver planning leads to less delay due to interaction and a reduced number of stops. In real-world experiments with three prototype connected automated vehicles in public traffic, both planners demonstrate their ability to perform efficient cooperative maneuvers.

Integration of Reinforcement Learning Based Behavior Planning With Sampling Based Motion Planning for Automated Driving

Apr 17, 2023

Abstract: Reinforcement learning has received high research interest for developing planning approaches in automated driving. Most prior works consider the end-to-end planning task that yields direct control commands and rarely deploy their algorithm to real vehicles. In this work, we propose a method to employ a trained deep reinforcement learning policy for dedicated high-level behavior planning. By populating an abstract objective interface, established motion planning algorithms can be leveraged, which derive smooth and drivable trajectories. Given the current environment model, we propose to use a built-in simulator to predict the traffic scene for a given horizon into the future. The behavior of automated vehicles in mixed traffic is determined by querying the learned policy. To the best of our knowledge, this work is the first to apply deep reinforcement learning in this manner, and as such lacks a state-of-the-art benchmark. Thus, we validate the proposed approach by comparing an idealistic single-shot plan with cyclic replanning through the learned policy. Experiments with a real testing vehicle on proving grounds demonstrate the potential of our approach to shrink the simulation-to-real-world gap of deep reinforcement learning based planning approaches. Additional simulative analyses reveal that more complex multi-agent maneuvers can be managed by employing the cyclic replanning approach.

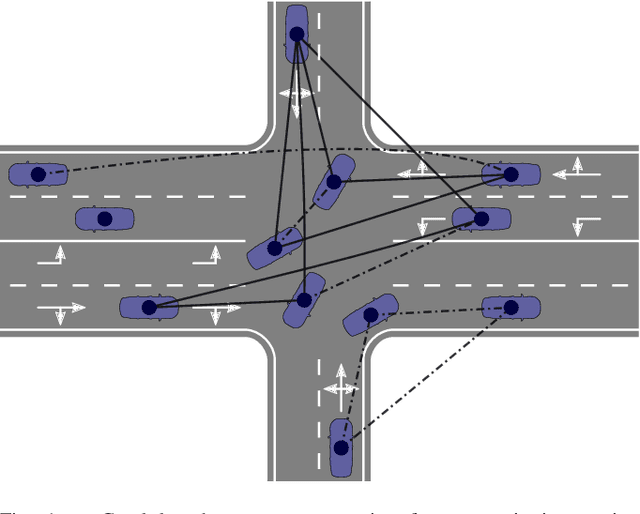

Automatic Intersection Management in Mixed Traffic Using Reinforcement Learning and Graph Neural Networks

Jan 30, 2023

Abstract: Connected automated driving has the potential to significantly improve urban traffic efficiency, e.g., by alleviating issues due to occlusion. Cooperative behavior planning can be employed to jointly optimize the motion of multiple vehicles. Most existing approaches to automatic intersection management, however, only consider fully automated traffic. In practice, mixed traffic, i.e., the simultaneous road usage by automated and human-driven vehicles, will be prevalent. The present work proposes to leverage reinforcement learning and a graph-based scene representation for cooperative multi-agent planning. We build upon our previous works that showed the applicability of such machine learning methods to fully automated traffic. The scene representation is extended for mixed traffic and considers uncertainty in the human drivers' intentions. In the simulation-based evaluation, we model measurement uncertainties through noise processes that are tuned using real-world data. The paper evaluates the proposed method against an enhanced first-in-first-out scheme, our baseline for mixed traffic management. With an increasing share of automated vehicles, the learned planner significantly increases the vehicle throughput and reduces the delay due to interaction. Non-automated vehicles benefit virtually alike.

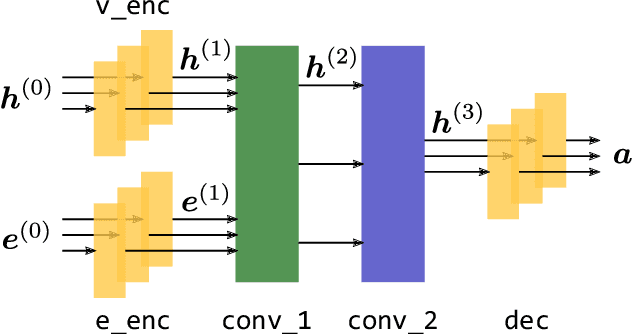

An Enhanced Graph Representation for Machine Learning Based Automatic Intersection Management

Jul 18, 2022

Abstract: The improvement of traffic efficiency at urban intersections receives strong research interest in the field of automated intersection management. So far, mostly non-learning algorithms like reservation or optimization-based ones have been proposed to solve the underlying multi-agent planning problem. At the same time, automated driving functions for a single ego vehicle are increasingly implemented using machine learning methods. In this work, we build upon a previously presented graph-based scene representation and graph neural network to approach the problem using reinforcement learning. The scene representation is improved in key aspects by using edge features in addition to the existing node features for the vehicles. This leads to an increased representation quality that is leveraged by an updated network architecture. The paper provides an in-depth evaluation of the proposed method against baselines that are commonly used in automatic intersection management. Compared to a traditional signalized intersection and an enhanced first-in-first-out scheme, a significant reduction of induced delay is observed at varying traffic densities. Finally, the generalization capability of the graph-based representation is evaluated by testing the policy on intersection layouts not seen during training. The model generalizes virtually without restrictions to smaller intersection layouts and within certain limits to larger ones.
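The idea of a scene graph with both node and edge features can be illustrated with a minimal sketch. Everything specific here is an assumption for illustration and is not taken from the paper: the `VehicleNode` fields, the choice of speed as the node feature, distance and relative speed as edge features, and the `max_range` cutoff are all hypothetical.

```python
import math
from dataclasses import dataclass
from itertools import permutations


@dataclass
class VehicleNode:
    """Hypothetical per-vehicle state; the paper's actual features may differ."""
    vehicle_id: str
    x: float      # position in metres
    y: float
    speed: float  # metres per second


def build_scene_graph(vehicles, max_range=50.0):
    """Return (nodes, edges) for a directed scene graph.

    nodes maps vehicle_id -> node feature tuple (here: speed only).
    edges maps (source_id, target_id) -> edge feature tuple
    (here: distance and relative speed), for pairs within max_range.
    """
    nodes = {v.vehicle_id: (v.speed,) for v in vehicles}
    edges = {}
    for a, b in permutations(vehicles, 2):
        distance = math.hypot(b.x - a.x, b.y - a.y)
        if distance <= max_range:
            edges[(a.vehicle_id, b.vehicle_id)] = (distance, b.speed - a.speed)
    return nodes, edges


vehicles = [
    VehicleNode("a", 0.0, 0.0, 5.0),
    VehicleNode("b", 3.0, 4.0, 7.0),
    VehicleNode("c", 100.0, 0.0, 0.0),  # too far away to be connected
]
nodes, edges = build_scene_graph(vehicles)
```

A graph neural network would consume such node and edge features and produce one output per node, i.e., per vehicle; that part is omitted here.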

Cooperative Behavioral Planning for Automated Driving using Graph Neural Networks

Feb 23, 2022

Abstract: Urban intersections are prone to delays and inefficiencies due to static precedence rules and occlusions limiting the view on prioritized traffic. Existing approaches to improve traffic flow, widely known as automatic intersection management systems, are mostly based on non-learning reservation schemes or optimization algorithms. Machine learning-based techniques show promising results in planning for a single ego vehicle. This work proposes to leverage machine learning algorithms to optimize traffic flow at urban intersections by jointly planning for multiple vehicles. Learning-based behavior planning poses several challenges, demanding a suitable input and output representation as well as large amounts of ground-truth data. We address the former issue by using a flexible graph-based input representation accompanied by a graph neural network. This allows the scene to be encoded efficiently and inherently provides individual outputs for all involved vehicles. To learn a sensible policy, without relying on the imitation of expert demonstrations, the cooperative planning task is phrased as a reinforcement learning problem. We train and evaluate the proposed method in an open-source simulation environment for decision making in automated driving. Compared to a first-in-first-out scheme and traffic governed by static priority rules, the learned planner shows a significant gain in flow rate, while reducing the number of induced stops. In addition to synthetic simulations, the approach is also evaluated based on real-world traffic data taken from the publicly available inD dataset.
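To make the first-in-first-out baseline concrete, the following is a deliberately simplified sketch, not the scheme evaluated in the paper. It assumes that only one vehicle may occupy the intersection at a time and that every crossing takes a fixed `crossing_time`; the `Vehicle` class and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Vehicle:
    """Hypothetical vehicle record with its arrival time at the intersection."""
    vehicle_id: str
    arrival_time: float  # seconds


def fifo_schedule(vehicles, crossing_time=2.0):
    """Grant the intersection to vehicles strictly in order of arrival.

    Returns a dict mapping vehicle_id -> (entry_time, delay), where delay is
    the waiting time caused by earlier vehicles still crossing.
    """
    schedule = {}
    intersection_free_at = 0.0
    for vehicle in sorted(vehicles, key=lambda v: v.arrival_time):
        entry_time = max(vehicle.arrival_time, intersection_free_at)
        schedule[vehicle.vehicle_id] = (entry_time, entry_time - vehicle.arrival_time)
        intersection_free_at = entry_time + crossing_time
    return schedule


schedule = fifo_schedule(
    [Vehicle("a", 0.0), Vehicle("b", 1.0), Vehicle("c", 5.0)]
)
```

In this toy run, vehicle `b` arrives while `a` is still crossing and has to wait, whereas `c` arrives at a free intersection. A learned cooperative planner aims to reduce exactly this kind of interaction-induced delay.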

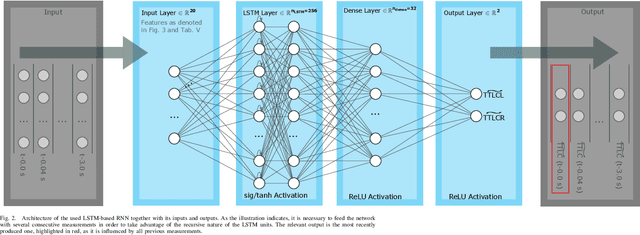

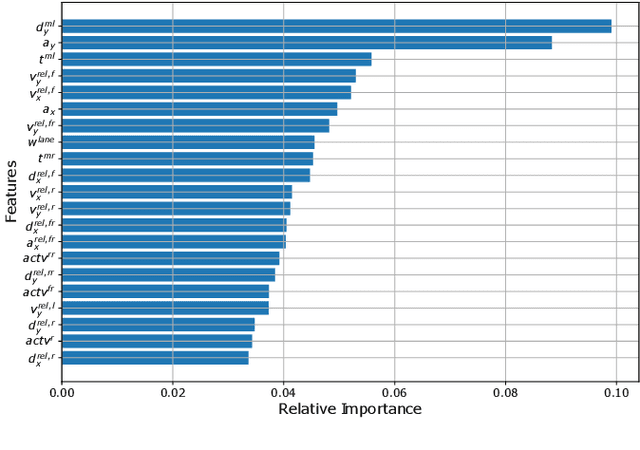

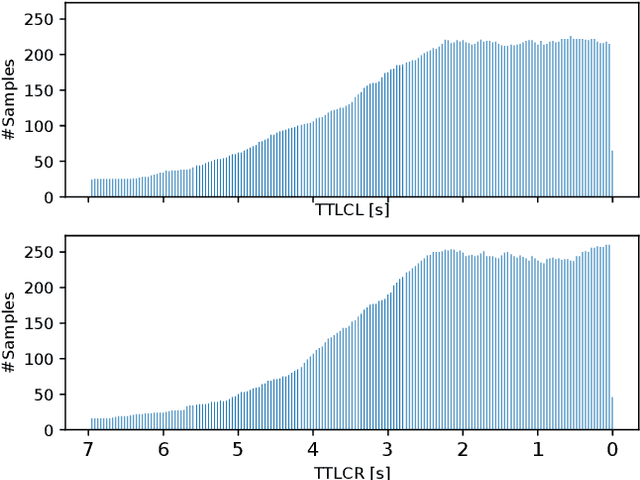

Predicting the Time Until a Vehicle Changes the Lane Using LSTM-based Recurrent Neural Networks

Feb 03, 2021

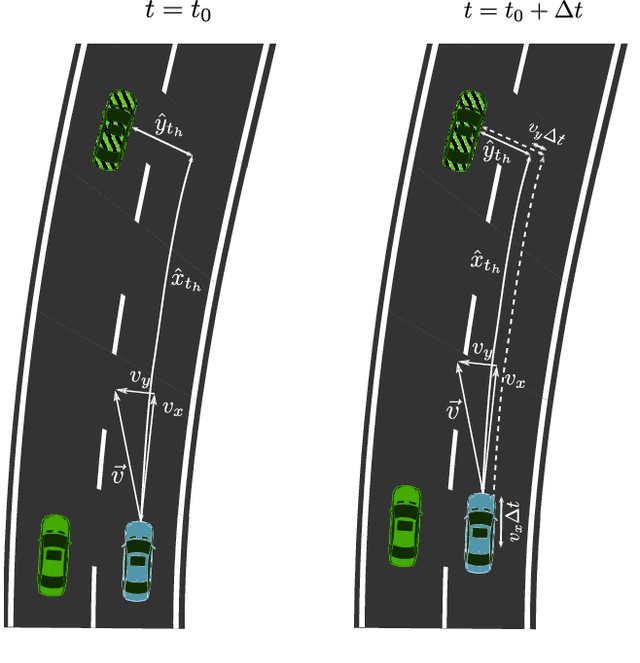

Abstract: To plan safe and comfortable trajectories for automated vehicles on highways, accurate predictions of traffic situations are needed. So far, a lot of research effort has been spent on detecting lane change maneuvers rather than on estimating the point in time a lane change actually happens. In practice, however, this temporal information might be even more useful. This paper deals with the development of a system that accurately predicts the time to the next lane change of surrounding vehicles on highways using long short-term memory-based recurrent neural networks. An extensive evaluation based on a large real-world data set shows that our approach is able to make reliable predictions, even in the most challenging situations, with a root mean squared error around 0.7 seconds. As early as 3.5 seconds prior to lane changes, the predictions become highly accurate, showing a median error of less than 0.25 seconds. In summary, this article forms a fundamental step towards downstream, highly accurate position predictions.
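The two error measures named in the abstract can be computed as in the short sketch below. It assumes that "median error" refers to the median absolute error, which the abstract does not state explicitly, and the sample values are made up purely for illustration.

```python
import math
import statistics


def rmse(predicted, actual):
    """Root mean squared error between predicted and actual times (seconds)."""
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def median_abs_error(predicted, actual):
    """Median of the absolute prediction errors (seconds)."""
    return statistics.median(abs(p - a) for p, a in zip(predicted, actual))


# Made-up time-to-lane-change values in seconds, not data from the paper.
predicted_times = [4.0, 2.0]
actual_times = [1.0, 6.0]

rmse_value = rmse(predicted_times, actual_times)
median_value = median_abs_error(predicted_times, actual_times)
```

Because the errors are squared before averaging, the RMSE is dominated by large outliers, while the median reflects the typical case. This is one reason the two figures reported in an evaluation can differ noticeably.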

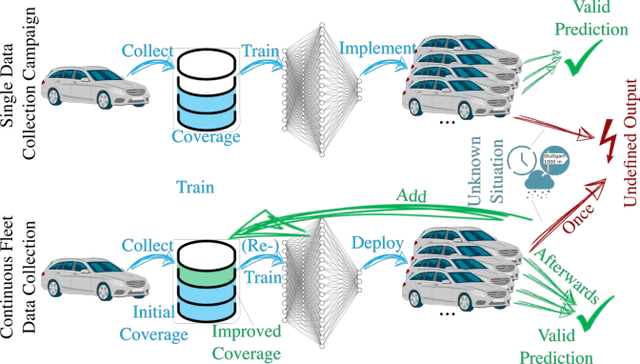

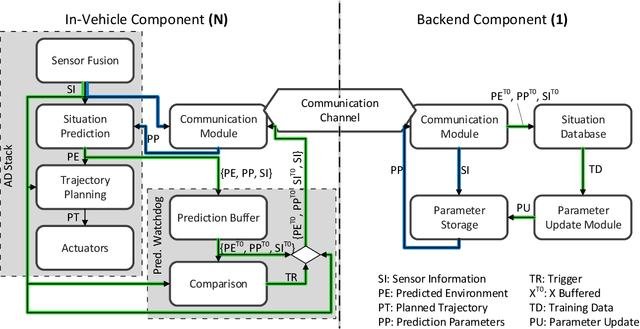

A Fleet Learning Architecture for Enhanced Behavior Predictions during Challenging External Conditions

Sep 24, 2020

Abstract: Already today, driver assistance systems help to make daily traffic more comfortable and safer. However, there are still situations that are quite rare but are hard to handle at the same time. In order to cope with these situations and to bridge the gap towards fully automated driving, it becomes necessary not only to collect enormous amounts of data but to collect the right data. This data can be used to develop and validate the systems through machine learning and simulation pipelines. Along these lines, this paper presents a fleet learning-based architecture that enables continuous improvements of systems predicting the movement of surrounding traffic participants. Moreover, the presented architecture is applied to a testing vehicle in order to prove the fundamental feasibility of the system. Finally, it is shown that the system collects meaningful data which are helpful to improve the underlying prediction systems.
