Markus Hahn

RadarScenes: A Real-World Radar Point Cloud Data Set for Automotive Applications

Apr 06, 2021

Abstract: A new automotive radar data set with measurements and point-wise annotations from more than four hours of driving is presented. Data provided by four series radar sensors mounted on one test vehicle were recorded and the individual detections of dynamic objects were manually grouped to clusters and labeled afterwards. The purpose of this data set is to enable the development of novel (machine learning-based) radar perception algorithms with the focus on moving road users. Images of the recorded sequences were captured using a documentary camera. For the evaluation of future object detection and classification algorithms, proposals for score calculation are made so that researchers can evaluate their algorithms on a common basis. Additional information as well as download instructions can be found on the website of the data set: www.radar-scenes.com.

Motion Classification and Height Estimation of Pedestrians Using Sparse Radar Data

Mar 03, 2021

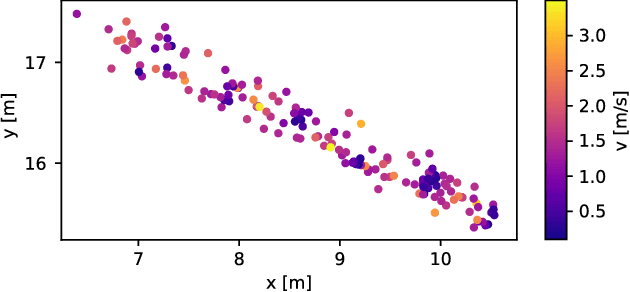

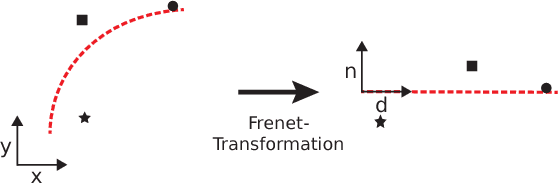

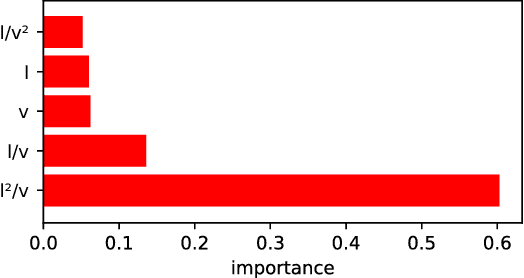

Abstract: A complete overview of the surrounding vehicle environment is important for driver assistance systems and highly autonomous driving. Fusing results of multiple sensor types like camera, radar and lidar is crucial for increasing the robustness. The detection and classification of objects like cars, bicycles or pedestrians has been analyzed in the past for many sensor types. Beyond that, it is also helpful to refine these classes and distinguish for example between different pedestrian types or activities. This task is usually performed on camera data, though recent developments are based on radar spectrograms. However, for most automotive radar systems, it is only possible to obtain radar targets instead of the original spectrograms. This work demonstrates that it is possible to estimate the body height of walking pedestrians using 2D radar targets. Furthermore, different pedestrian motion types are classified.

* 6 pages, 6 figures, 1 table
