Vincent Fremont

HEUDIASYC

seg_3D_by_PC2D: Multi-View Projection for Domain Generalization and Adaptation in 3D Semantic Segmentation

May 21, 2025

Abstract:3D semantic segmentation plays a pivotal role in autonomous driving and road infrastructure analysis, yet state-of-the-art 3D models are prone to severe domain shift when deployed across different datasets. We propose a novel multi-view projection framework that excels in both domain generalization (DG) and unsupervised domain adaptation (UDA). Our approach first aligns Lidar scans into coherent 3D scenes and renders them from multiple virtual camera poses to create a large-scale synthetic 2D dataset (PC2D). We then use it to train a 2D segmentation model in-domain. During inference, the model processes hundreds of views per scene; the resulting logits are back-projected to 3D with an occlusion-aware voting scheme to generate final point-wise labels. Our framework is modular and enables extensive exploration of key design parameters, such as view generation optimization (VGO), visualization modality optimization (MODO), and 2D model choice. We evaluate on the nuScenes and SemanticKITTI datasets under both the DG and UDA settings. We achieve state-of-the-art results in UDA and close to state-of-the-art in DG, with particularly large gains on large, static classes. Our code and dataset generation tools will be publicly available at https://github.com/andrewcaunes/ia4markings
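The back-projection and voting step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `vote_point_labels`, the input layout, and the choice of summing raw class scores are all assumptions made for illustration. Occlusion handling is assumed to have happened upstream, so each view only lists the points that are actually visible in it.

```python
def vote_point_labels(num_points, num_classes, views):
    """Sum per-view class scores onto 3D points, then take the argmax per point.

    views: iterable of (visible_point_ids, scores) pairs (hypothetical layout).
    scores[i] holds the class scores predicted at the pixel onto which point
    visible_point_ids[i] projects. Points occluded in a view are simply
    absent from that view's visible_point_ids.
    """
    totals = [[0.0] * num_classes for _ in range(num_points)]
    seen = [False] * num_points
    for visible_point_ids, scores in views:
        for pid, point_scores in zip(visible_point_ids, scores):
            for c in range(num_classes):
                totals[pid][c] += point_scores[c]
            seen[pid] = True
    labels = []
    for p in range(num_points):
        if seen[p]:
            labels.append(max(range(num_classes), key=lambda c: totals[p][c]))
        else:
            labels.append(-1)  # point was never visible in any view
    return labels


# Two toy views over four points and two classes.
views = [
    ([0, 1], [[2.0, 0.5], [0.1, 0.3]]),
    ([1, 2], [[0.0, 1.0], [1.5, 0.2]]),
]
labels = vote_point_labels(4, 2, views)
```

Summing scores lets confident views outweigh uncertain ones; a majority vote over per-view argmax labels would be an equally plausible variant.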

Mixed Signals: A Diverse Point Cloud Dataset for Heterogeneous LiDAR V2X Collaboration

Feb 19, 2025

Abstract:Vehicle-to-everything (V2X) collaborative perception has emerged as a promising solution to address the limitations of single-vehicle perception systems. However, existing V2X datasets are limited in scope, diversity, and quality. To address these gaps, we present Mixed Signals, a comprehensive V2X dataset featuring 45.1k point clouds and 240.6k bounding boxes collected from three connected autonomous vehicles (CAVs) equipped with two different types of LiDAR sensors, plus a roadside unit with dual LiDARs. Our dataset provides precisely aligned point clouds and bounding box annotations across 10 classes, ensuring reliable data for perception training. We provide detailed statistical analysis on the quality of our dataset and extensively benchmark existing V2X methods on it. The Mixed Signals V2X Dataset is one of the highest-quality, large-scale datasets publicly available for V2X perception research. Details are available on the website: https://mixedsignalsdataset.cs.cornell.edu/

Collaborative Active SLAM: Synchronous and Asynchronous Coordination Among Agents

Oct 03, 2023

Abstract:In the realm of autonomous robotics, a critical challenge lies in developing robust solutions for Active Collaborative SLAM, wherein multiple robots must collaboratively explore and map an unknown environment while intelligently coordinating their movements and sensor data acquisitions. To this end, we present two approaches for coordinating a system of multiple robots performing Active Collaborative SLAM (AC-SLAM) for environmental exploration. Both coordination approaches, synchronous and asynchronous, implement a methodology by which the central server prioritizes robot goal assignments. We also present a method to efficiently spread the robots for maximum exploration while keeping SLAM uncertainty low. Both coordination approaches were evaluated through simulation on publicly available datasets, obtaining promising results.

Active SLAM: A Review On Last Decade

Dec 22, 2022

Abstract:This article presents a novel review of Active SLAM (A-SLAM) research conducted in the last decade. We discuss the formulation, application, and methodology applied in A-SLAM for trajectory generation and control action selection using information-theory-based approaches. Our extensive qualitative and quantitative analysis highlights the approaches, scenarios, configurations, types of robots, sensor types, dataset usage, and path planning approaches of A-SLAM research. We conclude by presenting the limitations and proposing future research possibilities. We believe that this survey will be helpful to researchers in understanding the various methods and techniques applied to A-SLAM formulation.

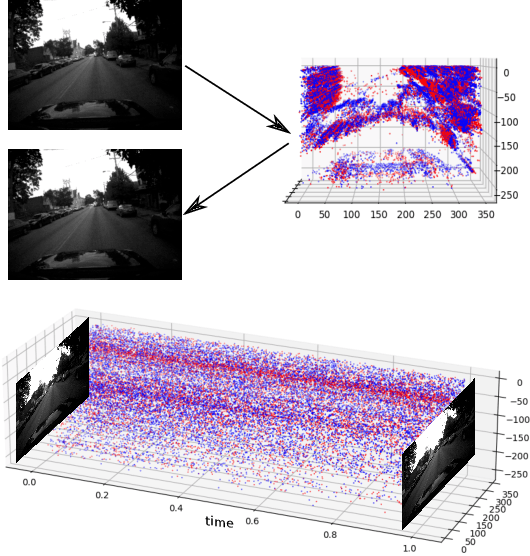

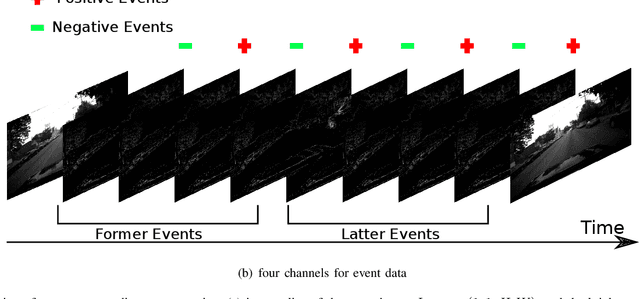

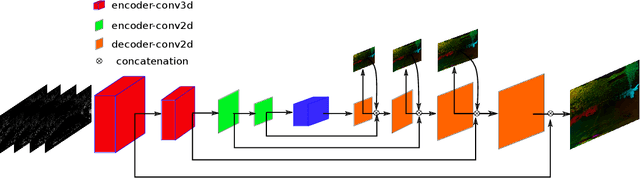

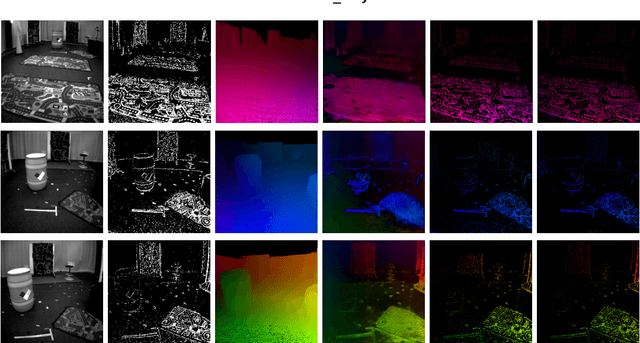

3D-FlowNet: Event-based optical flow estimation with 3D representation

Jan 28, 2022

Abstract:Event-based cameras can overcome the limitations of frame-based cameras for important tasks such as high-speed motion detection during self-driving car navigation in low-illumination conditions. The event cameras' high temporal resolution and high dynamic range allow them to work in fast-motion and extreme-light scenarios. However, conventional computer vision methods, such as Deep Neural Networks, are not well adapted to work with event data, as they are asynchronous and discrete. Moreover, the traditional 2D-encoding representation methods for event data sacrifice the time resolution. In this paper, we first improve the 2D-encoding representation by expanding it into three dimensions to better preserve the temporal distribution of the events. We then propose 3D-FlowNet, a novel network architecture that can process the 3D input representation and output optical flow estimations according to the new encoding methods. A self-supervised training strategy is adopted to compensate for the lack of labeled datasets for the event-based camera. Finally, the proposed network is trained and evaluated with the Multi-Vehicle Stereo Event Camera (MVSEC) dataset. The results show that our 3D-FlowNet outperforms state-of-the-art approaches with fewer training epochs (30 compared to 100 for Spike-FlowNet).
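To make the idea of a three-dimensional event encoding concrete, here is a minimal sketch that bins events into temporal slices so that timing information is not collapsed into a single 2D image. This is a generic voxel-grid style encoding chosen for illustration, not necessarily the representation used by 3D-FlowNet. The function name, the `(x, y, t, polarity)` event layout, and the signed-count accumulation are assumptions.

```python
def events_to_voxel_grid(events, width, height, num_bins):
    """Accumulate events into a [num_bins][height][width] grid of signed counts.

    events: list of (x, y, t, polarity) tuples (hypothetical layout), with
    polarity > 0 for brightness increases and <= 0 for decreases.
    """
    grid = [[[0] * width for _ in range(height)] for _ in range(num_bins)]
    if not events:
        return grid
    t_start = min(e[2] for e in events)
    t_end = max(e[2] for e in events)
    span = (t_end - t_start) or 1.0  # avoid division by zero for a single timestamp
    for x, y, t, polarity in events:
        # Map the timestamp to a temporal bin; the last event falls in the last bin.
        b = min(int((t - t_start) / span * num_bins), num_bins - 1)
        grid[b][y][x] += 1 if polarity > 0 else -1
    return grid


events = [(0, 0, 0.0, 1), (1, 0, 0.4, -1), (0, 0, 0.9, 1), (1, 1, 1.0, 1)]
grid = events_to_voxel_grid(events, width=2, height=2, num_bins=2)
```

A plain 2D encoding would merge the two events at pixel (0, 0) into one count; here they land in different temporal bins, which is the kind of temporal distribution the abstract aims to preserve.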

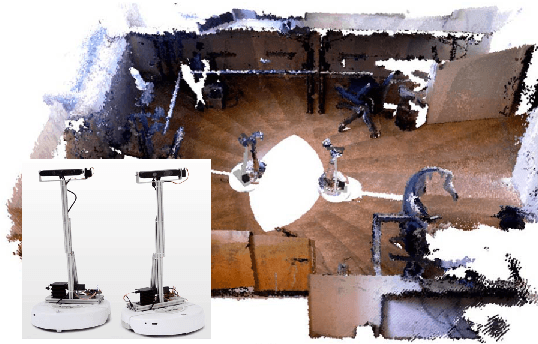

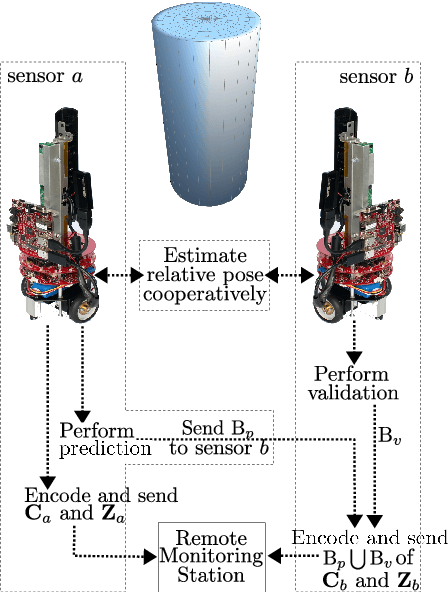

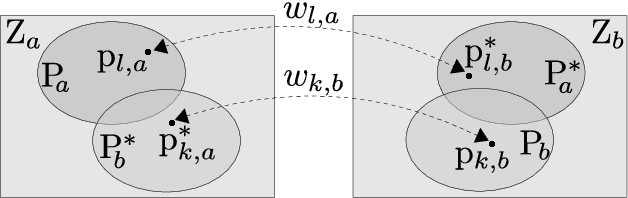

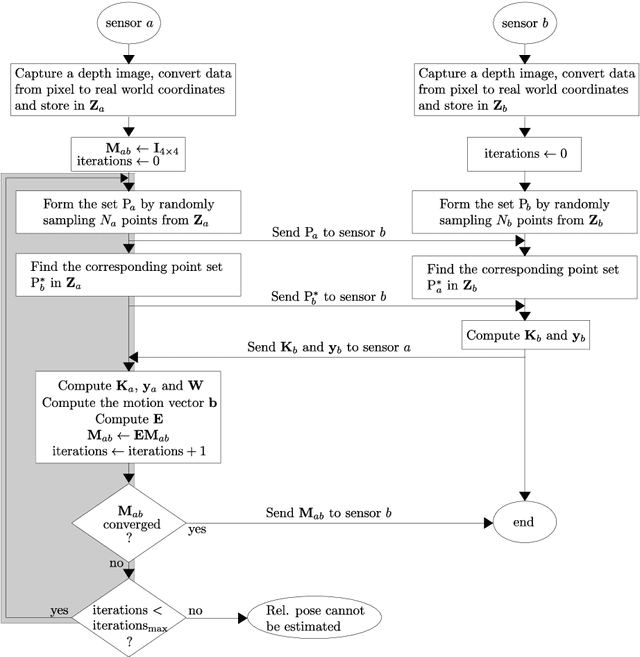

Relative Pose Based Redundancy Removal: Collaborative RGB-D Data Transmission in Mobile Visual Sensor Networks

Feb 27, 2018

Abstract:The Relative Pose based Redundancy Removal (RPRR) scheme is presented, which has been designed for mobile RGB-D sensor networks operating under bandwidth-constrained operational scenarios. Participating sensor nodes detect redundant visual and depth information and prevent its transmission, leading to a significant improvement in wireless channel usage efficiency and power savings. Experimental results show that wireless channel utilization is improved by 250% and battery consumption is halved when the RPRR scheme is used instead of sending the sensor images independently.

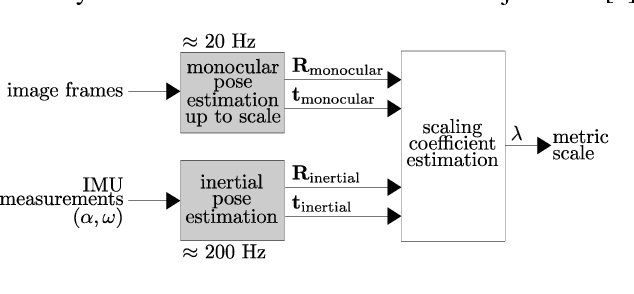

A Loosely-Coupled Approach for Metric Scale Estimation in Monocular Vision-Inertial Systems

Oct 09, 2017

Abstract:In monocular vision systems, lack of knowledge about metric distances caused by the inherent scale ambiguity can be a strong limitation for some applications. We offer a method for fusing inertial measurements with monocular odometry or tracking to estimate metric distances in inertial-monocular systems and to increase the rate of pose estimates. Because the fusion is performed in a loosely-coupled manner, each input block can easily be replaced with an alternative of one's choice, which makes our method quite flexible. We tested our method using the ORB-SLAM algorithm for the monocular tracking input and Euler forward integration to process the inertial measurements. We chose sets of data recorded on UAVs to design a suitable system for flying robots.
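The two ingredients named in this abstract, forward Euler integration of inertial data and recovery of a metric scale for monocular tracking, can be sketched as follows. The abstract does not specify the estimator, so the closed-form least-squares scale below is a stand-in chosen for illustration. The function names are hypothetical, and the accelerations are assumed to be already gravity-compensated and expressed in the world frame.

```python
def euler_integrate(accels, dt, v0=(0.0, 0.0, 0.0)):
    """Forward Euler double integration; returns the metric displacement.

    accels: list of (ax, ay, az) samples, assumed gravity-compensated and in
    the world frame. dt is the sampling period in seconds.
    """
    v = list(v0)
    p = [0.0, 0.0, 0.0]
    for a in accels:
        for i in range(3):
            p[i] += v[i] * dt  # position advances with the previous velocity
            v[i] += a[i] * dt
    return tuple(p)


def estimate_scale(visual_disps, inertial_disps):
    """Least-squares scale s minimizing sum ||s * d_vis - d_imu||^2."""
    num = sum(dv[i] * di[i] for dv, di in zip(visual_disps, inertial_disps) for i in range(3))
    den = sum(dv[i] * dv[i] for dv in visual_disps for i in range(3))
    if den == 0.0:
        raise ValueError("visual displacements are all zero; scale is unobservable")
    return num / den


# Constant 1 m/s^2 acceleration along x for 2 s, sampled at 2 Hz.
disp = euler_integrate([(1.0, 0.0, 0.0)] * 4, dt=0.5)

# Unscaled monocular displacements paired with metric inertial displacements.
scale = estimate_scale(
    [(0.5, 0.0, 0.0), (1.0, 0.0, 0.0)],
    [(1.0, 0.0, 0.0), (2.0, 0.0, 0.0)],
)
```

Because the two blocks only exchange displacements, either one could be swapped for a different tracker or integrator, which mirrors the loosely-coupled design the abstract describes.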

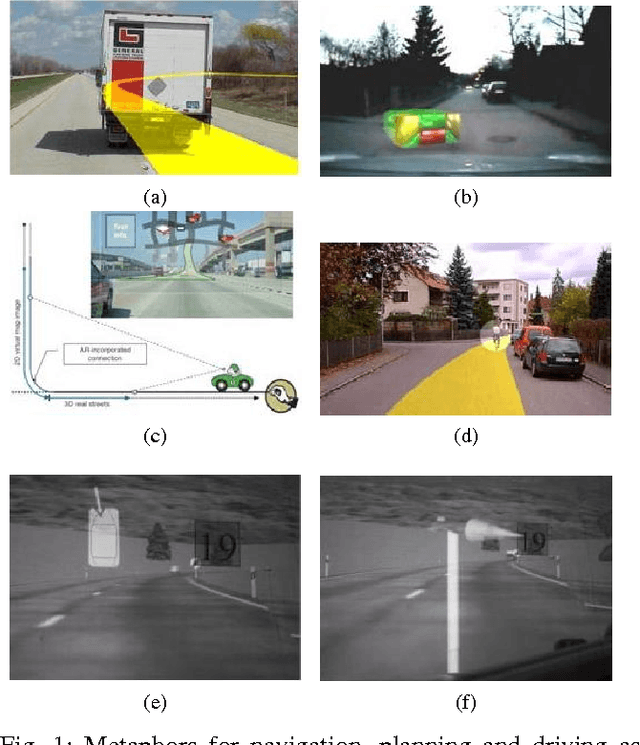

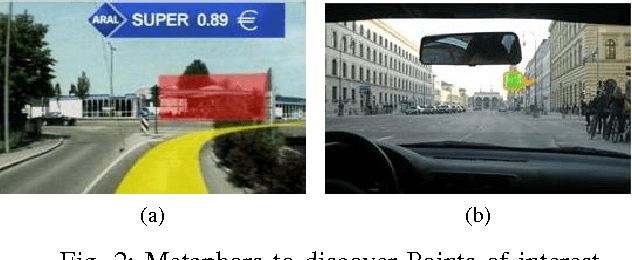

DAARIA: Driver Assistance by Augmented Reality for Intelligent Automobile

Sep 27, 2012

Abstract:Taking into account the driver's state is a major challenge for designing new advanced driver assistance systems. In this paper we present a driver assistance system strongly coupled to the user. DAARIA stands for Driver Assistance by Augmented Reality for Intelligent Automobile. It is an augmented reality interface powered by several sensors. Detection has two targets: the position of obstacles, together with a quantification of the danger they represent, and the driver's behavior. A suitable visualization metaphor allows the driver to perceive at any time the location of the relevant hazards while keeping his eyes on the road. First results show that our method could be applied not only to road vehicles but also to aerospace, fluvial, or maritime navigation.
