Antonio Sgorbissa

Enhancing Worker Safety in Harbors Using Quadruped Robots

Jan 27, 2026

Abstract:Infrastructure inspection is becoming increasingly relevant in the field of robotics due to its significant impact on ensuring workers' safety. The harbor environment presents various challenges in designing a robotic solution for inspection, given the complexity of daily operations. This work introduces an initial phase to identify critical areas within the port environment. Following this, a preliminary solution using a quadruped robot for inspecting these critical areas is analyzed.

Designing Empathetic Companions: Exploring Personality, Emotion, and Trust in Social Robots

Apr 17, 2025

Abstract:How should a companion robot behave? In this research, we present a cognitive architecture based on a tailored personality model to investigate the impact of robotic personalities on the perception of companion robots. Drawing from existing literature, we identified empathy, trust, and enjoyability as key factors in building companionship with social robots. Based on these insights, we implemented a personality-dependent, emotion-aware generator, recognizing the crucial role of robot emotions in shaping these elements. We then conducted a user study involving 84 dyadic conversation sessions with the emotional robot Navel, which exhibited different personalities. Results were derived from a multimodal analysis, including questionnaires, open-ended responses, and behavioral observations. This approach allowed us to validate the developed emotion generator and explore the relationship between the personality traits of Agreeableness, Extraversion, Conscientiousness, and Empathy. Furthermore, we drew robust conclusions on how these traits influence relational trust, capability trust, enjoyability, and sociability.

Toward a Universal Concept of Artificial Personality: Implementing Robotic Personality in a Kinova Arm

Jan 12, 2025

Abstract:The fundamental role of personality in shaping interactions is increasingly being exploited in robotics. A carefully designed robotic personality has been shown to improve several key aspects of Human-Robot Interaction (HRI). However, the fragmentation and rigidity of existing approaches reveal even greater challenges when applied to non-humanoid robots. On one hand, the state of the art is very dispersed; on the other hand, Industry 4.0 is moving towards a future where humans and industrial robots are going to coexist. In this context, the proper design of a robotic personality can lead to more successful interactions. This research takes a first step in that direction by integrating a comprehensive cognitive architecture built upon the definition of robotic personality - validated on humanoid robots - into a robotic Kinova Jaco2 arm. The robot personality is defined through the cognitive architecture as a vector in the three-dimensional space encompassing Conscientiousness, Extroversion, and Agreeableness, affecting how actions are executed, the action selection process, and the internal reaction to environmental stimuli. Our main objective is to determine whether users perceive distinct personalities in the robot, regardless of its shape, and to understand the role language plays in shaping these perceptions. To achieve this, we conducted a user study comprising 144 sessions of a collaborative game between a Kinova Jaco2 arm and participants, where the robot's behavior was influenced by its assigned personality. Furthermore, we compared two conditions: in the first, the robot communicated solely through gestures and action choices, while in the second, it also utilized verbal interaction.

Moderating Group Conversation Dynamics with Social Robots

Jul 31, 2024

Abstract:This research investigates the impact of social robot participation in group conversations and assesses the effectiveness of various addressing policies. The study involved 300 participants, divided into groups of four, interacting with a humanoid robot serving as the moderator. The robot utilized conversation data to determine the most appropriate speaker to address. The findings indicate that the robot's addressing policy significantly influenced conversation dynamics, resulting in more balanced attention to each participant and a reduction in subgroup formation.
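
The abstract does not say which addressing policies were tested. As a purely illustrative sketch, one simple policy consistent with the goal of balanced attention would be to address whoever has spoken least so far. The function name `pick_addressee` and the `(speaker, seconds)` log format are assumptions made for this example, not the authors' implementation.

```python
from collections import defaultdict


def pick_addressee(turns, participants):
    """Return the participant with the least total speaking time.

    turns: list of (speaker, seconds) tuples (hypothetical log format).
    participants: ordered list of names; ties go to the earliest name.
    """
    spoken = defaultdict(float)
    for speaker, seconds in turns:
        spoken[speaker] += seconds
    # Participants who never spoke count as 0.0 seconds.
    return min(participants, key=lambda p: spoken[p])


turns = [("A", 30), ("B", 12), ("C", 45), ("A", 10)]
print(pick_addressee(turns, ["A", "B", "C", "D"]))
```

Here participant `D` has not spoken at all, so a policy of this kind would address `D` next.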

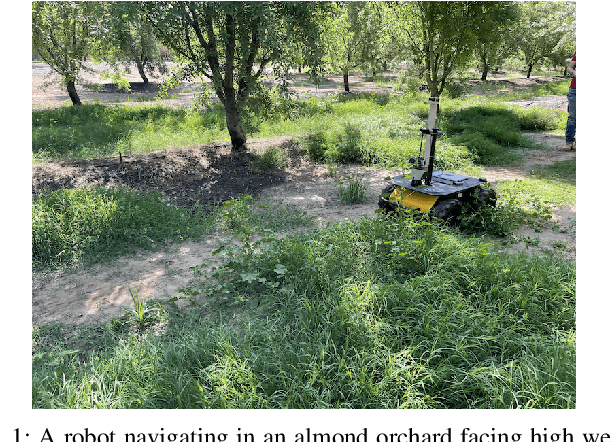

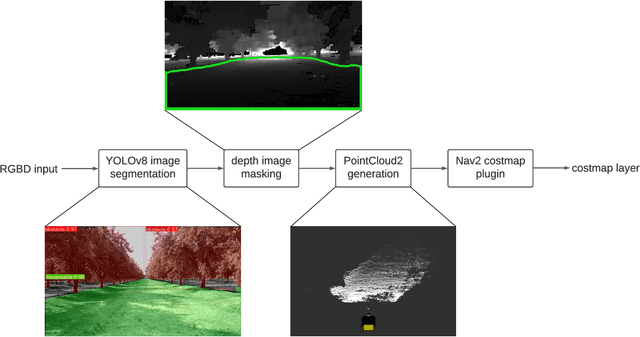

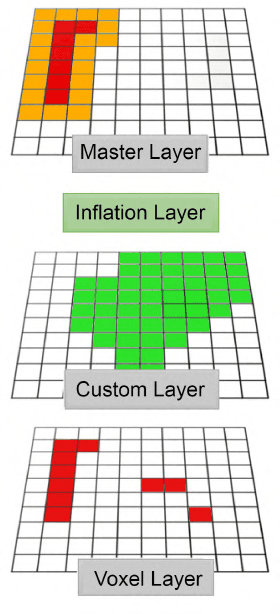

Improving the ROS 2 Navigation Stack with Real-Time Local Costmap Updates for Agricultural Applications

Jul 26, 2024

Abstract:The ROS 2 Navigation Stack (Nav2) has emerged as a widely used software component that provides the basis for developing a variety of high-level functionalities. However, when used in outdoor environments such as orchards and vineyards, its functionality is notably limited by obstacles and situations not commonly found in indoor settings. One example is tall grass and weeds, which a robot can safely traverse but which LiDAR sensors may perceive as obstacles, forcing the robot to take longer paths to avoid them or to abort navigation altogether. To overcome these limitations, domain-specific extensions must be developed and integrated into the software pipeline. This paper presents a new, lightweight approach to address this challenge and improve outdoor robot navigation. Leveraging the multi-scale nature of the costmaps supporting Nav2, we developed a system that uses a depth camera to perform pixel-level classification on the images and injects corrections into the local costmap in real time, thus enabling the robot to traverse areas that Nav2 would otherwise avoid. Our approach has been implemented and validated on a Clearpath Husky, and we demonstrate that with this extension the robot is able to perform navigation tasks that would otherwise be impractical with the standard components.
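
To make the idea of injecting corrections into the local costmap concrete, here is a minimal sketch in plain Python. It does not use the Nav2 API and is not the paper's implementation. It assumes the costmap is a grid of integer costs, that a classifier has already produced a boolean mask of cells judged traversable (for example, tall grass), and that corrected cells are simply reset to free space. The constants follow the usual Nav2 costmap convention (0 free, 254 lethal, 255 unknown); the function name `apply_traversable_mask` is hypothetical.

```python
FREE_SPACE = 0
LETHAL_OBSTACLE = 254
NO_INFORMATION = 255


def apply_traversable_mask(costmap, traversable, corrected_cost=FREE_SPACE):
    """Return a copy of `costmap` with flagged cells set to `corrected_cost`.

    costmap: list of rows of integer costs.
    traversable: same-shaped list of rows of booleans (assumed classifier output).
    Cells with NO_INFORMATION are left untouched.
    """
    corrected = []
    for cost_row, mask_row in zip(costmap, traversable):
        corrected.append([
            corrected_cost if flagged and cost != NO_INFORMATION else cost
            for cost, flagged in zip(cost_row, mask_row)
        ])
    return corrected


costmap = [[0, LETHAL_OBSTACLE, LETHAL_OBSTACLE],
           [NO_INFORMATION, 100, 0]]
mask = [[False, True, False],
        [True, True, False]]
print(apply_traversable_mask(costmap, mask))
```

In this toy grid, the lethal cell flagged as traversable is cleared, the unflagged lethal cell is kept, and the unknown cell is left as is.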

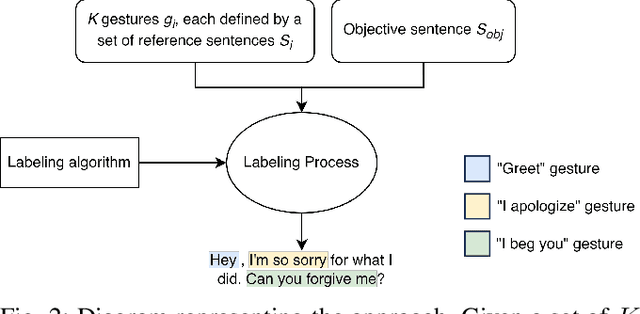

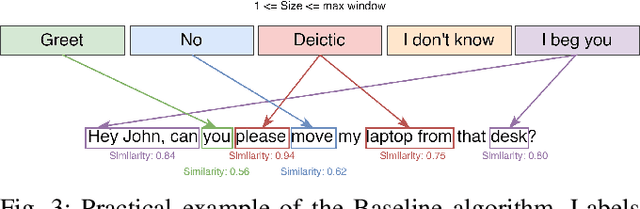

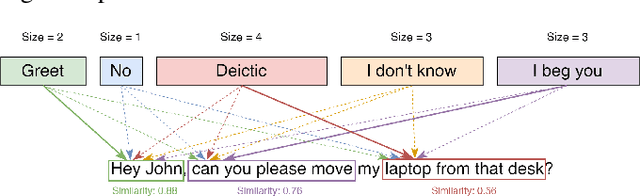

Labeling Sentences with Symbolic and Deictic Gestures via Semantic Similarity

Jul 03, 2024

Abstract:Co-speech gesture generation on artificial agents has gained attention recently, mainly when it is based on data-driven models. However, end-to-end methods often fail to generate co-speech gestures related to semantics with specific forms, i.e., Symbolic and Deictic gestures. In this work, we identify which words in a sentence are contextually related to Symbolic and Deictic gestures. First, we selected 12 gestures that are recognized by people from the Italian culture and that different humanoid robots can reproduce. Then, we implemented two rule-based algorithms to label sentences with Symbolic and Deictic gestures. The rules depend on the semantic similarity scores computed with the RoBERTa model between sentences that heuristically represent gestures and sub-sentences inside a target sentence that artificial agents have to pronounce. We also implemented a baseline algorithm that assigns gestures without computing similarity scores. Finally, to validate the results, we asked 30 people to label a set of sentences with Deictic and Symbolic gestures through a Graphical User Interface (GUI), and we compared the labels with the ones produced by our algorithms. For this purpose, we computed Average Precision (AP) and Intersection Over Union (IOU) scores, and we evaluated the Average Computational Time (ACT). Our results show that semantic similarity scores are useful for finding Symbolic and Deictic gestures in utterances.
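
The abstract does not spell out how IOU is computed over gesture labels. One plausible reading, shown here only as a sketch under that assumption, treats each labeling as the set of word positions in a sentence tagged with a given gesture and compares the algorithm's set with a human annotator's set. The function name `iou` and the convention that two empty labelings agree fully are choices made for this example.

```python
def iou(predicted, reference):
    """Intersection over union of two collections of word indices."""
    predicted, reference = set(predicted), set(reference)
    union = predicted | reference
    if not union:
        # Neither labeling tags any word: treated here as full agreement.
        return 1.0
    return len(predicted & reference) / len(union)


# Algorithm tags words 2-4, annotator tags words 3-5 with the same gesture.
print(iou({2, 3, 4}, {3, 4, 5}))
```

The two labelings share two of four distinct positions, giving a score of 0.5.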

Enhancing LLM-Based Human-Robot Interaction with Nuances for Diversity Awareness

Jun 25, 2024

Abstract:This paper presents a system for diversity-aware autonomous conversation leveraging the capabilities of large language models (LLMs). The system adapts to diverse populations and individuals, considering factors like background, personality, age, gender, and culture. The conversation flow is guided by the structure of the system's pre-established knowledge base, while LLMs are tasked with various functions, including generating diversity-aware sentences. Achieving diversity-awareness involves providing carefully crafted prompts to the models, incorporating comprehensive information about users, conversation history, contextual details, and specific guidelines. To assess the system's performance, we conducted both controlled and real-world experiments, measuring a wide range of performance indicators.

Collaborative Active SLAM: Synchronous and Asynchronous Coordination Among Agents

Oct 03, 2023

Abstract:In the realm of autonomous robotics, a critical challenge lies in developing robust solutions for Active Collaborative SLAM, wherein multiple robots must collaboratively explore and map an unknown environment while intelligently coordinating their movements and sensor data acquisitions. To this aim, we present two approaches for coordinating a system consisting of multiple robots to perform Active Collaborative SLAM (AC-SLAM) for environmental exploration. Our two coordination approaches, synchronous and asynchronous, implement a methodology by which the central server prioritizes robot goal assignments. We also present a method to efficiently spread the robots for maximum exploration while keeping SLAM uncertainty low. Both coordination approaches were evaluated through simulation on publicly available datasets, obtaining promising results.

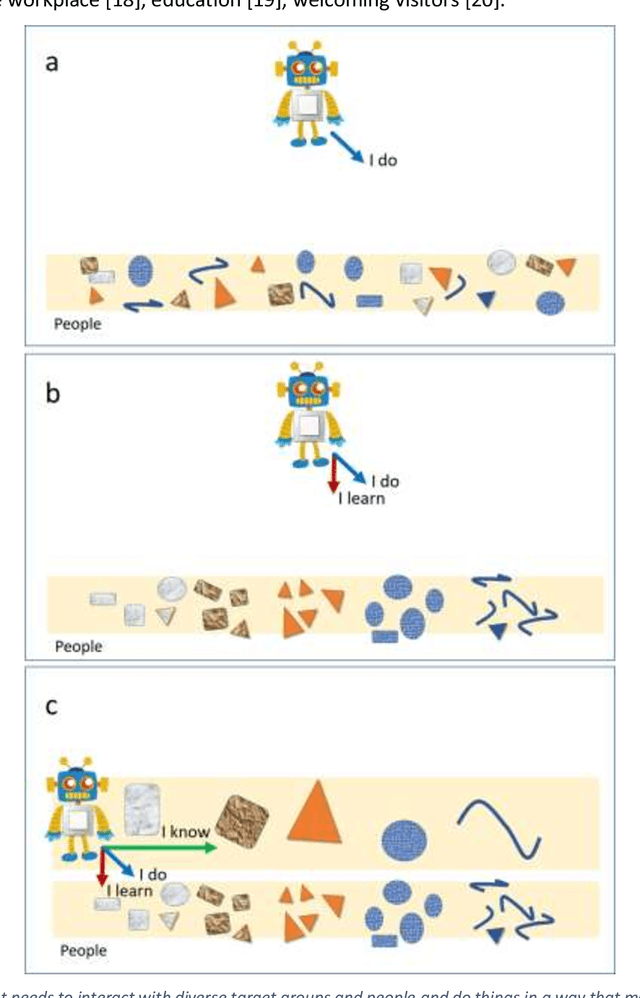

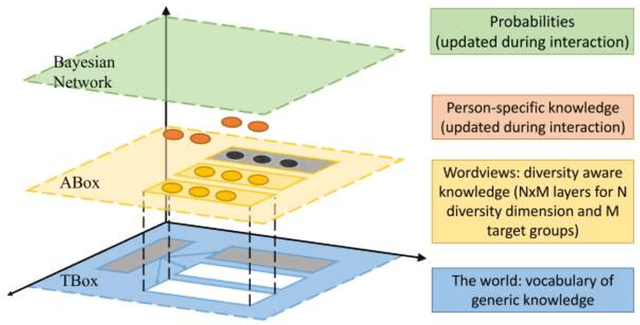

Diversity-aware social robots meet people: beyond context-aware embodied AI

Jul 12, 2022

Abstract:The article introduces the concept of "diversity-aware" robotics and discusses the need to develop computational models to embed robots with diversity-awareness: that is, robots capable of adapting and re-configuring their behavior to recognize, respect, and value the uniqueness of the person they interact with to promote inclusion regardless of their age, race, gender, cognitive or physical capabilities, etc. Finally, the article discusses possible technical solutions based on Ontologies and Bayesian Networks, starting from previous experience with culturally competent robots.

* The article was presented at the Roundtable "AI in holistic care and healing practices: the caring encounter beyond COVID-19", Anthropology, AI and the Future of Human Society, 6-10 June 2022, Royal Anthropological Institute

Sustainable Verbal and Non-verbal Human-Robot Interaction Through Cloud Services

Mar 04, 2022

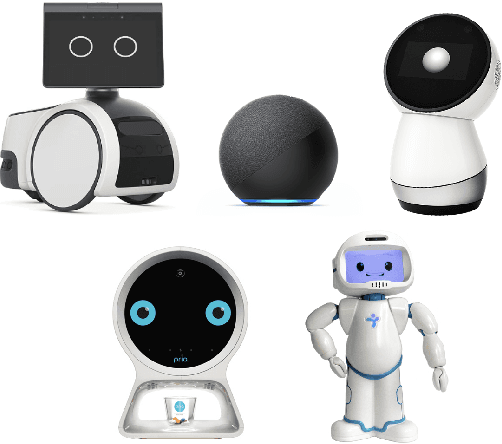

Abstract:This article presents the design and the implementation of CAIR: a cloud system for knowledge-based autonomous interaction devised for Social Robots and other conversational agents. The system is particularly convenient for low-cost robots and devices. Developers are provided with a sustainable solution to manage verbal and non-verbal interaction through a network connection, with about 3,000 topics of conversation ready for "chit-chatting" and a library of pre-cooked plans that only needs to be grounded into the robot's physical capabilities. The system is structured as a set of REST API endpoints so that it can be easily expanded by adding new APIs to improve the capabilities of the clients connected to the cloud. Another key feature of the system is that it has been designed to make the development of its clients straightforward: in this way, multiple devices can be easily endowed with the capability of autonomously interacting with the user, understanding when to perform specific actions, and exploiting all the information provided by cloud services. The article outlines and discusses the results of the experiments performed to assess the system's performance in terms of response time, paving the way for its use both for research and market solutions. Links to repositories with clients for ROS and popular robots such as Pepper and NAO are given.
