Luca Morando

Intuitive Human-Drone Collaborative Navigation in Unknown Environments through Mixed Reality

Apr 02, 2025

Abstract: Considering the widespread integration of aerial robots in inspection, search and rescue, and monitoring tasks, there is a growing demand to design intuitive human-drone interfaces. These aim to streamline and enhance the user interaction and collaboration process during drone navigation, ultimately expediting mission success and accommodating users' inputs. In this paper, we present a novel human-drone mixed reality interface that aims to (a) increase human-drone spatial awareness by sharing relevant spatial information and representations between the human equipped with a Head Mounted Display (HMD) and the robot and (b) enable safer and more intuitive human-drone interactive and collaborative navigation in unknown environments beyond the simple command and control or teleoperation paradigm. We validate our framework through extensive user studies and experiments in simulated post-disaster scenarios, comparing its performance against a traditional First-Person View (FPV) control system. Furthermore, multiple tests on several users underscore the advantages of the proposed solution, which offers intuitive and natural interaction with the system. This demonstrates the solution's ability to assist humans during a drone navigation mission, ensuring its safe and effective execution.

* Approved at ICUAS 25

Spatial Assisted Human-Drone Collaborative Navigation and Interaction through Immersive Mixed Reality

Feb 06, 2024

Abstract: Aerial robots have the potential to play a crucial role in assisting humans with complex and dangerous tasks. Nevertheless, the future industry demands innovative solutions to streamline the interaction process between humans and drones to enable seamless collaboration and efficient co-working. In this paper, we present a novel tele-immersive framework that promotes cognitive and physical collaboration between humans and robots through Mixed Reality (MR). This framework incorporates novel bidirectional spatial awareness and multi-modal virtual-physical interaction approaches. The former seamlessly integrates the physical and virtual worlds, offering bidirectional egocentric and exocentric environmental representations. The latter, leveraging the proposed spatial representation, further enhances the collaboration by combining a robot planning algorithm for obstacle avoidance with variable admittance control. This allows users to issue commands based on virtual forces while maintaining compatibility with the environment map. We validate the proposed approach by performing several collaborative planning and exploration tasks involving a drone and a user equipped with an MR headset.

Thermal and Visual Tracking of Photovoltaic Plants for Autonomous UAV inspection

Feb 02, 2022

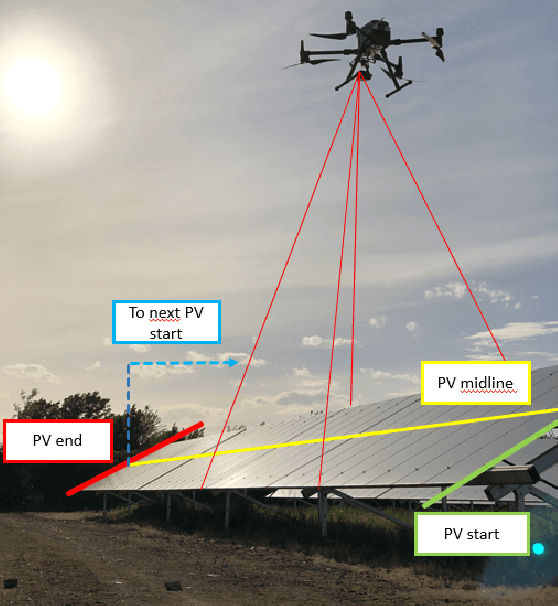

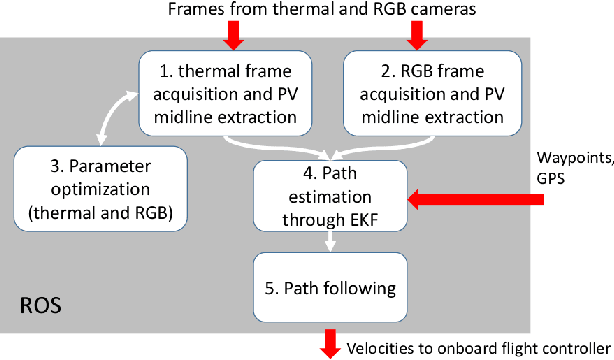

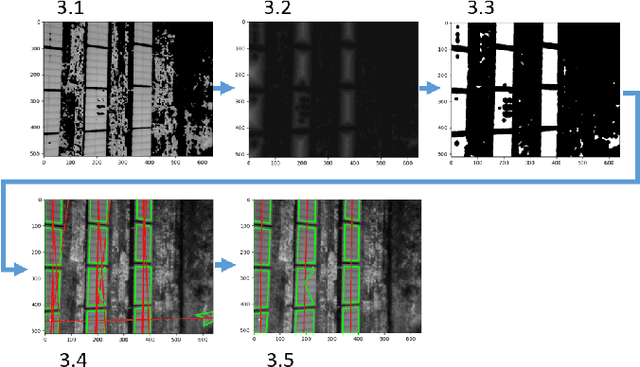

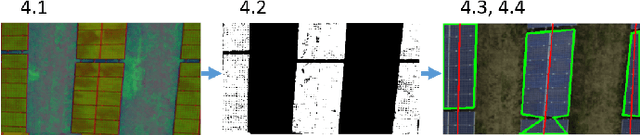

Abstract: Since the demand for renewable solar energy is continuously growing, the need for more frequent, precise, and quick autonomous aerial inspections using Unmanned Aerial Vehicles (UAVs) may become fundamental to reduce costs. However, UAV-based inspection of Photovoltaic (PV) arrays is still an open problem. Companies in the field complain that GPS-based navigation is not adequate to accurately cover PV arrays and acquire the images needed to determine the PV panels' status. Indeed, when instructing UAVs to move along a sequence of waypoints at a low altitude, two sources of error may degrade performance: (i) the difference between the actual UAV position and the one estimated with the GPS, and (ii) the difference between the UAV position returned by the GPS and the position of waypoints extracted from georeferenced images acquired through Google Earth or similar tools. These errors make it impossible to reliably track rows of PV modules without human intervention. The article proposes an approach for inspecting PV arrays with autonomous UAVs equipped with an RGB and a thermal camera, the latter being typically used to detect heat failures on the panels' surface: we introduce a portfolio of techniques to process data from both cameras for autonomous navigation, including an optimization procedure for improving panel detection and an Extended Kalman Filter (EKF) to filter data from RGB and thermal cameras. Experimental tests performed in simulation and in an actual PV plant are reported, confirming the validity of the approach.
