Ettore Sani

Improving the ROS 2 Navigation Stack with Real-Time Local Costmap Updates for Agricultural Applications

Jul 26, 2024

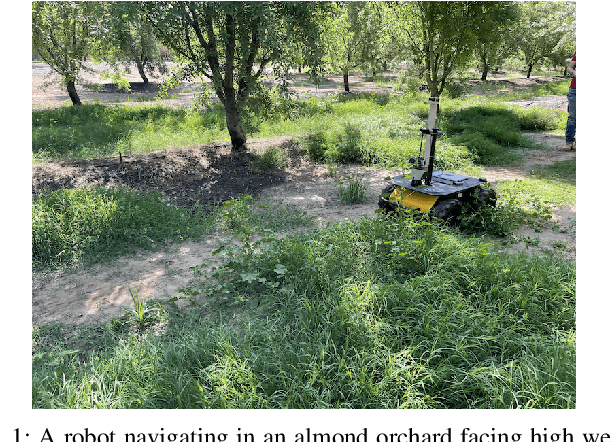

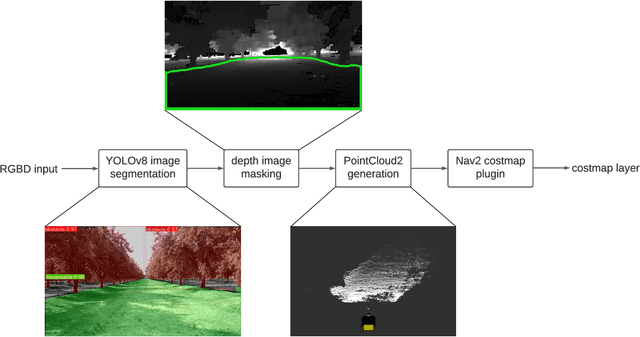

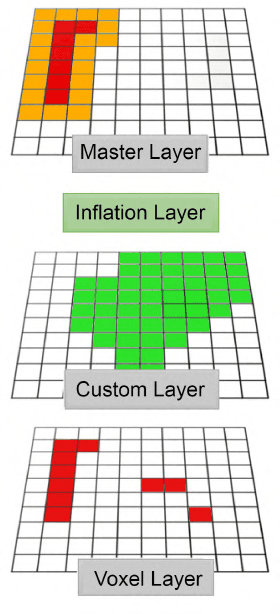

Abstract: The ROS 2 Navigation Stack (Nav2) has emerged as a widely used software component that provides the basis for developing a variety of high-level functionalities. However, when used in outdoor environments such as orchards and vineyards, its functionality is notably limited by obstacles and situations not commonly found in indoor settings. One example is tall grass and weeds, which a robot can safely traverse but which LiDAR sensors can perceive as obstacles, forcing the robot to take longer paths to avoid them or to abort navigation altogether. To overcome these limitations, domain-specific extensions must be developed and integrated into the software pipeline. This paper presents a new, lightweight approach to address this challenge and improve outdoor robot navigation. Leveraging the multi-scale nature of the costmaps supporting Nav2, we developed a system that uses a depth camera to perform pixel-level classification of the images and injects corrections into the local costmap in real time, thus enabling the robot to traverse areas that Nav2 would otherwise avoid. Our approach has been implemented and validated on a Clearpath Husky, and we demonstrate that with this extension the robot is able to perform navigation tasks that would otherwise be impractical with the standard components.
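The idea of injecting corrections into the local costmap can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes the depth-camera pixels classified as traversable vegetation have already been projected to metric (x, y) points in the costmap frame, and it represents the local costmap as a plain NumPy grid. The function names `points_to_cells` and `inject_corrections` are hypothetical; only the cost values (0 free, 254 lethal, 255 unknown) follow the Nav2 costmap convention.

```python
import numpy as np

# Cost values used by Nav2 costmaps.
FREE_SPACE = 0
LETHAL_OBSTACLE = 254
NO_INFORMATION = 255


def points_to_cells(points_xy, origin_xy, resolution, shape):
    """Map metric (x, y) points to (row, col) indices, dropping out-of-bounds points.

    Hypothetical helper: assumes x maps to columns and y to rows, with
    origin_xy at the lower-left corner of cell (0, 0).
    """
    pts = np.asarray(points_xy, dtype=float).reshape(-1, 2)
    cols = np.floor((pts[:, 0] - origin_xy[0]) / resolution).astype(int)
    rows = np.floor((pts[:, 1] - origin_xy[1]) / resolution).astype(int)
    inside = (rows >= 0) & (rows < shape[0]) & (cols >= 0) & (cols < shape[1])
    return rows[inside], cols[inside]


def inject_corrections(costmap, traversable_points_xy, origin_xy, resolution):
    """Return a copy of the costmap with traversable cells marked as free.

    Assumption: cells with NO_INFORMATION are left untouched, so the
    correction only overrides costs that a sensor actually reported.
    """
    corrected = costmap.copy()
    rows, cols = points_to_cells(
        traversable_points_xy, origin_xy, resolution, corrected.shape
    )
    known = corrected[rows, cols] != NO_INFORMATION
    corrected[rows[known], cols[known]] = FREE_SPACE
    return corrected


# Toy 4x4 local costmap with 0.5 m cells: two LiDAR "obstacles" and one unknown cell.
grid = np.zeros((4, 4), dtype=np.uint8)
grid[1, 2] = LETHAL_OBSTACLE   # tall grass seen by the LiDAR
grid[2, 2] = LETHAL_OBSTACLE   # a real obstacle, not classified as traversable
grid[3, 3] = NO_INFORMATION

# Points classified as traversable; the second lies outside the grid,
# the third falls in the unknown cell.
grass_points = [(1.1, 0.6), (5.0, 5.0), (1.6, 1.6)]
fixed = inject_corrections(grid, grass_points, origin_xy=(0.0, 0.0), resolution=0.5)
```

In this toy example only the cell containing the grass point is cleared; the unclassified obstacle and the unknown cell keep their original costs, and the input grid is not modified. A real integration would more likely apply such corrections inside a costmap layer, which this sketch does not attempt to reproduce.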
