Vasilis Syrgkanis

Sharp Structure-Agnostic Lower Bounds for General Functional Estimation

Dec 19, 2025

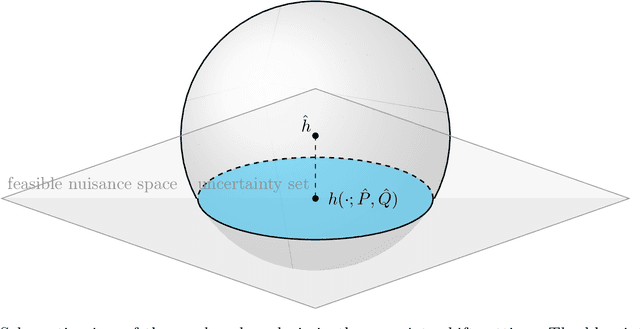

Abstract: The design of efficient nonparametric estimators has long been a central problem in statistics, machine learning, and decision making. Classical optimal procedures often rely on strong structural assumptions, which can be misspecified in practice and complicate deployment. This limitation has sparked growing interest in structure-agnostic approaches -- methods that debias black-box nuisance estimates without imposing structural priors. Understanding the fundamental limits of these methods is therefore crucial. This paper provides a systematic investigation of the optimal error rates achievable by structure-agnostic estimators. We first show that, for estimating the average treatment effect (ATE), a central parameter in causal inference, doubly robust learning attains optimal structure-agnostic error rates. We then extend our analysis to a general class of functionals that depend on unknown nuisance functions and establish the structure-agnostic optimality of debiased/double machine learning (DML). We distinguish two regimes -- one where double robustness is attainable and one where it is not -- leading to different optimal rates for first-order debiasing, and show that DML is optimal in both regimes. Finally, we instantiate our general lower bounds by deriving explicit optimal rates that recover existing results and extend to additional estimands of interest. Our results provide theoretical validation for widely used first-order debiasing methods and guidance for practitioners seeking optimal approaches in the absence of structural assumptions. This paper generalizes and subsumes the ATE lower bound established in \citet{jin2024structure} by the same authors.
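As a rough illustration of the kind of doubly robust ATE estimator the abstract refers to, the sketch below implements the standard augmented inverse-propensity-weighted (AIPW) score on top of black-box nuisance predictions. It is not taken from the paper: the function name `aipw_ate` and its argument names are hypothetical, and it assumes the nuisance predictions were produced on held-out data (for example by cross-fitting) and that the propensity estimates lie strictly between 0 and 1.

```python
import numpy as np

def aipw_ate(y, t, mu0_hat, mu1_hat, e_hat):
    """AIPW (doubly robust) ATE estimate from black-box nuisance predictions.

    y       : observed outcomes
    t       : binary treatment indicators (0 or 1)
    mu0_hat : predicted outcome under control, E[Y | X, T=0]
    mu1_hat : predicted outcome under treatment, E[Y | X, T=1]
    e_hat   : predicted propensity, P(T=1 | X), assumed in (0, 1)
    Returns the point estimate and a plug-in standard error.
    """
    y = np.asarray(y, dtype=float)
    t = np.asarray(t, dtype=float)
    mu0_hat = np.asarray(mu0_hat, dtype=float)
    mu1_hat = np.asarray(mu1_hat, dtype=float)
    e_hat = np.asarray(e_hat, dtype=float)

    # Outcome-model contrast plus inverse-propensity-weighted residual corrections.
    psi = (mu1_hat - mu0_hat
           + t * (y - mu1_hat) / e_hat
           - (1.0 - t) * (y - mu0_hat) / (1.0 - e_hat))

    estimate = psi.mean()
    std_error = psi.std(ddof=1) / np.sqrt(len(psi))
    return estimate, std_error
```

The correction terms are what make the estimate first-order insensitive to nuisance errors: the score stays centered on the ATE if either the outcome predictions or the propensity predictions are accurate.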

Inference on Optimal Policy Values and Other Irregular Functionals via Smoothing

Jul 15, 2025

Abstract: Constructing confidence intervals for the value of an optimal treatment policy is an important problem in causal inference. Insight into the optimal policy value can guide the development of reward-maximizing, individualized treatment regimes. However, because the functional that defines the optimal value is non-differentiable, standard semi-parametric approaches for performing inference fail to be directly applicable. Existing approaches for handling this non-differentiability fall roughly into two camps. In one camp are estimators based on constructing smooth approximations of the optimal value. These approaches are computationally lightweight, but typically place unrealistic parametric assumptions on outcome regressions. In another camp are approaches that directly de-bias the non-smooth objective. These approaches don't place parametric assumptions on nuisance functions, but they either require the computation of intractably many nuisance estimates, assume unrealistic $L^\infty$ nuisance convergence rates, or make strong margin assumptions that prohibit non-response to a treatment. In this paper, we revisit the problem of constructing smooth approximations of non-differentiable functionals. By carefully controlling first-order bias and second-order remainders, we show that a softmax smoothing-based estimator can be used to estimate parameters that are specified as a maximum of scores involving nuisance components. In particular, this includes the value of the optimal treatment policy as a special case. Our estimator obtains $\sqrt{n}$ convergence rates, avoids parametric restrictions and unrealistic margin assumptions, and is often statistically efficient.
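To make the idea of smoothing a maximum of scores concrete, the sketch below replaces a hard maximum with a softmax-weighted average controlled by a temperature parameter. This is only one common form of softmax smoothing and is an assumption here; it does not reproduce the paper's estimator or its bias corrections. The name `softmax_weighted_max` and the parameter `beta` are hypothetical.

```python
import numpy as np

def softmax_weighted_max(scores, beta):
    """Smooth surrogate for max_k scores[..., k].

    Weights each score by softmax(beta * scores). With beta = 0 this is the
    plain average; as beta grows it approaches the hard maximum from below.
    """
    scores = np.asarray(scores, dtype=float)
    # Subtract the row-wise max before exponentiating for numerical stability.
    shifted = beta * (scores - scores.max(axis=-1, keepdims=True))
    weights = np.exp(shifted)
    weights /= weights.sum(axis=-1, keepdims=True)
    return (weights * scores).sum(axis=-1)
```

In the policy-value setting, each row of `scores` would hold estimated per-action outcome scores for one unit, and averaging the smoothed values over units gives a differentiable stand-in for the optimal value. Larger `beta` reduces the smoothing bias but makes the surrogate less smooth, which is the trade-off the abstract describes controlling.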

It's Hard to Be Normal: The Impact of Noise on Structure-agnostic Estimation

Jul 03, 2025

Abstract: Structure-agnostic causal inference studies how well one can estimate a treatment effect given black-box machine learning estimates of nuisance functions (like the impact of confounders on treatment and outcomes). Here, we find that the answer depends in a surprising way on the distribution of the treatment noise. Focusing on the partially linear model of \citet{robinson1988root}, we first show that the widely adopted double machine learning (DML) estimator is minimax rate-optimal for Gaussian treatment noise, resolving an open problem of \citet{mackey2018orthogonal}. Meanwhile, for independent non-Gaussian treatment noise, we show that DML is always suboptimal by constructing new practical procedures with higher-order robustness to nuisance errors. These \emph{ACE} procedures use structure-agnostic cumulant estimators to achieve $r$-th order insensitivity to nuisance errors whenever the $(r+1)$-st treatment cumulant is non-zero. We complement these core results with novel minimax guarantees for binary treatments in the partially linear model. Finally, using synthetic demand estimation experiments, we demonstrate the practical benefits of our higher-order robust estimators.
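For readers unfamiliar with the baseline being analyzed, the sketch below shows the standard residual-on-residual form of DML in the partially linear model $Y = \theta T + f(X) + \varepsilon$. It illustrates only the first-order DML estimator, not the ACE procedures, and it is not the authors' code. The name `plm_dml` and its arguments are hypothetical, and the nuisance predictions are assumed to come from held-out folds.

```python
import numpy as np

def plm_dml(y, t, y_hat, t_hat):
    """Residual-on-residual DML estimate of theta in Y = theta*T + f(X) + eps.

    y_hat : black-box prediction of E[Y | X]
    t_hat : black-box prediction of E[T | X]
    """
    y = np.asarray(y, dtype=float)
    t = np.asarray(t, dtype=float)
    res_y = y - np.asarray(y_hat, dtype=float)  # outcome residual
    res_t = t - np.asarray(t_hat, dtype=float)  # treatment residual (the "treatment noise")
    # Least-squares slope of the outcome residual on the treatment residual.
    return float(res_t @ res_y) / float(res_t @ res_t)
```

The treatment residual plays the role of the treatment noise whose distribution the abstract discusses: this estimator uses only its second moment, whereas the higher-order procedures described above exploit higher cumulants.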

Discovering Hierarchical Latent Capabilities of Language Models via Causal Representation Learning

Jun 12, 2025

Abstract: Faithful evaluation of language model capabilities is crucial for deriving actionable insights that can inform model development. However, rigorous causal evaluations in this domain face significant methodological challenges, including complex confounding effects and prohibitive computational costs associated with extensive retraining. To tackle these challenges, we propose a causal representation learning framework wherein observed benchmark performance is modeled as a linear transformation of a few latent capability factors. Crucially, these latent factors are identified as causally interrelated after appropriately controlling for the base model as a common confounder. Applying this approach to a comprehensive dataset encompassing over 1500 models evaluated across six benchmarks from the Open LLM Leaderboard, we identify a concise three-node linear causal structure that reliably explains the observed performance variations. Further interpretation of this causal structure provides substantial scientific insights beyond simple numerical rankings: specifically, we reveal a clear causal direction starting from general problem-solving capabilities, advancing through instruction-following proficiency, and culminating in mathematical reasoning ability. Our results underscore the essential role of carefully controlling base model variations during evaluation, a step critical to accurately uncovering the underlying causal relationships among latent model capabilities.

Preference Learning with Response Time

May 28, 2025

Abstract: This paper investigates the integration of response time data into human preference learning frameworks for more effective reward model elicitation. While binary preference data has become fundamental in fine-tuning foundation models, generative AI systems, and other large-scale models, the valuable temporal information inherent in user decision-making remains largely unexploited. We propose novel methodologies to incorporate response time information alongside binary choice data, leveraging the Evidence Accumulation Drift Diffusion (EZ) model, under which response time is informative of the preference strength. We develop Neyman-orthogonal loss functions that achieve oracle convergence rates for reward model learning, matching the theoretical optimal rates that would be attained if the expected response times for each query were known a priori. Our theoretical analysis demonstrates that for linear reward functions, conventional preference learning suffers from error rates that scale exponentially with reward magnitude. In contrast, our response time-augmented approach reduces this to polynomial scaling, representing a significant improvement in sample efficiency. We extend these guarantees to non-parametric reward function spaces, establishing convergence properties for more complex, realistic reward models. Our extensive experiments validate our theoretical findings in the context of preference learning over images.

A Meta-learner for Heterogeneous Effects in Difference-in-Differences

Feb 07, 2025

Abstract: We address the problem of estimating heterogeneous treatment effects in panel data, adopting the popular Difference-in-Differences (DiD) framework under the conditional parallel trends assumption. We propose a novel doubly robust meta-learner for the Conditional Average Treatment Effect on the Treated (CATT), reducing the estimation to a convex risk minimization problem involving a set of auxiliary models. Our framework allows for the flexible estimation of the CATT when conditioning on any subset of variables of interest using generic machine learning. Leveraging Neyman orthogonality, our proposed approach is robust to estimation errors in the auxiliary models. As a generalization of our main result, we develop a meta-learning approach for the estimation of general conditional functionals under covariate shift. We also provide an extension to the instrumented DiD setting with non-compliance. Empirical results demonstrate the superiority of our approach over existing baselines.

Predicting Long Term Sequential Policy Value Using Softer Surrogates

Dec 30, 2024

Abstract: Performing policy evaluation in education, healthcare and online commerce can be challenging, because it can require waiting substantial amounts of time to observe outcomes over the desired horizon of interest. While offline evaluation methods can be used to estimate the performance of a new decision policy from historical data in some cases, such methods struggle when the new policy involves novel actions or is being run in a new decision process with potentially different dynamics. Here we consider how to estimate the full-horizon value of a new decision policy using only short-horizon data from the new policy, and historical full-horizon data from a different behavior policy. We introduce two new estimators for this setting, including a doubly robust estimator, and provide formal analysis of their properties. Our empirical results on two realistic simulators, of HIV treatment and sepsis treatment, show that our methods can often provide informative estimates of a new decision policy ten times faster than waiting for the full horizon, highlighting that it may be possible to quickly identify if a new decision policy, involving new actions, is better or worse than existing past policies.

Automatic Doubly Robust Forests

Dec 10, 2024

Abstract: This paper proposes the automatic Doubly Robust Random Forest (DRRF) algorithm for estimating the conditional expectation of a moment functional in the presence of high-dimensional nuisance functions. DRRF combines the automatic debiasing framework using the Riesz representer (Chernozhukov et al., 2022) with non-parametric, forest-based estimation methods for the conditional moment (Athey et al., 2019; Oprescu et al., 2019). In contrast to existing methods, DRRF does not require prior knowledge of the form of the debiasing term nor impose restrictive parametric or semi-parametric assumptions on the target quantity. Additionally, it is computationally efficient for making predictions at multiple query points and significantly reduces runtime compared to methods such as Orthogonal Random Forest (Oprescu et al., 2019). We establish the consistency and asymptotic normality of the DRRF estimator under general assumptions, allowing for the construction of valid confidence intervals. Through extensive simulations in heterogeneous treatment effect (HTE) estimation, we demonstrate the superior performance of DRRF over benchmark approaches in terms of estimation accuracy, robustness, and computational efficiency.

Personalized Adaptation via In-Context Preference Learning

Oct 17, 2024

Abstract: Reinforcement Learning from Human Feedback (RLHF) is widely used to align Language Models (LMs) with human preferences. However, existing approaches often neglect individual user preferences, leading to suboptimal personalization. We present the Preference Pretrained Transformer (PPT), a novel approach for adaptive personalization using online user feedback. PPT leverages the in-context learning capabilities of transformers to dynamically adapt to individual preferences. Our approach consists of two phases: (1) an offline phase where we train a single policy model using a history-dependent loss function, and (2) an online phase where the model adapts to user preferences through in-context learning. We demonstrate PPT's effectiveness in a contextual bandit setting, showing that it achieves personalized adaptation superior to existing methods while significantly reducing the computational costs. Our results suggest the potential of in-context learning for scalable and efficient personalization in large language models.

Orthogonal Causal Calibration

Jun 04, 2024

Abstract: Estimates of causal parameters such as conditional average treatment effects and conditional quantile treatment effects play an important role in real-world decision making. Given this importance, one should ensure these estimators are calibrated. While there is a rich literature on calibrating estimators of non-causal parameters, very few methods have been derived for calibrating estimators of causal parameters, or more generally estimators of quantities involving nuisance parameters. In this work, we provide a general framework for calibrating predictors involving nuisance estimation. We consider a notion of calibration defined with respect to an arbitrary, nuisance-dependent loss $\ell$, under which we say an estimator $\theta$ is calibrated if its predictions cannot be changed on any level set to decrease loss. We prove generic upper bounds on the calibration error of any causal parameter estimate $\theta$ with respect to any loss $\ell$ using a concept called Neyman Orthogonality. Our bounds involve two decoupled terms: one measuring the error in estimating the unknown nuisance parameters, and the other representing the calibration error in a hypothetical world where the learned nuisance estimates were true. We use our bound to analyze the convergence of two sample-splitting algorithms for causal calibration. One algorithm, which applies to universally orthogonalizable loss functions, transforms the data into generalized pseudo-outcomes and applies an off-the-shelf calibration procedure. The other algorithm, which applies to conditionally orthogonalizable loss functions, extends the classical uniform mass binning algorithm to include nuisance estimation. Our results are exceedingly general, showing that essentially any existing calibration algorithm can be used in causal settings, with additional loss only arising from errors in nuisance estimation.
