Bryan Wilder

Combining digital data streams and epidemic networks for real time outbreak detection

Nov 10, 2025

Abstract: Responding to disease outbreaks requires close surveillance of their trajectories, but outbreak detection is hindered by the high noise in epidemic time series. Aggregating information across data sources has shown great denoising ability in other fields, but remains underexplored in epidemiology. Here, we present LRTrend, an interpretable machine learning framework to identify outbreaks in real time. LRTrend effectively aggregates diverse health and behavioral data streams within one region and learns disease-specific epidemic networks to aggregate information across regions. We reveal diverse epidemic clusters and connections across the United States that are not well explained by commonly used human mobility networks and may be informative for future public health coordination. We apply LRTrend to 2 years of COVID-19 data in 305 hospital referral regions and frequently detect regional Delta and Omicron waves within 2 weeks of the outbreak's start, when case counts are a small fraction of the wave's resulting peak.

OEUVRE: OnlinE Unbiased Variance-Reduced loss Estimation

Oct 26, 2025

Abstract: Online learning algorithms continually update their models as data arrive, making it essential to accurately estimate the expected loss at the current time step. The prequential method is an effective estimation approach which can be practically deployed in various ways. However, theoretical guarantees have previously been established under strong conditions on the algorithm, and practical algorithms have hyperparameters which require careful tuning. We introduce OEUVRE, an estimator that evaluates each incoming sample on the function learned at the current and previous time steps, recursively updating the loss estimate in constant time and memory. We use algorithmic stability, a property satisfied by many popular online learners, for optimal updates and prove consistency, convergence rates, and concentration bounds for our estimator. We design a method to adaptively tune OEUVRE's hyperparameters and test it across diverse online and stochastic tasks. We observe that OEUVRE matches or outperforms other estimators even when their hyperparameters are tuned with oracle access to ground truth.
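
For readers unfamiliar with the prequential ("test-then-train") idea the abstract builds on, here is a minimal sketch of a plain prequential loss estimate with exponential forgetting. It is not OEUVRE: the class name `FadingPrequentialLoss`, the `fading` parameter, and the toy running-mean learner are all assumptions made for illustration. It only shows how a loss estimate can be updated recursively in constant time and memory.

```python
class FadingPrequentialLoss:
    """Running loss estimate with exponential forgetting (hypothetical helper).

    Keeps two scalars, so each update costs O(1) time and memory.
    """

    def __init__(self, fading: float = 0.99):
        if not 0.0 < fading <= 1.0:
            raise ValueError("fading must be in (0, 1]")
        self.fading = fading
        self._weighted_loss = 0.0  # discounted sum of past losses
        self._weight = 0.0         # discounted count of past samples

    def update(self, loss: float) -> float:
        """Fold in the newest loss and return the current estimate."""
        self._weighted_loss = loss + self.fading * self._weighted_loss
        self._weight = 1.0 + self.fading * self._weight
        return self._weighted_loss / self._weight


# Test-then-train loop with a toy learner that predicts the running mean of y.
estimator = FadingPrequentialLoss(fading=0.9)
mean, count = 0.0, 0
for y in [1.0, 2.0, 3.0, 2.0, 1.0]:
    loss = (y - mean) ** 2                 # score the sample before learning from it
    current_estimate = estimator.update(loss)
    count += 1
    mean += (y - mean) / count             # only then update the learner
```

With `fading=1.0` the estimate reduces to the ordinary running average of all past losses; smaller values weight recent samples more heavily, which is the kind of hyperparameter the abstract says usually needs careful tuning.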

Predicting Language Models' Success at Zero-Shot Probabilistic Prediction

Sep 18, 2025

Abstract: Recent work has investigated the capabilities of large language models (LLMs) as zero-shot models for generating individual-level characteristics (e.g., to serve as risk models or augment survey datasets). However, when should a user have confidence that an LLM will provide high-quality predictions for their particular task? To address this question, we conduct a large-scale empirical study of LLMs' zero-shot predictive capabilities across a wide range of tabular prediction tasks. We find that LLMs' performance is highly variable, both on tasks within the same dataset and across different datasets. However, when the LLM performs well on the base prediction task, its predicted probabilities become a stronger signal for individual-level accuracy. Then, we construct metrics to predict LLMs' performance at the task level, aiming to distinguish between tasks where LLMs may perform well and where they are likely unsuitable. We find that some of these metrics, each of which is assessed without labeled data, yield strong signals of LLMs' predictive performance on new tasks.

Dependent Randomized Rounding for Budget Constrained Experimental Design

Jun 15, 2025

Abstract: Policymakers in resource-constrained settings require experimental designs that satisfy strict budget limits while ensuring precise estimation of treatment effects. We propose a framework that applies a dependent randomized rounding procedure to convert assignment probabilities into binary treatment decisions. Our proposed solution preserves the marginal treatment probabilities while inducing negative correlations among assignments, leading to improved estimator precision through variance reduction. We establish theoretical guarantees for the inverse propensity weighted and general linear estimators, and demonstrate through empirical studies that our approach yields efficient and accurate inference under fixed budget constraints.
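
As a rough illustration of what a dependent randomized rounding step can look like, the sketch below implements the classic pairwise scheme: pick two fractional probabilities, shift mass between them at random so that at least one becomes 0 or 1, and repeat. This is a generic textbook variant and may differ from the procedure in the paper; the function name `dependent_round` and the tolerance `tol` are assumptions.

```python
import random


def dependent_round(probs, rng=None, tol=1e-9):
    """Round probabilities to 0/1 while preserving each marginal and the total.

    Generic pairwise dependent rounding (an illustrative sketch).
    """
    rng = rng or random.Random()
    p = [float(x) for x in probs]
    fractional = [i for i, x in enumerate(p) if tol < x < 1 - tol]

    while len(fractional) >= 2:
        i, j = fractional[-1], fractional[-2]
        alpha = min(1 - p[i], p[j])   # largest shift of mass from j to i
        beta = min(p[i], 1 - p[j])    # largest shift of mass from i to j
        # Branch probabilities are chosen so that E[p[i]] and E[p[j]] are unchanged.
        if rng.random() < beta / (alpha + beta):
            p[i] += alpha
            p[j] -= alpha
        else:
            p[i] -= beta
            p[j] += beta
        # At least one of the two entries is now integral, so the loop terminates.
        fractional = [k for k in fractional if tol < p[k] < 1 - tol]

    if fractional:  # only possible when the probabilities do not sum to an integer
        k = fractional[0]
        p[k] = 1.0 if rng.random() < p[k] else 0.0

    return [int(round(x)) for x in p]


# Four units, budget of exactly two treatments (the probabilities sum to 2).
assignment = dependent_round([0.3, 0.7, 0.5, 0.5], rng=random.Random(0))
```

Because each step moves mass between two units without changing their sum, the number of treated units equals the budget whenever the probabilities sum to an integer, and raising one unit's assignment necessarily lowers another's, which is the source of the negative correlation.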

An AI-Based Public Health Data Monitoring System

Jun 04, 2025

Abstract: Public health experts need scalable approaches to monitor large volumes of health data (e.g., cases, hospitalizations, deaths) for outbreaks or data quality issues. Traditional alert-based monitoring systems struggle with modern public health data monitoring systems for several reasons, including that alerting thresholds need to be constantly reset and the data volumes may cause application lag. Instead, we propose a ranking-based monitoring paradigm that leverages new AI anomaly detection methods. Through a multi-year interdisciplinary collaboration, the resulting system has been deployed at a national organization to monitor up to 5,000,000 data points daily. A three-month longitudinal deployed evaluation revealed a significant improvement in monitoring objectives, with a 54x increase in reviewer speed efficiency compared to traditional alert-based methods. This work highlights the potential of human-centered AI to transform public health decision-making.

Explaining Concept Shift with Interpretable Feature Attribution

May 27, 2025

Abstract: Regardless of the amount of data a machine learning (ML) model is trained on, there will inevitably be data that differs from its training set, lowering model performance. Concept shift occurs when the distribution of labels conditioned on the features changes, causing even a well-tuned ML model to have learned a fundamentally incorrect representation. Identifying these shifted features provides unique insight into how one dataset differs from another, considering the difference may be across a scientifically relevant dimension, such as time, disease status, population, etc. In this paper, we propose SGShift, a model for detecting concept shift in tabular data and attributing reduced model performance to a sparse set of shifted features. SGShift models concept shift with a Generalized Additive Model (GAM) and performs subsequent feature selection to identify shifted features. We propose further extensions of SGShift by incorporating knockoffs to control false discoveries and an absorption term to account for models with poor fit to the data. We conduct extensive experiments in synthetic and real data across various ML models and find SGShift can identify shifted features with AUC $>0.9$ and recall $>90\%$, often 2 or 3 times as high as baseline methods.

Generate to Discriminate: Expert Routing for Continual Learning

Dec 22, 2024

Abstract: In many real-world settings, regulations and economic incentives permit the sharing of models but not data across institutional boundaries. In such scenarios, practitioners might hope to adapt models to new domains, without losing performance on previous domains (so-called catastrophic forgetting). While any single model may struggle to achieve this goal, learning an ensemble of domain-specific experts offers the potential to adapt more closely to each individual institution. However, a core challenge in this context is determining which expert to deploy at test time. In this paper, we propose Generate to Discriminate (G2D), a domain-incremental continual learning method that leverages synthetic data to train a domain-discriminator that routes samples at inference time to the appropriate expert. Surprisingly, we find that leveraging synthetic data in this capacity is more effective than using the samples to directly train the downstream classifier (the more common approach to leveraging synthetic data in the lifelong learning literature). We observe that G2D outperforms competitive domain-incremental learning methods on tasks in both vision and language modalities, providing a new perspective on the use of synthetic data in the lifelong learning literature.

Comparing Targeting Strategies for Maximizing Social Welfare with Limited Resources

Nov 11, 2024

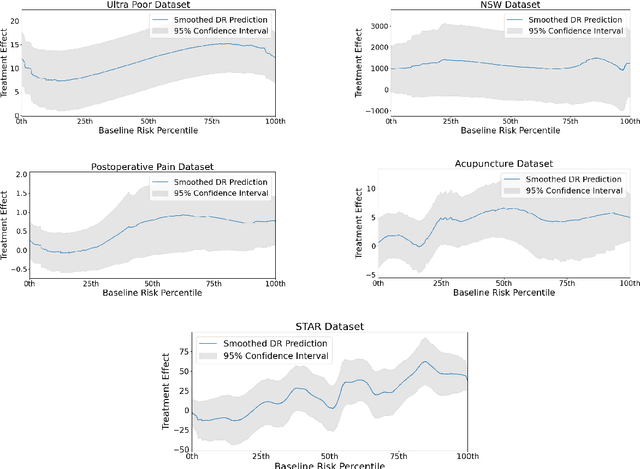

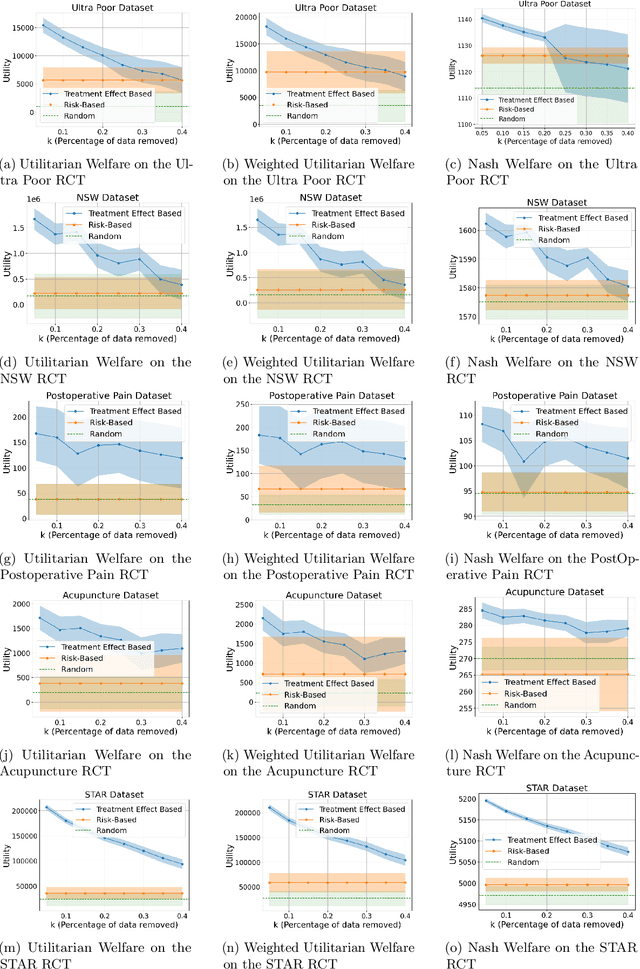

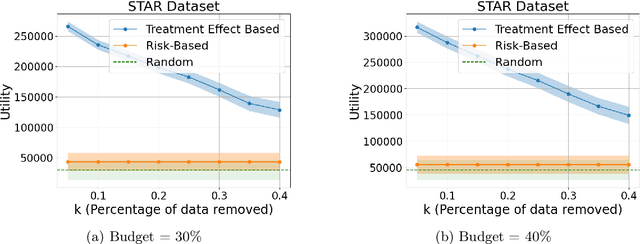

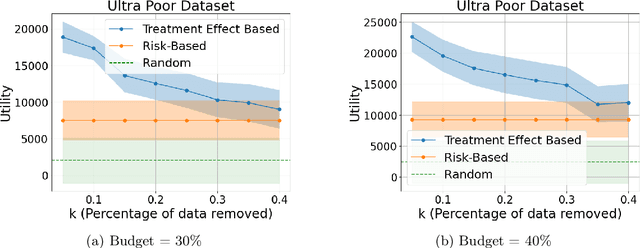

Abstract: Machine learning is increasingly used to select which individuals receive limited-resource interventions in domains such as human services, education, development, and more. However, it is often not apparent what the right quantity is for models to predict. In particular, policymakers rarely have access to data from a randomized controlled trial (RCT) that would enable accurate estimates of treatment effects -- which individuals would benefit more from the intervention. Observational data is more likely to be available, creating a substantial risk of bias in treatment effect estimates. Practitioners instead commonly use a technique termed "risk-based targeting" where the model is just used to predict each individual's status quo outcome (an easier, non-causal task). Those with higher predicted risk are offered treatment. There is currently almost no empirical evidence to inform which choices lead to the most effective machine learning-informed targeting strategies in social domains. In this work, we use data from 5 real-world RCTs in a variety of domains to empirically assess such choices. We find that risk-based targeting is almost always inferior to targeting based on even biased estimates of treatment effects. Moreover, these results hold even when the policymaker has strong normative preferences for assisting higher-risk individuals. Our results imply that, despite the widespread use of risk prediction models in applied settings, practitioners may be better off incorporating even weak evidence about heterogeneous causal effects to inform targeting.
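
To make the two targeting rules concrete, the sketch below ranks individuals either by predicted status quo risk or by estimated treatment effect and treats the top `k` under a fixed budget. The helper names and all numbers are hypothetical. The toy values were constructed so that the two rules disagree, and they carry no evidence about which rule performs better in practice.

```python
def top_k(scores, k):
    """Indices of the k highest scores (ties keep their original order)."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def total_benefit(selected, true_effects):
    """Sum of the true treatment effects over the selected individuals."""
    return sum(true_effects[i] for i in selected)


# Hypothetical toy population of four people, budget for two treatments.
predicted_risk = [0.9, 0.8, 0.3, 0.2]    # status quo outcome model (non-causal)
estimated_effect = [0.0, 0.1, 0.5, 0.4]  # possibly biased effect estimates
true_effect = [0.0, 0.1, 0.5, 0.4]       # unknown in practice; assumed here

risk_based = top_k(predicted_risk, k=2)      # selects individuals 0 and 1
effect_based = top_k(estimated_effect, k=2)  # selects individuals 2 and 3

risk_gain = total_benefit(risk_based, true_effect)
effect_gain = total_benefit(effect_based, true_effect)
```

The point of the sketch is only that the two rules optimize different quantities: the first ranks by who is worst off without the intervention, the second by who is expected to gain the most from it.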

Failure Modes of LLMs for Causal Reasoning on Narratives

Oct 31, 2024

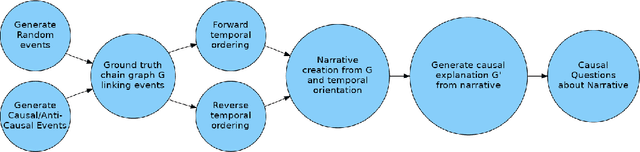

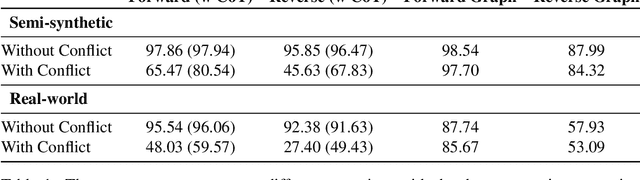

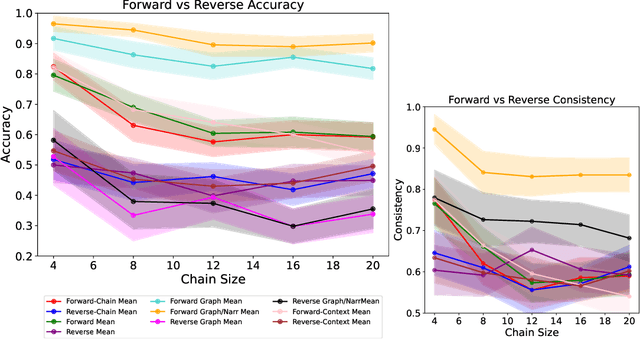

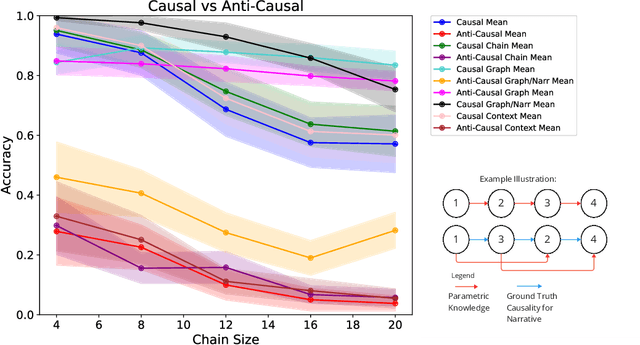

Abstract: In this work, we investigate the causal reasoning abilities of large language models (LLMs) through the representative problem of inferring causal relationships from narratives. We find that even state-of-the-art language models rely on unreliable shortcuts, both in terms of the narrative presentation and their parametric knowledge. For example, LLMs tend to determine causal relationships based on the topological ordering of events (i.e., earlier events cause later ones), resulting in lower performance whenever events are not narrated in their exact causal order. Similarly, we demonstrate that LLMs struggle with long-term causal reasoning and often fail when the narratives are long and contain many events. Additionally, we show LLMs appear to rely heavily on their parametric knowledge at the expense of reasoning over the provided narrative. This degrades their abilities whenever the narrative opposes parametric knowledge. We extensively validate these failure modes through carefully controlled synthetic experiments, as well as evaluations on real-world narratives. Finally, we observe that explicitly generating a causal graph generally improves performance while naive chain-of-thought is ineffective. Collectively, our results distill precise failure modes of current state-of-the-art models and can pave the way for future techniques to enhance causal reasoning in LLMs.

Accounting for Missing Covariates in Heterogeneous Treatment Estimation

Oct 21, 2024

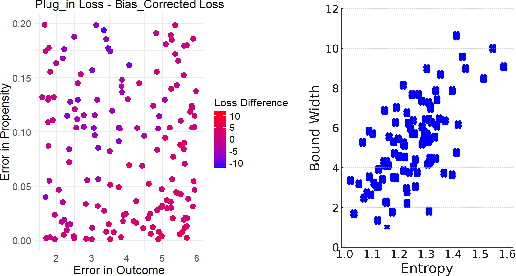

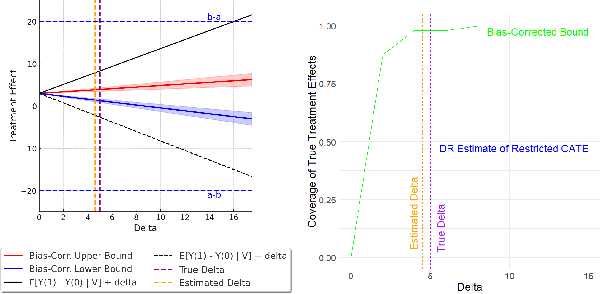

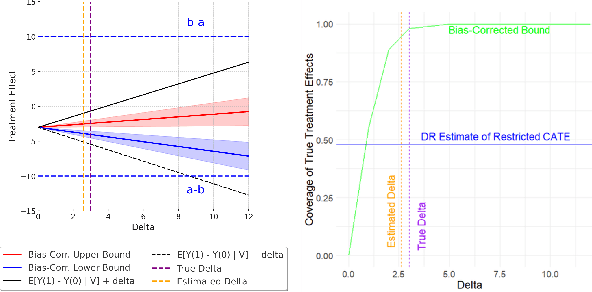

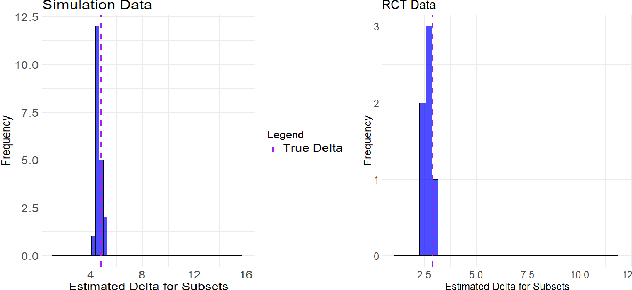

Abstract: Many applications of causal inference require using treatment effects estimated on a study population to make decisions in a separate target population. We consider the challenging setting where there are covariates that are observed in the target population that were not seen in the original study. Our goal is to estimate the tightest possible bounds on heterogeneous treatment effects conditioned on such newly observed covariates. We introduce a novel partial identification strategy based on ideas from ecological inference; the main idea is that estimates of conditional treatment effects for the full covariate set must marginalize correctly when restricted to only the covariates observed in both populations. Furthermore, we introduce a bias-corrected estimator for these bounds and prove that it enjoys fast convergence rates and statistical guarantees (e.g., asymptotic normality). Experimental results on both real and synthetic data demonstrate that our framework can produce bounds that are much tighter than would otherwise be possible.
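
The marginalization requirement described above can be written out as follows. The notation is an assumption, not taken from the paper: $X$ denotes covariates observed in both populations, $Z$ the covariates observed only in the target population (taken to be discrete here for simplicity), $\tau(x, z)$ the conditional treatment effect on the full covariate set, and $\tau(x)$ the effect conditioned on $X$ alone. By the law of total expectation,

$$
\tau(x) \;=\; \mathbb{E}\big[\tau(X, Z) \mid X = x\big] \;=\; \sum_{z} \tau(x, z)\, P(Z = z \mid X = x).
$$

Since $\tau(x)$ can be estimated from the study and $P(Z = z \mid X = x)$ from the target population, any candidate values of $\tau(x, z)$ must satisfy this linear constraint. That restricts the set of admissible values and, in this reading, is what makes bounds on $\tau(x, z)$ possible without observing $Z$ in the study. The paper's exact assumptions and constraints may differ.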
