Tong Mo

GuiLoMo: Allocating Expert Number and Rank for LoRA-MoE via Bilevel Optimization with GuidedSelection Vectors

Jun 17, 2025

Abstract:Parameter-efficient fine-tuning (PEFT) methods, particularly Low-Rank Adaptation (LoRA), offer an efficient way to adapt large language models with reduced computational costs. However, their performance is limited by the small number of trainable parameters. Recent work combines LoRA with the Mixture-of-Experts (MoE), i.e., LoRA-MoE, to enhance capacity, but two limitations still hinder the full exploitation of its potential: 1) the influence of downstream tasks is neglected when assigning expert numbers, and 2) ranks are assigned uniformly across all LoRA experts, which restricts representational diversity. To mitigate these gaps, we propose GuiLoMo, a fine-grained layer-wise strategy for allocating expert numbers and ranks with GuidedSelection Vectors (GSVs). GSVs are learned via a prior bilevel optimization process to capture both model- and task-specific needs, and are then used to allocate optimal expert numbers and ranks. Experiments on three backbone models across diverse benchmarks show that GuiLoMo consistently achieves superior or comparable performance to all baselines. Further analysis offers key insights into how expert numbers and ranks vary across layers and tasks, highlighting the benefits of adaptive expert configuration. Our code is available at https://github.com/Liar406/Gui-LoMo.git.
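To make the rank-allocation point concrete, here is a minimal arithmetic sketch of how the trainable-parameter count of one LoRA-MoE layer depends on per-expert ranks. It assumes the standard LoRA factorization (a `rank x d_in` down-projection and a `d_out x rank` up-projection per expert) and ignores the router; the function name, the dimensions, and the example rank lists are hypothetical and are not taken from GuiLoMo.

```python
def lora_moe_param_count(d_in, d_out, expert_ranks):
    """Trainable parameters of one LoRA-MoE layer (router excluded).

    Each expert with rank r contributes r * d_in (matrix A)
    plus d_out * r (matrix B) parameters.
    """
    return sum(r * (d_in + d_out) for r in expert_ranks)


# Hypothetical layer size and allocations, for illustration only.
d_in = d_out = 4096
uniform = [8, 8, 8, 8]        # same rank for every expert
non_uniform = [16, 8, 4, 4]   # same total rank, spread unevenly

uniform_params = lora_moe_param_count(d_in, d_out, uniform)
non_uniform_params = lora_moe_param_count(d_in, d_out, non_uniform)
```

Because the count is linear in rank, the two allocations above cost the same number of parameters; they differ only in how capacity is distributed across experts.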

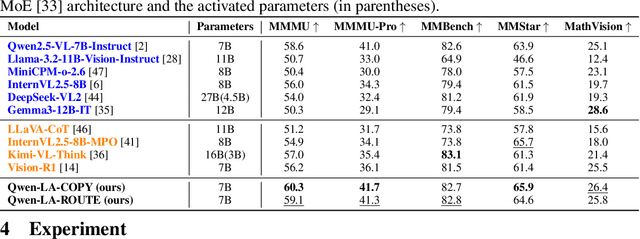

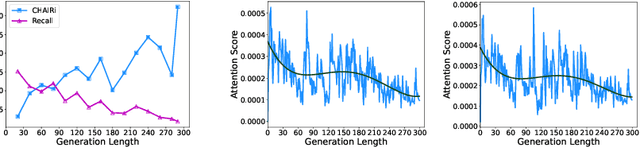

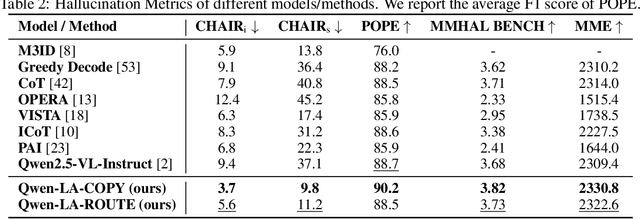

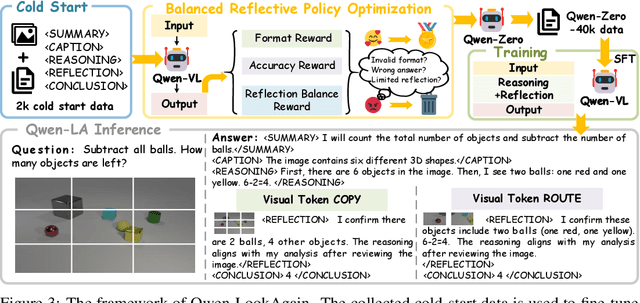

Qwen Look Again: Guiding Vision-Language Reasoning Models to Re-attention Visual Information

May 29, 2025

Abstract:Inference time scaling drives extended reasoning to enhance the performance of Vision-Language Models (VLMs), thus forming powerful Vision-Language Reasoning Models (VLRMs). However, long reasoning dilutes visual tokens, so visual information receives less attention, which may trigger hallucinations. Although introducing text-only reflection processes shows promise in language models, we demonstrate that it is insufficient to suppress hallucinations in VLMs. To address this issue, we introduce Qwen-LookAgain (Qwen-LA), a novel VLRM designed to mitigate hallucinations by incorporating a vision-text reflection process that guides the model to re-attend to visual information during reasoning. We first propose a reinforcement learning method, Balanced Reflective Policy Optimization (BRPO), which guides the model to decide on its own when to generate vision-text reflection and to balance the number and length of reflections. Then, we formally prove that VLRMs lose attention to visual tokens as reasoning progresses, and demonstrate that supplementing visual information during reflection enhances visual attention. Therefore, during training and inference, Visual Token COPY and Visual Token ROUTE are introduced to force the model to re-attend to visual information at the visual level, addressing the limitations of text-only reflection. Experiments on multiple visual QA datasets and hallucination metrics indicate that Qwen-LA achieves leading accuracy while reducing hallucinations. Our code is available at: https://github.com/Liar406/Look_Again.

CLEAR-KGQA: Clarification-Enhanced Ambiguity Resolution for Knowledge Graph Question Answering

Apr 13, 2025

Abstract:This study addresses the challenge of ambiguity in knowledge graph question answering (KGQA). While recent KGQA systems have made significant progress, particularly with the integration of large language models (LLMs), they typically assume user queries are unambiguous, an assumption that rarely holds in real-world applications. To address this limitation, we propose a novel framework that dynamically handles both entity ambiguity (e.g., distinguishing between entities with similar names) and intent ambiguity (e.g., clarifying different interpretations of user queries) through interactive clarification. Our approach employs a Bayesian inference mechanism to quantify query ambiguity and guide LLMs in determining when and how to request clarification from users within a multi-turn dialogue framework. We further develop a two-agent interaction framework where an LLM-based user simulator enables iterative refinement of logical forms through simulated user feedback. Experimental results on the WebQSP and CWQ datasets demonstrate that our method significantly improves performance by effectively resolving semantic ambiguities. Additionally, we contribute a refined dataset of disambiguated queries, derived from interaction histories, to facilitate future research in this direction.

GraphBC: Improving LLMs for Better Graph Data Processing

Jan 24, 2025

Abstract:The success of Large Language Models (LLMs) in various domains has led researchers to apply them to graph-related problems by converting graph data into natural language text. However, unlike graph data, natural language inherently has sequential order. We observe that when the order of nodes or edges in the natural language description of a graph is shuffled, despite describing the same graph, model performance fluctuates between high performance and random guessing. Additionally, due to the limited input context length of LLMs, current methods typically randomly sample neighbors of target nodes as representatives of their neighborhood, which may not always be effective for accurate reasoning. To address these gaps, we introduce GraphBC. This novel model framework features an Order Selector Module to ensure proper serialization order of the graph and a Subgraph Sampling Module to sample subgraphs with better structure for better reasoning. Furthermore, we propose Graph CoT obtained through distillation, and enhance LLM's reasoning and zero-shot learning capabilities for graph tasks through instruction tuning. Experiments on multiple datasets for node classification and graph question-answering demonstrate that GraphBC improves LLMs' performance and generalization ability on graph tasks.
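The order-sensitivity observation can be illustrated with a small sketch: the same graph, serialized with its edges in a different order, produces a different prompt string. The sentence template and the `canonical` helper below are hypothetical; sorting edges is only a simple stand-in for fixing one serialization order and is not GraphBC's Order Selector Module.

```python
def serialize(edges):
    """Turn an edge list into a natural-language description."""
    return " ".join(f"Node {u} is connected to node {v}." for u, v in edges)


def canonical(edges):
    """Hypothetical fix: sort undirected edges so every ordering
    of the same graph serializes to the same text."""
    return sorted((min(u, v), max(u, v)) for u, v in edges)


edges = [(0, 1), (1, 2), (2, 3), (0, 3)]
reordered = list(reversed(edges))  # same graph, different edge order

text_a = serialize(edges)
text_b = serialize(reordered)

# The two edge lists describe the same undirected graph...
same_graph = set(map(frozenset, edges)) == set(map(frozenset, reordered))
# ...but the model would see two different input strings.
same_text = text_a == text_b
```

Here `same_graph` is true while `same_text` is false, which is the situation in which the abstract reports fluctuating model performance.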

Domaino1s: Guiding LLM Reasoning for Explainable Answers in High-Stakes Domains

Jan 24, 2025

Abstract:Large Language Models (LLMs) are widely applied to downstream domains. However, current LLMs for high-stakes domain tasks, such as financial investment and legal QA, typically generate brief answers without reasoning processes and explanations. This limits users' confidence in making decisions based on their responses. While original CoT shows promise, it lacks self-correction mechanisms during reasoning. This work introduces Domaino1s, which enhances LLMs' reasoning capabilities on domain tasks through supervised fine-tuning and tree search. We construct CoT-stock-2k and CoT-legal-2k datasets for fine-tuning models that activate domain-specific reasoning steps based on their judgment. Additionally, we propose Selective Tree Exploration to spontaneously explore solution spaces and sample optimal reasoning paths to improve performance. We also introduce PROOF-Score, a new metric for evaluating domain models' explainability, complementing traditional accuracy metrics with richer assessment dimensions. Extensive experiments on stock investment recommendation and legal reasoning QA tasks demonstrate Domaino1s's leading performance and explainability. Our code is available at https://anonymous.4open.science/r/Domaino1s-006F/.

Mitigating Hallucinations on Object Attributes using Multiview Images and Negative Instructions

Jan 17, 2025

Abstract:Current popular Large Vision-Language Models (LVLMs) suffer from Hallucinations on Object Attributes (HoOA), leading to incorrect determination of fine-grained attributes in the input images. Leveraging significant advancements in 3D generation from a single image, this paper proposes a novel method to mitigate HoOA in LVLMs. This method utilizes multiview images sampled from generated 3D representations as visual prompts for LVLMs, thereby providing more visual information from other viewpoints. Furthermore, we observe that the input order of multiple multiview images significantly affects the performance of LVLMs. Consequently, we have devised Multiview Image Augmented VLM (MIAVLM), incorporating a Multiview Attributes Perceiver (MAP) submodule capable of simultaneously eliminating the influence of input image order and aligning visual information from multiview images with Large Language Models (LLMs). In addition, we design and employ negative instructions to mitigate LVLMs' bias towards "Yes" responses. Comprehensive experiments demonstrate the effectiveness of our method.

Adaptive Spatiotemporal Augmentation for Improving Dynamic Graph Learning

Jan 17, 2025

Abstract:Dynamic graph augmentation is used to improve the performance of dynamic GNNs. Most methods assume temporal locality, meaning that recent edges are more influential than earlier edges. However, for temporal changes in edges caused by random noise, overemphasizing recent edges while neglecting earlier ones may lead to the model capturing noise. To address this issue, we propose STAA (SpatioTemporal Activity-Aware Random Walk Diffusion). STAA identifies nodes likely to have noisy edges in spatiotemporal dimensions. Spatially, it analyzes critical topological positions through graph wavelet coefficients. Temporally, it analyzes edge evolution through graph wavelet coefficient change rates. Then, random walks are used to reduce the weights of noisy edges, deriving a diffusion matrix containing spatiotemporal information as an augmented adjacency matrix for dynamic GNN learning. Experiments on multiple datasets show that STAA outperforms other dynamic graph augmentation methods in node classification and link prediction tasks.

Order Matters: Exploring Order Sensitivity in Multimodal Large Language Models

Oct 22, 2024

Abstract:Multimodal Large Language Models (MLLMs) utilize multimodal contexts consisting of text, images, or videos to solve various multimodal tasks. However, we find that changing the order of multimodal input can cause the model's performance to fluctuate between advanced performance and random guessing. This phenomenon exists in both single-modality (text-only or image-only) and mixed-modality (image-text-pair) contexts. Furthermore, we demonstrate that popular MLLMs pay special attention to certain multimodal context positions, particularly the beginning and end. Leveraging this special attention, we place key video frames and important image/text content in special positions within the context and submit them to the MLLM for inference. This method results in average performance gains of 14.7% for video-caption matching and 17.8% for visual question answering tasks. Additionally, we propose a new metric, Position-Invariant Accuracy (PIA), to address order bias in MLLM evaluation. Our research findings contribute to a better understanding of Multi-Modal In-Context Learning (MMICL) and provide practical strategies for enhancing MLLM performance without increasing computational costs.

Aeroengine performance prediction using a physical-embedded data-driven method

Jun 29, 2024

Abstract:Accurate and efficient prediction of aeroengine performance is of paramount importance for engine design, maintenance, and optimization endeavours. However, existing methodologies often struggle to strike an optimal balance among predictive accuracy, computational efficiency, modelling complexity, and data dependency. To address these challenges, we propose a strategy that synergistically combines domain knowledge from both the aeroengine and neural network realms to enable real-time prediction of engine performance parameters. Leveraging aeroengine domain knowledge, we judiciously design the network structure and regulate the internal information flow. Concurrently, drawing upon neural network domain expertise, we devise four distinct feature fusion methods and introduce an innovative loss function formulation. To rigorously evaluate the effectiveness and robustness of our proposed strategy, we conduct comprehensive validation across two distinct datasets. The empirical results demonstrate: (1) the evident advantages of our tailored loss function; (2) our model's ability to maintain equal or superior performance with a reduced parameter count; (3) our model's reduced data dependency compared to generalized neural network architectures; (4) our model's greater interpretability compared to traditional black-box machine learning methods.

Supportiveness-based Knowledge Rewriting for Retrieval-augmented Language Modeling

Jun 12, 2024

Abstract:Retrieval-augmented language models (RALMs) have recently shown great potential in mitigating the limitations of implicit knowledge in LLMs, such as untimely updating of the latest expertise and unreliable retention of long-tail knowledge. However, neither the external knowledge base nor the retriever can guarantee reliability, so the retrieved knowledge may be unhelpful or even misleading for LLM generation. In this paper, we introduce Supportiveness-based Knowledge Rewriting (SKR), a robust and pluggable knowledge rewriter inherently optimized for LLM generation. Specifically, we introduce the novel concept of "supportiveness", which represents how effectively a knowledge piece facilitates downstream tasks, by considering the perplexity impact of augmented knowledge on the response text of a white-box LLM. Based on knowledge supportiveness, we first design a training data curation strategy for our rewriter model, effectively identifying and filtering out poor or irrelevant rewrites (e.g., with low supportiveness scores) to improve data efficacy. We then introduce the direct preference optimization (DPO) algorithm to align the generated rewrites to optimal supportiveness, guiding the rewriter model to summarize augmented content that better improves the final response. Comprehensive evaluations across six popular knowledge-intensive tasks and four LLMs have demonstrated the effectiveness and superiority of SKR. With only 7B parameters, SKR has shown better knowledge rewriting capability than GPT-4, the current state-of-the-art general-purpose LLM.
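As a rough illustration of a perplexity-based supportiveness signal, the sketch below compares the perplexity of a response with and without a knowledge piece in the context. The abstract does not give the exact formula, so the ratio form, the function names, and the example log-probabilities are all assumptions made here for illustration; in practice the per-token log-probabilities would come from a white-box LLM.

```python
import math


def perplexity(token_logprobs):
    """Perplexity of a response from its per-token natural-log probabilities."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def supportiveness(logprobs_without, logprobs_with):
    """Assumed score: ratio of response perplexity without the knowledge
    to perplexity with it. Values above 1 mean the knowledge made the
    reference response more likely."""
    return perplexity(logprobs_without) / perplexity(logprobs_with)


# Hypothetical log-probabilities of the same two-token response.
without_knowledge = [-2.0, -2.0]
with_knowledge = [-1.0, -1.0]

score = supportiveness(without_knowledge, with_knowledge)
```

Under this assumed definition, a rewrite that leaves the score at or below 1 would be a candidate for filtering during data curation.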
