Tingting Li

DrawSim-PD: Simulating Student Science Drawings to Support NGSS-Aligned Teacher Diagnostic Reasoning

Feb 02, 2026

Abstract:Developing expertise in diagnostic reasoning requires practice with diverse student artifacts, yet privacy regulations prohibit sharing authentic student work for teacher professional development (PD) at scale. We present DrawSim-PD, the first generative framework that simulates NGSS-aligned, student-like science drawings exhibiting controllable pedagogical imperfections to support teacher training. Central to our approach are capability profiles, structured cognitive states encoding what students at each performance level can and cannot yet demonstrate. These profiles ensure cross-modal coherence across generated outputs: (i) a student-like drawing, (ii) a first-person reasoning narrative, and (iii) a teacher-facing diagnostic concept map. Using 100 curated NGSS topics spanning K-12, we construct a corpus of 10,000 systematically structured artifacts. Through an expert-based feasibility evaluation, K-12 science educators verified the artifacts' alignment with NGSS expectations (>84% positive on core items) and utility for interpreting student thinking, while identifying refinement opportunities for grade-band extremes. We release this open infrastructure to overcome data scarcity barriers in visual assessment research.

Adaptive Fidelity Estimation for Quantum Programs with Graph-Guided Noise Awareness

Jan 21, 2026

Abstract:Fidelity estimation is a critical yet resource-intensive step in testing quantum programs on noisy intermediate-scale quantum (NISQ) devices, where the required number of measurements is difficult to predefine due to hardware noise, device heterogeneity, and transpilation-induced circuit transformations. We present QuFid, an adaptive and noise-aware framework that determines measurement budgets online by leveraging circuit structure and runtime statistical feedback. QuFid models a quantum program as a directed acyclic graph (DAG) and employs a control-flow-aware random walk to characterize noise propagation along gate dependencies. Backend-specific effects are captured via transpilation-induced structural deformation metrics, which are integrated into the random-walk formulation to induce a noise-propagation operator. Circuit complexity is then quantified through the spectral characteristics of this operator, providing a principled and lightweight basis for adaptive measurement planning. Experiments on 18 quantum benchmarks executed on IBM Quantum backends show that QuFid significantly reduces measurement cost compared to fixed-shot and learning-based baselines, while consistently maintaining acceptable fidelity bias.

MARS2 2025 Challenge on Multimodal Reasoning: Datasets, Methods, Results, Discussion, and Outlook

Sep 17, 2025

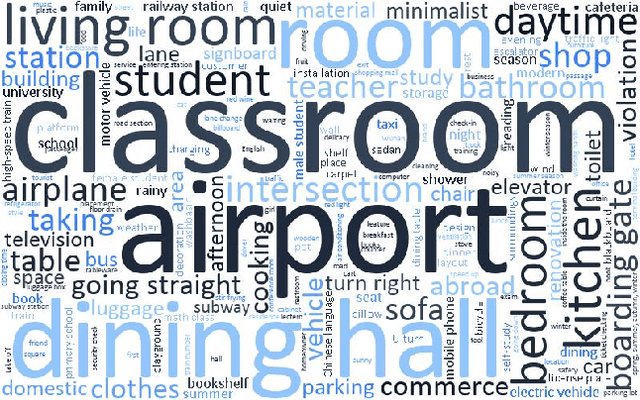

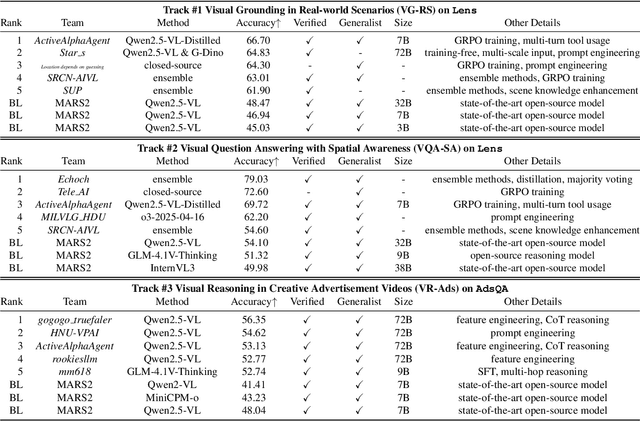

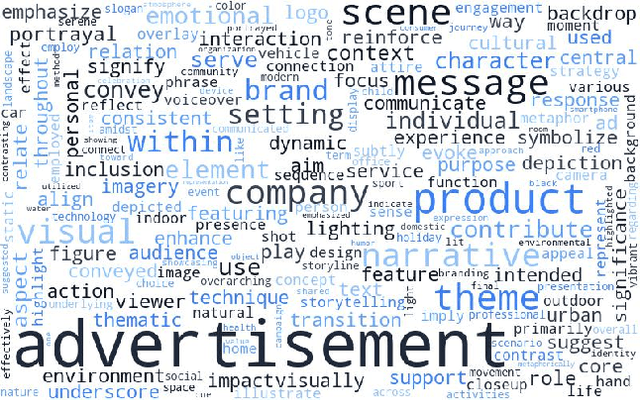

Abstract:This paper reviews the MARS2 2025 Challenge on Multimodal Reasoning. We aim to bring together different approaches in multimodal machine learning and LLMs via a large benchmark. We hope it better allows researchers to follow the state-of-the-art in this very dynamic area. Meanwhile, a growing number of testbeds have boosted the evolution of general-purpose large language models. Thus, this year's MARS2 focuses on real-world and specialized scenarios to broaden the multimodal reasoning applications of MLLMs. Our organizing team released two tailored datasets, Lens and AdsQA, as test sets, which support general reasoning in 12 daily scenarios and domain-specific reasoning in advertisement videos, respectively. We evaluated 40+ baselines that include both generalist MLLMs and task-specific models, and opened up three competition tracks, i.e., Visual Grounding in Real-world Scenarios (VG-RS), Visual Question Answering with Spatial Awareness (VQA-SA), and Visual Reasoning in Creative Advertisement Videos (VR-Ads). Finally, 76 teams from renowned academic and industrial institutions registered, and 40+ valid submissions (out of 1200+) have been included in our ranking lists. Our datasets, code sets (40+ baselines and 15+ participants' methods), and rankings are publicly available on the MARS2 workshop website and our GitHub organization page https://github.com/mars2workshop/, where our updates and announcements of upcoming events will be continuously provided.

Enhancing LLM-Based Short Answer Grading with Retrieval-Augmented Generation

Apr 07, 2025

Abstract:Short answer assessment is a vital component of science education, allowing evaluation of students' complex three-dimensional understanding. Large language models (LLMs) that possess human-like ability in linguistic tasks are increasingly popular in assisting human graders to reduce their workload. However, LLMs' limitations in domain knowledge restrict their understanding of task-specific requirements and hinder their ability to achieve satisfactory performance. Retrieval-augmented generation (RAG) emerges as a promising solution by enabling LLMs to access relevant domain-specific knowledge during assessment. In this work, we propose an adaptive RAG framework for automated grading that dynamically retrieves and incorporates domain-specific knowledge based on the question and student answer context. Our approach combines semantic search and curated educational sources to retrieve valuable reference materials. Experimental results on a science education dataset demonstrate that our system achieves an improvement in grading accuracy compared to baseline LLM approaches. The findings suggest that RAG-enhanced grading systems can serve as reliable support with efficient performance gains.

Probing many-body Bell correlation depth with superconducting qubits

Jun 25, 2024

Abstract:Quantum nonlocality describes a stronger form of quantum correlation than that of entanglement. It refutes Einstein's belief of local realism and is among the most distinctive and enigmatic features of quantum mechanics. It is a crucial resource for achieving quantum advantages in a variety of practical applications, ranging from cryptography and certified random number generation via self-testing to machine learning. Nevertheless, the detection of nonlocality, especially in quantum many-body systems, is notoriously challenging. Here, we report an experimental certification of genuine multipartite Bell correlations, which signal nonlocality in quantum many-body systems, up to 24 qubits with a fully programmable superconducting quantum processor. In particular, we employ energy as a Bell correlation witness and variationally decrease the energy of a many-body system across a hierarchy of thresholds, below which an increasing Bell correlation depth can be certified from experimental data. As an illustrating example, we variationally prepare the low-energy state of a two-dimensional honeycomb model with 73 qubits and certify its Bell correlations by measuring an energy that surpasses the corresponding classical bound with up to 48 standard deviations. In addition, we variationally prepare a sequence of low-energy states and certify their genuine multipartite Bell correlations up to 24 qubits via energies measured efficiently by parity oscillation and multiple quantum coherence techniques. Our results establish a viable approach for preparing and certifying multipartite Bell correlations, which provide not only a finer benchmark beyond entanglement for quantum devices, but also a valuable guide towards exploiting multipartite Bell correlation in a wide spectrum of practical applications.

A Light-weight Transformer-based Self-supervised Matching Network for Heterogeneous Images

Apr 30, 2024

Abstract:Matching visible and near-infrared (NIR) images remains a significant challenge in remote sensing image fusion. The nonlinear radiometric differences between heterogeneous remote sensing images make the image matching task even more difficult. Deep learning has gained substantial attention in computer vision tasks in recent years. However, many methods rely on supervised learning and necessitate large amounts of annotated data. Nevertheless, annotated data is frequently limited in the field of remote sensing image matching. To address this challenge, this paper proposes a novel keypoint descriptor approach that obtains robust feature descriptors via a self-supervised matching network. A light-weight transformer network, termed as LTFormer, is designed to generate deep-level feature descriptors. Furthermore, we implement an innovative triplet loss function, LT Loss, to enhance the matching performance further. Our approach outperforms conventional hand-crafted local feature descriptors and proves equally competitive compared to state-of-the-art deep learning-based methods, even amidst the shortage of annotated data.
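
For readers unfamiliar with triplet losses, the following is a minimal sketch of the standard triplet margin loss on plain Python lists. It is not the paper's LT Loss, whose exact form the abstract does not give; the function names, the Euclidean distance, and the margin value of 1.0 are all assumptions chosen for illustration.

```python
import math
from typing import Sequence


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two descriptors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_margin_loss(
    anchor: Sequence[float],
    positive: Sequence[float],
    negative: Sequence[float],
    margin: float = 1.0,  # assumed value, not taken from the paper
) -> float:
    """Standard triplet loss: max(0, d(a, p) - d(a, n) + margin).

    The loss is zero once the negative descriptor is at least `margin`
    farther from the anchor than the positive descriptor is.
    """
    return max(0.0, l2_distance(anchor, positive) - l2_distance(anchor, negative) + margin)


# Toy descriptors (hypothetical values).
anchor = [0.0, 0.0]
positive = [0.0, 1.0]       # distance 1 from the anchor
far_negative = [3.0, 4.0]   # distance 5 from the anchor
near_negative = [1.0, 0.0]  # distance 1 from the anchor

easy_loss = triplet_margin_loss(anchor, positive, far_negative)
hard_loss = triplet_margin_loss(anchor, positive, near_negative)
```

The far negative already satisfies the margin, so it contributes no loss, while the near negative is penalized; this is the general mechanism by which triplet losses pull matching descriptors together and push non-matching ones apart.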

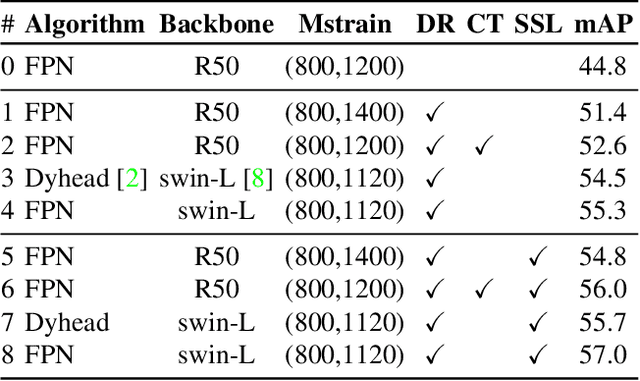

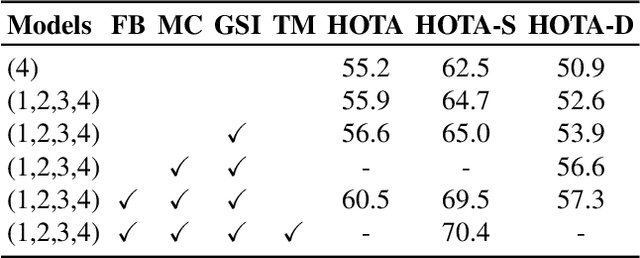

SDTracker: Synthetic Data Based Multi-Object Tracking

Mar 26, 2023

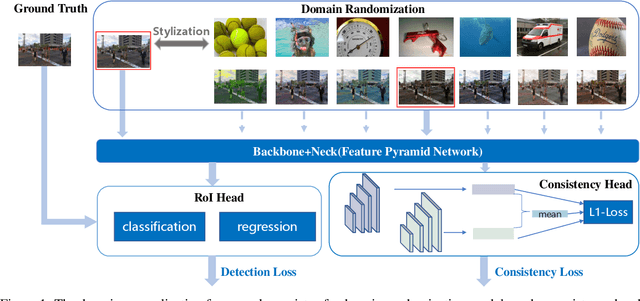

Abstract:We present SDTracker, a method that harnesses the potential of synthetic data for multi-object tracking of real-world scenes in a domain generalization and semi-supervised fashion. First, we use the ImageNet dataset as an auxiliary to randomize the style of synthetic data. With out-of-domain data, we further enforce pyramid consistency loss across different "stylized" images from the same sample to learn domain invariant features. Second, we adopt the pseudo-labeling method to effectively utilize the unlabeled MOT17 training data. To obtain high-quality pseudo-labels, we apply proximal policy optimization (PPO2) algorithm to search confidence thresholds for each sequence. When using the unlabeled MOT17 training set, combined with the pure-motion tracking strategy upgraded via developed post-processing, we finally reach 61.4 HOTA.
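
The pseudo-labeling step can be illustrated with a small sketch that keeps only detections whose confidence meets a per-sequence threshold. This is an assumption-laden illustration, not the SDTracker implementation: the thresholds here are fixed by hand rather than searched with PPO2, and the sequence names, box identifiers, and the default threshold of 0.5 are hypothetical.

```python
from typing import Dict, List, Tuple

Detection = Tuple[str, float]  # (hypothetical box id, confidence score)


def select_pseudo_labels(
    detections_by_sequence: Dict[str, List[Detection]],
    thresholds: Dict[str, float],
    default_threshold: float = 0.5,  # assumed fallback, not from the paper
) -> Dict[str, List[Detection]]:
    """Keep detections whose confidence is at least the sequence's threshold."""
    kept: Dict[str, List[Detection]] = {}
    for sequence, detections in detections_by_sequence.items():
        threshold = thresholds.get(sequence, default_threshold)
        kept[sequence] = [d for d in detections if d[1] >= threshold]
    return kept


# Hypothetical detector output on two unlabeled sequences.
detections = {
    "seq-a": [("box1", 0.92), ("box2", 0.55), ("box3", 0.30)],
    "seq-b": [("box4", 0.65), ("box5", 0.45)],
}
# Hand-picked thresholds standing in for the searched ones.
per_sequence_thresholds = {"seq-a": 0.6}

pseudo_labels = select_pseudo_labels(detections, per_sequence_thresholds)
```

A per-sequence threshold matters because detector confidence is not calibrated equally across scenes; a single global cut-off would admit noisy labels in some sequences and discard good ones in others.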

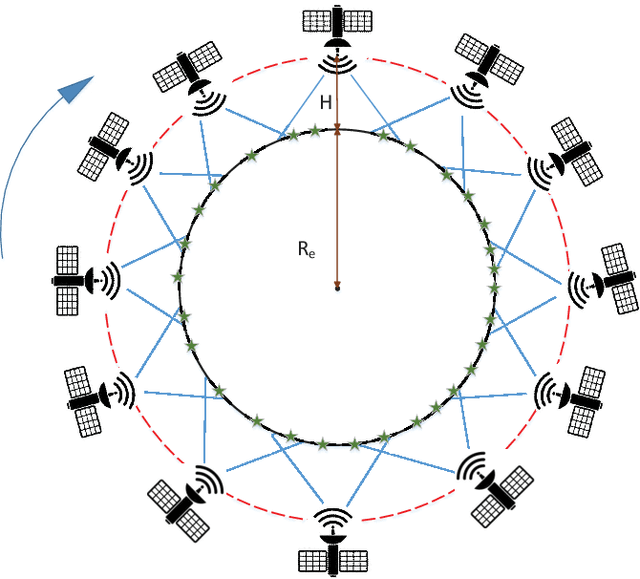

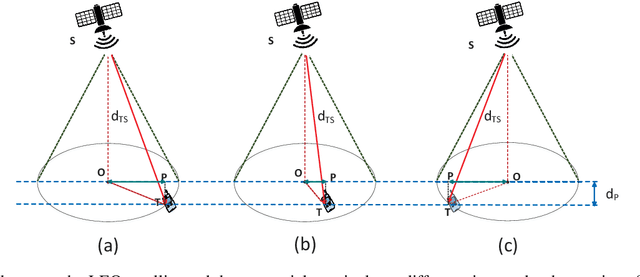

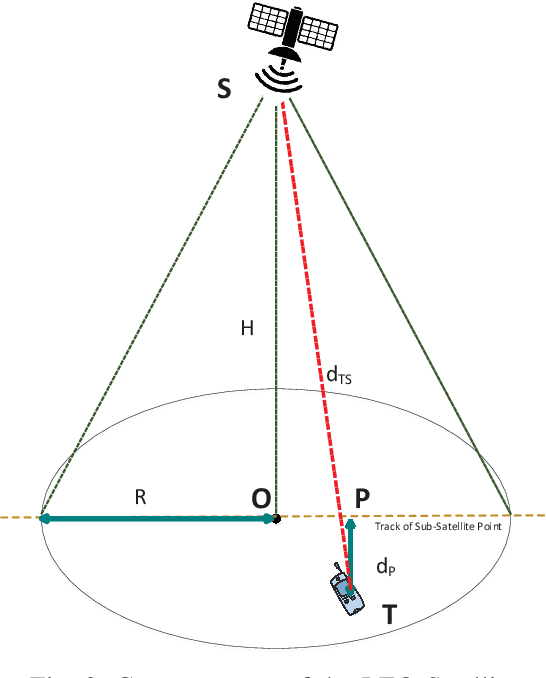

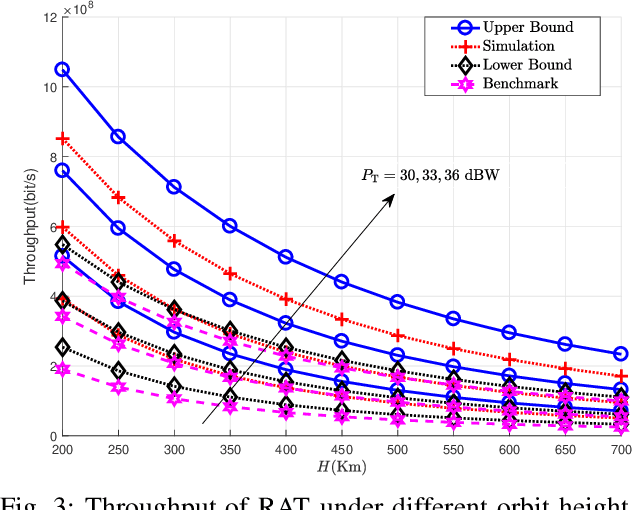

Effect of Strong Time-Varying Transmission Distance on LEO Satellite-Terrestrial Deliveries

Jun 11, 2022

Abstract:In this paper, we investigate the effect of the strong time-varying transmission distance on the performance of the low-earth orbit (LEO) satellite-terrestrial transmission (STT) system. We propose a new analytical framework using finite-state Markov channel (FSMC) model and time discretization method. Moreover, to demonstrate the applications of the proposed framework, the performances of two adaptive transmissions, rate-adaptive transmission (RAT) and power-adaptive transmission (PAT) schemes, are evaluated for the cases when the transmit power or the transmission rate at the LEO satellite is fixed. Closed-form expressions for the throughput, energy efficiency (EE), and delay outage rate (DOR) of the considered systems are derived and verified, which are capable of addressing the capacity, energy efficiency, and outage rate performance of the considered LEO STT scenarios with the proposed analytical framework.

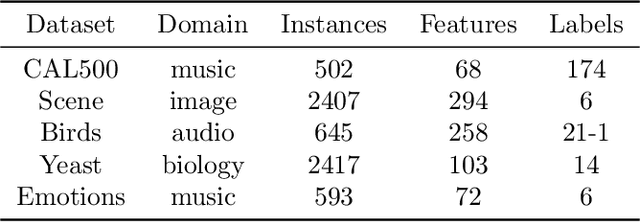

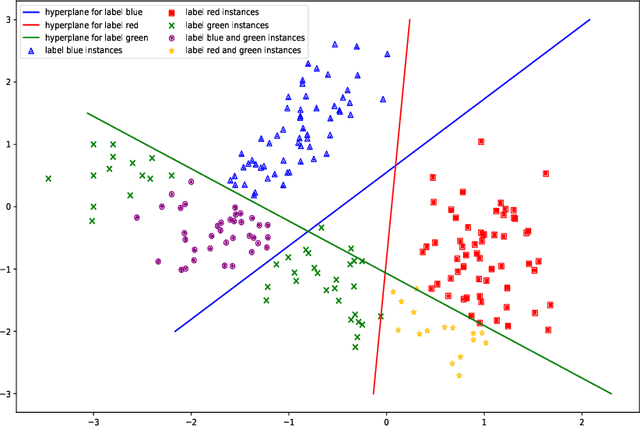

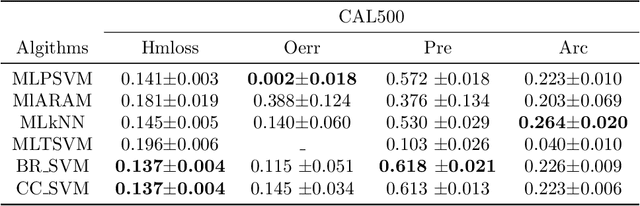

MLPSVM:A new parallel support vector machine to multi-label learning

Apr 13, 2020

Abstract:Multi-label learning has attracted the attention of the machine learning community. The problem transformation method Binary Relevance converts a familiar single-label algorithm into a multi-label algorithm. The binary relevance method is widely used because of its simple structure and efficient algorithm. However, binary relevance does not consider the links between labels, which makes some tasks cumbersome to handle. This paper proposes a multi-label learning algorithm that can also be used for single-label classification. It is based on standard support vector machines and changes the original single decision hyperplane into two parallel decision hyperplanes, which we call the multi-label parallel support vector machine (MLPSVM). At the end of the article, MLPSVM is compared with other multi-label learning algorithms. The experimental results show that the algorithm performs well on data sets.
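
As background on the Binary Relevance baseline mentioned in the abstract, here is a minimal sketch that trains one independent binary classifier per label. It does not implement MLPSVM; the nearest-centroid base learner is a hypothetical stand-in chosen only to keep the example dependency-free, and all function names are assumptions.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
BinaryModel = Callable[[Vector], int]


def train_centroid_classifier(X: List[Vector], y: List[int]) -> BinaryModel:
    """Stand-in base learner: predict the class whose centroid is nearest.

    Assumes both classes (0 and 1) occur at least once in `y`.
    """
    def centroid(rows: List[Vector]) -> List[float]:
        return [sum(column) / len(rows) for column in zip(*rows)]

    def squared_distance(a: Vector, b: Vector) -> float:
        return sum((u - v) ** 2 for u, v in zip(a, b))

    positive = centroid([x for x, label in zip(X, y) if label == 1])
    negative = centroid([x for x, label in zip(X, y) if label == 0])
    return lambda x: 1 if squared_distance(x, positive) < squared_distance(x, negative) else 0


def binary_relevance_fit(X: List[Vector], Y: List[List[int]]) -> List[BinaryModel]:
    """Train one binary model per label column, ignoring label correlations."""
    n_labels = len(Y[0])
    return [train_centroid_classifier(X, [row[j] for row in Y]) for j in range(n_labels)]


def binary_relevance_predict(models: List[BinaryModel], x: Vector) -> List[int]:
    """Predict each label independently and collect the results."""
    return [model(x) for model in models]


# Toy data: label 0 follows feature 0, label 1 follows feature 1.
X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
Y = [[0, 0], [0, 1], [1, 0], [1, 1]]

models = binary_relevance_fit(X, Y)
prediction = binary_relevance_predict(models, [0.9, 0.1])  # [1, 0]
```

Because each label gets its own model, nothing in this scheme can exploit the fact that some labels tend to co-occur, which is the limitation the abstract points to.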
