Yuanyuan Ma

On Secure Uplink Transmission in Hybrid RF-FSO Cooperative Satellite-Aerial-Terrestrial Networks

Oct 12, 2022

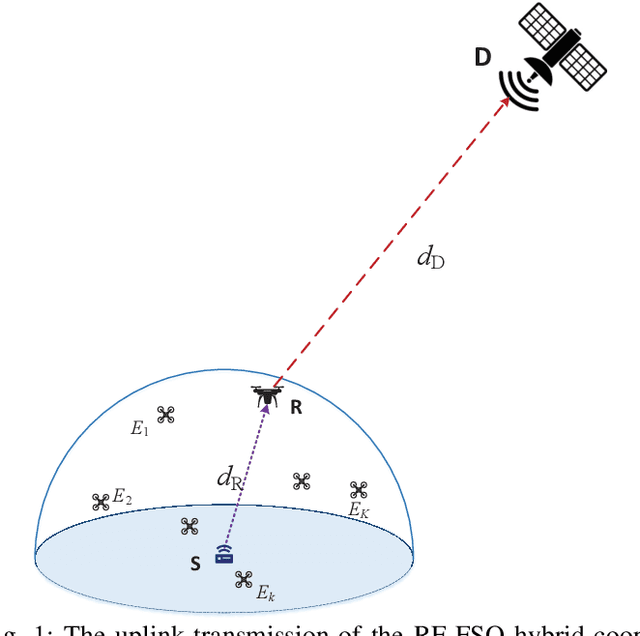

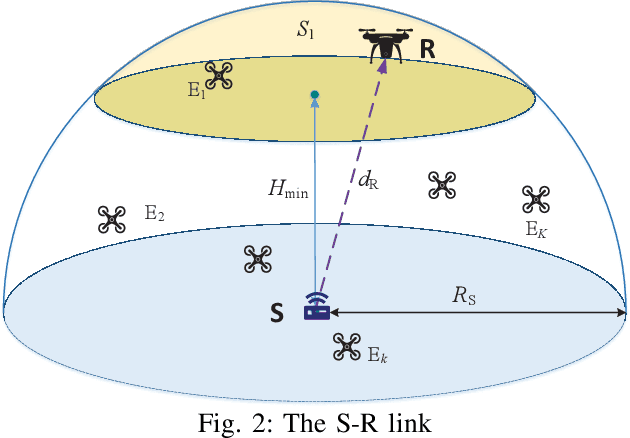

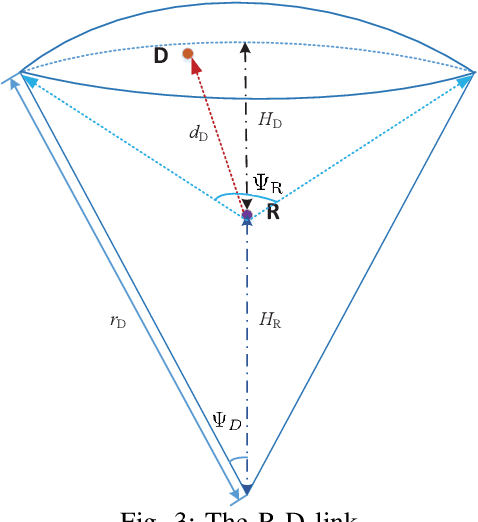

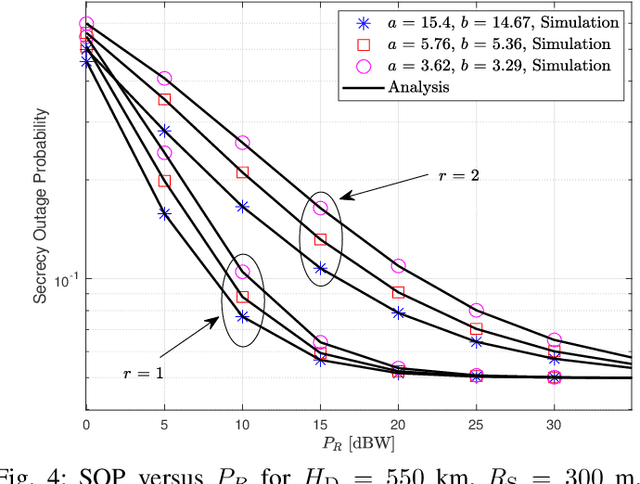

Abstract: This work investigates the secrecy outage performance of uplink transmission in a hybrid radio-frequency (RF)/free-space optical (FSO) cooperative satellite-aerial-terrestrial network (SATN). Specifically, in the considered cooperative SATN, a terrestrial source (S) transmits its information to a satellite receiver (D) with the help of a cache-enabled aerial relay (R) that uses the most-popular-content caching scheme, while a group of eavesdropping aerial terminals (Eves) attempts to overhear the confidential information. RF and FSO transmissions are employed over the S-R and R-D links, respectively. Accounting for the randomness of R, D, and the Eves and employing a stochastic geometry framework, the secrecy outage performance of the cooperative uplink is analyzed, and a closed-form expression for the end-to-end secrecy outage probability is derived. Finally, Monte-Carlo simulations verify the accuracy of the analysis.
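
The hybrid RF-FSO, stochastic-geometry derivation is not reproduced here. As a minimal sketch of the quantity being analyzed and of the "closed form vs. Monte-Carlo" check the abstract mentions, the code below assumes the simplest possible setting: one Rayleigh-faded link to D, one Rayleigh-faded link to a single eavesdropper, secrecy capacity C_s = max(log2(1 + γ_D) − log2(1 + γ_E), 0), and secrecy outage probability Pr(C_s < R_s). The function names and parameters are illustrative, not taken from the paper.

```python
import numpy as np


def sop_closed_form(snr_d, snr_e, rs):
    """Secrecy outage probability for one Rayleigh-faded link to D and one
    Rayleigh-faded link to a single eavesdropper (illustrative setting only).

    snr_d, snr_e: average received SNRs (linear scale) at D and at the eavesdropper.
    rs: target secrecy rate in bits/s/Hz.
    """
    t = 2.0 ** rs
    return 1.0 - snr_d / (snr_d + t * snr_e) * np.exp(-(t - 1.0) / snr_d)


def sop_monte_carlo(snr_d, snr_e, rs, n=1_000_000, seed=0):
    """Estimate P(C_s < rs) by sampling instantaneous SNRs."""
    rng = np.random.default_rng(seed)
    g_d = rng.exponential(snr_d, n)  # Rayleigh fading -> exponentially distributed SNR
    g_e = rng.exponential(snr_e, n)
    c_s = np.maximum(np.log2(1.0 + g_d) - np.log2(1.0 + g_e), 0.0)  # secrecy capacity
    return float(np.mean(c_s < rs))
```

For example, with an average SNR of 10 at D, 1 at the eavesdropper, and R_s = 1 bit/s/Hz, the closed form gives about 0.246, and the Monte-Carlo estimate agrees to within sampling error. A paper-specific check would replace the exponential SNR draws with the RF and FSO fading models and random node placements described above.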

Effect of Strong Time-Varying Transmission Distance on LEO Satellite-Terrestrial Deliveries

Jun 11, 2022

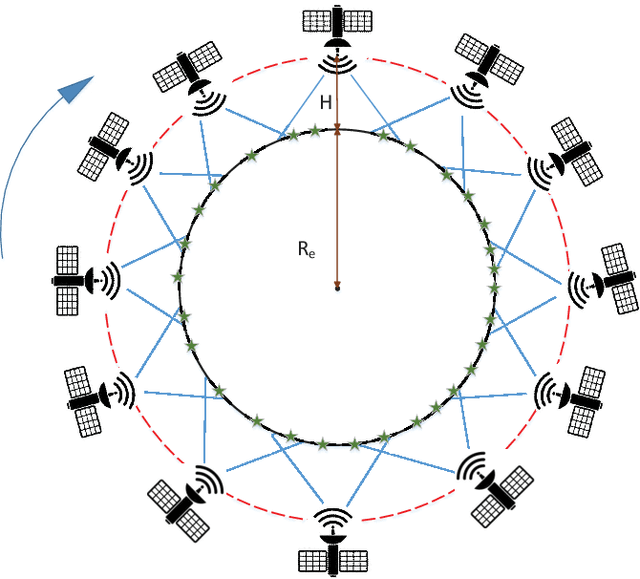

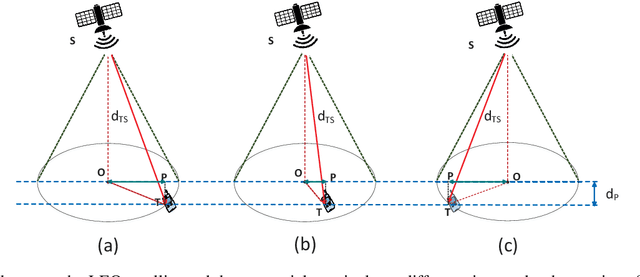

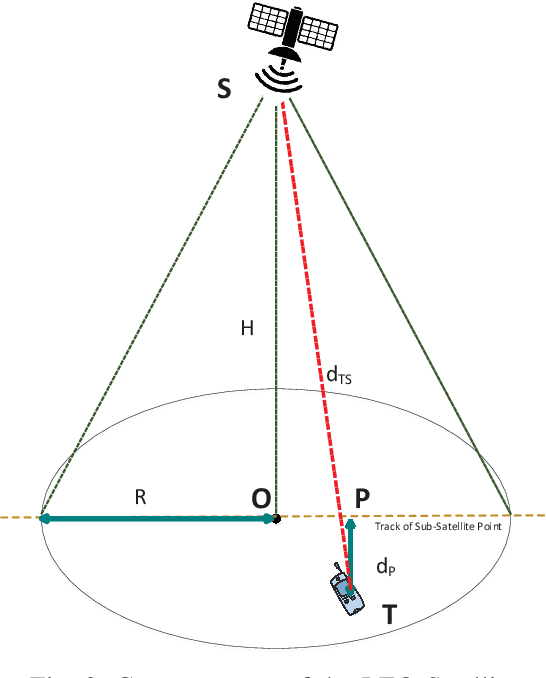

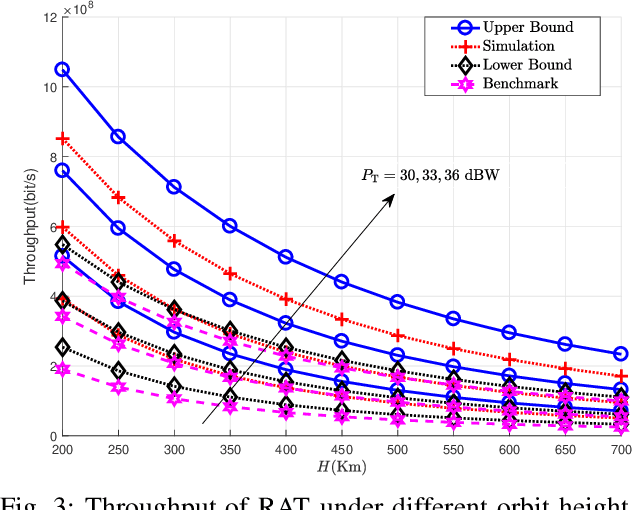

Abstract: In this paper, we investigate the effect of the strongly time-varying transmission distance on the performance of a low-earth orbit (LEO) satellite-terrestrial transmission (STT) system. We propose a new analytical framework based on a finite-state Markov channel (FSMC) model and a time-discretization method. To demonstrate applications of the framework, we evaluate two adaptive transmission schemes, rate-adaptive transmission (RAT) and power-adaptive transmission (PAT), for the cases in which the transmit power or the transmission rate at the LEO satellite, respectively, is fixed. Closed-form expressions for the throughput, energy efficiency (EE), and delay outage rate (DOR) of the considered systems are derived and verified; with the proposed framework, they characterize the capacity, energy-efficiency, and outage performance of the considered LEO STT scenarios.
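
To make "time discretization" and "FSMC" concrete, here is a minimal sketch under loudly stated assumptions that are not the paper's model: a circular orbit passing directly overhead, Earth rotation ignored, free-space path loss only, a hypothetical SNR at zenith (`snr_zenith_db`), and hypothetical SNR thresholds that define the Markov states. The slant range follows from the law of cosines on the Earth-centre triangle; the transition matrix is estimated by counting state changes between consecutive time slots. The Shannon rate per slot stands in for an ideal rate-adaptive scheme.

```python
import numpy as np

R_E = 6371e3          # mean Earth radius, m
MU = 3.986004418e14   # Earth's gravitational parameter, m^3/s^2


def slant_range(h, psi):
    """Ground-to-satellite distance for altitude h and Earth-central angle psi (rad)."""
    r = R_E + h
    return np.sqrt(R_E**2 + r**2 - 2.0 * R_E * r * np.cos(psi))


def pass_snr_trace(h, slot_s, snr_zenith_db):
    """Discretize one overhead pass into slots of slot_s seconds (assumed geometry)."""
    r = R_E + h
    omega = np.sqrt(MU / r**3)         # angular rate of a circular orbit, rad/s
    psi_max = np.arccos(R_E / r)       # central angle at 0 degrees elevation
    t = np.arange(-psi_max / omega, psi_max / omega, slot_s)
    d = slant_range(h, omega * t)
    snr_db = snr_zenith_db - 20.0 * np.log10(d / h)  # free-space loss relative to zenith
    return t, d, snr_db


def fsmc_transition_matrix(snr_db, thresholds_db):
    """Map each slot to an SNR state and estimate state-transition probabilities."""
    states = np.digitize(snr_db, thresholds_db)  # state index 0..len(thresholds_db)
    k = len(thresholds_db) + 1
    counts = np.zeros((k, k))
    for a, b in zip(states[:-1], states[1:]):
        counts[a, b] += 1
    rows = counts.sum(axis=1, keepdims=True)
    # Unvisited states keep an all-zero row instead of dividing by zero.
    P = np.divide(counts, rows, out=np.zeros_like(counts), where=rows > 0)
    return states, P


def rat_mean_rate(snr_db):
    """Average Shannon rate (bits/s/Hz) if the rate tracks the SNR slot by slot."""
    return float(np.mean(np.log2(1.0 + 10.0 ** (snr_db / 10.0))))
```

For a 550 km orbit, this geometry gives a horizon-to-horizon pass of roughly 12 minutes, over which the free-space loss varies by about 14 dB. This distance-driven variation is the effect the framework above is built to capture.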

Multi-view Subspace Clustering Networks with Local and Global Graph Information

Oct 24, 2020

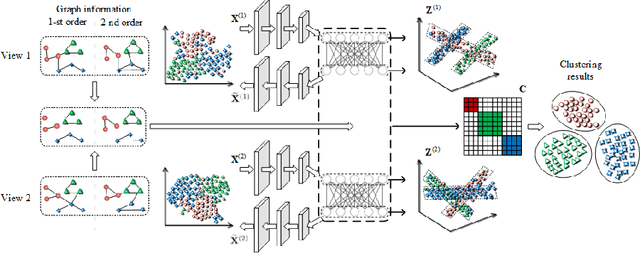

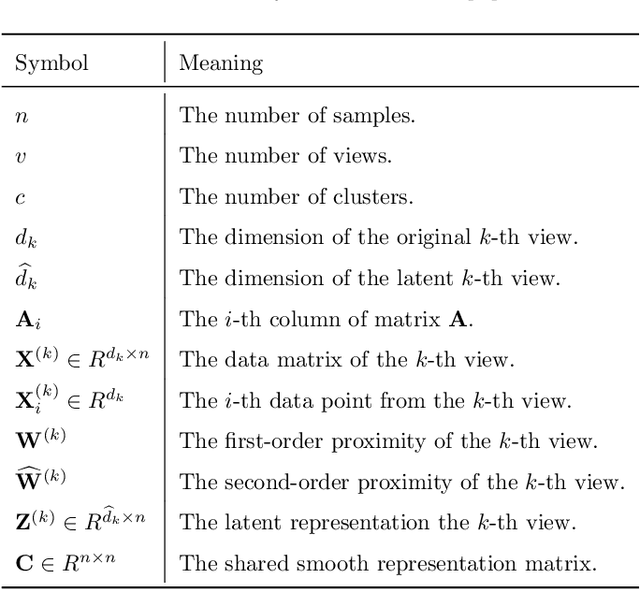

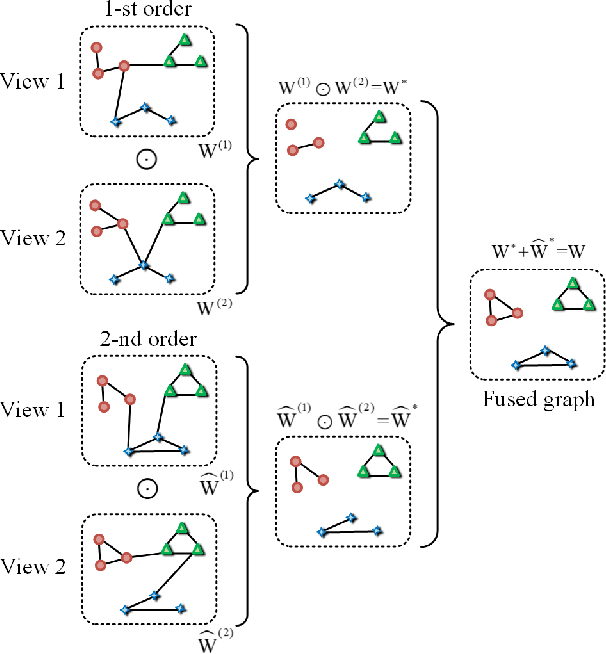

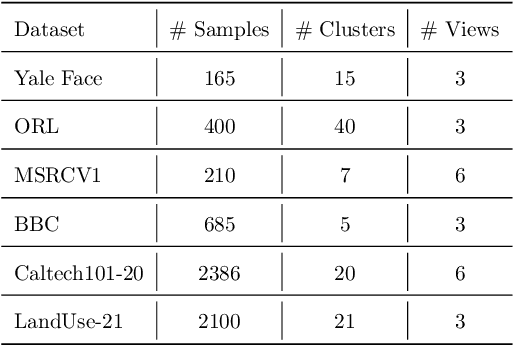

Abstract: This study investigates multi-view subspace clustering, whose goal is to uncover the underlying grouping structure of data collected from different fields or measurements. Because data in many real-world applications do not always conform to linear subspace models, most existing multi-view subspace clustering methods, which are based on shallow linear subspace models, may fail in practice. Furthermore, most existing multi-view subspace clustering methods ignore the underlying graph information of multi-view data. To address these limitations, this paper proposes multi-view subspace clustering networks with local and global graph information, termed MSCNLG. Specifically, autoencoder networks are applied to the multiple views to obtain smooth latent representations that suit the linear-subspace assumption. Simultaneously, by integrating fused multi-view graph information into self-expressive layers, MSCNLG learns a common subspace representation shared across views, from which clustering results are obtained with the standard spectral clustering algorithm. As an end-to-end trainable framework, the proposed method fully exploits the information in multiple views. Comprehensive experiments on six benchmark datasets validate the effectiveness and superiority of MSCNLG.
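
The pipeline described above (shared self-expressive coefficients, then an affinity matrix, then spectral clustering) can be illustrated with a shallow stand-in. This is a minimal sketch, not MSCNLG: it swaps the autoencoders and fused graph information for a closed-form least-squares self-expression with a ridge penalty, shared across views, minimizing Σ_v ‖X_v − X_v C‖²_F + λ‖C‖²_F, which gives C = (G + λI)⁻¹G with G = Σ_v X_vᵀX_v. It also omits the usual diag(C) = 0 constraint. Columns of each X_v are samples. The function names and `lam` are hypothetical.

```python
import numpy as np


def shared_self_expression(views, lam=0.1):
    """Closed-form C minimizing sum_v ||X_v - X_v C||_F^2 + lam * ||C||_F^2."""
    n = views[0].shape[1]
    G = sum(X.T @ X for X in views)  # summed Gram matrices, n x n
    return np.linalg.solve(G + lam * np.eye(n), G)


def spectral_clustering(C, k, iters=50):
    """Normalized spectral clustering (Ng-Jordan-Weiss style) on |C| + |C^T|."""
    W = 0.5 * (np.abs(C) + np.abs(C.T))  # symmetric, non-negative affinity
    d = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(d)
    L = np.eye(len(d)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    _, vecs = np.linalg.eigh(L)  # eigenvalues in ascending order
    U = vecs[:, :k]
    U = U / np.linalg.norm(U, axis=1, keepdims=True)  # row normalization
    # k-means with farthest-point initialization
    centers = [U[0]]
    for _ in range(1, k):
        dist = np.min([np.sum((U - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(U[np.argmax(dist)])
    centers = np.array(centers)
    for _ in range(iters):
        sq = ((U[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = np.argmin(sq, axis=1)
        new = np.array([U[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels
```

On toy data drawn from two orthogonal one-dimensional subspaces, observed through a different random orthogonal map in each view, C comes out block-diagonal and the two groups are recovered exactly. Real data that violate the linear-subspace assumption are the case the abstract targets with learned latent representations.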

Predictive Precompute with Recurrent Neural Networks

Dec 14, 2019

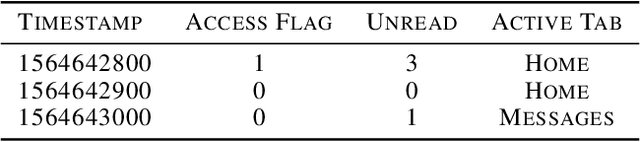

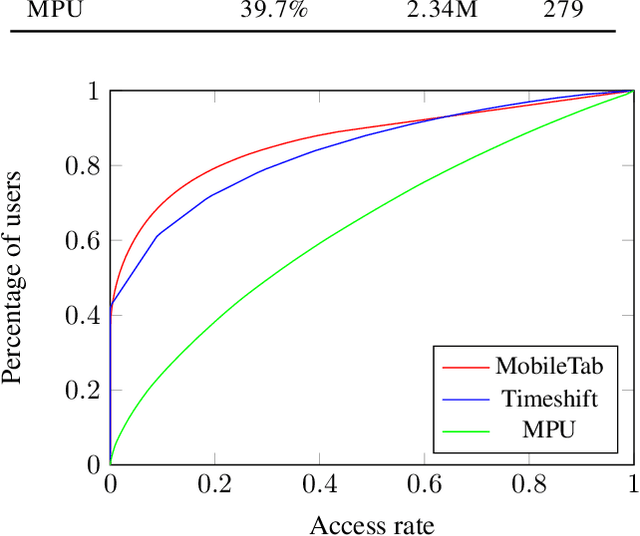

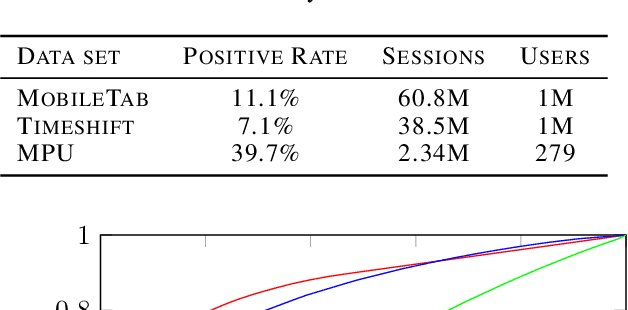

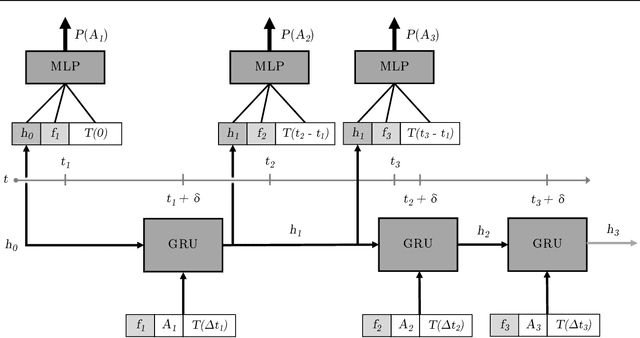

Abstract: In both mobile and web applications, speeding up user-interface response times can often significantly improve user engagement. A common technique for improving responsiveness is to precompute data ahead of time for specific features. However, simply precomputing data for every user and feature combination is prohibitive at scale because of both network constraints and server-side computational costs. It is therefore important to accurately predict per-user feature usage in order to minimize wasted precomputation ("predictive precompute"). In this paper, we describe a novel application of recurrent neural networks (RNNs) to predictive precompute. We compare their performance with traditional machine learning models and share findings from their use in a billion-user-scale production environment at Facebook. We demonstrate that RNN models improve prediction accuracy, eliminate most feature-engineering steps, and reduce the computational cost of serving predictions by an order of magnitude.
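
The abstract does not specify the network architecture, input features, or decision threshold. The following is a minimal sketch under those gaps: a hypothetical, untrained Elman RNN (weights would normally be learned) reads a per-step feature vector for one user and outputs a usage probability. A threshold then decides whether to precompute. Two simple metrics expose the trade-off the abstract describes: the hit rate, meaning the share of actual uses served by a precompute, and the waste, meaning the share of precomputes never used. All names here are illustrative.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def rnn_usage_probs(x_seq, params):
    """Elman RNN over one user's event sequence (hypothetical architecture).

    x_seq: array of shape (T, input_dim), one feature vector per time step.
    Returns shape (T,): predicted probability that the feature is used next.
    """
    Wx, Wh, bh, wo, bo = params
    h = np.zeros(Wh.shape[0])
    probs = []
    for x in x_seq:
        h = np.tanh(Wx @ x + Wh @ h + bh)   # recurrent state carries usage history
        probs.append(sigmoid(wo @ h + bo))  # probability of upcoming usage
    return np.array(probs)


def precompute_decisions(probs, threshold):
    """Precompute only when the predicted usage probability is high enough."""
    return np.asarray(probs) >= threshold


def precompute_metrics(decisions, used):
    """Return (hit_rate, waste) for boolean decision and usage arrays."""
    decisions = np.asarray(decisions, dtype=bool)
    used = np.asarray(used, dtype=bool)
    hits = np.sum(decisions & used)
    hit_rate = hits / max(used.sum(), 1)
    waste = np.sum(decisions & ~used) / max(decisions.sum(), 1)
    return hit_rate, waste
```

Raising the threshold generally lowers waste at the cost of a lower hit rate. A more accurate predictor improves both at once, which is the motivation for the RNN models described above.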
