Tim Adler

Computer Assisted Medical Interventions, Faculty of Mathematics and Computer Science, Heidelberg University, Germany

Beyond Knowledge Silos: Task Fingerprinting for Democratization of Medical Imaging AI

Dec 11, 2024

Abstract:The field of medical imaging AI is currently undergoing rapid transformations, with methodical research increasingly translated into clinical practice. Despite these successes, research suffers from knowledge silos, hindering collaboration and progress: Existing knowledge is scattered across publications and many details remain unpublished, while privacy regulations restrict data sharing. In the spirit of democratizing AI, we propose a framework for secure knowledge transfer in the field of medical image analysis. The key to our approach is dataset "fingerprints", structured representations of feature distributions that enable quantification of task similarity. We tested our approach across 71 distinct tasks and 12 medical imaging modalities by transferring neural architectures, pretraining, augmentation policies, and multi-task learning. According to comprehensive analyses, our method outperforms traditional methods for identifying relevant knowledge and facilitates collaborative model training. Our framework fosters the democratization of AI in medical imaging and could become a valuable tool for promoting faster scientific advancement.
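
The abstract does not say how a fingerprint is built or compared. Purely as an illustration of the idea of comparing feature distributions, the sketch below assumes a fingerprint is a normalized histogram of scalar feature values and that similarity is measured with the Jensen-Shannon divergence. Both choices, and every name in the code, are hypothetical and are not taken from the paper.

```python
import math


def histogram_fingerprint(features, bins=4, lo=0.0, hi=1.0):
    """Hypothetical fingerprint: normalized histogram of scalar feature values."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for x in features:
        # Clamp the index so values at or beyond the range edges land in an end bin.
        idx = int((x - lo) / width)
        idx = max(0, min(idx, bins - 1))
        counts[idx] += 1
    total = sum(counts)
    return [c / total for c in counts]


def kl_divergence(p, q):
    """Kullback-Leibler divergence in bits; terms with p_i == 0 contribute 0."""
    return sum(a * math.log2(a / b) for a, b in zip(p, q) if a > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence in bits: 0 for identical distributions, at most 1."""
    m = [(a + b) / 2 for a, b in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Made-up feature values for three tasks (assumption: features are scaled to [0, 1]).
task_a = histogram_fingerprint([0.10, 0.20, 0.15, 0.80])
task_b = histogram_fingerprint([0.12, 0.22, 0.18, 0.85])
task_c = histogram_fingerprint([0.90, 0.95, 0.70, 0.99])

print(js_divergence(task_a, task_b))  # small: similar feature distributions
print(js_divergence(task_a, task_c))  # larger: dissimilar feature distributions
```

Only the histogram, not the underlying data, is needed for the comparison, which is the property that makes such a summary attractive when data cannot be shared.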

Self-distillation for surgical action recognition

Mar 22, 2023

Abstract:Surgical scene understanding is a key prerequisite for context-aware decision support in the operating room. While deep learning-based approaches have already reached or even surpassed human performance in various fields, the task of surgical action recognition remains a major challenge. With this contribution, we are the first to investigate the concept of self-distillation as a means of addressing class imbalance and potential label ambiguity in surgical video analysis. Our proposed method is a heterogeneous ensemble of three models that use Swin Transformers as the backbone and the concepts of self-distillation and multi-task learning as core design choices. According to ablation studies performed with the CholecT45 challenge data via cross-validation, the biggest performance boost is achieved by the use of soft labels obtained by self-distillation. External validation of our method on an independent test set was achieved by providing a Docker container of our inference model to the challenge organizers. According to their analysis, our method outperforms all other solutions submitted to the latest challenge in the field. Our approach thus shows the potential of self-distillation for becoming an important tool in medical image analysis applications.
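
The abstract credits soft labels obtained by self-distillation but does not give the exact scheme. One common way to form such labels, shown here as a minimal sketch, is to blend the one-hot annotation with the class probabilities predicted by an earlier model of the same architecture. The mixing weight `alpha` and all numbers below are hypothetical.

```python
import math


def soft_labels(hard_label, teacher_probs, alpha=0.5):
    """Blend a one-hot label with teacher probabilities.

    alpha is a hypothetical mixing weight, not a value from the paper.
    """
    return [
        alpha * (1.0 if i == hard_label else 0.0) + (1.0 - alpha) * p
        for i, p in enumerate(teacher_probs)
    ]


def cross_entropy(target, student_probs, eps=1e-12):
    """Cross-entropy between a (soft) target and the student's probabilities."""
    return -sum(t * math.log(max(s, eps)) for t, s in zip(target, student_probs))


teacher = [0.6, 0.3, 0.1]          # made-up teacher prediction for one sample
target = soft_labels(0, teacher)   # annotated class is index 0
loss = cross_entropy(target, [0.7, 0.2, 0.1])
print(target, loss)
```

Because the blended target still sums to one, it can replace the one-hot label in a standard cross-entropy loss while keeping some probability mass on classes the teacher considers plausible.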

Sources of performance variability in deep learning-based polyp detection

Nov 17, 2022

Abstract:Validation metrics are a key prerequisite for the reliable tracking of scientific progress and for deciding on the potential clinical translation of methods. While recent initiatives aim to develop comprehensive theoretical frameworks for understanding metric-related pitfalls in image analysis problems, there is a lack of experimental evidence on the concrete effects of common and rare pitfalls on specific applications. We address this gap in the literature in the context of colon cancer screening. Our contribution is twofold. Firstly, we present the winning solution of the Endoscopy computer vision challenge (EndoCV) on colon cancer detection, conducted in conjunction with the IEEE International Symposium on Biomedical Imaging (ISBI) 2022. Secondly, we demonstrate the sensitivity of commonly used metrics to a range of hyperparameters as well as the consequences of poor metric choices. Based on comprehensive validation studies performed with patient data from six clinical centers, we found all commonly applied object detection metrics to be subject to high inter-center variability. Furthermore, our results clearly demonstrate that the adaptation of standard hyperparameters used in the computer vision community does not generally lead to the clinically most plausible results. Finally, we present localization criteria that correspond well to clinical relevance. Our work could be a first step towards reconsidering common validation strategies in automatic colon cancer screening applications.
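
The abstract does not list the hyperparameters that were studied. As one illustration of how a metric hyperparameter can flip a result, the sketch below assumes the hyperparameter is the intersection-over-union (IoU) threshold used to decide whether a predicted box counts as a hit. The boxes and thresholds are made up.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred, ref, threshold):
    """A prediction counts as a hit if its IoU with the reference meets the threshold."""
    return iou(pred, ref) >= threshold


reference = (0, 0, 10, 10)    # made-up reference polyp box
prediction = (2, 2, 12, 12)   # made-up predicted box, shifted by 2 pixels

for threshold in (0.3, 0.5):
    print(threshold, is_true_positive(prediction, reference, threshold))
```

The same prediction is a true positive at a threshold of 0.3 and a false positive at 0.5, so any metric built on these counts inherits the choice of threshold.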

Robust deep learning-based semantic organ segmentation in hyperspectral images

Nov 09, 2021

Abstract:Semantic image segmentation is an important prerequisite for context-awareness and autonomous robotics in surgery. The state of the art has focused on conventional RGB video data acquired during minimally invasive surgery, but full-scene semantic segmentation based on spectral imaging data and obtained during open surgery has received almost no attention to date. To address this gap in the literature, we are investigating the following research questions based on hyperspectral imaging (HSI) data of pigs acquired in an open surgery setting: (1) What is an adequate representation of HSI data for neural network-based fully automated organ segmentation, especially with respect to the spatial granularity of the data (pixels vs. superpixels vs. patches vs. full images)? (2) Is there a benefit of using HSI data compared to other modalities, namely RGB data and processed HSI data (e.g. tissue parameters like oxygenation), when performing semantic organ segmentation? According to a comprehensive validation study based on 506 HSI images from 20 pigs, annotated with a total of 19 classes, deep learning-based segmentation performance increases - consistently across modalities - with the spatial context of the input data. Unprocessed HSI data offers an advantage over RGB data or processed data from the camera provider, with the advantage increasing with decreasing size of the input to the neural network. Maximum performance (HSI applied to whole images) yielded a mean Dice similarity coefficient (DSC) of 0.89 (standard deviation (SD) 0.04), which is in the range of the inter-rater variability (DSC of 0.89 (SD 0.07)). We conclude that HSI could become a powerful image modality for fully automatic surgical scene understanding with many advantages over traditional imaging, including the ability to recover additional functional tissue information.
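
For readers unfamiliar with the reported metric, the Dice similarity coefficient of two binary masks A and B is 2|A ∩ B| / (|A| + |B|). The sketch below computes it for one class on flat 0/1 lists. The masks are made up, and returning 1.0 when both masks are empty is a convention assumed here, not something stated in the abstract.

```python
def dice(pred, ref):
    """Dice similarity coefficient for two binary masks given as flat 0/1 lists."""
    intersection = sum(p * r for p, r in zip(pred, ref))
    size = sum(pred) + sum(ref)
    # Assumed convention: two empty masks agree perfectly.
    return 2 * intersection / size if size else 1.0


predicted_mask = [1, 1, 0, 0, 1, 0]   # made-up prediction for one organ class
reference_mask = [1, 0, 0, 0, 1, 1]   # made-up annotation for the same class

print(dice(predicted_mask, reference_mask))
```

A multi-class score such as the one reported would then typically be an average of per-class values, although the abstract does not describe the exact aggregation.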

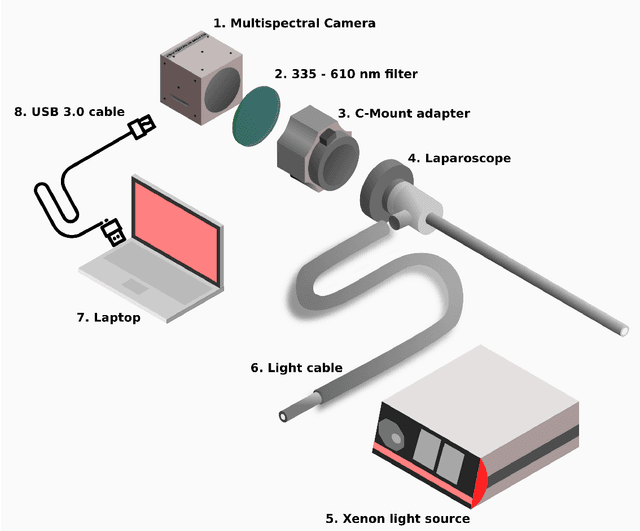

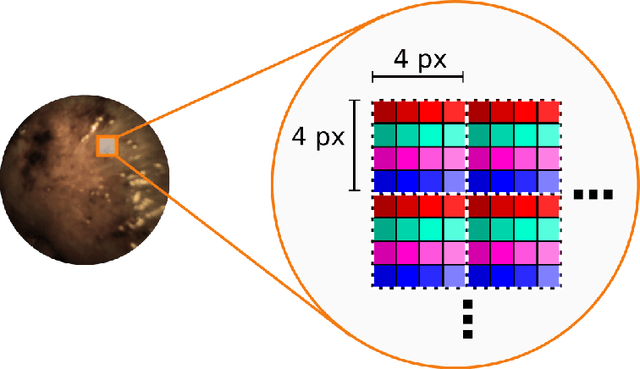

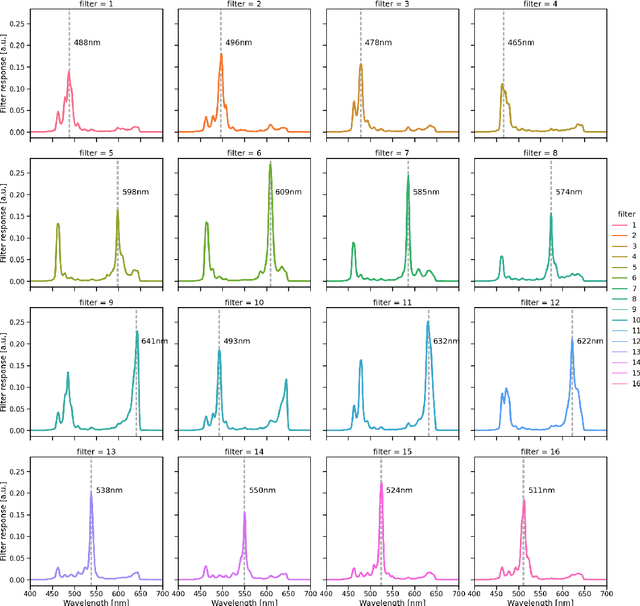

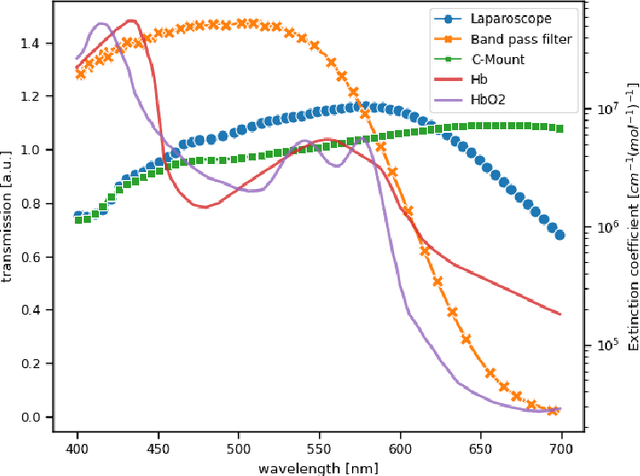

Video-rate multispectral imaging in laparoscopic surgery: First-in-human application

May 28, 2021

Abstract:Multispectral and hyperspectral imaging (MSI/HSI) can provide clinically relevant information on morphological and functional tissue properties. Application in the operating room (OR), however, has so far been limited by complex hardware setups and slow acquisition times. To overcome these limitations, we propose a novel imaging system for video-rate spectral imaging in the clinical workflow. The system integrates a small snapshot multispectral camera with a standard laparoscope and a light source commonly used in clinical practice, enabling the recording of multispectral images with a spectral dimension of 16 at a frame rate of 25 Hz. An ongoing in-patient study shows that multispectral recordings from this system can help detect perfusion changes in partial nephrectomy surgery, thus opening the door to a wide range of clinical applications.

Representing Ambiguity in Registration Problems with Conditional Invertible Neural Networks

Dec 15, 2020

Abstract:Image registration is the basis for many applications in the fields of medical image computing and computer assisted interventions. One example is the registration of 2D X-ray images with preoperative three-dimensional computed tomography (CT) images in intraoperative surgical guidance systems. Due to the high safety requirements in medical applications, estimating registration uncertainty is of crucial importance in such a scenario. However, previously proposed methods, including classical iterative registration methods and deep learning-based methods, have one characteristic in common: They lack the capacity to represent the fact that a registration problem may be inherently ambiguous, meaning that multiple (substantially different) plausible solutions exist. To tackle this limitation, we explore the application of invertible neural networks (INNs) as the core component of a registration methodology. In the proposed framework, INNs enable going beyond point estimates as network output by representing the possible solutions to a registration problem by a probability distribution that encodes different plausible solutions via multiple modes. In a first feasibility study, we test the approach for a 2D/3D registration setting by registering spinal CT volumes to X-ray images. To this end, we simulate the X-ray images taken by a C-Arm with multiple orientations using the principle of digitally reconstructed radiographs (DRRs). Due to the symmetry of the human spine, there are potentially multiple substantially different poses of the C-Arm that can lead to similar projections. The hypothesis of this work is that the proposed approach is able to identify multiple solutions in such ambiguous registration problems.

Invertible Neural Networks for Uncertainty Quantification in Photoacoustic Imaging

Nov 10, 2020

Abstract:Multispectral photoacoustic imaging (PAI) is an emerging imaging modality which enables the recovery of functional tissue parameters such as blood oxygenation. However, the underlying inverse problems are potentially ill-posed, meaning that radically different tissue properties may - in theory - yield comparable measurements. In this work, we present a new approach for handling this specific type of uncertainty by leveraging the concept of conditional invertible neural networks (cINNs). Specifically, we propose going beyond commonly used point estimates for tissue oxygenation and converting single-pixel initial pressure spectra to the full posterior probability density. This way, the inherent ambiguity of a problem can be encoded with multiple modes in the output. Based on the presented architecture, we demonstrate two use cases which leverage this information to not only detect and quantify but also to compensate for uncertainties: (1) photoacoustic device design and (2) optimization of photoacoustic image acquisition. Our in silico studies demonstrate the potential of the proposed methodology to become an important building block for uncertainty-aware reconstruction of physiological parameters with PAI.

Estimation of blood oxygenation with learned spectral decoloring for quantitative photoacoustic imaging (LSD-qPAI)

Feb 15, 2019

Abstract:One of the main applications of photoacoustic (PA) imaging is the recovery of functional tissue properties, such as blood oxygenation (sO2). This is typically achieved by linear spectral unmixing of relevant chromophores from multispectral photoacoustic images. Despite the progress that has been made towards quantitative PA imaging (qPAI), most sO2 estimation methods yield poor results in realistic settings. In this work, we tackle the challenge by employing learned spectral decoloring for quantitative photoacoustic imaging (LSD-qPAI) to obtain quantitative estimates for blood oxygenation. LSD-qPAI computes sO2 directly from pixel-wise initial pressure spectra Sp0, which are vectors comprised of the initial pressure at the same spatial location over all recorded wavelengths. Initial results suggest that LSD-qPAI is able to obtain accurate sO2 estimates directly from multispectral photoacoustic measurements in silico and plausible estimates in vivo.
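
For context, the linear spectral unmixing baseline mentioned in the abstract can be sketched for the simplest case of two wavelengths and two chromophores, with sO2 defined as the oxyhemoglobin fraction of total hemoglobin. This is not LSD-qPAI itself, and the absorption coefficients below are made-up placeholder numbers, not physical values.

```python
def unmix_two_wavelengths(signal, eps_hbo2, eps_hb):
    """Solve signal[i] = eps_hbo2[i] * c_hbo2 + eps_hb[i] * c_hb for i = 0, 1.

    Uses Cramer's rule on the 2x2 system; all inputs are length-2 sequences.
    """
    det = eps_hbo2[0] * eps_hb[1] - eps_hbo2[1] * eps_hb[0]
    if det == 0:
        raise ValueError("absorption spectra are linearly dependent")
    c_hbo2 = (signal[0] * eps_hb[1] - signal[1] * eps_hb[0]) / det
    c_hb = (eps_hbo2[0] * signal[1] - eps_hbo2[1] * signal[0]) / det
    return c_hbo2, c_hb


def oxygenation(c_hbo2, c_hb):
    """sO2 as the fraction of oxygenated hemoglobin."""
    return c_hbo2 / (c_hbo2 + c_hb)


# Hypothetical absorption coefficients at two wavelengths (placeholders only).
eps_hbo2 = (1.0, 2.0)
eps_hb = (3.0, 1.0)

# Synthetic spectrum generated from c_hbo2 = 0.75 and c_hb = 0.25.
signal = (1.0 * 0.75 + 3.0 * 0.25, 2.0 * 0.75 + 1.0 * 0.25)

c_hbo2, c_hb = unmix_two_wavelengths(signal, eps_hbo2, eps_hb)
print(oxygenation(c_hbo2, c_hb))
```

The unmixing recovers the true value here only because the synthetic signal is exactly linear in the concentrations. The abstract's point is that this assumption breaks down in realistic settings, which motivates learning the mapping from spectra to sO2 instead.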
