Tianpeng Liu

A Reverse Causal Framework to Mitigate Spurious Correlations for Debiasing Scene Graph Generation

May 29, 2025

Abstract:Existing two-stage Scene Graph Generation (SGG) frameworks typically incorporate a detector to extract relationship features and a classifier to categorize these relationships; therefore, the training paradigm follows a causal chain structure, where the detector's inputs determine the classifier's inputs, which in turn influence the final predictions. However, such a causal chain structure can yield spurious correlations between the detector's inputs and the final predictions, i.e., the prediction of a certain relationship may be influenced by other relationships. This influence can induce at least two observable biases: tail relationships are predicted as head ones, and foreground relationships are predicted as background ones; notably, the latter bias is seldom discussed in the literature. To address this issue, we propose reconstructing the causal chain structure into a reverse causal structure, wherein the classifier's inputs are treated as the confounder, and both the detector's inputs and the final predictions are viewed as causal variables. Specifically, we term the reconstructed causal paradigm as the Reverse causal Framework for SGG (RcSGG). RcSGG initially employs the proposed Active Reverse Estimation (ARE) to intervene on the confounder to estimate the reverse causality, i.e., the causality from final predictions to the classifier's inputs. Then, the Maximum Information Sampling (MIS) is suggested to enhance the reverse causality estimation further by considering the relationship information. Theoretically, RcSGG can mitigate the spurious correlations inherent in the SGG framework, subsequently eliminating the induced biases. Comprehensive experiments on popular benchmarks and diverse SGG frameworks show the state-of-the-art mean recall rate.
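The mean recall rate reported above averages recall over predicate classes, so tail relationships weigh as much as head ones. A minimal sketch of that averaging, assuming hypothetical per-predicate counts (the function name and toy numbers are illustrative and not taken from the paper):

```python
from typing import Dict


def mean_recall(hits: Dict[str, int], totals: Dict[str, int]) -> float:
    """Average per-predicate recall over classes that have ground-truth instances."""
    recalls = [hits.get(pred, 0) / n for pred, n in totals.items() if n > 0]
    return sum(recalls) / len(recalls) if recalls else 0.0


# Hypothetical toy counts: "on" stands in for a head predicate, "parked on" for a tail one.
totals = {"on": 100, "parked on": 4}
hits = {"on": 90, "parked on": 1}

# Plain recall pools all instances, so the head class dominates it.
overall_recall = sum(hits.values()) / sum(totals.values())
print(round(overall_recall, 3), round(mean_recall(hits, totals), 3))
```

In this toy case the pooled recall stays high while the class-averaged value drops, which is why mean recall is the usual yardstick for head-versus-tail bias.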

SAR-GTR: Attributed Scattering Information Guided SAR Graph Transformer Recognition Algorithm

May 13, 2025

Abstract:Utilizing electromagnetic scattering information for SAR data interpretation is currently a prominent research focus in the SAR interpretation domain. Graph Neural Networks (GNNs) can effectively integrate domain-specific physical knowledge and human prior knowledge, thereby alleviating challenges such as limited sample availability and poor generalization in SAR interpretation. In this study, we thoroughly investigate the electromagnetic inverse scattering information of single-channel SAR and re-examine the limitations of applying GNNs to SAR interpretation. We propose the SAR Graph Transformer Recognition Algorithm (SAR-GTR). SAR-GTR carefully considers the attributes and characteristics of different electromagnetic scattering parameters by distinguishing the mapping methods for discrete and continuous parameters, thereby avoiding information confusion and loss. Furthermore, the GTR combines GNNs with the Transformer mechanism and introduces an edge information enhancement channel to facilitate interactive learning of node and edge features, enabling the capture of robust and global structural characteristics of targets. Additionally, the GTR constructs a hierarchical topology-aware system through global node encoding and edge position encoding, fully exploiting the hierarchical structural information of targets. Finally, the effectiveness of the algorithm is validated using the ATRNet-STAR large-scale vehicle dataset.

Step-wise Distribution Alignment Guided Style Prompt Tuning for Source-free Cross-domain Few-shot Learning

Nov 15, 2024

Abstract:Existing cross-domain few-shot learning (CDFSL) methods, which develop source-domain training strategies to enhance model transferability, face challenges with large-scale pre-trained models (LMs) due to inaccessible source data and training strategies. Moreover, fine-tuning LMs for CDFSL demands substantial computational resources, limiting practicality. This paper addresses the source-free CDFSL (SF-CDFSL) problem, tackling few-shot learning (FSL) in the target domain using only pre-trained models and a few target samples without source data or strategies. To overcome the challenge of inaccessible source data, this paper introduces Step-wise Distribution Alignment Guided Style Prompt Tuning (StepSPT), which implicitly narrows domain gaps through prediction distribution optimization. StepSPT proposes a style prompt to align target samples with the desired distribution and adopts a dual-phase optimization process. In the external process, a step-wise distribution alignment strategy factorizes prediction distribution optimization into a multi-step alignment problem to tune the style prompt. In the internal process, the classifier is updated using standard cross-entropy loss. Evaluations on five datasets demonstrate that StepSPT outperforms existing prompt tuning-based methods and SOTAs. Ablation studies further verify its effectiveness. Code will be made publicly available at https://github.com/xuhuali-mxj/StepSPT.

MaDiNet: Mamba Diffusion Network for SAR Target Detection

Nov 12, 2024

Abstract:The fundamental challenge in SAR target detection lies in developing discriminative, efficient, and robust representations of target characteristics within intricate non-cooperative environments. However, accurate target detection is impeded by factors including the sparse distribution and discrete features of the targets, as well as complex background interference. In this study, we propose a Mamba Diffusion Network (MaDiNet) for SAR target detection. Specifically, MaDiNet conceptualizes SAR target detection as the task of generating the position (center coordinates) and size (width and height) of the bounding boxes in the image space. Furthermore, we design a MambaSAR module to capture intricate spatial structural information of targets and enhance the capability of the model to differentiate between targets and complex backgrounds. Experiments on extensive SAR target detection datasets achieve SOTA results, demonstrating the effectiveness of the proposed network. Code is available at https://github.com/JoyeZLearning/MaDiNet.
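The box parameterization named above (center coordinates plus width and height) maps to the corner form used by most evaluation code through simple arithmetic. A minimal sketch of the two conversions, with hypothetical helper names that are not taken from the MaDiNet code base:

```python
from typing import Tuple

Box = Tuple[float, float, float, float]


def cxcywh_to_xyxy(cx: float, cy: float, w: float, h: float) -> Box:
    """Convert (center x, center y, width, height) to (x_min, y_min, x_max, y_max)."""
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def xyxy_to_cxcywh(x1: float, y1: float, x2: float, y2: float) -> Box:
    """Convert (x_min, y_min, x_max, y_max) back to (center x, center y, width, height)."""
    return ((x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1)


# Illustrative box: centered at (50, 40), 20 pixels wide and 10 pixels tall.
corners = cxcywh_to_xyxy(50, 40, 20, 10)
print(corners)
print(xyxy_to_cxcywh(*corners))
```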

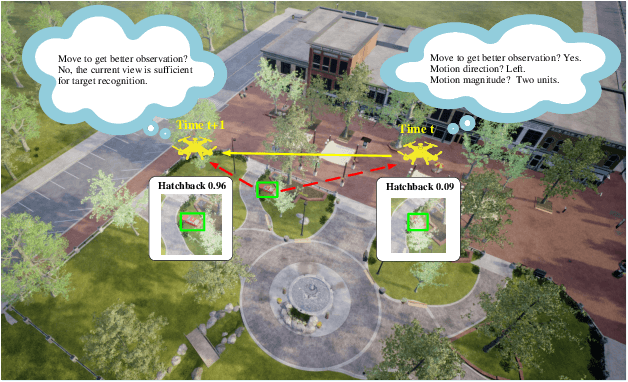

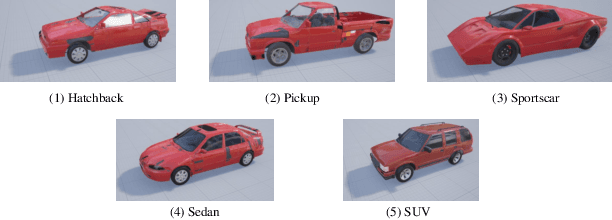

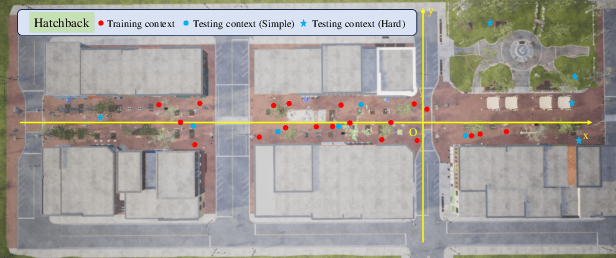

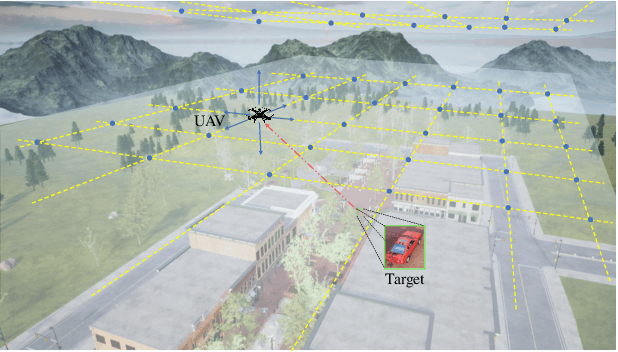

UEVAVD: A Dataset for Developing UAV's Eye View Active Object Detection

Nov 07, 2024

Abstract:Occlusion is a longstanding difficulty in UAV-based object detection. Many works address this problem by adapting the detection model. However, few of them exploit the fact that the UAV could fundamentally improve detection performance by changing its viewpoint. Active Object Detection (AOD) offers an effective way to achieve this purpose. Through Deep Reinforcement Learning (DRL), AOD endows the UAV with the ability to plan its path autonomously and search for observations that are more conducive to target identification. Unfortunately, no dataset is available for developing UAV AOD methods. To fill this gap, we release a UAV's eye view active vision dataset named UEVAVD and hope it can facilitate research on the UAV AOD problem. Additionally, we improve the existing DRL-based AOD method by incorporating inductive bias when learning the state representation. First, due to the partial observability, we use a gated recurrent unit to extract state representations from the observation sequence instead of the single-view observation. Second, we pre-decompose the scene with the Segment Anything Model (SAM) and filter out irrelevant information with the derived masks. With these practices, the agent can learn an active viewing policy with better generalization capability. The effectiveness of our innovations is validated by experiments on the UEVAVD dataset. Our dataset will soon be available at https://github.com/Leo000ooo/UEVAVD_dataset.

Enhancing Transferability of Targeted Adversarial Examples: A Self-Universal Perspective

Jul 22, 2024

Abstract:Transfer-based targeted adversarial attacks against black-box deep neural networks (DNNs) have been proven to be significantly more challenging than untargeted ones. The impressive transferability of current SOTA, the generative methods, comes at the cost of requiring massive amounts of additional data and time-consuming training for each targeted label. This results in limited efficiency and flexibility, significantly hindering their deployment in practical applications. In this paper, we offer a self-universal perspective that unveils the great yet underexplored potential of input transformations in pursuing this goal. Specifically, transformations universalize gradient-based attacks with intrinsic but overlooked semantics inherent within individual images, exhibiting similar scalability and comparable results to time-consuming learning over massive additional data from diverse classes. We also contribute a surprising empirical insight that one of the most fundamental transformations, simple image scaling, is highly effective, scalable, sufficient, and necessary in enhancing targeted transferability. We further augment simple scaling with orthogonal transformations and block-wise applicability, resulting in the Simple, faSt, Self-universal yet Strong Scale Transformation (S$^4$ST) for self-universal TTA. On the ImageNet-Compatible benchmark dataset, our method achieves a 19.8% improvement in the average targeted transfer success rate against various challenging victim models over existing SOTA transformation methods while only consuming 36% time for attacking. It also outperforms resource-intensive attacks by a large margin in various challenging settings.

LDSF: Lightweight Dual-Stream Framework for SAR Target Recognition by Coupling Local Electromagnetic Scattering Features and Global Visual Features

Mar 06, 2024

Abstract:Mainstream DNN-based SAR-ATR methods still face issues such as overfitting to limited training data, high computational overhead, and poor interpretability of the black-box model. Integrating physical knowledge into DNNs to improve performance and achieve a higher level of physical interpretability becomes the key to solving the above problems. This paper begins by focusing on the electromagnetic (EM) backscattering mechanism. We extract the EM scattering (EMS) information from the complex SAR data and integrate the physical properties of the target into the network through a dual-stream framework to guide the network to learn physically meaningful and discriminative features. Specifically, one stream is the local EMS feature (LEMSF) extraction net. It is a heterogeneous graph neural network (GNN) guided by a multi-level multi-head attention mechanism. LEMSF uses the EMS information to obtain topological structure features and high-level physical semantic features. The other stream is a CNN-based global visual features (GVF) extraction net that captures the visual features of SAR images from the image domain. After obtaining the two-stream features, a feature fusion subnetwork is proposed to adaptively learn the fusion strategy, so that the two-stream features together maximize performance. Furthermore, the loss function is designed based on the graph distance measure to promote intra-class aggregation. We discard overly complex design ideas and effectively control the model size while maintaining algorithm performance. Finally, to better validate the performance and generalizability of the algorithms, two more rigorous evaluation protocols, namely once-for-all (OFA) and less-for-more (LFM), are used to verify the superiority of the proposed algorithm on the MSTAR dataset.

Towards Assessing the Synthetic-to-Measured Adversarial Vulnerability of SAR ATR

Jan 30, 2024

Abstract:Recently, there has been increasing concern about the vulnerability of deep neural network (DNN)-based synthetic aperture radar (SAR) automatic target recognition (ATR) to adversarial attacks, where a DNN could be easily deceived by clean input with imperceptible but aggressive perturbations. This paper studies the synthetic-to-measured (S2M) transfer setting, where an attacker generates adversarial perturbation based solely on synthetic data and transfers it against victim models trained with measured data. Compared with the current measured-to-measured (M2M) transfer setting, our approach does not need direct access to the victim model or the measured SAR data. We also propose the transferability estimation attack (TEA) to uncover the adversarial risks in this more challenging and practical scenario. The TEA makes full use of the limited similarity between the synthetic and measured data pairs for blind estimation and optimization of S2M transferability, leading to feasible surrogate model enhancement without mastering the victim model and data. Comprehensive evaluations based on the publicly available synthetic and measured paired labeled experiment (SAMPLE) dataset demonstrate that the TEA outperforms state-of-the-art methods and can significantly enhance various attack algorithms in computer vision and remote sensing applications. Codes and data are available at https://github.com/scenarri/S2M-TEA.

Self-Supervised Learning for SAR ATR with a Knowledge-Guided Predictive Architecture

Nov 26, 2023

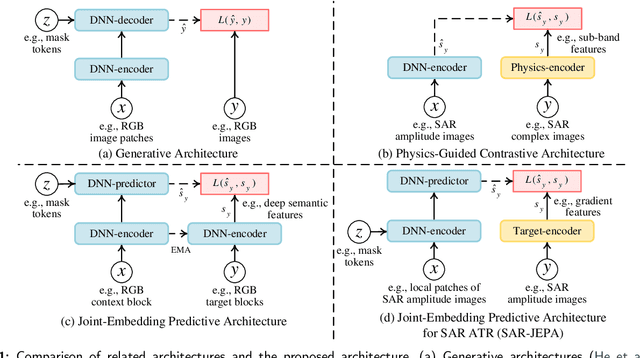

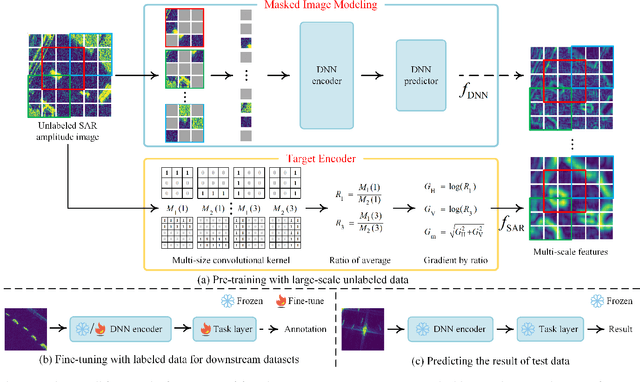

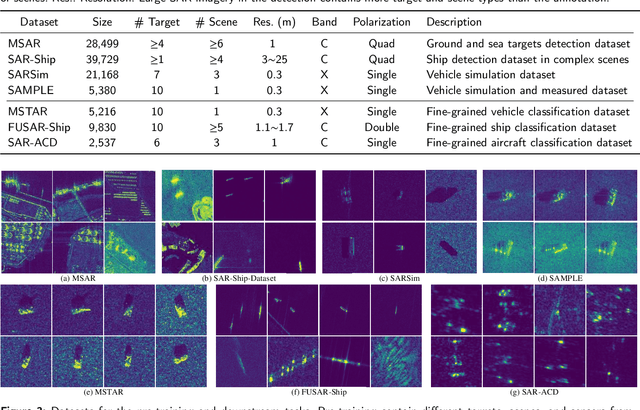

Abstract:Recently, the emergence of a large number of Synthetic Aperture Radar (SAR) sensors and target datasets has made it possible to unify downstream tasks with self-supervised learning techniques, which can pave the way for building the foundation model in the SAR target recognition field. The major challenge of self-supervised learning for SAR target recognition lies in learning generalizable representations under low data quality and noise. To address this problem, we propose a knowledge-guided predictive architecture that uses local masked patches to predict the multiscale SAR feature representations of unseen context. The core of the proposed architecture lies in combining traditional SAR domain feature extraction with state-of-the-art scalable self-supervised learning for accurate generalized feature representations. The proposed framework is validated on various downstream datasets (MSTAR, FUSAR-Ship, SAR-ACD and SSDD), and can bring consistent performance improvement for SAR target recognition. The experimental results strongly demonstrate the unified performance improvement of the self-supervised learning technique for SAR target recognition across diverse targets, scenes and sensors.

Deep Intellectual Property: A Survey

Apr 28, 2023

Abstract:With the widespread application in industrial manufacturing and commercial services, well-trained deep neural networks (DNNs) are becoming increasingly valuable and crucial assets due to the tremendous training cost and excellent generalization performance. These trained models can be utilized by users without much expert knowledge benefiting from the emerging "Machine Learning as a Service" (MLaaS) paradigm. However, this paradigm also exposes the expensive models to various potential threats like model stealing and abuse. As an urgent requirement to defend against these threats, Deep Intellectual Property (DeepIP), to protect private training data, painstakingly-tuned hyperparameters, or costly learned model weights, has been the consensus of both industry and academia. To this end, numerous approaches have been proposed to achieve this goal in recent years, especially to prevent or discover model stealing and unauthorized redistribution. Given this period of rapid evolution, the goal of this paper is to provide a comprehensive survey of the recent achievements in this field. More than 190 research contributions are included in this survey, covering many aspects of Deep IP Protection: challenges/threats, invasive solutions (watermarking), non-invasive solutions (fingerprinting), evaluation metrics, and performance. We finish the survey by identifying promising directions for future research.
