Tasuku Makabe

Modification of muscle antagonistic relations and hand trajectory on the dynamic motion of Musculoskeletal Humanoid

Dec 01, 2024

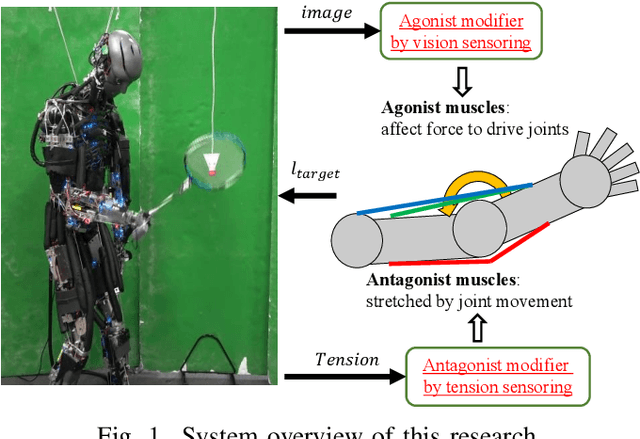

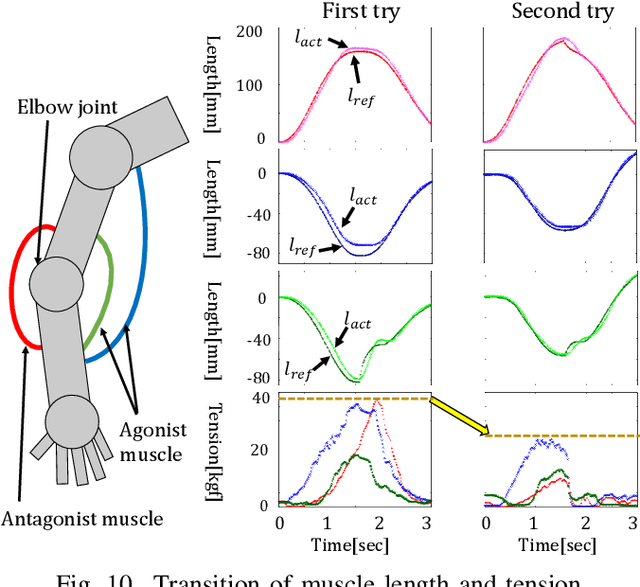

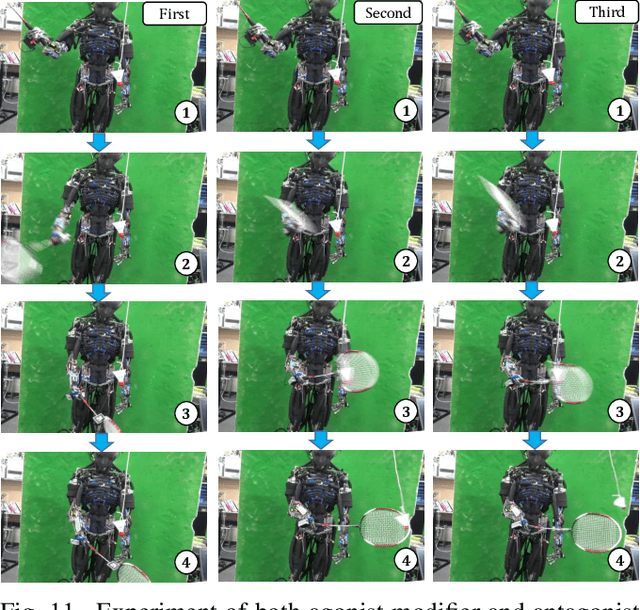

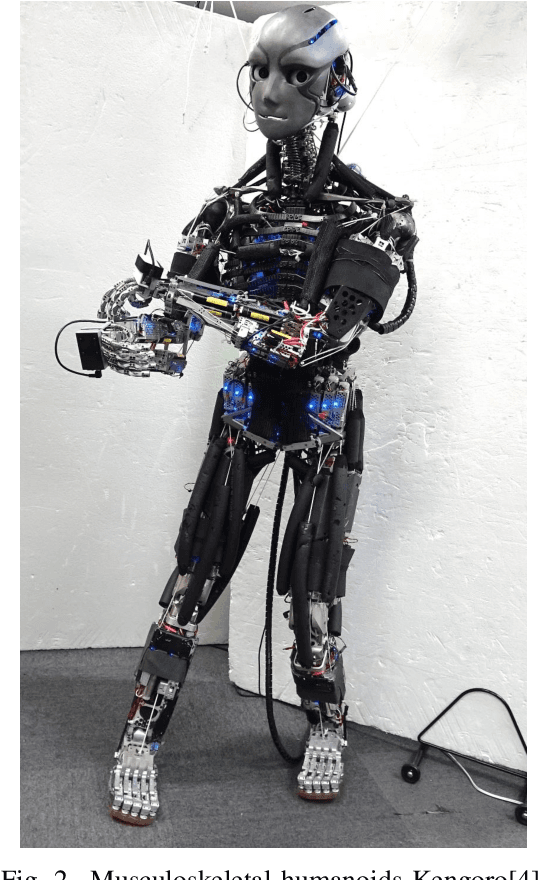

Abstract: Research on musculoskeletal humanoids has been progressing in recent years. However, challenges remain, such as deformation of the body structure and muscle paths that cannot be measured, and the difficulty of measuring the robot's own motion without joint angle sensors. In this study, we propose two motion acquisition methods: one acquires the antagonistic relations of muscles through tension sensing, and the other acquires the correct hand trajectory through vision sensing. Finally, we realize a badminton shuttlecock-hitting motion on Kengoro using these two acquisition methods.

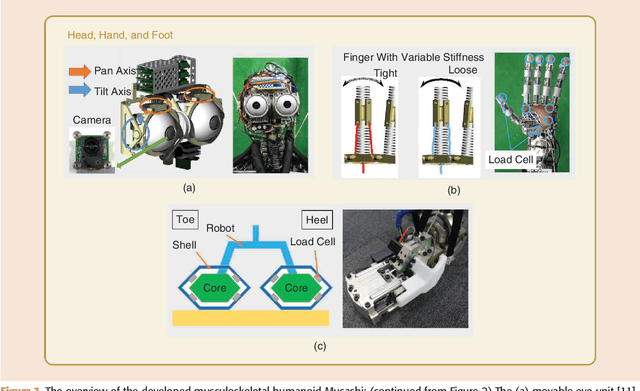

Component Modularized Design of Musculoskeletal Humanoid Platform Musashi to Investigate Learning Control Systems

Oct 29, 2024

Abstract: To develop Musashi as a musculoskeletal humanoid platform for investigating learning control systems, we aimed for a body with a flexible musculoskeletal structure, redundant sensors, and an easily reconfigurable structure. For this purpose, we develop joint modules that can directly measure joint angles, muscle modules that can realize various muscle routes, and other components such as nonlinear elastic units with soft structures. Next, we develop MusashiLarm, a musculoskeletal platform composed only of joint modules, muscle modules, generic bone frames, muscle wire units, and a few attachments. Finally, we develop Musashi, a musculoskeletal humanoid platform that extends MusashiLarm to a whole-body design, and conduct several basic experiments and learning control experiments to verify the effectiveness of the concept.

A Robot Kinematics Model Estimation Using Inertial Sensors for On-Site Building Robotics

Oct 16, 2024

Abstract: To make robots more useful in a variety of environments, they need to be highly portable so that they can be transported to wherever they are needed, and highly storable so that they can be stored when not in use. We propose "on-site robotics", which uses parts procured at the location where the robot will be active, as a new solution to the problem of portability and storability. In this paper, as a proof of concept for on-site robotics, we describe a method for estimating the kinematic model of a robot by attaching inertial measurement unit (IMU) sensor modules to rigid links, estimating the relative orientation between modules from angular velocity, and estimating the relative position from the measurement of centrifugal force. At the end of this paper, to evaluate the method, we present an experiment in which a robot made of wooden sticks reaches a target position. In this experiment, even when the combination of links is changed, the robot is able to reach the target position again immediately after estimation, showing that it can operate even after being reassembled. Our implementation is available at https://github.com/hiroya1224/urdf_estimation_with_imus .
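The idea of recovering a relative position from centrifugal force can be illustrated with a simplified sketch. This is not the authors' implementation (see their repository for that). It assumes a single accelerometer on a rigid link that rotates about a fixed pivot, with gravity already removed and angular acceleration negligible, so the measured acceleration is a = ω × (ω × r). Stacking several samples gives a linear least-squares problem in the lever arm r. The function names `centripetal_matrix`, `solve3`, and `estimate_lever_arm` are hypothetical.

```python
def centripetal_matrix(w):
    """Return M such that M r == w x (w x r), i.e. M = w w^T - |w|^2 I."""
    wx, wy, wz = w
    n2 = wx * wx + wy * wy + wz * wz
    return [
        [wx * wx - n2, wx * wy, wx * wz],
        [wy * wx, wy * wy - n2, wy * wz],
        [wz * wx, wz * wy, wz * wz - n2],
    ]


def mat_vec(M, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(M[i][j] * v[j] for j in range(3)) for i in range(3)]


def solve3(A, b):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with pivoting."""
    aug = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("rotation axes do not span enough directions")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(3):
            if r != col:
                f = aug[r][col] / aug[col][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [aug[i][3] / aug[i][i] for i in range(3)]


def estimate_lever_arm(omegas, accels):
    """Least-squares estimate of r from samples obeying a = w x (w x r).

    Assumption (not from the source): gravity is removed and angular
    acceleration is negligible in every sample.
    """
    AtA = [[0.0] * 3 for _ in range(3)]
    Atb = [0.0] * 3
    for w, a in zip(omegas, accels):
        M = centripetal_matrix(w)
        for i in range(3):
            Atb[i] += sum(M[k][i] * a[k] for k in range(3))
            for j in range(3):
                AtA[i][j] += sum(M[k][i] * M[k][j] for k in range(3))
    return solve3(AtA, Atb)


if __name__ == "__main__":
    # Synthetic data: rotate about two different axes, then recover r.
    true_r = [0.3, -0.1, 0.2]
    omegas = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]
    accels = [mat_vec(centripetal_matrix(w), true_r) for w in omegas]
    print(estimate_lever_arm(omegas, accels))
```

Each sample constrains only the part of r perpendicular to its rotation axis, so at least two non-parallel rotation axes are needed before the system becomes solvable. With a single axis, `solve3` raises `ValueError`.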

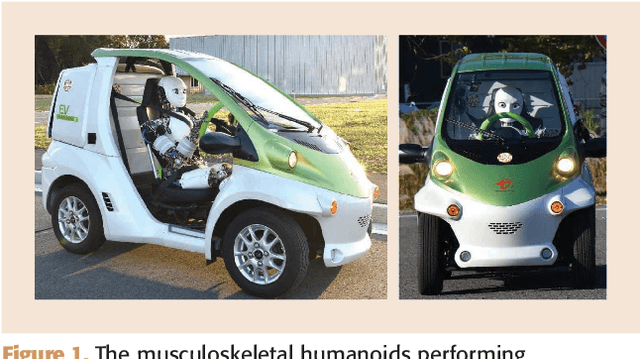

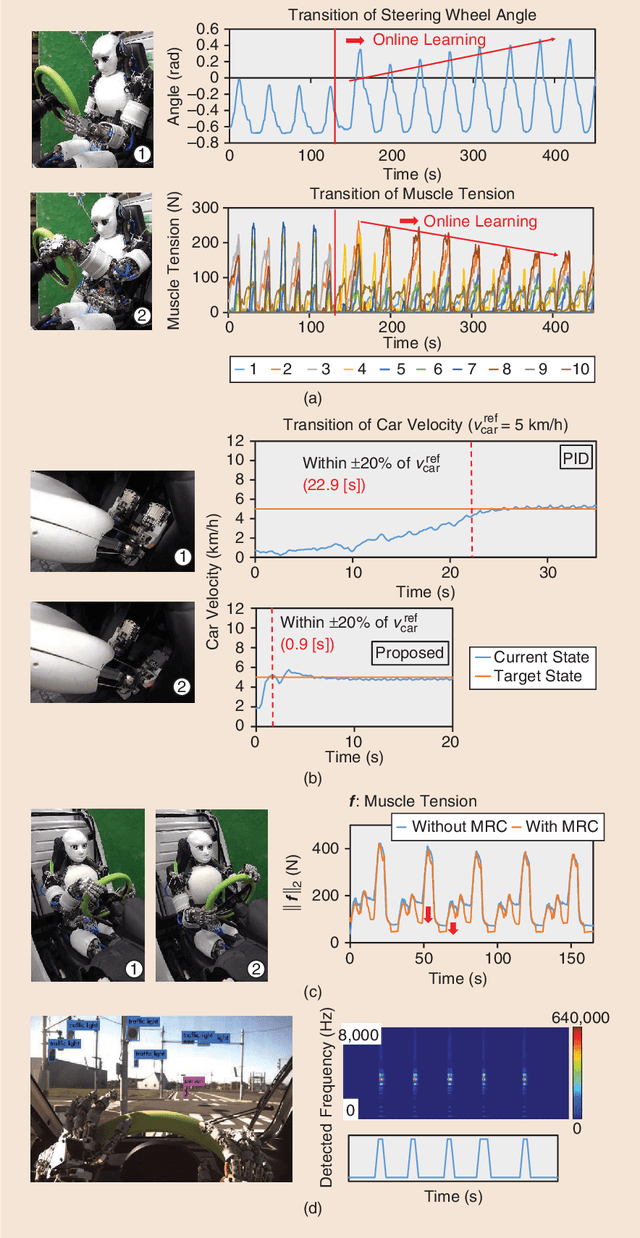

Toward Autonomous Driving by Musculoskeletal Humanoids: A Study of Developed Hardware and Learning-Based Software

Jun 08, 2024

Abstract: This paper summarizes an autonomous driving project using musculoskeletal humanoids. The musculoskeletal humanoid, which mimics the human body in detail, has redundant sensors and a flexible body structure. These characteristics are suited to motions with complex environmental contact, and the robot is expected to sit down on the car seat, step on the accelerator and brake pedals, and operate the steering wheel with both arms. We reconsider the developed hardware and software of the musculoskeletal humanoid Musashi in the context of autonomous driving. Each component of autonomous driving is carried out by exploiting the advantages of this hardware and software. Finally, Musashi succeeded in operating the pedals and the steering wheel based on recognition.

TWIMP: Two-Wheel Inverted Musculoskeletal Pendulum as a Learning Control Platform in the Real World with Environmental Physical Contact

Apr 22, 2024

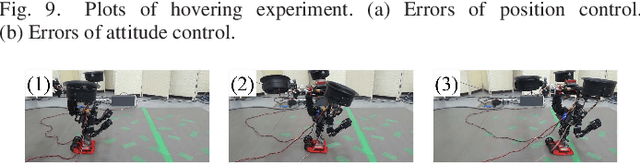

Abstract: With the recent spread of machine learning in the robotics field, there is a need to develop a humanoid that can act, perceive, and learn in the real world through contact with the environment. In this study, as one such option, we propose a novel humanoid, TWIMP, which combines a human-mimetic musculoskeletal upper limb with a two-wheel inverted pendulum. By combining the benefit of a musculoskeletal humanoid, which can achieve soft contact with the external environment, with the benefit of a two-wheel inverted pendulum, which has a small footprint and high mobility, we can easily investigate learning control systems in environments with contact and sudden impact. We present the overall concept and system details of TWIMP, and carry out several preliminary experiments to show its potential.

Five-fingered Hand with Wide Range of Thumb Using Combination of Machined Springs and Variable Stiffness Joints

Mar 26, 2024

Abstract: Human hands can not only grasp and manipulate objects of various shapes and sizes, but also exert a gripping force large enough to support the body in situations such as hanging from a bar or climbing a ladder. In contrast, most robot hands struggle to achieve both. In this paper, we therefore develop a hand that can grasp various objects and also exert a large gripping force. To this end, we focus on a thumb CM joint with a wide range of motion and on the MP joints of the four fingers, which have abduction and adduction DOF. Building on a hand that achieves large gripping force and flexibility using machined springs, we apply these joint mechanisms to it. The thumb CM joint obtains its wide range of motion from a combination of three machined springs, and the MP joints of the four fingers use a variable rigidity mechanism instead of driving each joint independently, so that the joints can be moved within limited space and with a limited number of actuators. Using the developed hand, we achieved grasping of various objects, support of a large load, and several motions in combination with an arm.

Daily Assistive Modular Robot Design Based on Multi-Objective Black-Box Optimization

Sep 25, 2023

Abstract: The range of robot activities is expanding from industries with fixed environments to diverse and changing environments, such as nursing care support and daily life support. In particular, autonomous construction of robots that are personalized for each user and task is required. Therefore, we develop an actuator module that can be reconfigured into various link configurations, can carry heavy objects using a locking mechanism, and can be easily operated through human teaching using a releasing mechanism. Given multiple target coordinates, a modular robot configuration that satisfies these coordinates and minimizes the required torque is automatically generated by the Tree-structured Parzen Estimator (TPE), a type of black-box optimization. Based on the obtained results, we show that the robot can be reconfigured to perform various functions, such as moving monitors and lights and serving food.

Online Estimation of Self-Body Deflection With Various Sensor Data Based on Directional Statistics

Jun 06, 2023

Abstract: In this paper, we propose a method for online estimation of the robot's posture. Our method uses the von Mises and Bingham distributions from directional statistics as probability distributions over joint angles and 3D orientations, respectively. We constructed a particle filter using these distributions and configured a system to estimate the robot's posture from various sensor information (e.g., joint encoders, IMU sensors, and cameras). Furthermore, unlike tangent space approximations, these distributions can handle global features and represent sensor characteristics as observation noise. As an application, we show that the yaw drift of a 6-axis IMU sensor can be represented probabilistically to prevent adverse effects on attitude estimation. For the estimation, we used an approximate model that assumes the actual robot posture can be reproduced by correcting the joint angles of a rigid body model. In the experiments, we tested the estimator's effectiveness by verifying that joint angles generated with the approximate model can be estimated from the link poses of the same model. We then applied the estimator to the actual robot and confirmed that the gripper position could be estimated, thereby verifying the validity of the approximate model in our situation.
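As a rough illustration of how a von Mises distribution can serve as both process and observation noise in a particle filter, here is a minimal sketch for a single joint angle. It is not the paper's estimator: it omits the Bingham distribution for 3D orientation and the multi-sensor setup. The function names and the concentration parameters `kappa_process` and `kappa_obs` are assumptions chosen for illustration. Only the Python standard library is used.

```python
import math
import random


def wrap(angle):
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def circular_mean(angles, weights):
    """Weighted mean direction of a set of angles."""
    s = sum(w * math.sin(a) for a, w in zip(angles, weights))
    c = sum(w * math.cos(a) for a, w in zip(angles, weights))
    return math.atan2(s, c)


def particle_filter_step(particles, measurement, rng,
                         kappa_process=200.0, kappa_obs=50.0):
    """One predict/update/resample cycle on the circle.

    kappa_process and kappa_obs are hypothetical concentration values;
    larger kappa means less noise.
    """
    # Predict: diffuse each particle with zero-mean von Mises noise.
    predicted = [wrap(p + rng.vonmisesvariate(0.0, kappa_process))
                 for p in particles]
    # Update: von Mises likelihood, proportional to exp(kappa * cos(z - x)).
    # Subtracting 1 inside the exponent only rescales all weights equally.
    weights = [math.exp(kappa_obs * (math.cos(measurement - p) - 1.0))
               for p in predicted]
    total = sum(weights)
    weights = [w / total for w in weights]
    estimate = circular_mean(predicted, weights)
    # Resample particles in proportion to their weights.
    resampled = rng.choices(predicted, weights=weights, k=len(predicted))
    return resampled, estimate


def run_filter(measurements, n_particles=500, seed=0):
    """Run the filter from a uniform prior and return the last estimate."""
    rng = random.Random(seed)
    particles = [rng.uniform(-math.pi, math.pi) for _ in range(n_particles)]
    estimate = 0.0
    for z in measurements:
        particles, estimate = particle_filter_step(particles, z, rng)
    return estimate


if __name__ == "__main__":
    rng = random.Random(1)
    true_angle = 3.0  # close to the +/- pi wrap-around
    noisy = [wrap(true_angle + rng.vonmisesvariate(0.0, 50.0))
             for _ in range(30)]
    print(run_filter(noisy))
```

Because the likelihood depends only on the cosine of the angular difference, measurements on either side of the ±π boundary are handled consistently. A Gaussian on the raw angle value would need special handling there.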

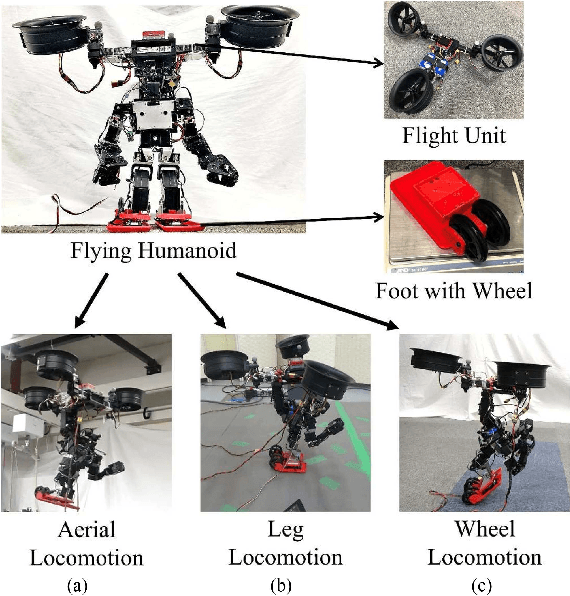

Design and Control of a Humanoid Equipped with Flight Unit and Wheels for Multimodal Locomotion

Mar 26, 2023

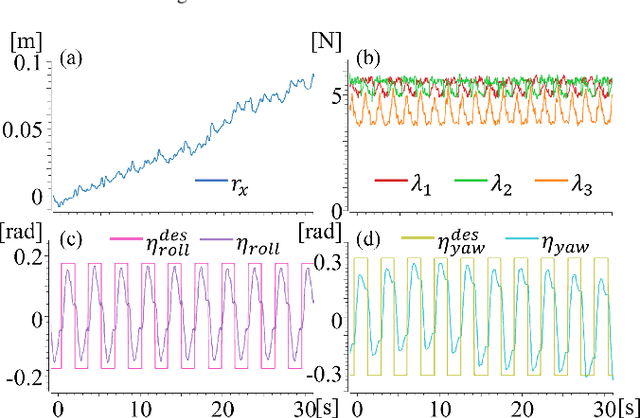

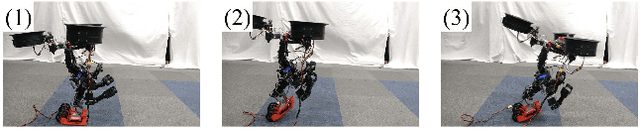

Abstract: Humanoids are versatile robotic platforms because of their limbs with multiple degrees of freedom. Although humanoids can walk like humans, their walking speed is relatively slow, and they cannot traverse large obstacles. To address these problems, we aim to achieve rapid terrestrial locomotion and simultaneously expand the domain of locomotion to the air by utilizing thrust for propulsion. In this paper, we first describe an optimized construction method for a humanoid robot equipped with wheels and a flight unit to achieve these abilities. Then, we describe the integrated control framework of the proposed flying humanoid for each mode of locomotion: aerial locomotion, leg locomotion, and wheel locomotion. Finally, we demonstrate multimodal locomotion and aerial manipulation in experiments using the proposed robot platform. To the best of our knowledge, this is the first time that three different types of locomotion, including flight, have been achieved by a single humanoid.
