Koki Shinjo

Environmentally Adaptive Control Including Variance Minimization Using Stochastic Predictive Network with Parametric Bias: Application to Mobile Robots

Dec 11, 2024

Abstract: In this study, we propose a predictive model composed of a recurrent neural network including parametric bias and stochastic elements, and an environmentally adaptive robot control method including variance minimization using the model. Robots which have flexible bodies or whose states can only be partially observed are difficult to model, and their predictive models often have stochastic behaviors. In addition, the physical state of the robot and the surrounding environment change sequentially, and so the predictive model can change online. Therefore, in this study, we construct a learning-based stochastic predictive model implemented in a neural network embedded with such information from the experience of the robot, and develop a control method for the robot to avoid unstable motion with large variance while adapting to the current environment. This method is verified on a mobile robot in simulation and on the actual robot Fetch.
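The idea of adapting to the environment while avoiding high-variance motion can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration: `toy_predictor` is a hand-written stand-in for the learned network, the scalar `bias` stands in for the parametric bias, and the grid searches replace whatever optimization the paper actually uses.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical interface: (state, control, parametric_bias) -> (mean, variance)
Predictor = Callable[[float, float, float], Tuple[float, float]]
Sample = Tuple[float, float, float]  # (state, control, next_state)


def toy_predictor(state: float, control: float, bias: float) -> Tuple[float, float]:
    """Toy stand-in for the learned stochastic model (not the paper's network)."""
    mean = state + bias * control          # the bias scales the control's effect
    variance = 0.01 + 0.5 * control ** 2   # larger commands are less predictable
    return mean, variance


def estimate_bias(predict: Predictor, history: Sequence[Sample],
                  bias_grid: Sequence[float]) -> float:
    """Pick the bias value that best explains recently observed transitions."""
    def error(bias: float) -> float:
        return sum((predict(s, u, bias)[0] - s_next) ** 2
                   for s, u, s_next in history)
    return min(bias_grid, key=error)


def select_control(predict: Predictor, state: float, target: float, bias: float,
                   candidates: Sequence[float], variance_weight: float) -> float:
    """Choose the control minimizing tracking error plus weighted variance."""
    def cost(u: float) -> float:
        mean, variance = predict(state, u, bias)
        return (mean - target) ** 2 + variance_weight * variance
    return min(candidates, key=cost)


candidates: List[float] = [i / 10 for i in range(-10, 11)]
history: List[Sample] = [(0.0, 1.0, 0.5), (0.5, -1.0, 0.0)]  # made-up data
bias = estimate_bias(toy_predictor, history, [0.25, 0.5, 1.0])
u_plain = select_control(toy_predictor, 0.0, 0.3, bias, candidates, 0.0)
u_cautious = select_control(toy_predictor, 0.0, 0.3, bias, candidates, 1.0)
```

With a nonzero `variance_weight`, the selected command shrinks toward the region where the toy model is more predictable, which is the trade-off the abstract describes in words.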

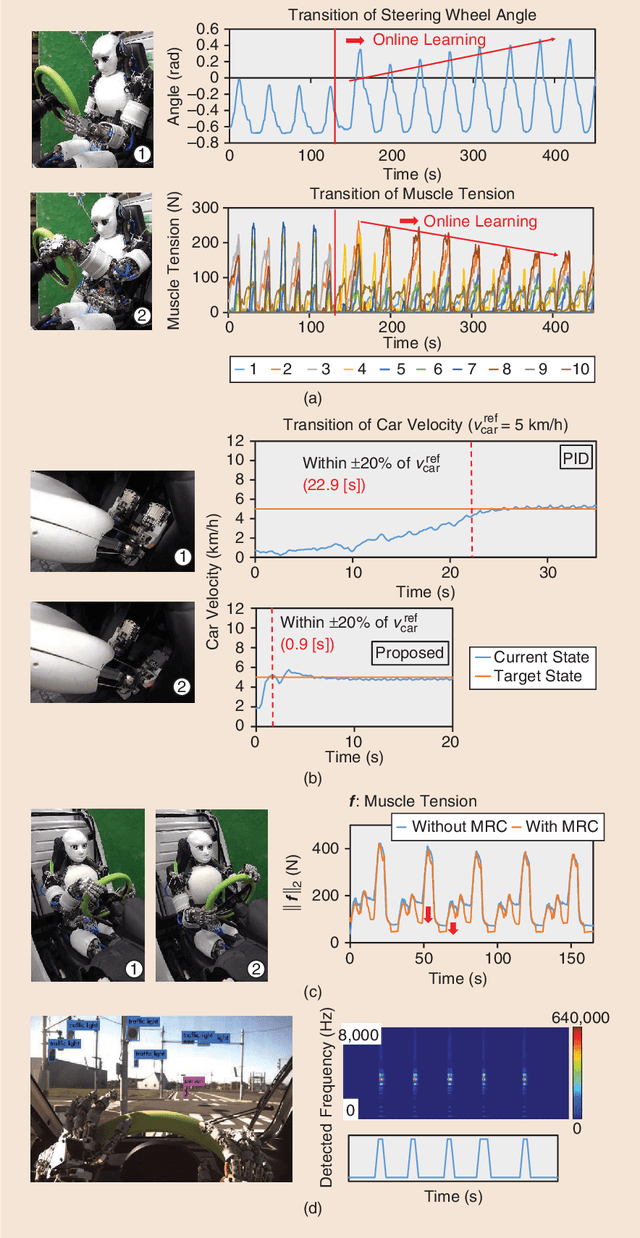

Task-specific Self-body Controller Acquisition by Musculoskeletal Humanoids: Application to Pedal Control in Autonomous Driving

Dec 11, 2024

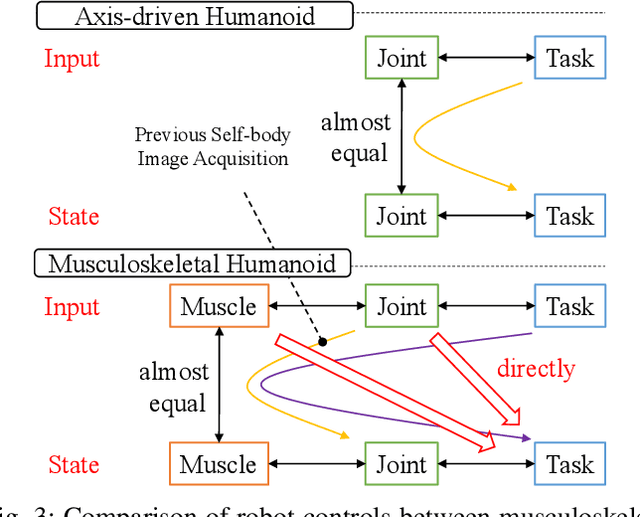

Abstract: The musculoskeletal humanoid has many benefits that human beings have, but the modeling of its complex flexible body is difficult. Although we have developed an online acquisition method of the nonlinear relationship between joints and muscles, we could not completely match the actual robot and its self-body image. When realizing a certain task, the direct relationship between the control input and task state needs to be learned. So, we construct a neural network representing the time-series relationship between the control input and task state, and realize the intended task state by applying the network to real-time control. In this research, we conduct accelerator pedal control experiments as one application, and verify the effectiveness of this method.
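As a rough sketch of how a time-series model linking control input to task state can drive real-time control, the example below rolls a model forward over a short horizon and picks the input whose predicted trajectory stays closest to the target. The first-order speed model, its constants, and the use of car speed as the task state are all assumptions standing in for the learned network.

```python
from typing import List, Sequence

DT = 0.1      # assumed control period [s]
GAIN = 2.0    # assumed pedal-to-acceleration gain
DRAG = 0.5    # assumed speed-proportional drag coefficient


def predict_speeds(speed: float, pedal: float, horizon: int) -> List[float]:
    """Toy stand-in for the learned network: roll the task state forward."""
    speeds = []
    for _ in range(horizon):
        speed = speed + DT * (GAIN * pedal - DRAG * speed)
        speeds.append(speed)
    return speeds


def choose_pedal(speed: float, target: float,
                 candidates: Sequence[float], horizon: int = 20) -> float:
    """Pick the constant pedal input with the smallest predicted tracking error."""
    def cost(pedal: float) -> float:
        return sum((v - target) ** 2
                   for v in predict_speeds(speed, pedal, horizon))
    return min(candidates, key=cost)


pedals = [i / 20 for i in range(21)]            # pedal positions in [0, 1]
pedal_when_slow = choose_pedal(0.0, 1.0, pedals)  # below the target speed
pedal_when_fast = choose_pedal(3.0, 1.0, pedals)  # well above the target speed
```

Calling `choose_pedal` once per control period with the latest measured state gives a simple receding-horizon loop; a learned network would replace `predict_speeds`.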

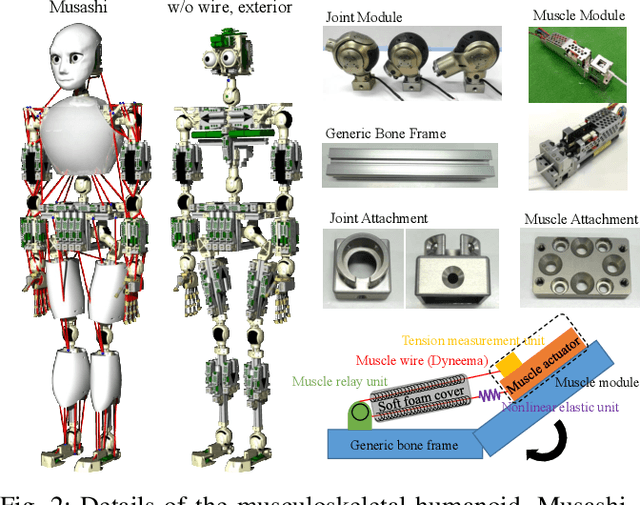

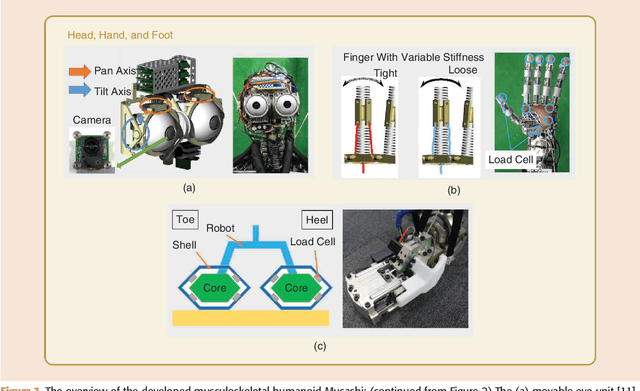

Component Modularized Design of Musculoskeletal Humanoid Platform Musashi to Investigate Learning Control Systems

Oct 29, 2024

Abstract: To develop Musashi as a musculoskeletal humanoid platform to investigate learning control systems, we aimed for a body with flexible musculoskeletal structure, redundant sensors, and easily reconfigurable structure. For this purpose, we develop joint modules that can directly measure joint angles, muscle modules that can realize various muscle routes, and nonlinear elastic units with soft structures, etc. Next, we develop MusashiLarm, a musculoskeletal platform composed of only joint modules, muscle modules, generic bone frames, muscle wire units, and a few attachments. Finally, we develop Musashi, a musculoskeletal humanoid platform which extends MusashiLarm to the whole body design, and conduct several basic experiments and learning control experiments to verify the effectiveness of its concept.

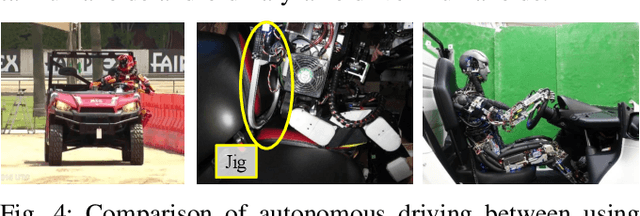

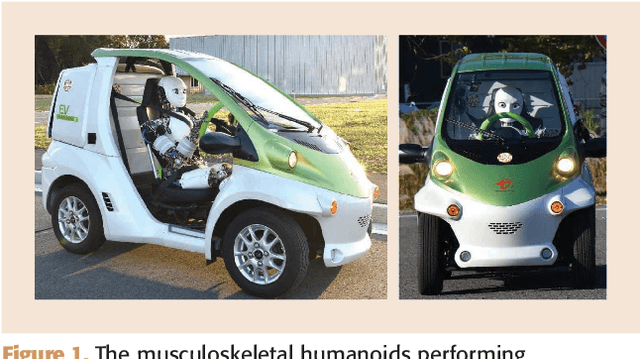

Toward Autonomous Driving by Musculoskeletal Humanoids: A Study of Developed Hardware and Learning-Based Software

Jun 08, 2024

Abstract: This paper summarizes an autonomous driving project by musculoskeletal humanoids. The musculoskeletal humanoid, which mimics the human body in detail, has redundant sensors and a flexible body structure. These characteristics are suitable for motions with complex environmental contact, and the robot is expected to sit down on the car seat, step on the acceleration and brake pedals, and operate the steering wheel with both arms. We reconsider the developed hardware and software of the musculoskeletal humanoid Musashi in the context of autonomous driving. The respective components of autonomous driving are conducted using the benefits of the hardware and software. Finally, Musashi succeeded in the pedal and steering wheel operations with recognition.

Semantic Scene Difference Detection in Daily Life Patroling by Mobile Robots using Pre-Trained Large-Scale Vision-Language Model

Sep 28, 2023

Abstract: It is important for daily life support robots to detect changes in their environment and perform tasks. In the field of anomaly detection in computer vision, probabilistic and deep learning methods have been used to calculate the image distance. These methods calculate distances by focusing on image pixels. In contrast, this study aims to detect semantic changes in the daily life environment using the current development of large-scale vision-language models. Using its Visual Question Answering (VQA) model, we propose a method to detect semantic changes by applying multiple questions to a reference image and a current image and obtaining answers in the form of sentences. Unlike deep learning-based methods in anomaly detection, this method does not require any training or fine-tuning, is not affected by noise, and is sensitive to semantic state changes in the real world. In our experiments, we demonstrated the effectiveness of this method by applying it to a patrol task in a real-life environment using a mobile robot, Fetch Mobile Manipulator. In the future, it may be possible to add explanatory power to changes in the daily life environment through spoken language.
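The question-and-compare procedure described above can be sketched as follows. The `VqaModel` callable, the stubbed answers, the file names, and the string normalization used to compare answers are all hypothetical; the abstract does not specify how answer sentences are compared, and a real system would call an actual vision-language model instead of the lookup table.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical VQA interface: (image, question) -> answer sentence
VqaModel = Callable[[str, str], str]
Change = Tuple[str, str, str]  # (question, reference answer, current answer)


def normalize(answer: str) -> str:
    """Lowercase, trim outer spaces and periods, and collapse whitespace."""
    return " ".join(answer.lower().strip(" .").split())


def detect_changes(vqa: VqaModel, reference_image: str, current_image: str,
                   questions: Sequence[str]) -> List[Change]:
    """Ask every question about both images and report differing answers."""
    changes: List[Change] = []
    for question in questions:
        before = vqa(reference_image, question)
        after = vqa(current_image, question)
        if normalize(before) != normalize(after):
            changes.append((question, before, after))
    return changes


# Stubbed answers standing in for a pre-trained model (illustration only).
STUB_ANSWERS: Dict[Tuple[str, str], str] = {
    ("reference.png", "Is the door open?"): "No.",
    ("current.png", "Is the door open?"): "Yes.",
    ("reference.png", "Is the light on?"): "The light is on.",
    ("current.png", "Is the light on?"): "the light is on",
}


def stub_vqa(image: str, question: str) -> str:
    return STUB_ANSWERS[(image, question)]


questions = ["Is the door open?", "Is the light on?"]
changes = detect_changes(stub_vqa, "reference.png", "current.png", questions)
```

Because the comparison operates on answers rather than pixels, the sketch needs no training step, which mirrors the property the abstract highlights.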

Automatic Diary Generation System including Information on Joint Experiences between Humans and Robots

Sep 05, 2023

Abstract: In this study, we propose an automatic diary generation system that uses information from past joint experiences, with the aim of increasing favorability toward robots through shared experiences between humans and robots. For the verbalization of the robot's memory, the system applies a large-scale language model, which is a rapidly developing field. Since this model does not have memories of experiences, it generates a diary by receiving information from joint experiences. As an experiment, a robot and a human went for a walk, and a diary was generated from the interaction and dialogue history. The proposed diary achieved high scores in comfort and performance in the evaluation of the robot's impression. In the survey of which diaries gave more favorable impressions, diaries with information on joint experiences were selected more often than diaries without such information, because they showed more cooperation between the robot and the human and more intimacy from the robot.
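Since the language model has no memory of its own, the joint-experience information has to be supplied in its input. A minimal sketch of that step is shown below; the `Event` record, the prompt wording, and the `LanguageModel` callable are illustrative assumptions and not the system's actual interface.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

# Hypothetical language-model interface: prompt text -> generated text
LanguageModel = Callable[[str], str]


@dataclass
class Event:
    """One remembered moment from a joint experience (assumed record layout)."""
    time: str
    place: str
    description: str
    dialogue: str = ""


def build_diary_prompt(events: Sequence[Event]) -> str:
    """Turn logged events into a prompt asking for a first-person diary entry."""
    lines: List[str] = [
        "Write a short diary entry from the robot's point of view.",
        "Base it only on the shared experiences listed below.",
        "",
    ]
    for event in events:
        line = f"- {event.time} at {event.place}: {event.description}"
        if event.dialogue:
            line += f' (said: "{event.dialogue}")'
        lines.append(line)
    return "\n".join(lines)


def generate_diary(llm: LanguageModel, events: Sequence[Event]) -> str:
    """Pass the experience-grounded prompt to the language model."""
    return llm(build_diary_prompt(events))


events = [
    Event("10:00", "the park", "walked together", "Nice weather today."),
    Event("10:20", "the pond", "watched the ducks"),
]
prompt = build_diary_prompt(events)
# An echoing stub stands in for a real model so the sketch runs offline.
diary = generate_diary(lambda text: "DIARY DRAFT\n" + text, events)
```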
