Susan A. Murphy

Statistical Reinforcement Learning in the Real World: A Survey of Challenges and Future Directions

Jan 21, 2026

Abstract:Reinforcement learning (RL) has achieved remarkable success in real-world decision-making across diverse domains, including gaming, robotics, online advertising, public health, and natural language processing. Despite these advances, a substantial gap remains between RL research and its deployment in many practical settings. Two recurring challenges often underlie this gap. First, many settings offer limited opportunity for the agent to interact extensively with the target environment due to practical constraints. Second, many target environments often undergo substantial changes, requiring redesign and redeployment of RL systems (e.g., advancements in science and technology that change the landscape of healthcare delivery). Addressing these challenges and bridging the gap between basic research and application requires theory and methodology that directly inform the design, implementation, and continual improvement of RL systems in real-world settings. In this paper, we frame the application of RL in practice as a three-component process: (i) online learning and optimization during deployment, (ii) post- or between-deployment offline analyses, and (iii) repeated cycles of deployment and redeployment to continually improve the RL system. We provide a narrative review of recent advances in statistical RL that address these components, including methods for maximizing data utility for between-deployment inference, enhancing sample efficiency for online learning within-deployment, and designing sequences of deployments for continual improvement. We also outline future research directions in statistical RL that are use-inspired -- aiming for impactful application of RL in practice.

Active Measuring in Reinforcement Learning With Delayed Negative Effects

Oct 16, 2025

Abstract:Measuring states in reinforcement learning (RL) can be costly in real-world settings and may negatively influence future outcomes. We introduce the Actively Observable Markov Decision Process (AOMDP), where an agent not only selects control actions but also decides whether to measure the latent state. The measurement action reveals the true latent state but may have a negative delayed effect on the environment. We show that this reduced uncertainty may provably improve sample efficiency and increase the value of the optimal policy despite these costs. We formulate an AOMDP as a periodic partially observable MDP and propose an online RL algorithm based on belief states. To approximate the belief states, we further propose a sequential Monte Carlo method to jointly approximate the posterior of unknown static environment parameters and unobserved latent states. We evaluate the proposed algorithm in a digital health application, where the agent decides when to deliver digital interventions and when to assess users' health status through surveys.

BOTS: Batch Bayesian Optimization of Extended Thompson Sampling for Severely Episode-Limited RL Settings

Nov 30, 2024

Abstract:In settings where the application of reinforcement learning (RL) requires running real-world trials, including the optimization of adaptive health interventions, the number of episodes available for learning can be severely limited due to cost or time constraints. In this setting, the bias-variance trade-off of contextual bandit methods can be significantly better than that of more complex full RL methods. However, Thompson sampling bandits are limited to selecting actions based on distributions of immediate rewards. In this paper, we extend the linear Thompson sampling bandit to select actions based on a state-action utility function consisting of the Thompson sampler's estimate of the expected immediate reward combined with an action bias term. We use batch Bayesian optimization over episodes to learn the action bias terms with the goal of maximizing the expected return of the extended Thompson sampler. The proposed approach is able to learn optimal policies for a strictly broader class of Markov decision processes (MDPs) than standard Thompson sampling. Using an adaptive intervention simulation environment that captures key aspects of behavioral dynamics, we show that the proposed method can significantly out-perform standard Thompson sampling in terms of total return, while requiring significantly fewer episodes than standard value function and policy gradient methods.

Harnessing Causality in Reinforcement Learning With Bagged Decision Times

Oct 18, 2024

Abstract:We consider reinforcement learning (RL) for a class of problems with bagged decision times. A bag contains a finite sequence of consecutive decision times. The transition dynamics are non-Markovian and non-stationary within a bag. Further, all actions within a bag jointly impact a single reward, observed at the end of the bag. Our goal is to construct an online RL algorithm to maximize the discounted sum of the bag-specific rewards. To handle non-Markovian transitions within a bag, we utilize an expert-provided causal directed acyclic graph (DAG). Based on the DAG, we construct the states as a dynamical Bayesian sufficient statistic of the observed history, which results in Markovian state transitions within and across bags. We then frame this problem as a periodic Markov decision process (MDP) that allows non-stationarity within a period. An online RL algorithm based on Bellman equations for stationary MDPs is generalized to handle periodic MDPs. To justify the proposed RL algorithm, we show that our constructed state achieves the maximal optimal value function among all state constructions for a periodic MDP. Further, we prove the Bellman optimality equations for periodic MDPs. We evaluate the proposed method on testbed variants, constructed with real data from a mobile health clinical trial.

A Deployed Online Reinforcement Learning Algorithm In An Oral Health Clinical Trial

Sep 03, 2024

Abstract:Dental disease is a prevalent chronic condition associated with substantial financial burden, personal suffering, and increased risk of systemic diseases. Despite widespread recommendations for twice-daily tooth brushing, adherence to recommended oral self-care behaviors remains sub-optimal due to factors such as forgetfulness and disengagement. To address this, we developed Oralytics, an mHealth intervention system designed to complement clinician-delivered preventative care for marginalized individuals at risk for dental disease. Oralytics incorporates an online reinforcement learning algorithm to determine optimal times to deliver intervention prompts that encourage oral self-care behaviors. We have deployed Oralytics in a registered clinical trial. The deployment required careful design to manage challenges specific to the clinical trials setting in the U.S. In this paper, we (1) highlight key design decisions of the RL algorithm that address these challenges and (2) conduct a re-sampling analysis to evaluate algorithm design decisions. A second phase (randomized controlled trial) of Oralytics is planned to start in spring 2025.

Oralytics Reinforcement Learning Algorithm

Jun 19, 2024

Abstract:Dental disease is still one of the most common chronic diseases in the United States. While dental disease is preventable through healthy oral self-care behaviors (OSCB), this basic behavior is not consistently practiced. We have developed Oralytics, an online, reinforcement learning (RL) algorithm that optimizes the delivery of personalized intervention prompts to improve OSCB. In this paper, we offer a full overview of algorithm design decisions made using prior data, domain expertise, and experiments in a simulation test bed. The finalized RL algorithm was deployed in the Oralytics clinical trial, conducted from fall 2023 to summer 2024.

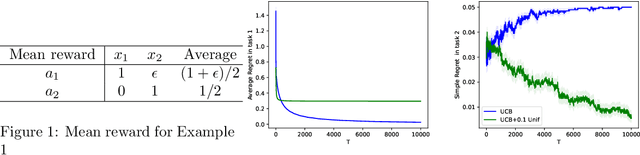

The Fallacy of Minimizing Local Regret in the Sequential Task Setting

Mar 16, 2024

Abstract:In the realm of Reinforcement Learning (RL), online RL is often conceptualized as an optimization problem, where an algorithm interacts with an unknown environment to minimize cumulative regret. In a stationary setting, strong theoretical guarantees, like a sublinear ($\sqrt{T}$) regret bound, can be obtained, which typically implies the convergence to an optimal policy and the cessation of exploration. However, these theoretical setups often oversimplify the complexities encountered in real-world RL implementations, where tasks arrive sequentially with substantial changes between tasks and the algorithm may not be allowed to adaptively learn within certain tasks. We study the changes beyond the outcome distributions, encompassing changes in the reward designs (mappings from outcomes to rewards) and the permissible policy spaces. Our results reveal the fallacy of myopically minimizing regret within each task: obtaining optimal regret rates in the early tasks may lead to worse rates in the subsequent ones, even when the outcome distributions stay the same. To realize the optimal cumulative regret bound across all the tasks, the algorithm has to over-explore in the earlier tasks. This theoretical insight is practically significant, suggesting that due to unanticipated changes (e.g., rapid technological development or human-in-the-loop involvement) between tasks, the algorithm needs to explore more than it would in the usual stationary setting within each task. This implication resonates with the common practice of using clipped policies in mobile health clinical trials and maintaining a fixed rate of $\epsilon$-greedy exploration in robotic learning.

Towards Optimizing Human-Centric Objectives in AI-Assisted Decision-Making With Offline Reinforcement Learning

Mar 09, 2024

Abstract:As AI assistance is increasingly infused into decision-making processes, we may seek to optimize human-centric objectives beyond decision accuracy, such as skill improvement or task enjoyment of individuals interacting with these systems. With this aspiration in mind, we propose offline reinforcement learning (RL) as a general approach for modeling human-AI decision-making to optimize such human-centric objectives. Our approach seeks to optimize different objectives by adaptively providing decision support to humans -- the right type of assistance, to the right person, at the right time. We instantiate our approach with two objectives: human-AI accuracy on the decision-making task and human learning about the task, and learn policies that optimize these two objectives from previous human-AI interaction data. We compare the optimized policies against various baselines in AI-assisted decision-making. Across two experiments (N = 316 and N = 964), our results consistently demonstrate that people interacting with policies optimized for accuracy achieve significantly better accuracy -- and even human-AI complementarity -- compared to those interacting with any other type of AI support. Our results further indicate that human learning is more difficult to optimize than accuracy, with participants who interacted with learning-optimized policies showing significant learning improvement only at times. Our research (1) demonstrates offline RL to be a promising approach to model dynamics of human-AI decision-making, leading to policies that may optimize various human-centric objectives and provide novel insights about the AI-assisted decision-making space, and (2) emphasizes the importance of considering human-centric objectives beyond decision accuracy in AI-assisted decision-making, while also opening up the novel research challenge of optimizing such objectives.

Monitoring Fidelity of Online Reinforcement Learning Algorithms in Clinical Trials

Feb 26, 2024

Abstract:Online reinforcement learning (RL) algorithms offer great potential for personalizing treatment for participants in clinical trials. However, deploying an online, autonomous algorithm in the high-stakes healthcare setting makes quality control and data quality especially difficult to achieve. This paper proposes algorithm fidelity as a critical requirement for deploying online RL algorithms in clinical trials. It emphasizes the responsibility of the algorithm to (1) safeguard participants and (2) preserve the scientific utility of the data for post-trial analyses. We also present a framework for pre-deployment planning and real-time monitoring to help algorithm developers and clinical researchers ensure algorithm fidelity. To illustrate our framework's practical application, we present real-world examples from the Oralytics clinical trial. Since Spring 2023, this trial has successfully deployed an autonomous, online RL algorithm to personalize behavioral interventions for participants at risk for dental disease.

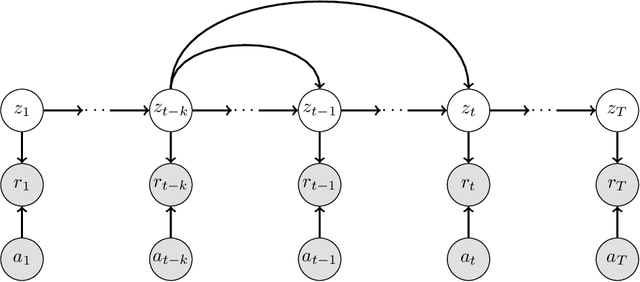

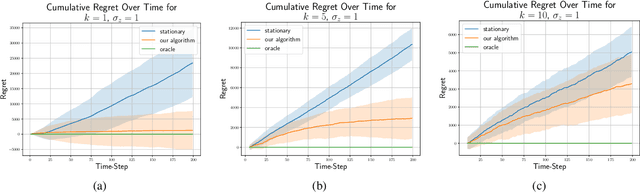

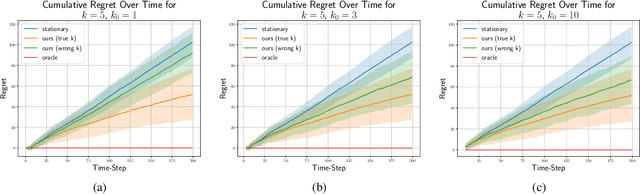

Non-Stationary Latent Auto-Regressive Bandits

Feb 05, 2024

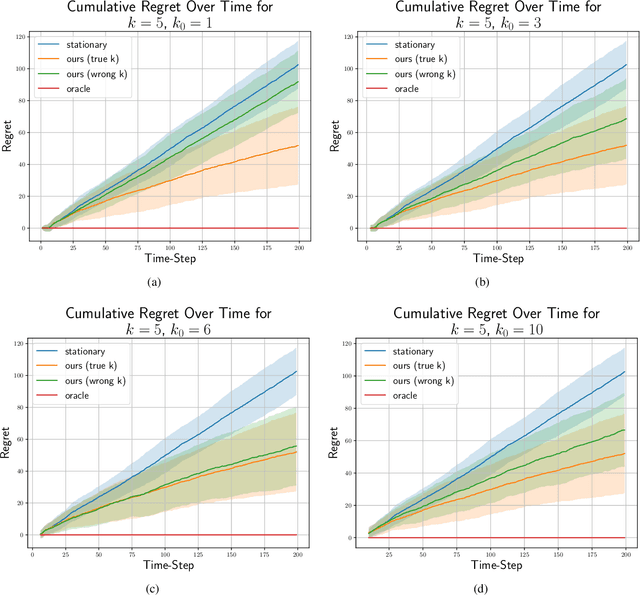

Abstract:We consider the stochastic multi-armed bandit problem with non-stationary rewards. We present a novel formulation of non-stationarity in the environment where changes in the mean reward of the arms over time are due to some unknown, latent, auto-regressive (AR) state of order $k$. We call this new environment the latent AR bandit. Different forms of the latent AR bandit appear in many real-world settings, especially in emerging scientific fields such as behavioral health or education where there are few mechanistic models of the environment. If the AR order $k$ is known, we propose an algorithm that achieves $\tilde{O}(k\sqrt{T})$ regret in this setting. Empirically, our algorithm outperforms standard UCB across multiple non-stationary environments, even if $k$ is mis-specified.
