Sonali Parbhoo

Department of Engineering, Imperial College London

Causal Bayesian Optimization with Unknown Graphs

Mar 25, 2025

Abstract: Causal Bayesian Optimization (CBO) is a methodology designed to optimize an outcome variable by leveraging known causal relationships through targeted interventions. Traditional CBO methods require a fully and accurately specified causal graph, which is a limitation in many real-world scenarios where such graphs are unknown. To address this, we propose a new method for the CBO framework that operates without prior knowledge of the causal graph. Consistent with causal bandit theory, we demonstrate through theoretical analysis that focusing on the direct causal parents of the target variable is sufficient for optimization, and provide empirical validation in the context of CBO. Furthermore, we introduce a new method that learns a Bayesian posterior over the direct parents of the target variable. This allows us to optimize the outcome variable while simultaneously learning the causal structure. Our contributions include a derivation of the closed-form posterior distribution for the linear case. In the nonlinear case where the posterior is not tractable, we present a Gaussian Process (GP) approximation that still enables CBO by inferring the parents of the outcome variable. The proposed method performs competitively with existing benchmarks and scales well to larger graphs, making it a practical tool for real-world applications where causal information is incomplete.

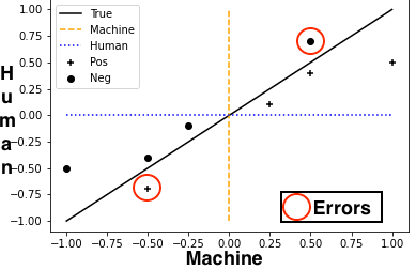

Feature Importance Depends on Properties of the Data: Towards Choosing the Correct Explanations for Your Data and Decision Trees based Models

Feb 11, 2025

Abstract: To ensure the reliability of explanations of machine learning models, it is crucial to establish the advantages and limits of explanation methods and the cases in which each method outperforms the others. However, the current understanding of when and how each method of explanation can be used is insufficient. To fill this gap, we perform a comprehensive empirical evaluation by synthesizing multiple datasets with the desired properties. Our main objective is to assess the quality of feature importance estimates provided by local explanation methods, which are used to explain predictions made by decision tree-based models. By analyzing the results obtained from synthetic datasets as well as publicly available binary classification datasets, we observe notable disparities in the magnitude and sign of the feature importance estimates generated by these methods. Moreover, we find that these estimates are sensitive to specific properties present in the data. Although some model hyper-parameters do not significantly influence feature importance assignment, it is important to recognize that each method of explanation has limitations in specific contexts. Our assessment highlights these limitations and provides valuable insight into the suitability and reliability of different explanatory methods in various scenarios.

Concept-driven Off Policy Evaluation

Nov 28, 2024

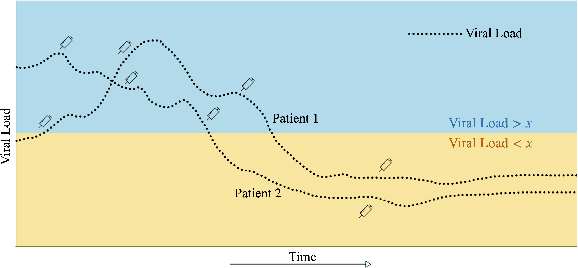

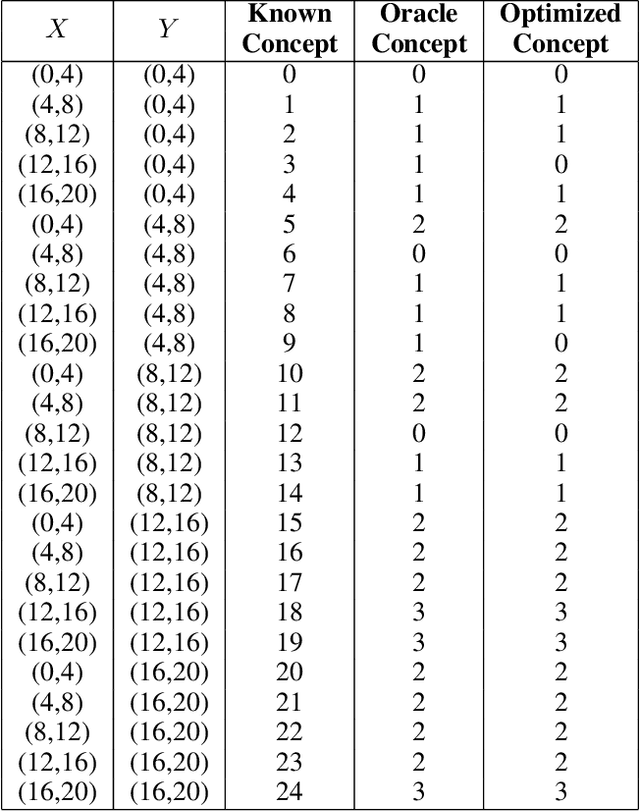

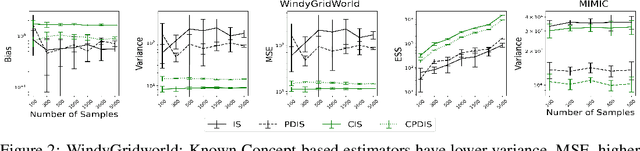

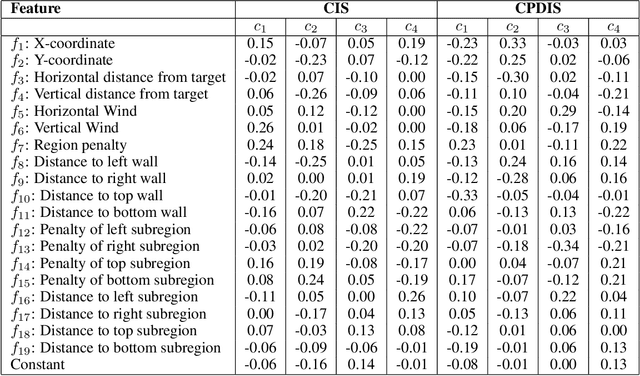

Abstract:Evaluating off-policy decisions using batch data poses significant challenges due to limited sample sizes leading to high variance. To improve Off-Policy Evaluation (OPE), we must identify and address the sources of this variance. Recent research on Concept Bottleneck Models (CBMs) shows that using human-explainable concepts can improve predictions and provide better understanding. We propose incorporating concepts into OPE to reduce variance. Our work introduces a family of concept-based OPE estimators, proving that they remain unbiased and reduce variance when concepts are known and predefined. Since real-world applications often lack predefined concepts, we further develop an end-to-end algorithm to learn interpretable, concise, and diverse parameterized concepts optimized for variance reduction. Our experiments with synthetic and real-world datasets show that both known and learned concept-based estimators significantly improve OPE performance. Crucially, we show that, unlike other OPE methods, concept-based estimators are easily interpretable and allow for targeted interventions on specific concepts, further enhancing the quality of these estimators.
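To make the variance problem mentioned in this abstract concrete, the following is a minimal sketch of the standard trajectory-wise importance sampling (IS) estimator for OPE. It is not the concept-based estimator proposed in the paper; the function name, the tabular policy arrays `pi_e` and `pi_b`, and the trajectory layout are all assumptions made for illustration. The product of per-step probability ratios is what tends to inflate variance when batch data is limited.

```python
import numpy as np

def importance_sampling_estimate(trajectories, pi_e, pi_b, gamma=1.0):
    """Standard trajectory-wise IS estimate of the evaluation policy's value.

    trajectories: list of trajectories, each a list of (state, action, reward)
    pi_e, pi_b:   arrays of shape (n_states, n_actions) holding action
                  probabilities for the evaluation and behavior policies
                  (hypothetical tabular representation)
    Returns (mean, sample variance) of the weighted returns.
    """
    weighted_returns = []
    for traj in trajectories:
        weight = 1.0   # cumulative product of probability ratios
        ret = 0.0      # discounted return of this trajectory
        for t, (s, a, r) in enumerate(traj):
            weight *= pi_e[s, a] / pi_b[s, a]
            ret += (gamma ** t) * r
        weighted_returns.append(weight * ret)
    weighted_returns = np.asarray(weighted_returns)
    return weighted_returns.mean(), weighted_returns.var(ddof=1)

# Tiny illustrative example: one state, two actions.
pi_b = np.array([[0.5, 0.5]])   # behavior policy is uniform
pi_e = np.array([[1.0, 0.0]])   # evaluation policy always takes action 0
batch = [[(0, 0, 1.0)], [(0, 1, 0.0)]]
estimate, variance = importance_sampling_estimate(batch, pi_e, pi_b)
```

With longer horizons the weights are products of many ratios, so a few trajectories can dominate the estimate; this is the kind of variance the abstract sets out to reduce.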

Inverse Transition Learning: Learning Dynamics from Demonstrations

Nov 07, 2024

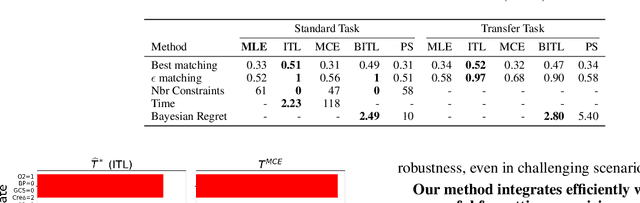

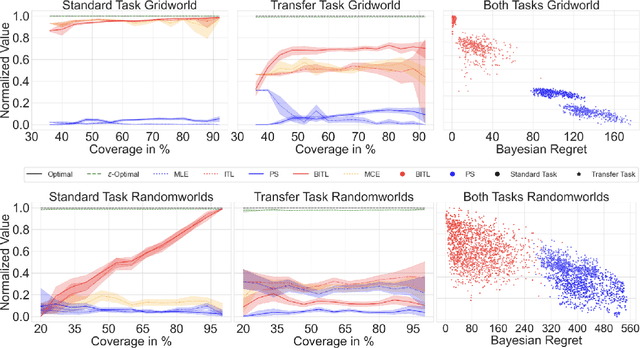

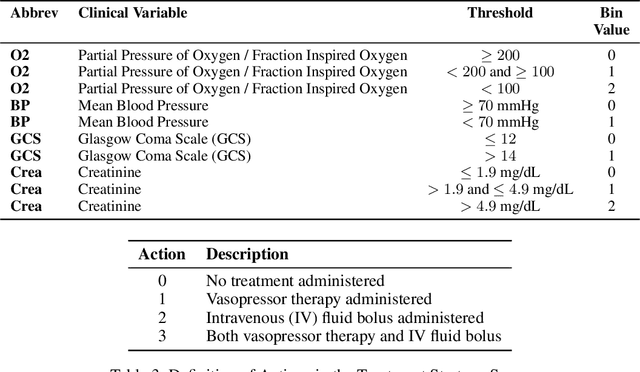

Abstract: We consider the problem of estimating the transition dynamics $T^*$ from near-optimal expert trajectories in the context of offline model-based reinforcement learning. We develop a novel constraint-based method, Inverse Transition Learning, that treats the limited coverage of the expert trajectories as a *feature*: we use the fact that the expert is near-optimal to inform our estimate of $T^*$. We integrate our constraints into a Bayesian approach. Across both synthetic environments and real healthcare scenarios like Intensive Care Unit (ICU) patient management in hypotension, we demonstrate not only significant improvements in decision-making, but also that our posterior can inform when transfer will be successful.

Insights from the Inverse: Reconstructing LLM Training Goals Through Inverse RL

Oct 16, 2024

Abstract:Large language models (LLMs) trained with Reinforcement Learning from Human Feedback (RLHF) have demonstrated remarkable capabilities, but their underlying reward functions and decision-making processes remain opaque. This paper introduces a novel approach to interpreting LLMs by applying inverse reinforcement learning (IRL) to recover their implicit reward functions. We conduct experiments on toxicity-aligned LLMs of varying sizes, extracting reward models that achieve up to 80.40% accuracy in predicting human preferences. Our analysis reveals key insights into the non-identifiability of reward functions, the relationship between model size and interpretability, and potential pitfalls in the RLHF process. We demonstrate that IRL-derived reward models can be used to fine-tune new LLMs, resulting in comparable or improved performance on toxicity benchmarks. This work provides a new lens for understanding and improving LLM alignment, with implications for the responsible development and deployment of these powerful systems.

Decision-Point Guided Safe Policy Improvement

Oct 12, 2024

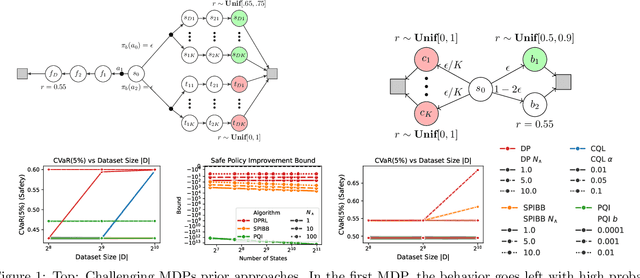

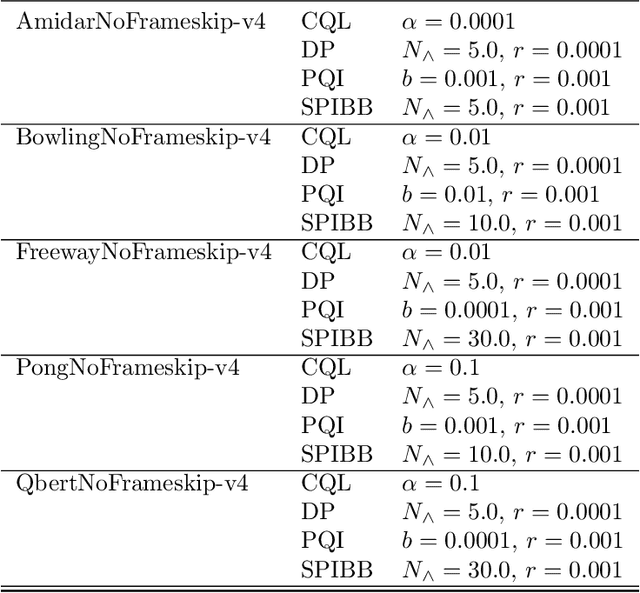

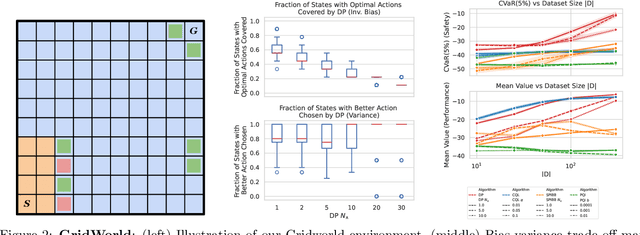

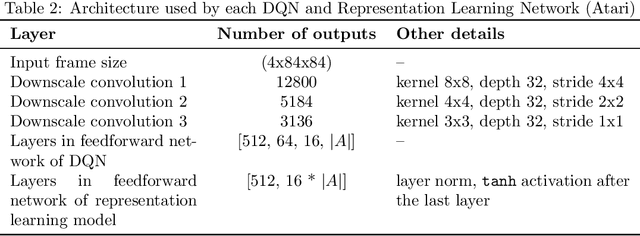

Abstract:Within batch reinforcement learning, safe policy improvement (SPI) seeks to ensure that the learnt policy performs at least as well as the behavior policy that generated the dataset. The core challenge in SPI is seeking improvements while balancing risk when many state-action pairs may be infrequently visited. In this work, we introduce Decision Points RL (DPRL), an algorithm that restricts the set of state-action pairs (or regions for continuous states) considered for improvement. DPRL ensures high-confidence improvement in densely visited states (i.e. decision points) while still utilizing data from sparsely visited states. By appropriately limiting where and how we may deviate from the behavior policy, we achieve tighter bounds than prior work; specifically, our data-dependent bounds do not scale with the size of the state and action spaces. In addition to the analysis, we demonstrate that DPRL is both safe and performant on synthetic and real datasets.
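The idea of only deviating from the behavior policy where data is dense can be sketched in a few lines. This is a simplified tabular illustration under stated assumptions, not the DPRL algorithm from the paper: the function name, the count threshold `n_min`, the deterministic behavior policy, and the use of plain point estimates `q_hat` instead of high-confidence bounds are all hypothetical choices.

```python
import numpy as np

def restricted_improvement(q_hat, counts, behavior, n_min):
    """Deviate from the behavior policy only at well-observed state-action pairs.

    q_hat:    (n_states, n_actions) estimated action values
    counts:   (n_states, n_actions) visit counts from the batch dataset
    behavior: (n_states,) action taken by a deterministic behavior policy
    n_min:    minimum count for a pair to be considered (assumed threshold)
    """
    policy = behavior.copy()
    for s in range(q_hat.shape[0]):
        b = behavior[s]
        # Without a reliable estimate of the behavior action there is
        # nothing trustworthy to compare against, so keep the behavior action.
        if counts[s, b] < n_min:
            continue
        # Only actions seen at least n_min times in this state are candidates.
        candidates = np.flatnonzero(counts[s] >= n_min)
        best = candidates[np.argmax(q_hat[s, candidates])]
        if q_hat[s, best] > q_hat[s, b]:
            policy[s] = best
    return policy

# Illustrative data: 3 states, 2 actions.
q_hat = np.array([[1.0, 2.0], [0.0, 5.0], [3.0, 1.0]])
counts = np.array([[50, 40], [50, 2], [30, 30]])
behavior = np.array([0, 0, 0])
new_policy = restricted_improvement(q_hat, counts, behavior, n_min=10)
# State 0 switches to action 1; state 1 keeps the behavior action because
# its apparently better action was seen only twice; state 2 is unchanged.
```

A faithful implementation would replace the raw comparison of `q_hat` values with the confidence bounds analyzed in the paper and would handle continuous states through regions rather than table rows.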

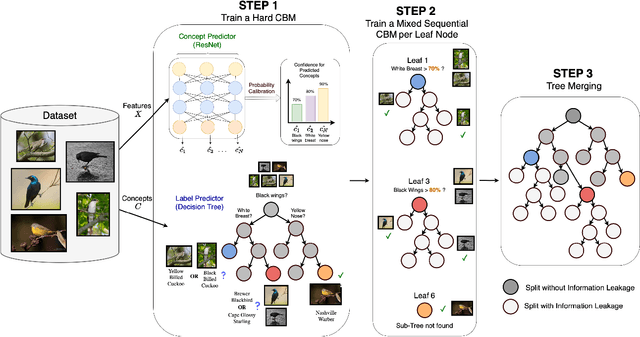

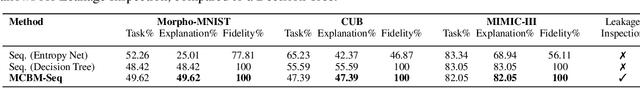

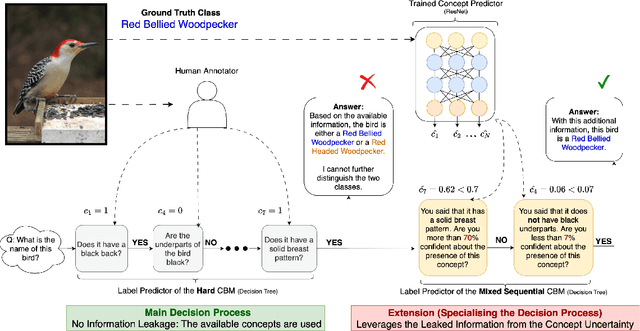

Tree-Based Leakage Inspection and Control in Concept Bottleneck Models

Oct 08, 2024

Abstract:As AI models grow larger, the demand for accountability and interpretability has become increasingly critical for understanding their decision-making processes. Concept Bottleneck Models (CBMs) have gained attention for enhancing interpretability by mapping inputs to intermediate concepts before making final predictions. However, CBMs often suffer from information leakage, where additional input data, not captured by the concepts, is used to improve task performance, complicating the interpretation of downstream predictions. In this paper, we introduce a novel approach for training both joint and sequential CBMs that allows us to identify and control leakage using decision trees. Our method quantifies leakage by comparing the decision paths of hard CBMs with their soft, leaky counterparts. Specifically, we show that soft leaky CBMs extend the decision paths of hard CBMs, particularly in cases where concept information is incomplete. Using this insight, we develop a technique to better inspect and manage leakage, isolating the subsets of data most affected by this. Through synthetic and real-world experiments, we demonstrate that controlling leakage in this way not only improves task accuracy but also yields more informative and transparent explanations.

Towards Integrating Personal Knowledge into Test-Time Predictions

Jun 12, 2024

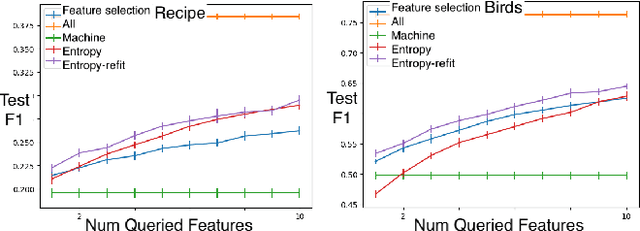

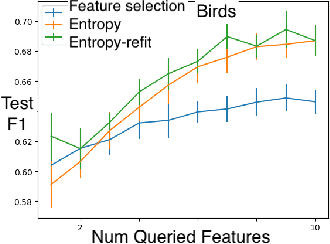

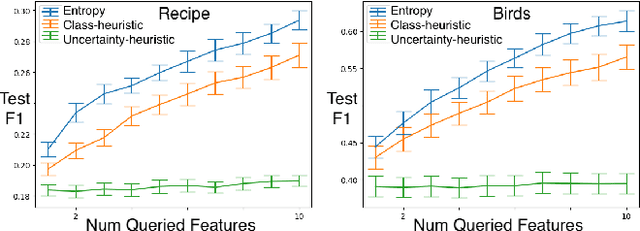

Abstract: Machine learning (ML) models can make decisions based on large amounts of data, but they can be missing personal knowledge available to human users about whom predictions are made. For example, a model trained to predict psychiatric outcomes may know nothing about a patient's social support system, and social support may look different for different patients. In this work, we introduce the problem of human feature integration, which provides a way to incorporate important personal knowledge from users without domain expertise into ML predictions. We characterize this problem through illustrative user stories and comparisons to existing approaches; we formally describe this problem in a way that paves the way for future technical solutions; and we provide a proof-of-concept study of a simple version of a solution to this problem in a semi-realistic setting.

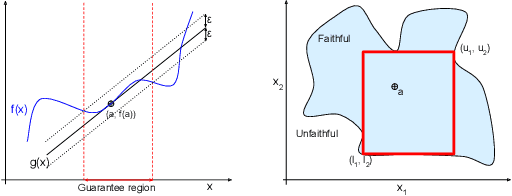

Guarantee Regions for Local Explanations

Feb 20, 2024

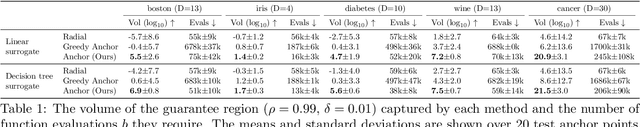

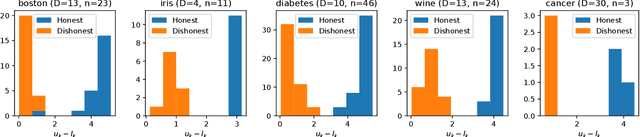

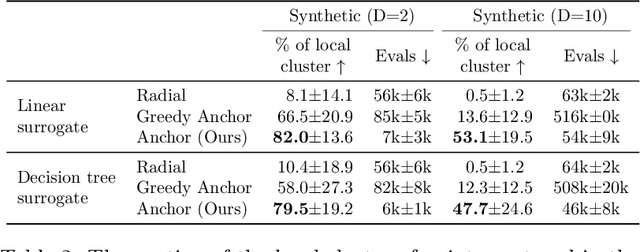

Abstract: Interpretability methods that utilise local surrogate models (e.g. LIME) are very good at describing the behaviour of the predictive model at a point of interest, but they are not guaranteed to extrapolate to the local region surrounding the point. In particular, overfitting to the local curvature of the predictive model and malicious tampering can significantly limit extrapolation. We propose an anchor-based algorithm for identifying regions in which local explanations are guaranteed to be correct by explicitly describing those intervals along which the input features can be trusted. Our method produces an interpretable feature-aligned box where the prediction of the local surrogate model is guaranteed to match the predictive model. We demonstrate that our algorithm can be used to find explanations with larger guarantee regions that better cover the data manifold compared to existing baselines. We also show how our method can identify misleading local explanations with significantly poorer guarantee regions.

Bayesian Inverse Transition Learning for Offline Settings

Aug 09, 2023

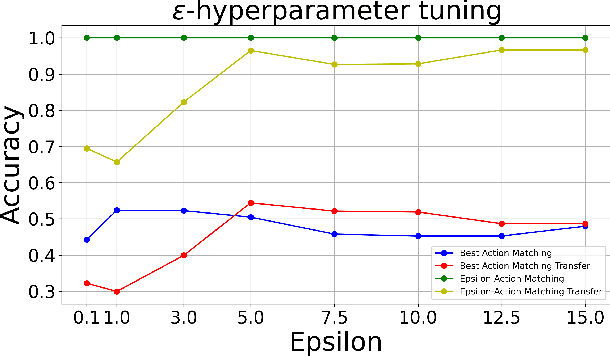

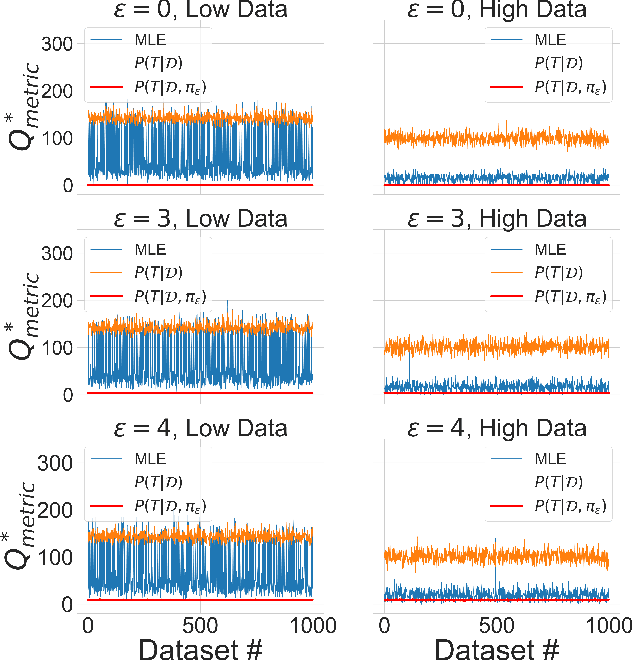

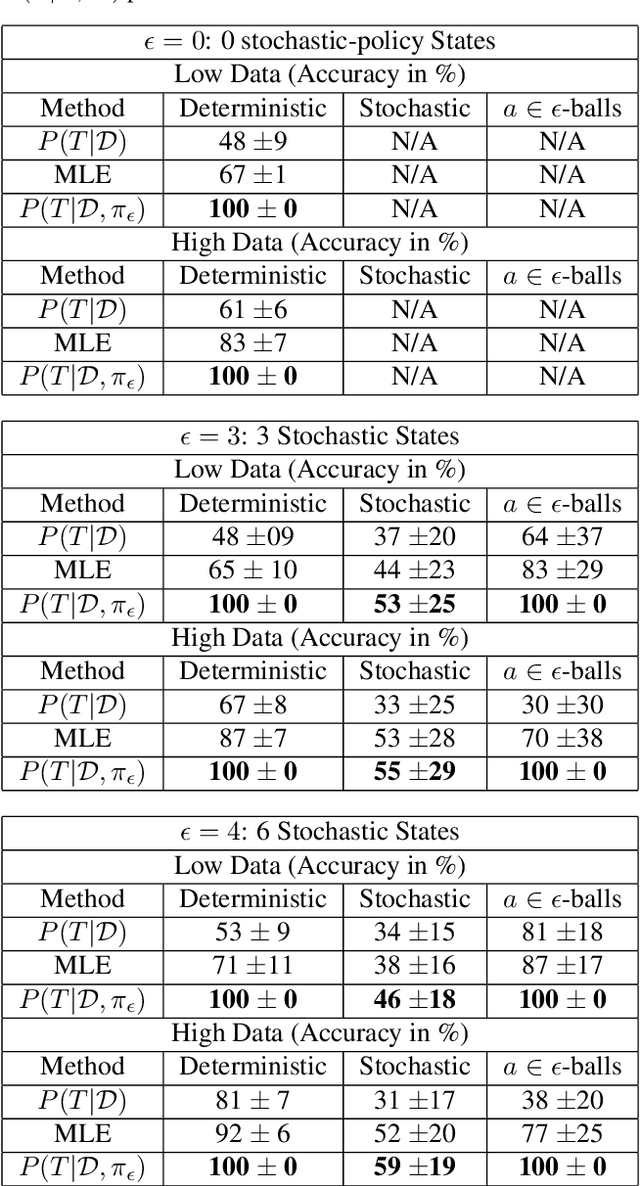

Abstract: Offline reinforcement learning is commonly used for sequential decision-making in domains such as healthcare and education, where the rewards are known and the transition dynamics $T$ must be estimated on the basis of batch data. A key challenge for all tasks is how to learn a reliable estimate of the transition dynamics $T$ that produces near-optimal policies that are safe enough that they never take actions far from the best action with respect to their value functions, and informative enough that they communicate their uncertainties. Using data from an expert, we propose a new gradient-free, constraint-based approach that captures our desiderata for reliably learning a posterior distribution of the transition dynamics $T$. Our results demonstrate that by using our constraints, we learn a high-performing policy, while considerably reducing the policy's variance over different datasets. We also explain how combining uncertainty estimation with these constraints can help us infer a partial ranking of actions that produce higher returns, as well as safer and more informative policies for planning.
