Abhishek Sharma

Image Synthesis Using Spintronic Deep Convolutional Generative Adversarial Network

Jan 04, 2026

Abstract:The computational requirements of generative adversarial networks (GANs) exceed the limits of conventional von Neumann architectures, necessitating energy-efficient alternatives such as neuromorphic spintronics. This work presents a hybrid CMOS-spintronic deep convolutional generative adversarial network (DCGAN) architecture for synthetic image generation. The proposed generative vision model follows the standard framework, leveraging adversarial training of the generator and discriminator with our designed spintronic hardware for the deconvolution, convolution, and activation layers of the DCGAN architecture. To enable a hardware-aware spintronic implementation, the generator's deconvolution layers are restructured as zero-padded convolutions, allowing seamless integration with a 6-bit skyrmion-based synapse in a crossbar without compromising training performance. Nonlinear activation functions are implemented using hybrid CMOS domain-wall-based rectified linear unit (ReLU) and Leaky ReLU units. Our proposed tunable Leaky ReLU employs domain-wall-position-coded continuous resistance states and a piecewise uniaxial parabolic anisotropy profile with a parallel MTJ readout, exhibiting an energy consumption of 0.192 pJ. Our spintronic DCGAN model demonstrates adaptability across both grayscale and colored datasets, achieving Fréchet Inception Distances (FID) of 27.5 for the Fashion MNIST dataset and 45.4 for the Anime Face dataset, with testing energy (training energy) of 4.9 nJ (14.97 nJ/image) and 24.72 nJ (74.7 nJ/image), respectively.
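The restructuring of deconvolution as zero-padded convolution can be illustrated in one dimension. The sketch below is not the authors' hardware mapping; it only checks the standard identity that a strided transposed convolution equals an ordinary stride-1 convolution applied to a zero-inserted, zero-padded input with a flipped kernel. The function names and the test signal are hypothetical.

```python
def transposed_conv1d(x, kernel, stride):
    """Direct transposed convolution: scatter each input sample times the kernel."""
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, xi in enumerate(x):
        for j, kj in enumerate(kernel):
            out[i * stride + j] += xi * kj
    return out


def zero_padded_conv1d(x, kernel, stride):
    """Same result computed as an ordinary stride-1 convolution."""
    # Step 1: insert (stride - 1) zeros between neighbouring input samples.
    dilated = []
    for i, xi in enumerate(x):
        dilated.append(xi)
        if i < len(x) - 1:
            dilated.extend([0.0] * (stride - 1))
    # Step 2: pad (kernel length - 1) zeros on both sides.
    pad = [0.0] * (len(kernel) - 1)
    padded = pad + dilated + pad
    # Step 3: slide the flipped kernel over the padded signal with stride 1.
    flipped = kernel[::-1]
    k = len(kernel)
    return [
        sum(padded[p + j] * flipped[j] for j in range(k))
        for p in range(len(padded) - k + 1)
    ]


x = [1.0, 2.0, 3.0]
kernel = [1.0, 0.5, 0.25]
direct = transposed_conv1d(x, kernel, stride=2)
as_conv = zero_padded_conv1d(x, kernel, stride=2)
```

Because the second form uses only multiply-accumulate operations over a sliding window, it has the same shape as a regular convolution layer, which is presumably what makes it convenient for a crossbar of synaptic weights.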

Advancing Parkinson's Disease Progression Prediction: Comparing Long Short-Term Memory Networks and Kolmogorov-Arnold Networks

Dec 30, 2024

Abstract:Parkinson's Disease (PD) is a degenerative neurological disorder that impairs motor and non-motor functions, significantly reducing quality of life and increasing mortality risk. Early and accurate detection of PD progression is vital for effective management and improved patient outcomes. Current diagnostic methods, however, are often costly, time-consuming, and require specialized equipment and expertise. This work proposes an innovative approach to predicting PD progression using regression methods, Long Short-Term Memory (LSTM) networks, and Kolmogorov-Arnold Networks (KAN). KAN, utilizing spline-parametrized univariate functions, allows for dynamic learning of activation patterns, unlike traditional linear models. The Movement Disorder Society-Sponsored Revision of the Unified Parkinson's Disease Rating Scale (MDS-UPDRS) is a comprehensive tool for evaluating PD symptoms and is commonly used to measure disease progression. Additionally, protein or peptide abnormalities are linked to PD onset and progression. Identifying these associations can aid in predicting disease progression and understanding molecular changes. By comparing multiple models, including LSTM and KAN, this study aims to identify the method that delivers the highest metrics. The analysis reveals that KAN, with its dynamic learning capabilities, outperforms other approaches in predicting PD progression. This research highlights the potential of AI and machine learning in healthcare, paving the way for advanced computational models to enhance clinical predictions and improve patient care and treatment strategies in PD management.

Neural Finite-State Machines for Surgical Phase Recognition

Nov 27, 2024

Abstract:Surgical phase recognition is essential for analyzing procedure-specific surgical videos. While recent transformer-based architectures have advanced sequence processing capabilities, they struggle with maintaining consistency across lengthy surgical procedures. Drawing inspiration from classical hidden Markov models' finite-state interpretations, we introduce the neural finite-state machine (NFSM) module, which bridges procedural understanding with deep learning approaches. NFSM combines procedure-level understanding with neural networks through global state embeddings, attention-based dynamic transition tables, and transition-aware training and inference mechanisms for offline and online applications. When integrated into our future-aware architecture, NFSM improves video-level accuracy, phase-level precision, recall, and Jaccard indices on the Cholec80 dataset by 2.3, 3.2, 3.0, and 4.8 percentage points, respectively. As an add-on module to existing state-of-the-art models like Surgformer, NFSM further enhances performance, demonstrating its complementary value. Extended experiments on non-surgical datasets validate NFSM's generalizability beyond surgical domains. Comprehensive experiments demonstrate that incorporating NFSM into deep learning frameworks enables more robust and consistent phase recognition across long procedural videos.

Deep Learning for Fetal Inflammatory Response Diagnosis in the Umbilical Cord

Nov 14, 2024

Abstract:Inflammation of the umbilical cord can be seen as a result of ascending intrauterine infection or other inflammatory stimuli. Acute fetal inflammatory response (FIR) is characterized by infiltration of the umbilical cord by fetal neutrophils, and can be associated with neonatal sepsis or fetal inflammatory response syndrome. Recent advances in deep learning in digital pathology have demonstrated favorable performance across a wide range of clinical tasks, such as diagnosis and prognosis. In this study we classified FIR from whole slide images (WSI). We digitized 4100 histological slides of umbilical cord stained with hematoxylin and eosin (H&E) and extracted placental diagnoses from the electronic health record. We built models using attention-based whole slide learning. We compared features extracted by a model (ConvNeXtXLarge) pretrained on non-medical images (ImageNet) with features from one pretrained on histopathology images (UNI). We trained multiple iterations of each model and combined them into an ensemble. The predictions from the ensemble of models trained using UNI achieved an overall balanced accuracy of 0.836 on the test dataset. In comparison, the ensembled predictions using ConvNeXtXLarge had a lower balanced accuracy of 0.7209. Heatmaps generated from the top-accuracy model appropriately highlighted arteritis in cases of FIR 2. In FIR 1, the highest-performing model assigned high attention to areas of activated-appearing stroma in Wharton's jelly. However, other high-performing models assigned attention to umbilical vessels. We developed models for diagnosis of FIR from placental histology images, helping reduce interobserver variability among pathologists. Future work may examine the utility of these models for identifying infants at risk of systemic inflammatory response or early-onset neonatal sepsis.
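The abstract does not say which attention mechanism was used. As one common form of attention-based whole slide learning, the sketch below pools per-patch feature vectors into a single slide vector using softmax attention weights, and those same weights are what an attention heatmap would visualise. The projection `V`, the scoring vector `w`, and the toy patch features are all hypothetical stand-ins for learned parameters and extracted embeddings.

```python
import math


def attention_pool(patch_features, V, w):
    """Pool patch embeddings into one slide embedding with softmax attention.

    score_k  = w . tanh(V h_k)
    weight_k = softmax over patches of score_k
    slide    = sum_k weight_k * h_k
    """
    scores = []
    for h in patch_features:
        hidden = [math.tanh(sum(v * x for v, x in zip(row, h))) for row in V]
        scores.append(sum(wi * ui for wi, ui in zip(w, hidden)))
    # Numerically stable softmax over the patch scores.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(patch_features[0])
    slide = [
        sum(a * h[d] for a, h in zip(weights, patch_features)) for d in range(dim)
    ]
    return slide, weights


# Toy example: three patches with two-dimensional features.
patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical learned projection
w = [1.0, 1.0]                # hypothetical learned scoring vector
slide_vec, attn = attention_pool(patches, V, w)
```

In this toy setting the third patch receives the largest weight because both of its features activate the scoring vector; in a trained model the weights would instead reflect which tissue regions the classifier relies on.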

Inverse Transition Learning: Learning Dynamics from Demonstrations

Nov 07, 2024

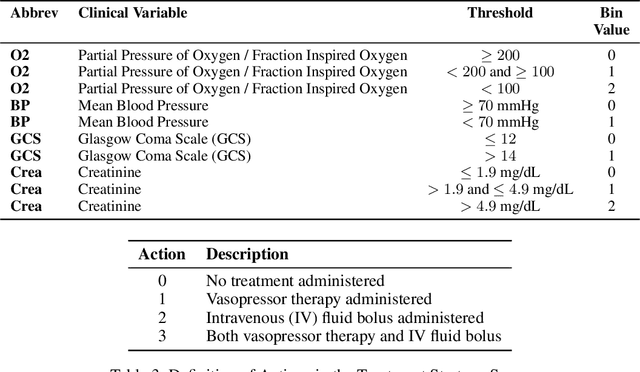

Abstract:We consider the problem of estimating the transition dynamics $T^*$ from near-optimal expert trajectories in the context of offline model-based reinforcement learning. We develop a novel constraint-based method, Inverse Transition Learning, that treats the limited coverage of the expert trajectories as a *feature*: we use the fact that the expert is near-optimal to inform our estimate of $T^*$. We integrate our constraints into a Bayesian approach. Across both synthetic environments and real healthcare scenarios like Intensive Care Unit (ICU) patient management in hypotension, we demonstrate not only significant improvements in decision-making, but that our posterior can inform when transfer will be successful.

Machine learning identification of maternal inflammatory response and histologic chorioamnionitis from placental membrane whole slide images

Nov 04, 2024

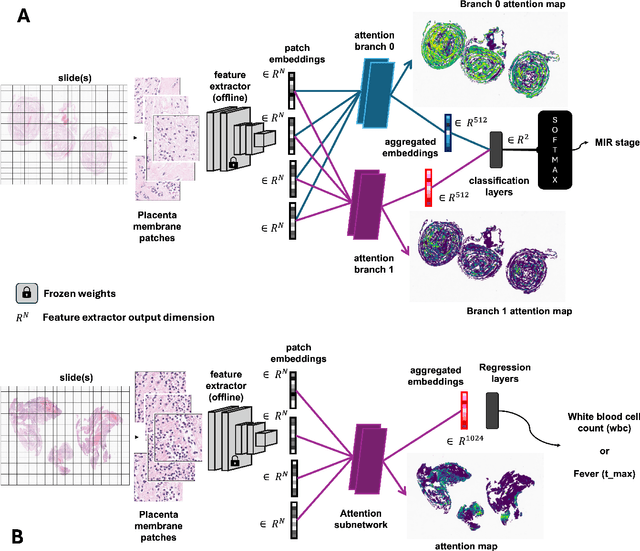

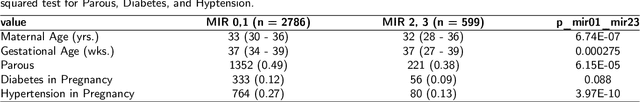

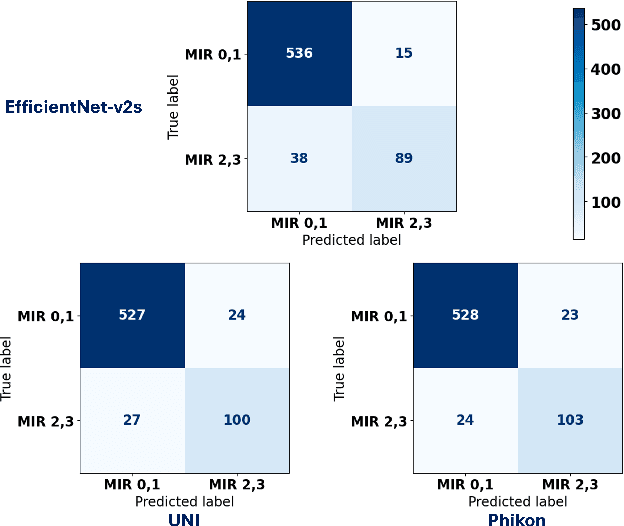

Abstract:The placenta forms a critical barrier to infection through pregnancy, labor, and delivery. Inflammatory processes in the placenta have short-term and long-term consequences for offspring health. Digital pathology and machine learning can play an important role in understanding placental inflammation, yet there have been very few investigations into methods for predicting and understanding Maternal Inflammatory Response (MIR). This work investigates the potential of using machine learning to understand MIR based on whole slide images (WSI), and establishes early benchmarks. To that end, we use a Multiple Instance Learning (MIL) framework with 3 feature extractors, the ImageNet-based EfficientNet-v2s and 2 histopathology foundation models, UNI and Phikon, to investigate the predictability of MIR stage from histopathology WSIs. We also interpret predictions from these models using their learned attention maps, and use the MIL framework to predict white blood cell count (WBC) and maximum fever temperature ($T_{max}$). Attention-based MIL models are able to classify MIR with a balanced accuracy of up to 88.5% with a Cohen's Kappa ($\kappa$) of up to 0.772. Furthermore, we found that the pathology foundation models (UNI and Phikon) both achieve higher balanced accuracy and $\kappa$ than the ImageNet-based feature extractor (EfficientNet-v2s). For WBC and $T_{max}$ prediction, we found mild correlation between actual values and those predicted from histopathology WSIs. We used the MIL framework for predicting MIR stage from WSIs, and compared the effectiveness of foundation models as feature extractors with that of an ImageNet-based model. We further investigated model failure cases and found them to be either edge cases prone to interobserver variability, examples of pathologist's overreach, or mislabeled due to processing errors.
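For readers unfamiliar with the two headline metrics, the following sketch computes balanced accuracy (the mean of per-class recalls) and Cohen's $\kappa$ (agreement corrected for chance) from their standard definitions. The label lists are made-up toy data, not results from the paper, and the code assumes at least two distinct classes so that chance agreement is below 1.

```python
from collections import Counter


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recalls, so rare classes count as much as common ones."""
    recalls = []
    for c in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == c]
        recalls.append(sum(y_pred[i] == c for i in idx) / len(idx))
    return sum(recalls) / len(recalls)


def cohens_kappa(y_true, y_pred):
    """(observed agreement - chance agreement) / (1 - chance agreement)."""
    n = len(y_true)
    p_observed = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    # Chance agreement from the product of the two marginal label frequencies.
    p_chance = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    return (p_observed - p_chance) / (1 - p_chance)


# Toy labels: four samples of class 0 and two of class 1.
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 1, 1, 0]
bal_acc = balanced_accuracy(y_true, y_pred)  # (3/4 + 1/2) / 2 = 0.625
kappa = cohens_kappa(y_true, y_pred)         # (4/6 - 20/36) / (1 - 20/36) = 0.25
```

Both metrics are less flattering than plain accuracy on imbalanced data, which is why they are commonly reported for staging tasks where some stages are rare.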

Decision-Point Guided Safe Policy Improvement

Oct 12, 2024

Abstract:Within batch reinforcement learning, safe policy improvement (SPI) seeks to ensure that the learnt policy performs at least as well as the behavior policy that generated the dataset. The core challenge in SPI is seeking improvements while balancing risk when many state-action pairs may be infrequently visited. In this work, we introduce Decision Points RL (DPRL), an algorithm that restricts the set of state-action pairs (or regions for continuous states) considered for improvement. DPRL ensures high-confidence improvement in densely visited states (i.e. decision points) while still utilizing data from sparsely visited states. By appropriately limiting where and how we may deviate from the behavior policy, we achieve tighter bounds than prior work; specifically, our data-dependent bounds do not scale with the size of the state and action spaces. In addition to the analysis, we demonstrate that DPRL is both safe and performant on synthetic and real datasets.
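The idea of restricting improvement to densely visited state-action pairs can be sketched with a simple count threshold. This is a deliberately simplified illustration and not the DPRL algorithm itself: the threshold `n_min`, the value estimates `q_hat`, and the rule of deferring to the behavior policy everywhere else are assumptions made for the example.

```python
def restricted_policy(counts, q_hat, behavior_action, n_min):
    """Deviate from the behavior policy only at well-supported state-action pairs.

    counts[(s, a)]      number of times action a was taken in state s
    q_hat[(s, a)]       estimated value of taking a in s
    behavior_action[s]  action the behavior policy takes in s
    n_min               minimum visit count for a pair to be considered
    """
    policy = {}
    for state, default_action in behavior_action.items():
        best_action = default_action
        best_value = q_hat[(state, default_action)]
        for (s, a), n in counts.items():
            if s != state or n < n_min:
                continue  # skip other states and sparsely visited pairs
            if q_hat[(s, a)] > best_value:
                best_action, best_value = a, q_hat[(s, a)]
        policy[state] = best_action
    return policy


counts = {("s0", "a"): 50, ("s0", "b"): 40, ("s1", "a"): 30, ("s1", "b"): 2}
q_hat = {("s0", "a"): 1.0, ("s0", "b"): 1.5, ("s1", "a"): 0.2, ("s1", "b"): 9.0}
behavior = {"s0": "a", "s1": "a"}
policy = restricted_policy(counts, q_hat, behavior, n_min=10)
```

In state `s1` the large estimate for action `b` rests on only two visits, so the sketch keeps the behavior action there; in `s0` both actions are well supported and the policy switches to the better one.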

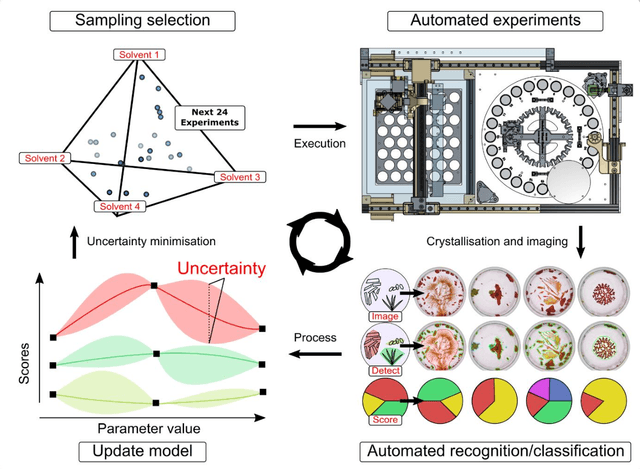

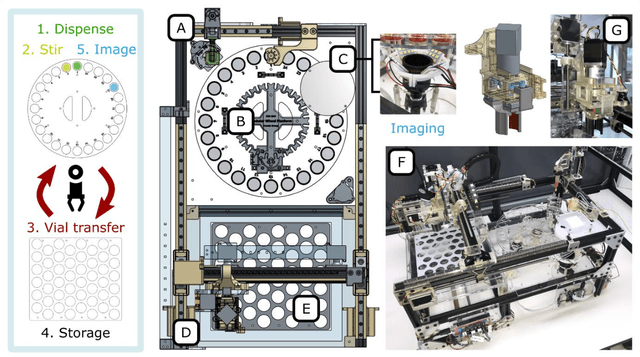

AI-Driven Robotic Crystal Explorer for Rapid Polymorph Identification

Sep 08, 2024

Abstract:Crystallisation is an important phenomenon which facilitates purification as well as structural and bulk phase material characterisation using crystallographic methods. However, different conditions can lead to a vast set of different crystal structure polymorphs, and these often exhibit different physical properties, allowing materials to be tailored to specific purposes. The high dimensionality that results from variations in the conditions which affect crystallisation, and the interactions between them, means that exhaustive exploration is difficult, time-consuming, and costly. Herein we present a robotic crystal search engine for automated, efficient, high-throughput exploration of crystallisation conditions. The system comprises a closed-loop computer crystal-vision system that uses machine learning to both identify crystals and classify their identity in a multiplexed robotic platform. By exploring the formation of a well-known polymorph, we were able to show how a robotic system could be used to efficiently search experimental space as a function of relative polymorph amount and efficiently create a high-dimensionality phase diagram with minimal experimental budget and without expensive analytical techniques such as crystallography. In this way, we identify the set of polymorphs possible within a set of experimental conditions, as well as the optimal values of these conditions to grow each polymorph.

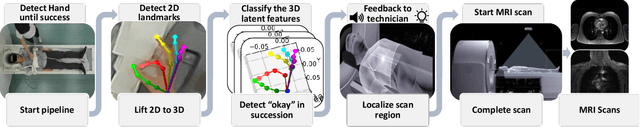

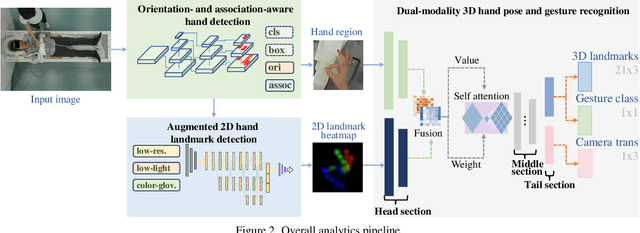

Automated Patient Positioning with Learned 3D Hand Gestures

Jul 20, 2024

Abstract:Positioning patients for scanning and interventional procedures is a critical task that requires high precision and accuracy. The conventional workflow involves manually adjusting the patient support to align the center of the target body part with the laser projector or other guiding devices. This process is not only time-consuming but also prone to inaccuracies. In this work, we propose an automated patient positioning system that utilizes a camera to detect specific hand gestures from technicians, allowing users to indicate the target patient region to the system and initiate automated positioning. Our approach relies on a novel multi-stage pipeline to recognize and interpret the technicians' gestures, translating them into precise motions of medical devices. We evaluate our proposed pipeline during actual MRI scanning procedures, using RGB-Depth cameras to capture the process. Results show that our system achieves accurate and precise patient positioning with minimal technician intervention. Furthermore, we validate our method on HaGRID, a large-scale hand gesture dataset, demonstrating its effectiveness in hand detection and gesture recognition.

Divide and Fuse: Body Part Mesh Recovery from Partially Visible Human Images

Jul 12, 2024

Abstract:We introduce a novel bottom-up approach for human body mesh reconstruction, specifically designed to address the challenges posed by partial visibility and occlusion in input images. Traditional top-down methods, relying on whole-body parametric models like SMPL, falter when only a small part of the human is visible, as they require visibility of most of the human body for accurate mesh reconstruction. To overcome this limitation, our method employs a "Divide and Fuse (D&F)" strategy, reconstructing human body parts independently before fusing them, thereby ensuring robustness against occlusions. We design Human Part Parametric Models (HPPM) that independently reconstruct the mesh from a few shape and global-location parameters, without inter-part dependency. A specially designed fusion module then seamlessly integrates the reconstructed parts, even when only a few are visible. We harness a large volume of ground-truth SMPL data to train our parametric mesh models. To facilitate the training and evaluation of our method, we have established benchmark datasets featuring images of partially visible humans with HPPM annotations. Our experiments, conducted on these benchmark datasets, demonstrate the effectiveness of our D&F method, particularly in scenarios with substantial invisibility, where traditional approaches struggle to maintain reconstruction quality.
