Seongmin Park

MergeRec: Model Merging for Data-Isolated Cross-Domain Sequential Recommendation

Jan 05, 2026

Abstract:Modern recommender systems trained on domain-specific data often struggle to generalize across multiple domains. Cross-domain sequential recommendation has emerged as a promising research direction to address this challenge; however, existing approaches face fundamental limitations, such as reliance on overlapping users or items across domains, or unrealistic assumptions that ignore privacy constraints. In this work, we propose a new framework, MergeRec, based on model merging under a new and realistic problem setting termed data-isolated cross-domain sequential recommendation, where raw user interaction data cannot be shared across domains. MergeRec consists of three key components: (1) merging initialization, (2) pseudo-user data construction, and (3) collaborative merging optimization. First, we initialize a merged model using training-free merging techniques. Next, we construct pseudo-user data by treating each item as a virtual sequence in each domain, enabling the synthesis of meaningful training samples without relying on real user interactions. Finally, we optimize domain-specific merging weights through a joint objective that combines a recommendation loss, which encourages the merged model to identify relevant items, and a distillation loss, which transfers collaborative filtering signals from the fine-tuned source models. Extensive experiments demonstrate that MergeRec not only preserves the strengths of the original models but also significantly enhances generalizability to unseen domains. Compared to conventional model merging methods, MergeRec consistently achieves superior performance, with average improvements of up to 17.21% in Recall@10, highlighting the potential of model merging as a scalable and effective approach for building universal recommender systems. The source code is available at https://github.com/DIALLab-SKKU/MergeRec.
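As a rough illustration of what "merging weights" can mean in model merging, the sketch below applies a generic weighted task-vector merge: each fine-tuned model contributes its difference from a shared base, scaled by a per-domain weight. This is an assumption for illustration only. The abstract does not state the exact merge formula, and the shared base model, the `merge_models` helper, and the toy parameter dictionaries are all hypothetical.

```python
from typing import Dict, List

# Hypothetical parameter container: parameter name -> flat list of values.
Params = Dict[str, List[float]]


def merge_models(base: Params, finetuned: List[Params], weights: List[float]) -> Params:
    """Weighted task-vector merge: base + sum_k w_k * (theta_k - base).

    Assumes every fine-tuned model shares the base model's parameter names
    and shapes. This is a generic merging rule, not MergeRec's own.
    """
    if len(finetuned) != len(weights):
        raise ValueError("one weight per fine-tuned model is required")
    merged: Params = {}
    for name, base_values in base.items():
        merged_values = list(base_values)
        for model, w in zip(finetuned, weights):
            for i, value in enumerate(model[name]):
                # Add the scaled difference between this domain model and the base.
                merged_values[i] += w * (value - base_values[i])
        merged[name] = merged_values
    return merged


# Toy example with two single-domain models and equal merging weights.
base = {"emb": [0.0, 1.0]}
domain_a = {"emb": [1.0, 1.0]}
domain_b = {"emb": [0.0, 3.0]}
merged = merge_models(base, [domain_a, domain_b], [0.5, 0.5])
```

In a learned-merging setting, the entries of `weights` would be the quantities tuned by an objective such as the recommendation and distillation losses the abstract mentions, while the source model parameters stay fixed.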

Enhancing Time Awareness in Generative Recommendation

Sep 17, 2025

Abstract:Generative recommendation has emerged as a promising paradigm that formulates the recommendations into a text-to-text generation task, harnessing the vast knowledge of large language models. However, existing studies focus on considering the sequential order of items and neglect to handle the temporal dynamics across items, which can imply evolving user preferences. To address this limitation, we propose a novel model, Generative Recommender Using Time awareness (GRUT), effectively capturing hidden user preferences via various temporal signals. We first introduce Time-aware Prompting, consisting of two key contexts. The user-level temporal context models personalized temporal patterns across timestamps and time intervals, while the item-level transition context provides transition patterns across users. We also devise Trend-aware Inference, a training-free method that enhances rankings by incorporating trend information about items with generation likelihood. Extensive experiments demonstrate that GRUT outperforms state-of-the-art models, with gains of up to 15.4% and 14.3% in Recall@5 and NDCG@5 across four benchmark datasets. The source code is available at https://github.com/skleee/GRUT.

CHILL at SemEval-2025 Task 2: You Can't Just Throw Entities and Hope -- Make Your LLM to Get Them Right

Jun 16, 2025

Abstract:In this paper, we describe our approach for the SemEval 2025 Task 2 on Entity-Aware Machine Translation (EA-MT). Our system aims to improve the accuracy of translating named entities by combining two key approaches: Retrieval Augmented Generation (RAG) and iterative self-refinement techniques using Large Language Models (LLMs). A distinctive feature of our system is its self-evaluation mechanism, where the LLM assesses its own translations based on two key criteria: the accuracy of entity translations and overall translation quality. We demonstrate how these methods work together and effectively improve entity handling while maintaining high-quality translations.

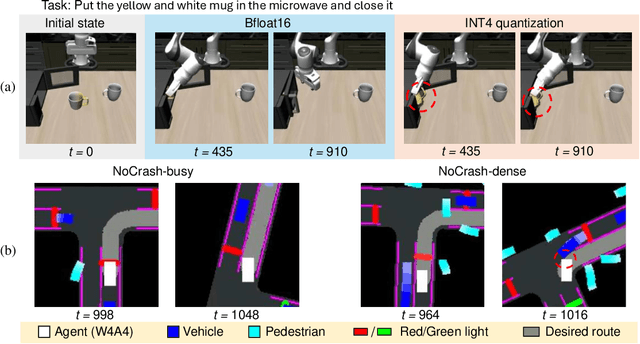

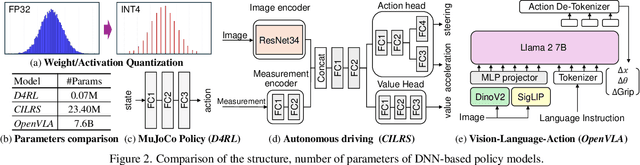

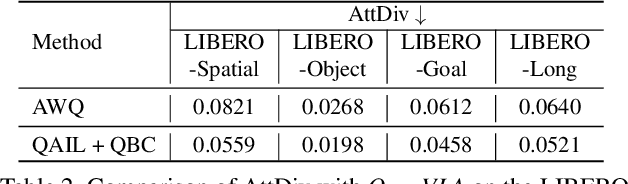

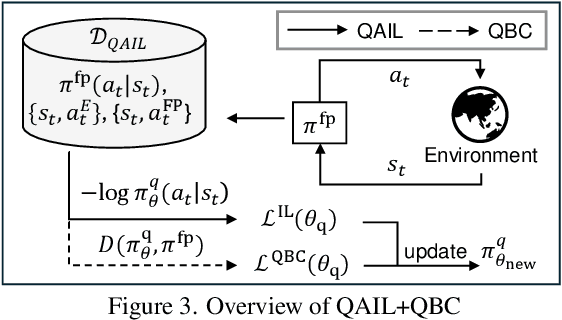

Saliency-Aware Quantized Imitation Learning for Efficient Robotic Control

May 21, 2025

Abstract:Deep neural network (DNN)-based policy models, such as vision-language-action (VLA) models, excel at automating complex decision-making from multi-modal inputs. However, scaling these models greatly increases computational overhead, complicating deployment in resource-constrained settings like robot manipulation and autonomous driving. To address this, we propose Saliency-Aware Quantized Imitation Learning (SQIL), which combines quantization-aware training with a selective loss-weighting strategy for mission-critical states. By identifying these states via saliency scores and emphasizing them in the training loss, SQIL preserves decision fidelity under low-bit precision. We validate SQIL's generalization capability across extensive simulation benchmarks with environment variations, real-world tasks, and cross-domain tasks (self-driving, physics simulation), consistently recovering full-precision performance. Notably, a 4-bit weight-quantized VLA model for robotic manipulation achieves up to 2.5x speedup and 2.5x energy savings on an edge GPU with minimal accuracy loss. These results underline SQIL's potential for efficiently deploying large IL-based policy models on resource-limited devices.
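To make "4-bit weight quantization" concrete, here is a minimal sketch of symmetric uniform fake quantization: weights are rounded to a small signed integer grid and mapped back to floats. This quantize-then-dequantize step is commonly used in the forward pass of quantization-aware training. It is an illustrative assumption, not SQIL's actual quantizer, and the `fake_quantize` helper and sample weights are hypothetical.

```python
from typing import List


def fake_quantize(weights: List[float], bits: int = 4) -> List[float]:
    """Symmetric uniform quantize-then-dequantize of a weight vector."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit signed integers
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0.0:
        return [0.0 for _ in weights]
    scale = max_abs / qmax  # float step size between adjacent integer levels
    # Round to the nearest integer level and clamp to [-qmax, qmax].
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    # Map the integer levels back to floats so downstream code is unchanged.
    return [q * scale for q in quantized]


weights = [0.7, -0.35, 0.12, 0.0]
dequantized = fake_quantize(weights, bits=4)
```

With this scheme each weight moves by at most half a quantization step, and the whole vector uses at most 15 distinct values at 4 bits.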

Linear Item-Item Model with Neural Knowledge for Session-based Recommendation

Apr 21, 2025

Abstract:Session-based recommendation (SBR) aims to predict users' subsequent actions by modeling short-term interactions within sessions. Existing neural models primarily focus on capturing complex dependencies for sequential item transitions. As an alternative solution, linear item-item models mainly identify strong co-occurrence patterns across items and support faster inference speed. Although each paradigm has been actively studied in SBR, their fundamental differences in capturing item relationships and how to bridge these distinct modeling paradigms effectively remain unexplored. In this paper, we propose a novel SBR model, namely Linear Item-Item model with Neural Knowledge (LINK), which integrates both types of knowledge into a unified linear framework. Specifically, we design two specialized components of LINK: (i) Linear knowledge-enhanced Item-item Similarity model (LIS), which refines the item similarity correlation via self-distillation, and (ii) Neural knowledge-enhanced Item-item Transition model (NIT), which seamlessly incorporates complicated neural knowledge distilled from the off-the-shelf neural model. Extensive experiments demonstrate that LINK outperforms state-of-the-art linear SBR models across six real-world datasets, achieving improvements of up to 14.78% and 11.04% in Recall@20 and MRR@20 while showing up to 813x fewer inference FLOPs. Our code is available at https://github.com/jin530/LINK.

Why is Normalization Necessary for Linear Recommenders?

Apr 08, 2025

Abstract:Despite their simplicity, linear autoencoder (LAE)-based models have shown comparable or even better performance with faster inference speed than neural recommender models. However, LAEs face two critical challenges: (i) popularity bias, which tends to recommend popular items, and (ii) neighborhood bias, which overly focuses on capturing local item correlations. To address these issues, this paper first analyzes the effect of two existing normalization methods for LAEs, i.e., random-walk and symmetric normalization. Our theoretical analysis reveals that normalization highly affects the degree of popularity and neighborhood biases among items. Inspired by this analysis, we propose a versatile normalization solution, called Data-Adaptive Normalization (DAN), which flexibly controls the popularity and neighborhood biases by adjusting item- and user-side normalization to align with unique dataset characteristics. Owing to its model-agnostic property, DAN can be easily applied to various LAE-based models. Experimental results show that DAN-equipped LAEs consistently improve existing LAE-based models across six benchmark datasets, with significant gains of up to 128.57% and 12.36% for long-tail items and unbiased evaluations, respectively. Refer to our code in https://github.com/psm1206/DAN.
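For readers unfamiliar with the two normalization schemes named above, the sketch below applies the textbook graph definitions to an item-item co-occurrence matrix G = XᵀX: random-walk normalization D⁻¹G and symmetric normalization D^(-1/2) G D^(-1/2), where D holds the row sums of G. The paper's exact formulation may differ (for example, in which matrix is normalized), so treat the helper names and the toy interaction matrix as assumptions.

```python
from typing import List

Matrix = List[List[float]]


def gram(interactions: Matrix) -> Matrix:
    """Item-item co-occurrence matrix X^T X for a user-by-item matrix X."""
    n_items = len(interactions[0])
    return [[sum(row[i] * row[j] for row in interactions) for j in range(n_items)]
            for i in range(n_items)]


def random_walk_normalize(g: Matrix) -> Matrix:
    """D^{-1} G: each row is divided by its own row sum."""
    out = []
    for row in g:
        d = sum(row)
        out.append([v / d if d else 0.0 for v in row])
    return out


def symmetric_normalize(g: Matrix) -> Matrix:
    """D^{-1/2} G D^{-1/2}: entry (i, j) is divided by sqrt(d_i * d_j)."""
    degrees = [sum(row) for row in g]
    return [[v / (degrees[i] * degrees[j]) ** 0.5 if degrees[i] and degrees[j] else 0.0
             for j, v in enumerate(row)] for i, row in enumerate(g)]


# Toy data: 3 users x 3 items; item 0 is the most popular.
X = [[1.0, 1.0, 0.0],
     [1.0, 0.0, 1.0],
     [1.0, 1.0, 0.0]]
G = gram(X)
rw = random_walk_normalize(G)
sym = symmetric_normalize(G)
```

Random-walk normalization makes every row sum to one, while symmetric normalization keeps the matrix symmetric and discounts co-occurrences with high-degree (popular) items on both sides.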

Empowering Retrieval-based Conversational Recommendation with Contrasting User Preferences

Mar 27, 2025

Abstract:Conversational recommender systems (CRSs) are designed to suggest the target item that the user is likely to prefer through multi-turn conversations. Recent studies stress that capturing sentiments in user conversations improves recommendation accuracy. However, they employ a single user representation, which may fail to distinguish between contrasting user intentions, such as likes and dislikes, potentially leading to suboptimal performance. To this end, we propose a novel conversational recommender model, called COntrasting user pReference expAnsion and Learning (CORAL). Firstly, CORAL extracts the user's hidden preferences through contrasting preference expansion using the reasoning capacity of the LLMs. Based on the potential preference, CORAL explicitly differentiates the contrasting preferences and leverages them into the recommendation process via preference-aware learning. Extensive experiments show that CORAL significantly outperforms existing methods in three benchmark datasets, improving up to 99.72% in Recall@10. The code and datasets are available at https://github.com/kookeej/CORAL

Temporal Linear Item-Item Model for Sequential Recommendation

Dec 10, 2024

Abstract:In sequential recommendation (SR), neural models have been actively explored due to their remarkable performance, but they suffer from inefficiency inherent to their complexity. On the other hand, linear SR models exhibit high efficiency and achieve competitive or superior accuracy compared to neural models. However, they solely deal with the sequential order of items (i.e., sequential information) and overlook the actual timestamp (i.e., temporal information). It is limited to effectively capturing various user preference drifts over time. To address this issue, we propose a novel linear SR model, named TemporAl LinEar item-item model (TALE), incorporating temporal information while preserving training/inference efficiency, with three key components. (i) Single-target augmentation concentrates on a single target item, enabling us to learn the temporal correlation for the target item. (ii) Time interval-aware weighting utilizes the actual timestamp to discern the item correlation depending on time intervals. (iii) Trend-aware normalization reflects the dynamic shift of item popularity over time. Our empirical studies show that TALE outperforms ten competing SR models by up to 18.71% gains on five benchmark datasets. It also exhibits remarkable effectiveness in evaluating long-tail items by up to 30.45% gains. The source code is available at https://github.com/psm1206/TALE.

Quantization-Aware Imitation-Learning for Resource-Efficient Robotic Control

Dec 02, 2024

Abstract:Deep neural network (DNN)-based policy models like vision-language-action (VLA) models are transformative in automating complex decision-making across applications by interpreting multi-modal data. However, scaling these models greatly increases computational costs, which presents challenges in fields like robot manipulation and autonomous driving that require quick, accurate responses. To address the need for deployment on resource-limited hardware, we propose a new quantization framework for IL-based policy models that fine-tunes parameters to enhance robustness against low-bit precision errors during training, thereby maintaining efficiency and reliability under constrained conditions. Our evaluations with representative robot manipulation for 4-bit weight-quantization on a real edge GPU demonstrate that our framework achieves up to 2.5x speedup and 2.5x energy savings while preserving accuracy. For 4-bit weight and activation quantized self-driving models, the framework achieves up to 3.7x speedup and 3.1x energy saving on a low-end GPU. These results highlight the practical potential of deploying IL-based policy models on resource-constrained devices.

Selectively Dilated Convolution for Accuracy-Preserving Sparse Pillar-based Embedded 3D Object Detection

Aug 25, 2024

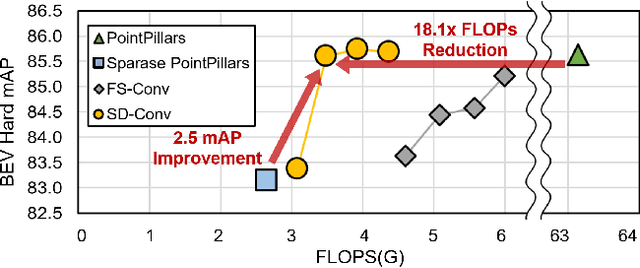

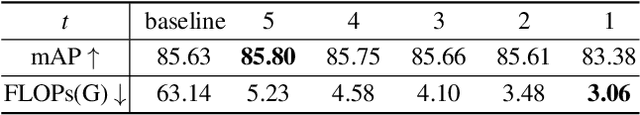

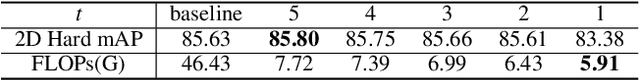

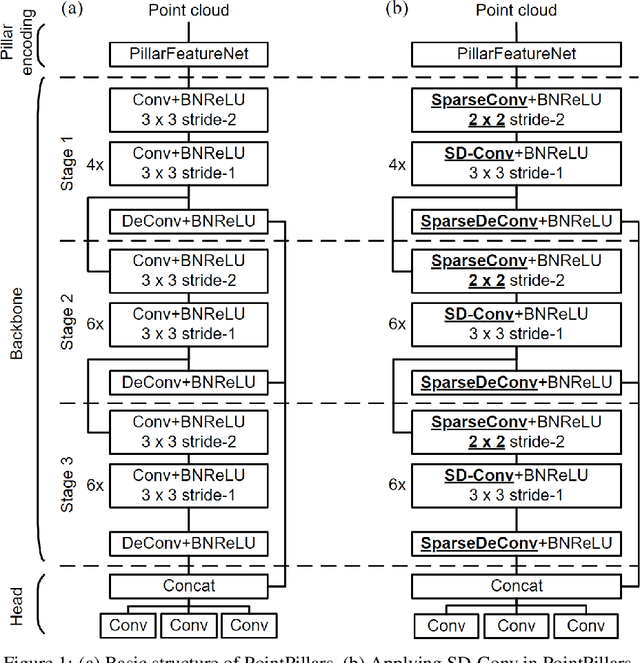

Abstract:Pillar-based 3D object detection has gained traction in self-driving technology due to its speed and accuracy facilitated by the artificial densification of pillars for GPU-friendly processing. However, dense pillar processing fundamentally wastes computation since it ignores the inherent sparsity of pillars derived from scattered point cloud data. Motivated by recent embedded accelerators with native sparsity support, sparse pillar convolution methods like submanifold convolution (SubM-Conv) aimed to reduce these redundant computations by applying convolution only on active pillars but suffered considerable accuracy loss. Our research identifies that this accuracy loss is due to the restricted fine-grained spatial information flow (fSIF) of SubM-Conv in sparse pillar networks. To overcome this restriction, we propose a selectively dilated (SD-Conv) convolution that evaluates the importance of encoded pillars and selectively dilates the convolution output, enhancing the receptive field for critical pillars and improving object detection accuracy. To facilitate actual acceleration with this novel convolution approach, we designed SPADE+ as a cost-efficient augmentation to existing embedded sparse convolution accelerators. This design supports the SD-Conv without significant demands in area and SRAM size, realizing superior trade-off between the speedup and model accuracy. This strategic enhancement allows our method to achieve extreme pillar sparsity, leading to up to 18.1x computational savings and 16.2x speedup on the embedded accelerators, without compromising object detection accuracy.
