Ryan Alimo

Jet Propulsion Laboratory, California Institute of Technology, Pasadena, CA, 91109, USA

An Addendum to NeBula: Towards Extending TEAM CoSTAR's Solution to Larger Scale Environments

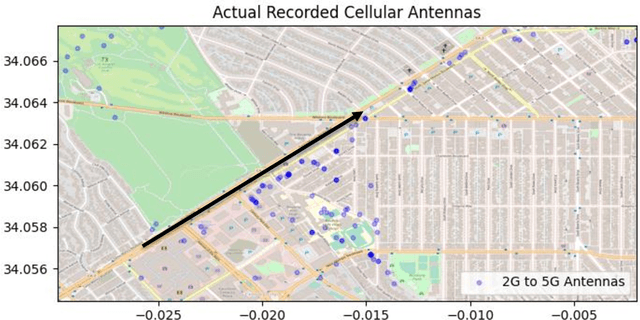

Apr 18, 2025

Abstract:This paper presents an appendix to the original NeBula autonomy solution developed by TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), a participant in the DARPA Subterranean Challenge. Specifically, this paper presents extensions to NeBula's hardware, software, and algorithmic components that focus on increasing the range and scale of the exploration environment. From the algorithmic perspective, we discuss the following extensions to the original NeBula framework: (i) large-scale geometric and semantic environment mapping; (ii) an adaptive positioning system; (iii) probabilistic traversability analysis and local planning; (iv) large-scale POMDP-based global motion planning and exploration behavior; (v) large-scale networking and decentralized reasoning; (vi) communication-aware mission planning; and (vii) multi-modal ground-aerial exploration solutions. We demonstrate the application and deployment of the presented systems and solutions in various large-scale underground environments, including limestone mine exploration scenarios as well as deployment in the DARPA Subterranean Challenge.

MaRF: Representing Mars as Neural Radiance Fields

Dec 03, 2022

Abstract:The aim of this work is to introduce MaRF, a novel framework able to synthesize the Martian environment using several collections of images from rover cameras. The idea is to generate a 3D scene of Mars' surface to address key challenges in planetary surface exploration such as planetary geology, simulated navigation, and shape analysis. Although different methods exist for 3D reconstruction of Mars' surface, they rely on classical computer graphics techniques that consume substantial computational resources during the reconstruction process, and they are limited in generalizing reconstructions to unseen scenes and in adapting to new images coming from rover cameras. The proposed framework addresses these limitations by exploiting Neural Radiance Fields (NeRFs), a method that synthesizes complex scenes by optimizing a continuous volumetric scene function using a sparse set of images. To speed up the learning process, we replaced the sparse set of rover images with their neural graphics primitives (NGPs), a set of vectors of fixed length that are learned to preserve the information of the original images in a significantly smaller size. In the experimental section, we demonstrate the environments created from actual Mars datasets captured by the Curiosity rover, the Perseverance rover, and the Ingenuity helicopter, all of which are available on the Planetary Data System (PDS).

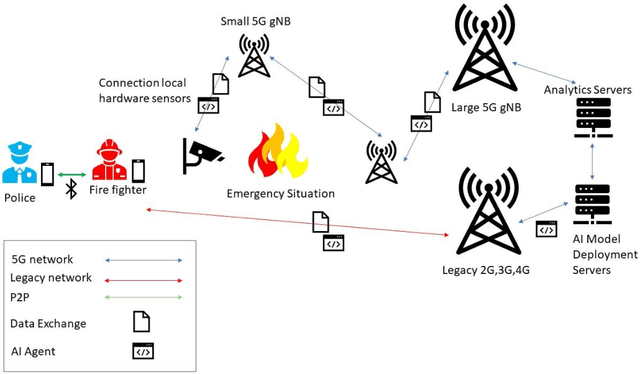

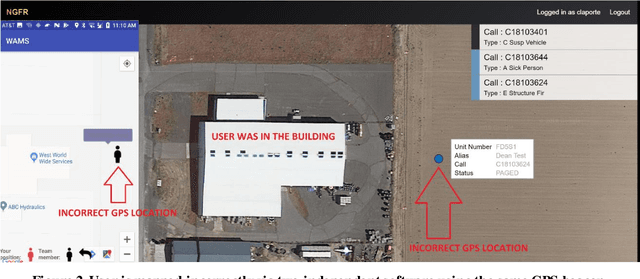

AI Agents in Emergency Response Applications

Sep 10, 2021

Abstract:Emergency personnel respond to a wide range of situations, from fires, medical emergencies, hazardous-material incidents, and industrial accidents to natural disasters. Situations such as natural disasters or terrorist acts require a multifaceted response from firefighters, paramedics, hazmat teams, and other agencies. Engineering AI systems that aid emergency personnel proves to be a difficult systems engineering problem. Mission-critical "edge AI" situations require low-latency, reliable analytics. Adding further complexity, a high degree of model accuracy is required when lives are at stake, creating a need to deploy highly accurate but computationally intensive models to resource-constrained devices. To address all these issues, we propose an agent-based architecture for the deployment of AI agents via a 5G service-based architecture.

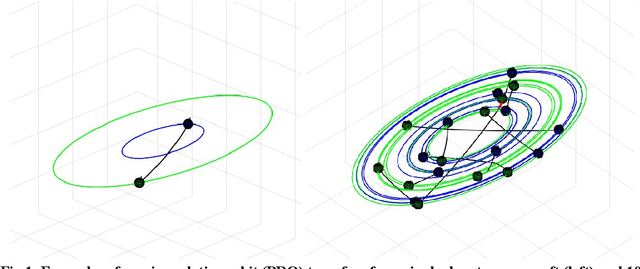

Multi-Agent Motion Planning using Deep Learning for Space Applications

Oct 15, 2020

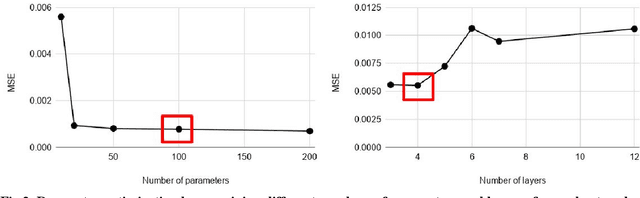

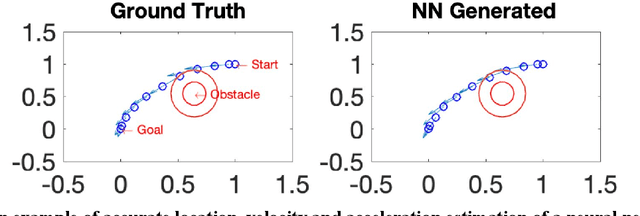

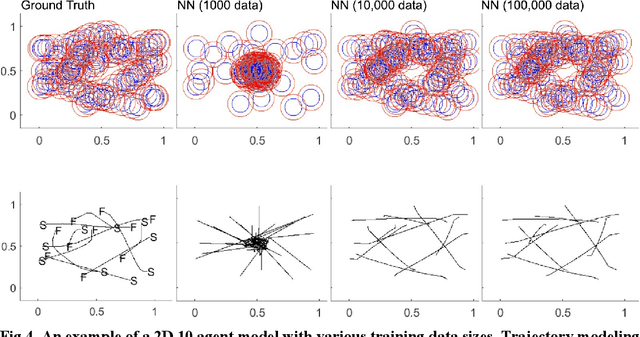

Abstract:State-of-the-art motion planners cannot scale to a large number of systems. Motion planning for multiple agents is an NP-hard (non-deterministic polynomial-time hard) problem, so the computation time increases exponentially as agents are added. This computational demand is a major stumbling block to applying such motion planners to future NASA missions involving swarms of space vehicles. We applied a deep neural network to transform computationally demanding mathematical motion planning problems into deep learning-based numerical problems. We showed that optimal motion trajectories can be accurately replicated using deep learning-based numerical models in several 2D and 3D systems with multiple agents. The deep learning-based numerical model demonstrates superior computational efficiency, generating plans 1000 times faster than its mathematical model counterpart.

Tuning a variational autoencoder for data accountability problem in the Mars Science Laboratory ground data system

Jun 06, 2020

Abstract:The Mars Curiosity rover frequently sends back engineering and science data that pass through a pipeline of systems before reaching their final destination at the mission operations center, making them prone to volume loss and data corruption. A ground data system analysis (GDSA) team is charged with monitoring this flow of information and detecting anomalies in the data in order to request a re-transmission when necessary. This work presents $\Delta$-MADS, a derivative-free optimization method applied to tuning the architecture and hyperparameters of a variational autoencoder trained to detect data with missing patches, in order to assist the GDSA team in their mission.

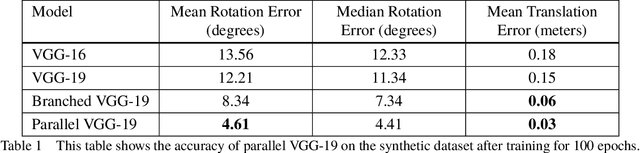

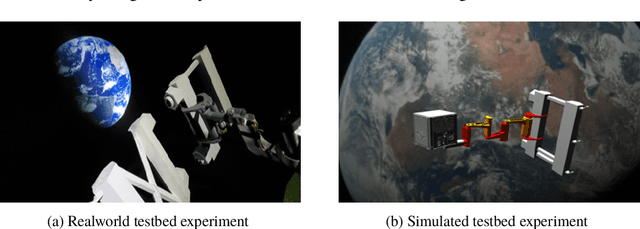

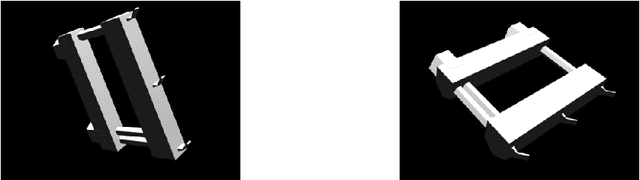

Assistive Relative Pose Estimation for On-orbit Assembly using Convolutional Neural Networks

Feb 19, 2020

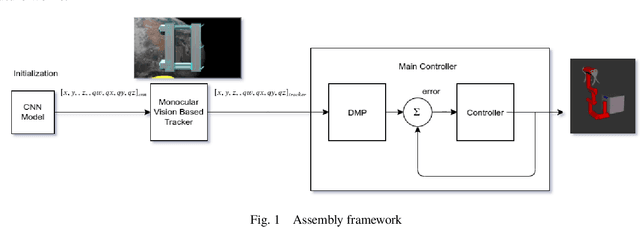

Abstract:Accurate real-time pose estimation of a spacecraft or other object in space is a key capability for on-orbit spacecraft servicing and assembly tasks. Pose estimation of objects in space is more challenging than for objects on Earth because space images exhibit widely varying illumination conditions, high contrast, and poor resolution, in addition to the power and mass constraints of the platform. In this paper, a convolutional neural network (CNN) is leveraged to uniquely determine the translation and rotation of an object of interest relative to the camera. The main idea of using a CNN model is to assist the object tracker used in on-orbit assembly tasks, where a feature-based method alone is not always sufficient. A simulation framework designed for the assembly task is used to generate a dataset for training the modified CNN models, and the results of the different models are then compared by how accurately they predict the pose. Unlike many current approaches to pose estimation for spacecraft or other objects in space, the model does not rely on hand-crafted, object-specific features, which makes it more robust and easier to apply to other types of spacecraft. It is shown that the model performs comparably to current feature-selection methods and can therefore be used in conjunction with them to provide more reliable estimates.
