Kyongsik Yun

Enhancing Fault-Tolerant Space Computing: Guidance Navigation and Control (GNC) and Landing Vision System (LVS) Implementations on Next-Gen Multi-Core Processors

Nov 06, 2025

Abstract:Future planetary exploration missions demand high-performance, fault-tolerant computing to enable autonomous Guidance, Navigation, and Control (GNC) and Lander Vision System (LVS) operations during Entry, Descent, and Landing (EDL). This paper evaluates the deployment of GNC and LVS algorithms on next-generation multi-core processors--HPSC, Snapdragon VOXL2, and AMD Xilinx Versal--demonstrating up to 15x speedup for LVS image processing and over 250x speedup for Guidance for Fuel-Optimal Large Divert (GFOLD) trajectory optimization compared to legacy spaceflight hardware. To ensure computational reliability, we present ARBITER (Asynchronous Redundant Behavior Inspection for Trusted Execution and Recovery), a Multi-Core Voting (MV) mechanism that performs real-time fault detection and correction across redundant cores. ARBITER is validated in both static optimization tasks (GFOLD) and dynamic closed-loop control (Attitude Control System). A fault injection study further identifies the gradient computation stage in GFOLD as the most sensitive to bit-level errors, motivating selective protection strategies and vector-based output arbitration. This work establishes a scalable and energy-efficient architecture for future missions, including Mars Sample Return, Enceladus Orbilander, and Ceres Sample Return, where onboard autonomy, low latency, and fault resilience are critical.
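The idea of voting across redundant cores can be illustrated with a minimal sketch. This is not ARBITER itself: the function name `vote`, the tolerance `tol`, and the strict-majority rule are assumptions chosen for illustration, and the abstract does not specify how outputs are compared.

```python
from typing import List, Optional, Sequence


def vote(outputs: Sequence[Sequence[float]], tol: float = 1e-9) -> Optional[List[float]]:
    """Return the output vector agreed on by a strict majority of cores.

    `outputs` holds one result vector per redundant core (hypothetical layout).
    Two vectors agree when they have the same length and every element
    differs by at most `tol`. Returns None when no strict majority exists.
    """
    n = len(outputs)
    for candidate in outputs:
        agreeing = sum(
            1
            for other in outputs
            if len(other) == len(candidate)
            and all(abs(a - b) <= tol for a, b in zip(candidate, other))
        )
        if agreeing * 2 > n:
            return list(candidate)
    return None


# Three redundant cores; the third result is corrupted in its second element.
healthy = [1.0, 2.0]
corrupted = [1.0, 2.5]
result = vote([healthy, healthy, corrupted])
```

Comparing whole vectors rather than single scalars loosely mirrors the "vector-based output arbitration" mentioned in the abstract, but the comparison rule here is only a guess at one reasonable choice.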

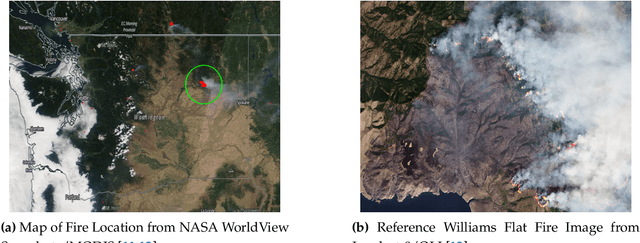

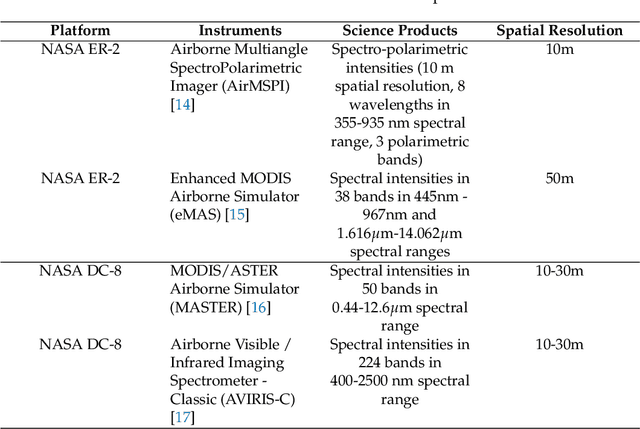

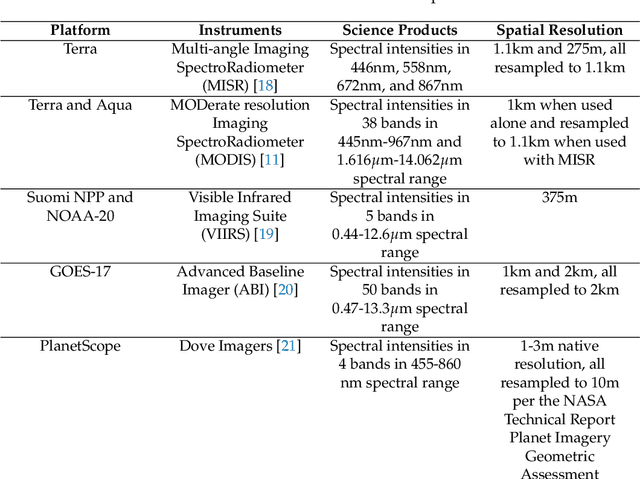

Development and Application of Self-Supervised Machine Learning for Smoke Plume and Active Fire Identification from the FIREX-AQ Datasets

Jan 25, 2025

Abstract:Fire Influence on Regional to Global Environments and Air Quality (FIREX-AQ) was a field campaign aimed at better understanding the impact of wildfires and agricultural fires on air quality and climate. The FIREX-AQ campaign took place in August 2019 and involved two aircraft and multiple coordinated satellite observations. This study applied and evaluated a self-supervised machine learning (ML) method for active fire and smoke plume identification and tracking in the satellite and sub-orbital remote sensing datasets collected during the campaign. Our unique methodology combines remote sensing observations with different spatial and spectral resolutions. The demonstrated approach successfully differentiates fire pixels and smoke plumes from background imagery, enabling the generation of a per-instrument smoke and fire mask product, as well as smoke and fire masks created from the fusion of selected data from independent instruments. This ML approach has the potential to enhance operational wildfire monitoring systems and improve decision-making in air quality management through fast smoke plume identification and tracking, and could improve climate impact studies through the fusion of data from independent instruments.

Constraint-Informed Learning for Warm Starting Trajectory Optimization

Dec 21, 2023

Abstract:Future spacecraft and surface robotic missions require increasingly capable autonomy stacks for exploring challenging and unstructured domains, and trajectory optimization will be a cornerstone of such autonomy stacks. However, the nonlinear optimization solvers required remain too slow for use on relatively resource-constrained flight-grade computers. In this work, we turn towards amortized optimization, a learning-based technique for accelerating optimization run times, and present TOAST: Trajectory Optimization with Merit Function Warm Starts. Offline, using data collected from a simulation, we train a neural network to learn a mapping to the full primal and dual solutions given the problem parameters. Crucially, we build upon recent results from decision-focused learning and present a set of decision-focused loss functions using the notion of merit functions for optimization problems. We show that training networks with such constraint-informed losses can better encode the structure of the trajectory optimization problem and jointly learn to reconstruct the primal-dual solution while also yielding improved constraint satisfaction. Through numerical experiments on a Lunar rover problem, we demonstrate that TOAST outperforms benchmark approaches in terms of both computation times and network prediction constraint satisfaction.

Remote estimation of geologic composition using interferometric synthetic-aperture radar in California's Central Valley

Dec 04, 2022

Abstract:California's Central Valley is the national agricultural center, producing 1/4 of the nation's food. However, land in the Central Valley is sinking at a rapid rate (as much as 20 cm per year) due to continued groundwater pumping. Land subsidence has a significant impact on infrastructure resilience and groundwater sustainability. In this study, we aim to identify specific regions with different temporal dynamics of land displacement and find relationships with underlying geological composition. Then, we aim to remotely estimate geologic composition using interferometric synthetic aperture radar (InSAR)-based land deformation temporal changes using machine learning techniques. We identified regions with different temporal characteristics of land displacement in that some areas (e.g., Helm) with coarser grain geologic compositions exhibited potentially reversible land deformation (elastic land compaction). We found a significant correlation between InSAR-based land deformation and geologic composition using random forest and deep neural network regression models. We also achieved significant accuracy with 1/4 sparse sampling to reduce any spatial correlations among data, suggesting that the model has the potential to be generalized to other regions for indirect estimation of geologic composition. Our results indicate that geologic composition can be estimated using InSAR-based land deformation data. In-situ measurements of geologic composition can be expensive and time consuming and may be impractical in some areas. The generalizability of the model sheds light on high spatial resolution geologic composition estimation utilizing existing measurements.

Neurosymbolic hybrid approach to driver collision warning

Mar 28, 2022

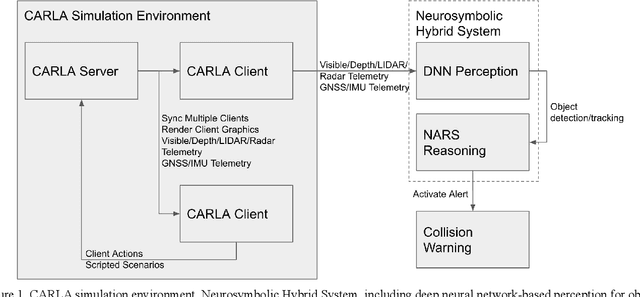

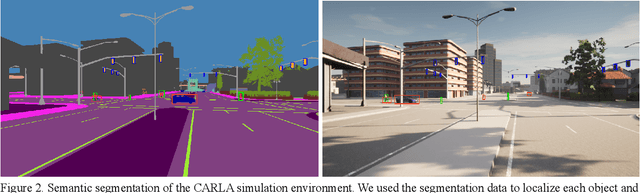

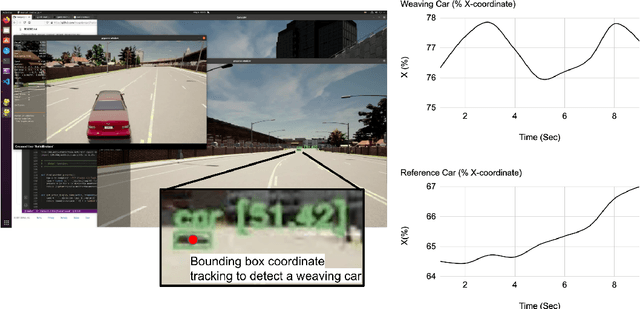

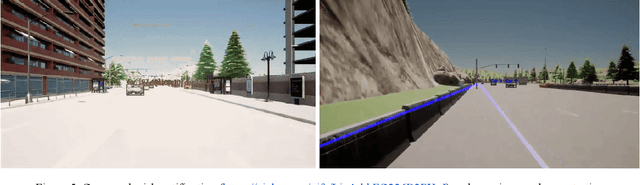

Abstract:There are two main algorithmic approaches to autonomous driving systems: (1) An end-to-end system in which a single deep neural network learns to map sensory input directly into appropriate warning and driving responses. (2) A mediated hybrid recognition system in which a system is created by combining independent modules that detect each semantic feature. While some researchers believe that deep learning can solve any problem, others believe that a more engineered and symbolic approach is needed to cope with complex environments with less data. Deep learning alone has achieved state-of-the-art results in many areas, from complex gameplay to predicting protein structures. In particular, in image classification and recognition, deep learning models have achieved accuracies as high as humans. But sometimes it can be very difficult to debug if the deep learning model doesn't work. Deep learning models can be vulnerable and are very sensitive to changes in data distribution. Generalization can be problematic. It's usually hard to prove why it works or doesn't. Deep learning models can also be vulnerable to adversarial attacks. Here, we combine deep learning-based object recognition and tracking with an adaptive neurosymbolic network agent, called the Non-Axiomatic Reasoning System (NARS), that can adapt to its environment by building concepts based on perceptual sequences. We achieved an improved intersection-over-union (IOU) object recognition performance of 0.65 in the adaptive retraining model compared to IOU 0.31 in the COCO data pre-trained model. We improved the object detection limits using RADAR sensors in a simulated environment, and demonstrated the weaving car detection capability by combining deep learning-based object detection and tracking with a neurosymbolic model.
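For readers unfamiliar with the intersection-over-union (IOU) metric reported above, a minimal sketch for two axis-aligned bounding boxes follows. The `(x1, y1, x2, y2)` corner format and the function name `iou` are assumptions for illustration; the abstract does not state the box format or how per-image scores were aggregated.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x1 <= x2 and y1 <= y2


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection area divided by union area of two axis-aligned boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap region, clamped at zero when disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter

    # Degenerate (zero-area) boxes yield a union of zero; report no overlap.
    return inter / union if union > 0 else 0.0


# Two 2x2 boxes overlapping in a 1x1 square: 1 / (4 + 4 - 1).
score = iou((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
```

An IOU of 1.0 means the predicted and reference boxes coincide, and 0.0 means they do not overlap at all.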

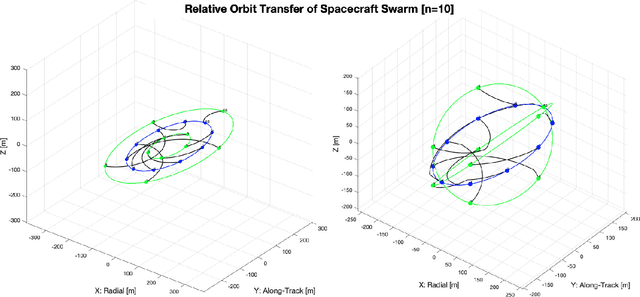

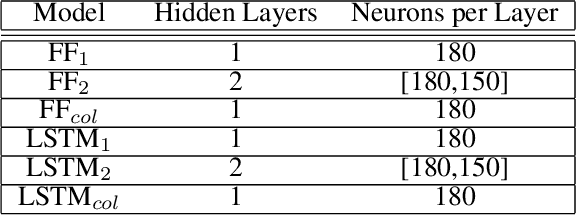

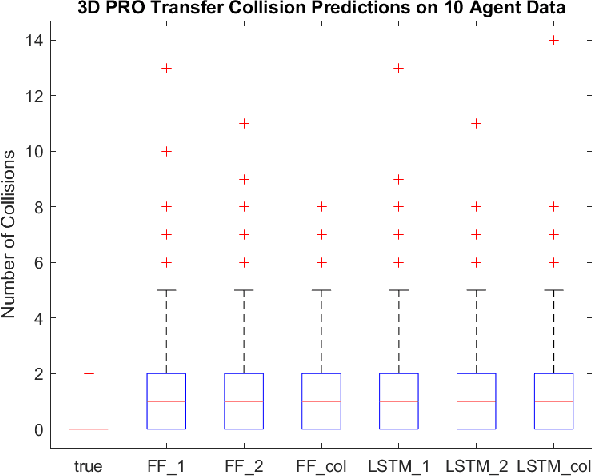

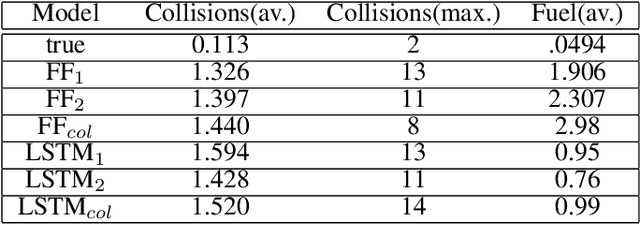

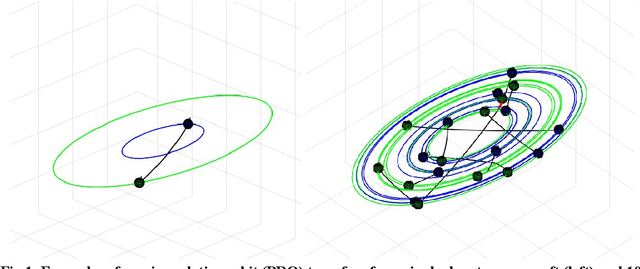

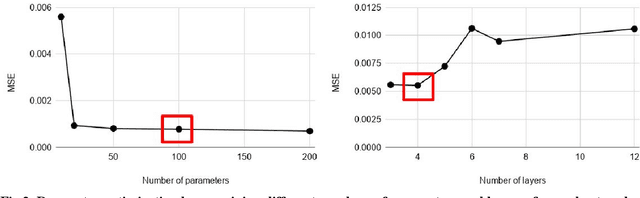

Machine Learning Based Relative Orbit Transfer for Swarm Spacecraft Motion Planning

Jan 28, 2022

Abstract:In this paper we describe a machine learning based framework for spacecraft swarm trajectory planning. In particular, we focus on coordinating motions of multi-spacecraft in formation flying through passive relative orbit (PRO) transfers. Accounting for spacecraft dynamics while avoiding collisions between the agents makes spacecraft swarm trajectory planning difficult. Centralized approaches can be used to solve this problem, but are computationally demanding and scale poorly with the number of agents in the swarm. As a result, centralized algorithms are ill-suited for real time trajectory planning on board small spacecraft (e.g. CubeSats) comprising the swarm. In our approach a neural network is used to approximate solutions of a centralized method. The necessary training data is generated using a centralized convex optimization framework through which several instances of the n=10 spacecraft swarm trajectory planning problem are solved. We are interested in answering the following questions which will give insight on the potential utility of deep learning-based approaches to the multi-spacecraft motion planning problem: 1) Can neural networks produce feasible trajectories that satisfy safety constraints (e.g. collision avoidance) and are low in fuel cost? 2) Can a neural network trained using n spacecraft data be used to solve problems for spacecraft swarms of differing size?

Explainability Tools Enabling Deep Learning in Future In-Situ Real-Time Planetary Explorations

Jan 15, 2022

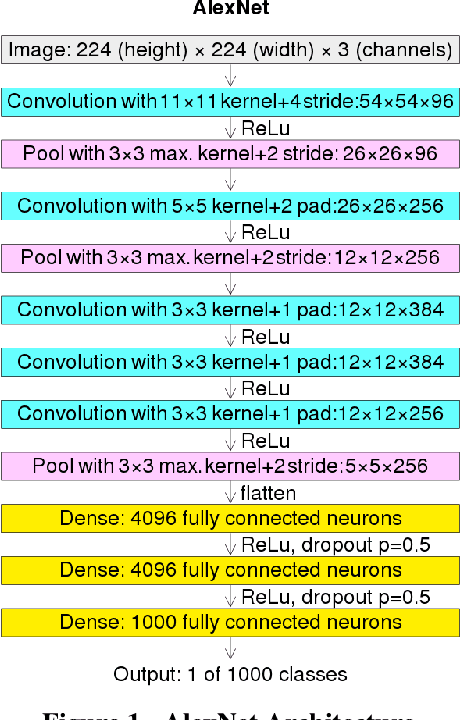

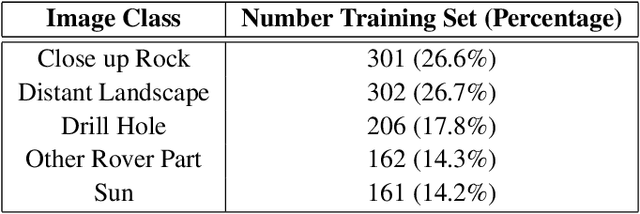

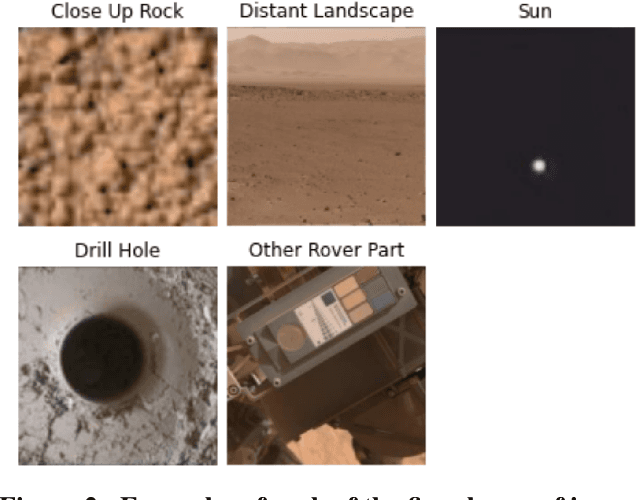

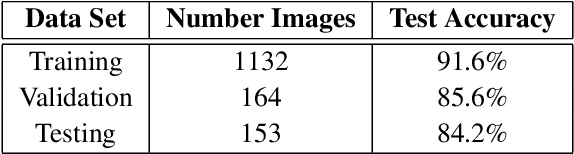

Abstract:Deep learning (DL) has proven to be an effective machine learning and computer vision technique. DL-based image segmentation, object recognition and classification will aid many in-situ Mars rover tasks such as path planning and artifact recognition/extraction. However, most of the Deep Neural Network (DNN) architectures are so complex that they are considered a 'black box'. In this paper, we used integrated gradients to describe the attributions of each neuron to the output classes. It provides a set of explainability tools (ET) that opens the black box of a DNN so that the individual contribution of neurons to category classification can be ranked and visualized. The neurons in each dense layer are mapped and ranked by measuring expected contribution of a neuron to a class vote given a true image label. The importance of neurons is prioritized according to their correct or incorrect contribution to the output classes and suppression or bolstering of incorrect classes, weighted by the size of each class. ET provides an interface to prune the network to enhance high-rank neurons and remove low-performing neurons. ET technology will make DNNs smaller and more efficient for implementation in small embedded systems. It also leads to more explainable and testable DNNs that can make systems easier for Validation & Verification. The goal of ET technology is to enable the adoption of DL in future in-situ planetary exploration missions.
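The integrated gradients technique named above can be sketched on a toy scalar function. This is a generic illustration, not the paper's explainability tools: it attributes to input features rather than to neurons, uses finite differences instead of a framework's automatic differentiation, and the names `integrated_gradients`, `steps`, and `eps` are assumptions.

```python
from typing import Callable, List, Sequence


def integrated_gradients(
    f: Callable[[List[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
    steps: int = 50,
    eps: float = 1e-6,
) -> List[float]:
    """Approximate integrated gradients of f at x relative to a baseline.

    The path integral from baseline to x is approximated with a midpoint
    Riemann sum; each partial derivative uses a central finite difference.
    """
    n = len(x)
    avg_grad = [0.0] * n
    for s in range(steps):
        alpha = (s + 0.5) / steps  # midpoint of the s-th sub-interval
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i in range(n):
            up = list(point)
            down = list(point)
            up[i] += eps
            down[i] -= eps
            avg_grad[i] += (f(up) - f(down)) / (2 * eps) / steps
    # Scale the path-averaged gradient by the input's offset from the baseline.
    return [(xi - b) * g for xi, b, g in zip(x, baseline, avg_grad)]


def toy_model(v: List[float]) -> float:
    # Hypothetical stand-in for a network output: v0^2 + 3*v1.
    return v[0] ** 2 + 3.0 * v[1]


attributions = integrated_gradients(toy_model, [2.0, 1.0], [0.0, 0.0])
```

For this toy function the attributions are approximately 4 and 3, and they sum to `toy_model([2.0, 1.0]) - toy_model([0.0, 0.0])`, which is the completeness property that makes the resulting scores rankable.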

Time Series Comparisons in Deep Space Network

Nov 02, 2021

Abstract:The Deep Space Network is NASA's international array of antennas that support interplanetary spacecraft missions. A track is a block of multi-dimensional time series from the beginning to end of DSN communication with the target spacecraft, containing thousands of monitor data items lasting several hours at a frequency of 0.2-1Hz. Monitor data on each track reports on the performance of specific spacecraft operations and the DSN itself. DSN is receiving signals from 32 spacecraft across the solar system. DSN has pressure to reduce costs while maintaining the quality of support for DSN mission users. DSN Link Control Operators need to simultaneously monitor multiple tracks and identify anomalies in real time. DSN has seen that as the number of missions increases, the data that needs to be processed increases over time. In this project, we look at the last 8 years of data for analysis. Any anomaly in the track indicates a problem with either the spacecraft, DSN equipment, or weather conditions. DSN operators typically write Discrepancy Reports for further analysis. It is recognized that it would be quite helpful to identify 10 similar historical tracks out of the huge database to quickly find and match anomalies. This tool has three functions: (1) identification of the top 10 similar historical tracks, (2) detection of anomalies compared to the reference normal track, and (3) comparison of statistical differences between two given tracks. The requirements for these features were confirmed by survey responses from 21 DSN operators and engineers. The preliminary machine learning model has shown promising performance (AUC=0.92). We plan to increase the number of data sets and perform additional testing to improve performance further before its planned integration into the track visualizer interface to assist DSN field operators and engineers.
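The first function of the tool, retrieving the most similar historical tracks, can be sketched as a nearest-neighbor lookup. The abstract does not state the similarity measure or the track representation, so everything here is an assumption: each track is reduced to a fixed-length feature vector, similarity is Euclidean distance, and the names `top_k_similar` and `history` are hypothetical.

```python
import heapq
import math
from typing import Dict, List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k_similar(
    query: Sequence[float],
    history: Dict[str, Sequence[float]],
    k: int = 10,
) -> List[str]:
    """Return the IDs of the k historical tracks closest to the query, nearest first."""
    return heapq.nsmallest(k, history, key=lambda tid: euclidean(query, history[tid]))


# Hypothetical per-track summaries (for example, mean values of two monitor items).
history = {
    "track_a": [0.0, 0.0],
    "track_b": [1.0, 1.0],
    "track_c": [5.0, 5.0],
}
nearest = top_k_similar([0.9, 0.9], history, k=2)
```

`heapq.nsmallest` avoids sorting the whole archive when `k` is small relative to the number of stored tracks.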

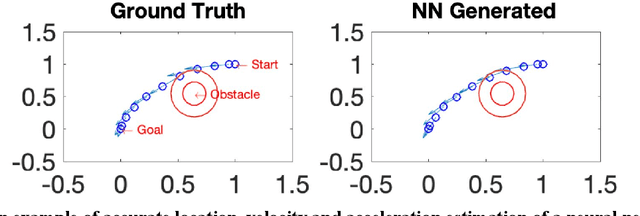

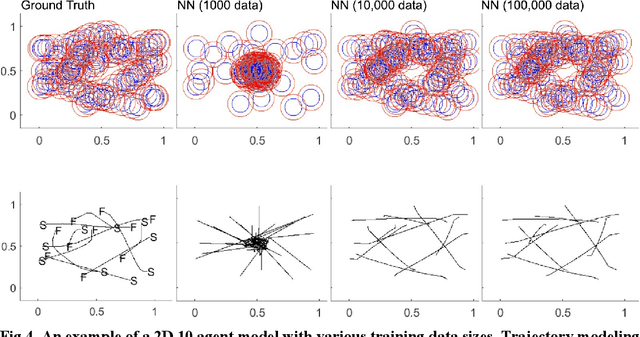

Multi-Agent Motion Planning using Deep Learning for Space Applications

Oct 15, 2020

Abstract:State-of-the-art motion planners cannot scale to a large number of systems. Motion planning for multiple agents is an NP-hard (non-deterministic polynomial-time hard) problem, so the computation time increases exponentially with each addition of agents. This computational demand is a major stumbling block to the motion planner's application to future NASA missions involving swarms of space vehicles. We applied a deep neural network to transform computationally demanding mathematical motion planning problems into deep learning-based numerical problems. We showed that optimal motion trajectories can be accurately replicated using deep learning-based numerical models in several 2D and 3D systems with multiple agents. The deep learning-based numerical model demonstrates superior computational efficiency, with plans generated 1000 times faster than the mathematical model counterpart.

Smoke Sky -- Exploring New Frontiers of Unmanned Aerial Systems for Wildland Fire Science and Applications

Nov 12, 2019

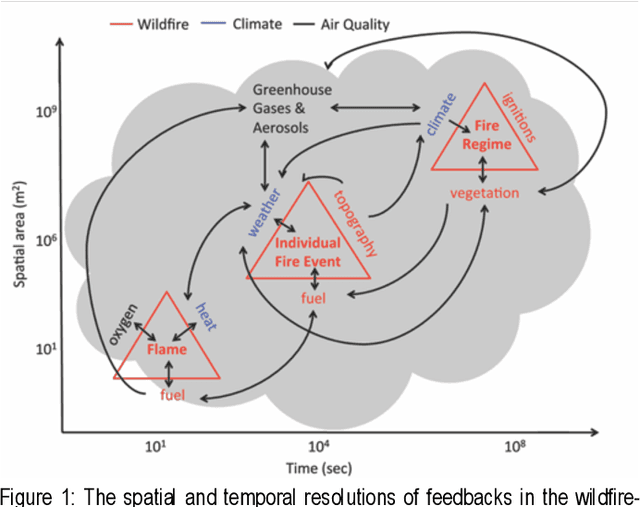

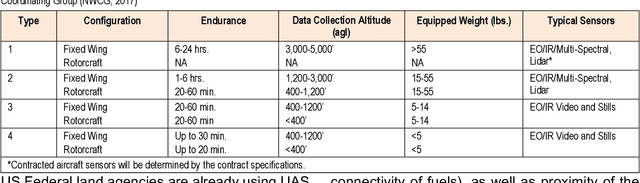

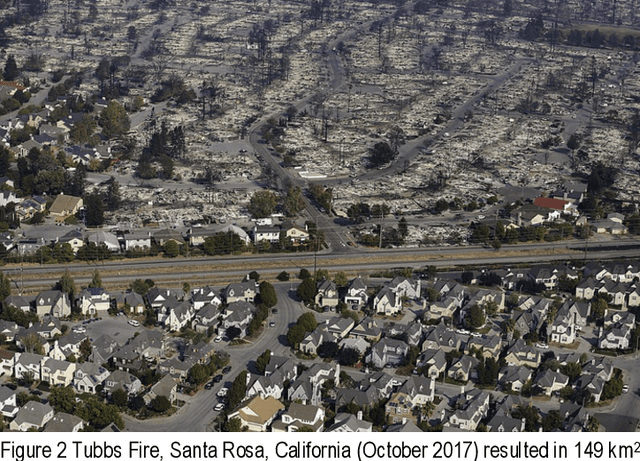

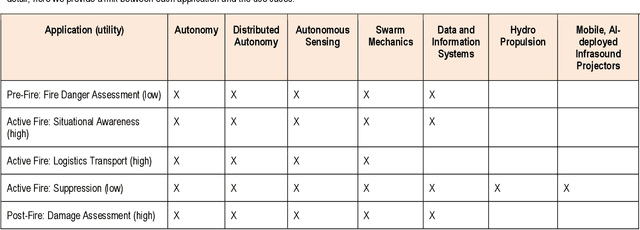

Abstract:Wildfire has had increasing impacts on society as the climate changes and the wildland urban interface grows. As such, there is a demand for innovative solutions to help manage fire. Managing wildfire can include proactive fire management such as prescribed burning within constrained areas or advancements for reactive fire management (e.g., fire suppression). Because of the growing societal impact, the JPL BlueSky program sought to assess the current state of fire management and technology and determine areas with high return on investment. To accomplish this, we met with the national interagency Unmanned Aerial System (UAS) Advisory Group (UASAG) and with leading technology transfer experts for fire science and management applications. We provide an overview of the current state as well as an analysis of the impact, maturity and feasibility of integrating different technologies that can be developed by JPL. Based on the findings, the highest return on investment technologies for fire management are first to develop single micro-aerial vehicle (MAV) autonomy, autonomous sensing over fire, and the associated data and information system for active fire local environment mapping. Once this is completed for a single MAV, expanding the work to include many in a swarm would require further investment of distributed MAV autonomy and MAV swarm mechanics, but could greatly expand the breadth of application over large fires. Important to investing in these technologies will be in developing collaborations with the key influencers and champions for using UAS technology in fire management.
