Qinming He

Improving Unsupervised Task-driven Models of Ventral Visual Stream via Relative Position Predictivity

May 13, 2025

Abstract:Based on the concept that the ventral visual stream (VVS) mainly functions for object recognition, current unsupervised task-driven methods model VVS by contrastive learning, and have achieved good brain similarity. However, we believe functions of VVS extend beyond just object recognition. In this paper, we introduce an additional function involving VVS, named relative position (RP) prediction. We first theoretically explain that contrastive learning may be unable to yield the model capability of RP prediction. Motivated by this, we subsequently integrate RP learning with contrastive learning, and propose a new unsupervised task-driven method to model VVS, which is more in line with biological reality. We conduct extensive experiments, demonstrating that: (i) our method significantly improves downstream performance of object recognition while enhancing RP predictivity; (ii) RP predictivity generally improves the model brain similarity. Our results provide strong evidence for the involvement of VVS in location perception (especially RP prediction) from a computational perspective.

Speed-enhanced Subdomain Adaptation Regression for Long-term Stable Neural Decoding in Brain-computer Interfaces

Jul 25, 2024

Abstract:Brain-computer interfaces (BCIs) offer a means to convert neural signals into control signals, providing a potential restoration of movement for people with paralysis. Despite their promise, BCIs face a significant challenge in maintaining decoding accuracy over time: because of neural nonstationarities, the neural data drift and decoding accuracy drops severely across days. While current recalibration techniques address this issue to a degree, they often fail to leverage the limited labeled data, to consider the signal correlation between two days, or to perform conditional alignment in regression tasks. This paper introduces a novel approach to enhance recalibration performance. We begin with preliminary experiments that reveal the temporal patterns of neural signal changes and identify three critical elements for effective recalibration: global alignment, conditional speed alignment, and feature-label consistency. Building on these insights, we propose the Speed-enhanced Subdomain Adaptation Regression (SSAR) framework, integrating semi-supervised learning with domain adaptation techniques in regression neural decoding. SSAR employs Speed-enhanced Subdomain Alignment (SeSA) for global and speed conditional alignment of similarly labeled data, with Contrastive Consistency Constraint (CCC) to enhance the alignment of SeSA by reinforcing feature-label consistency through contrastive learning. Our comprehensive set of experiments, both qualitative and quantitative, substantiates the superior recalibration performance and robustness of SSAR.

Clean-image Backdoor Attacks

Mar 26, 2024

Abstract:To gather a significant quantity of annotated training data for high-performance image classification models, numerous companies opt to enlist third-party providers to label their unlabeled data. This practice is widely regarded as secure, even in cases where some annotation errors occur, as the impact of these minor inaccuracies on the final performance of the models is negligible and existing backdoor attacks require the attacker to be able to poison the training images. Nevertheless, in this paper, we propose clean-image backdoor attacks, which show that backdoors can still be injected via a fraction of incorrect labels without modifying the training images. Specifically, in our attacks, the attacker first seeks a trigger feature to divide the training images into two parts: those with the feature and those without it. Subsequently, the attacker falsifies the labels of the former part to a backdoor class. The backdoor is finally implanted into the target model after it is trained on the poisoned data. During the inference phase, the attacker can activate the backdoor in two ways: slightly modifying the input image to obtain the trigger feature, or taking an image that naturally has the trigger feature as input. We conduct extensive experiments to demonstrate the effectiveness and practicality of our attacks. According to the experimental results, we conclude that our attacks seriously jeopardize the fairness and robustness of image classification models, and it is necessary to be vigilant about the incorrect labels in outsourced labeling.
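
The label-falsification step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `poison_labels` and `bright_trigger`, the list-of-floats image representation, and the brightness threshold used as a trigger feature are all hypothetical choices made for illustration. The abstract does not say how the trigger feature is found.

```python
def bright_trigger(image, threshold=0.5):
    """Hypothetical trigger feature: mean pixel intensity above a threshold."""
    return sum(image) / len(image) > threshold


def poison_labels(images, labels, has_trigger, backdoor_class):
    """Relabel every image that has the trigger feature to the backdoor class.

    The images themselves are never modified; only the labels change.
    Returns the poisoned label list and the indices whose label was changed.
    """
    poisoned = list(labels)
    flipped = []
    for i, image in enumerate(images):
        if has_trigger(image) and labels[i] != backdoor_class:
            poisoned[i] = backdoor_class
            flipped.append(i)
    return poisoned, flipped


# Toy data: three "images" given as flat lists of pixel intensities in [0, 1].
images = [[0.9, 0.8], [0.1, 0.2], [0.7, 0.9]]
labels = [0, 1, 2]
poisoned, flipped = poison_labels(images, labels, bright_trigger, backdoor_class=1)
print(poisoned, flipped)
```

In this toy setting, the images at indices 0 and 2 have the trigger feature, so only their labels are rewritten. The image at index 1 keeps its original label.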

CoMeta: Enhancing Meta Embeddings with Collaborative Information in Cold-start Problem of Recommendation

Mar 14, 2023

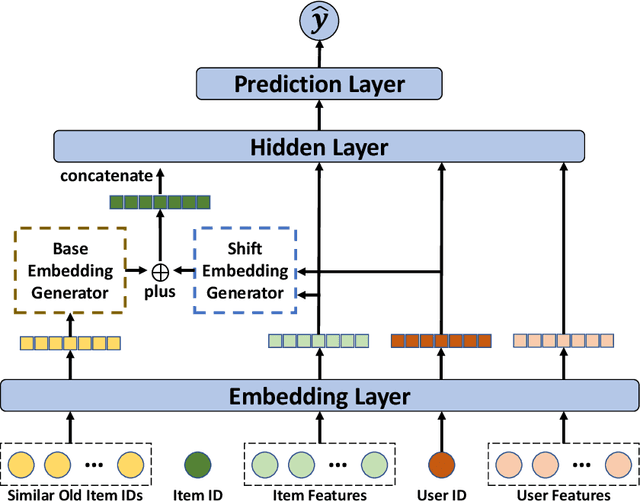

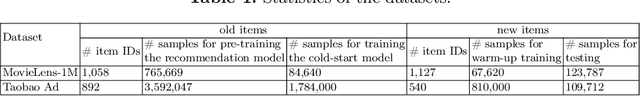

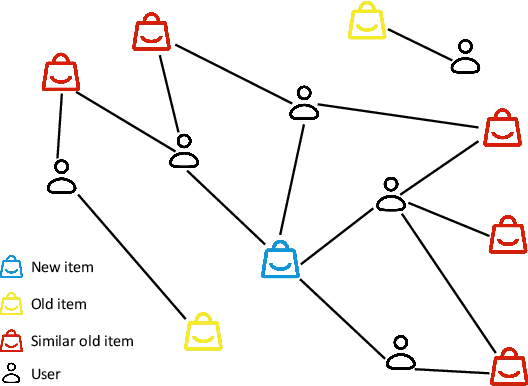

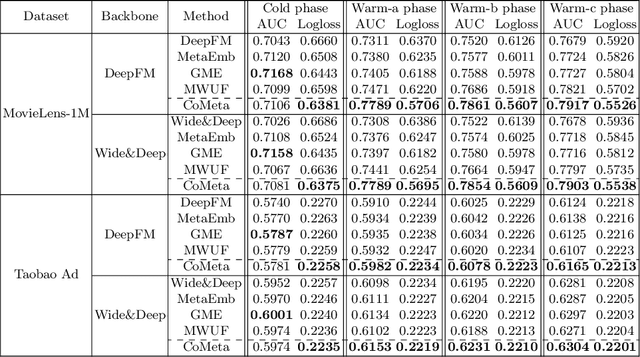

Abstract:The cold-start problem is quite challenging for existing recommendation models. Specifically, for the new items with only a few interactions, their ID embeddings are trained inadequately, leading to poor recommendation performance. Some recent studies introduce meta learning to solve the cold-start problem by generating meta embeddings for new items as their initial ID embeddings. However, we argue that the capability of these methods is limited, because they mainly utilize item attribute features which only contain little information, but ignore the useful collaborative information contained in the ID embeddings of users and old items. To tackle this issue, we propose CoMeta to enhance the meta embeddings with the collaborative information. CoMeta consists of two submodules: B-EG and S-EG. Specifically, for a new item: B-EG calculates the similarity-based weighted sum of the ID embeddings of old items as its base embedding; S-EG generates its shift embedding not only with its attribute features but also with the average ID embedding of the users who interacted with it. The final meta embedding is obtained by adding up the base embedding and the shift embedding. We conduct extensive experiments on two public datasets. The experimental results demonstrate both the effectiveness and the compatibility of CoMeta.
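
The composition of the meta embedding (base embedding plus shift embedding) can be sketched as follows. This is only an illustration under stated assumptions, not CoMeta itself. The abstract does not specify the similarity measure or the shift generator, so the sketch assumes cosine similarity over attribute features with softmax-normalized weights for B-EG, and a plain linear map for S-EG. All function names and the weight matrices `w_attr` and `w_user` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def base_embedding(new_attrs, old_attrs, old_id_embs):
    """B-EG sketch: similarity-weighted sum of old items' ID embeddings.
    Assumption: similarity is cosine over attribute features."""
    weights = softmax([cosine(new_attrs, a) for a in old_attrs])
    dim = len(old_id_embs[0])
    return [sum(w * emb[d] for w, emb in zip(weights, old_id_embs))
            for d in range(dim)]


def shift_embedding(new_attrs, user_embs, w_attr, w_user):
    """S-EG sketch: shift from attribute features and the average ID
    embedding of interacting users. Assumption: a linear generator."""
    udim = len(user_embs[0])
    avg_user = [sum(u[k] for u in user_embs) / len(user_embs)
                for k in range(udim)]
    return [sum(wa * x for wa, x in zip(w_attr[d], new_attrs))
            + sum(wu * x for wu, x in zip(w_user[d], avg_user))
            for d in range(len(w_attr))]


def meta_embedding(new_attrs, old_attrs, old_id_embs, user_embs, w_attr, w_user):
    """Final meta embedding: base embedding plus shift embedding."""
    base = base_embedding(new_attrs, old_attrs, old_id_embs)
    shift = shift_embedding(new_attrs, user_embs, w_attr, w_user)
    return [b + s for b, s in zip(base, shift)]


# Toy example: two old items with identical attributes, so each gets weight 0.5.
old_attrs = [[1.0, 0.0], [1.0, 0.0]]
old_id_embs = [[1.0, 2.0], [3.0, 4.0]]
user_embs = [[1.0, 1.0], [3.0, 1.0]]          # users who interacted with the new item
w_attr = [[0.0, 0.0], [0.0, 0.0]]             # hypothetical weights
w_user = [[0.5, 0.0], [0.0, 0.5]]             # hypothetical weights
emb = meta_embedding([1.0, 0.0], old_attrs, old_id_embs, user_embs, w_attr, w_user)
print(emb)
```

In a trained system the generator weights would be learned; here they are fixed by hand so the arithmetic is easy to follow.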

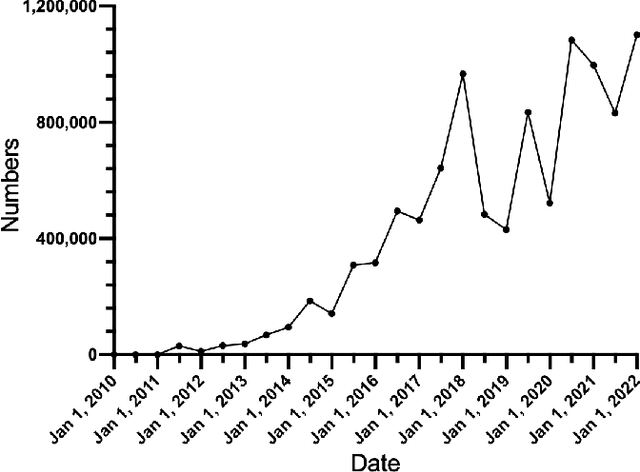

Who is Gambling? Finding Cryptocurrency Gamblers Using Multi-modal Retrieval Methods

Nov 27, 2022

Abstract:With the popularity of cryptocurrencies and the remarkable development of blockchain technology, decentralized applications emerged as a revolutionary force for the Internet. Meanwhile, decentralized applications have also attracted intense attention from the online gambling community, with more and more decentralized gambling platforms created with the help of smart contracts. Compared with conventional gambling platforms, decentralized gambling has transparent rules and a low participation threshold, attracting a substantial number of gamblers. In order to discover gambling behaviors and identify the contracts and addresses involved in gambling, we propose a tool termed ETHGamDet. The tool is able to automatically detect the smart contracts and addresses involved in gambling by scrutinizing the smart contract code and address transaction records. Interestingly, we present a novel LightGBM model with memory components, which possesses the ability to learn from its own misclassifications. As a side contribution, we construct and release a large-scale gambling dataset at https://github.com/AwesomeHuang/Bitcoin-Gambling-Dataset to facilitate future research in this field. Empirically, ETHGamDet achieves F1-scores of 0.72 and 0.89 in address classification and contract classification, respectively, and offers novel and interesting insights.

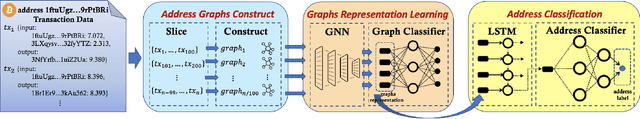

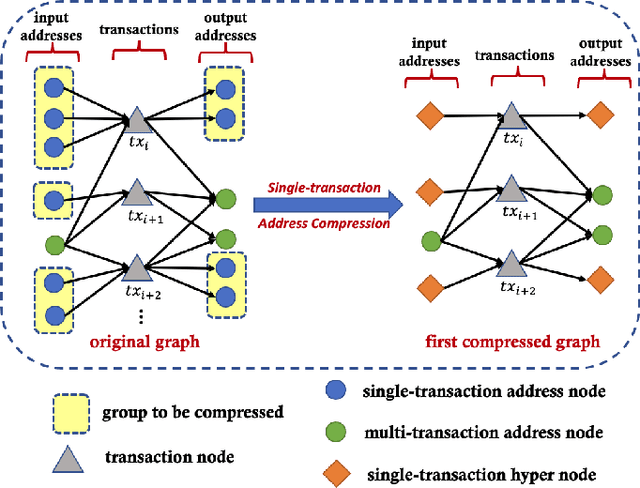

Demystifying Bitcoin Address Behavior via Graph Neural Networks

Nov 26, 2022

Abstract:Bitcoin is one of the decentralized cryptocurrencies powered by a peer-to-peer blockchain network. Parties who trade in the bitcoin network are not required to disclose any personal information. This anonymity, however, enables potentially malicious transactions to a certain extent. Indeed, various illegal activities such as money laundering, dark network trading, and gambling in the bitcoin network are nothing new. While a large body of work has been developed to identify malicious bitcoin transactions, the behavior analysis and classification of bitcoin addresses are largely overlooked by existing tools. In this paper, we propose BAClassifier, a tool that can automatically classify bitcoin addresses based on their behaviors. Technically, we come up with the following three key designs. First, we cast the transactions of a bitcoin address into an address graph structure, for which we introduce a graph node compression technique and a graph structure augmentation method to characterize a unified graph representation. Furthermore, we leverage a graph feature network to learn the graph representations of each address and generate the graph embeddings. Finally, we aggregate all graph embeddings of an address into the address-level representation, and employ a classification model to give the address behavior classification. As a side contribution, we construct and release a large-scale annotated dataset that consists of over 2 million real-world bitcoin addresses and concerns 4 types of address behaviors. Experimental results demonstrate that our proposed framework outperforms state-of-the-art bitcoin address classifiers and existing classification models, where the precision and F1-score are 96% and 95%, respectively. Our implementation and dataset are released, hoping to inspire others.

Poisoning Deep Learning based Recommender Model in Federated Learning Scenarios

Apr 26, 2022

Abstract:Various attack methods against recommender systems have been proposed in the past years, and the security issues of recommender systems have drawn considerable attention. Traditional attacks attempt to make target items recommended to as many users as possible by poisoning the training data. Benefiting from the feature of protecting users' private data, federated recommendation can effectively defend against such attacks. Therefore, quite a few works have been devoted to developing federated recommender systems. To show that current federated recommendation is still vulnerable, in this work we design attack approaches targeting deep learning based recommender models in federated learning scenarios. Specifically, our attacks generate poisoned gradients for manipulated malicious users to upload based on two strategies (i.e., random approximation and hard user mining). Extensive experiments show that our well-designed attacks can effectively poison the target models, and the attack effectiveness sets the state-of-the-art.

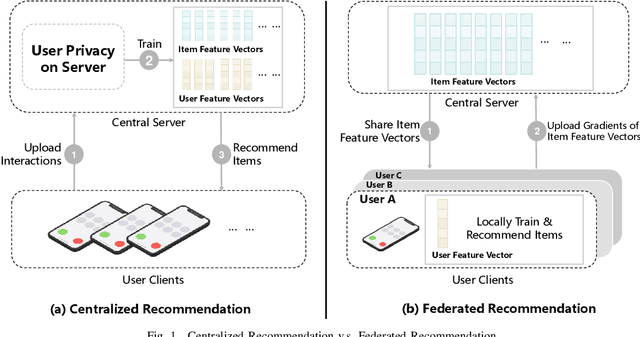

FedRecAttack: Model Poisoning Attack to Federated Recommendation

Apr 01, 2022

Abstract:Federated Recommendation (FR) has received considerable popularity and attention in the past few years. In FR, each user's feature vector and interaction data are kept locally on the user's own client and are thus private to others. Without access to this information, most existing poisoning attacks against recommender systems or federated learning lose validity. Benefiting from this characteristic, FR is commonly considered fairly secure. However, we argue that security improvements in FR are still possible and necessary. To support this argument, in this paper we present FedRecAttack, a model poisoning attack on FR aiming to raise the exposure ratio of target items. In most recommendation scenarios, apart from private user-item interactions (e.g., clicks, watches and purchases), some interactions are public (e.g., likes, follows and comments). Motivated by this point, in FedRecAttack we make use of the public interactions to approximate users' feature vectors, so that the attacker can generate poisoned gradients accordingly and control malicious users to upload the poisoned gradients in a well-designed way. To evaluate the effectiveness and side effects of FedRecAttack, we conduct extensive experiments on three real-world datasets of different sizes from two completely different scenarios. Experimental results demonstrate that our proposed FedRecAttack achieves state-of-the-art effectiveness while its side effects are negligible. Moreover, even with a small proportion (3%) of malicious users and a small proportion (1%) of public interactions, FedRecAttack remains highly effective, which reveals that FR is more vulnerable to attack than is commonly assumed.

Smart Contract Vulnerability Detection: From Pure Neural Network to Interpretable Graph Feature and Expert Pattern Fusion

Jun 17, 2021

Abstract:Smart contracts hold digital coins worth billions of dollars, and their security issues have drawn extensive attention in the past years. For smart contract vulnerability detection, conventional methods rely heavily on fixed expert rules, leading to low accuracy and poor scalability. Recent deep learning approaches alleviate this issue but fail to encode useful expert knowledge. In this paper, we explore combining deep learning with expert patterns in an explainable fashion. Specifically, we develop automatic tools to extract expert patterns from the source code. We then cast the code into a semantic graph to extract deep graph features. Thereafter, the global graph feature and local expert patterns are fused to cooperate and approach the final prediction, while yielding their interpretable weights. Experiments are conducted on all available smart contracts with source code on two platforms, Ethereum and VNT Chain. Empirically, our system significantly outperforms state-of-the-art methods. Our code is released.
