Haonan Hu

Dual-Channel Reliable Breast Ultrasound Image Classification Based on Explainable Attribution and Uncertainty Quantification

Jan 08, 2024

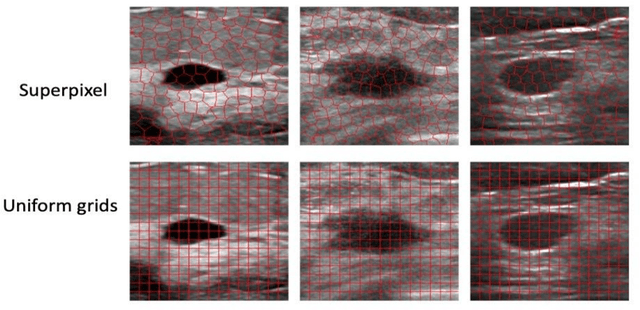

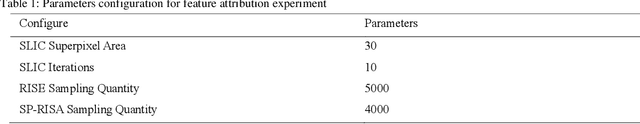

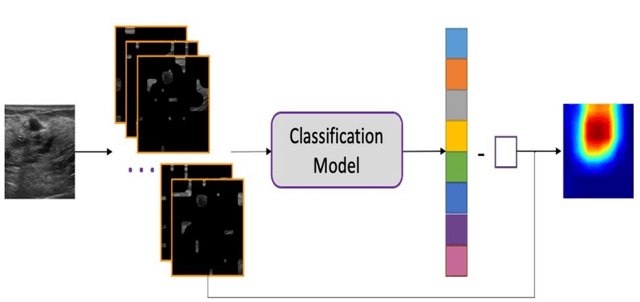

Abstract: This paper addresses the classification of breast ultrasound images and studies how to measure the reliability of the classification results. We propose a dual-channel evaluation framework built on two scores: inference reliability and predictive reliability. For inference reliability, we use human-aligned, doctor-agreed inference rationales derived from SP-RISA, an improved feature attribution algorithm. For predictive reliability, we apply uncertainty quantification via Test Time Enhancement. The effectiveness of the framework is verified on our clinical breast ultrasound dataset YBUS, and its robustness is verified on the public dataset BUSI. The expected calibration errors on both datasets are significantly lower than those of traditional evaluation methods, which demonstrates the effectiveness of the proposed reliability measurement.
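The expected calibration error mentioned above is commonly computed by grouping predictions into confidence bins and averaging the gap between accuracy and mean confidence in each bin. The following is a minimal sketch of that standard definition, assuming equal-width bins; the function name, the default bin count, and the toy inputs are illustrative and are not taken from the paper.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Standard binned ECE: sum over bins of (bin share) * |accuracy - confidence|.

    confidences: predicted probability of the chosen class, each in [0, 1].
    correct:     1 if the prediction was right, 0 otherwise.
    n_bins:      number of equal-width bins (an assumption of this sketch).
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("at least one prediction is required")

    conf_sum = [0.0] * n_bins  # summed confidence per bin
    hit_sum = [0.0] * n_bins   # number of correct predictions per bin
    for c, ok in zip(confidences, correct):
        if not 0.0 <= c <= 1.0:
            raise ValueError("confidence must lie in [0, 1]")
        # A confidence of exactly 1.0 is placed in the last bin.
        idx = min(int(c * n_bins), n_bins - 1)
        conf_sum[idx] += c
        hit_sum[idx] += ok

    # |hits - conf| / n equals (count / n) * |accuracy - mean confidence|.
    return sum(abs(h - c) for h, c in zip(hit_sum, conf_sum)) / n


# Toy example: four predictions at 0.9 confidence, three of them correct.
ece = expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 1, 1, 0])
```

A lower value means that the reported confidences track the observed accuracy more closely, which is the sense in which the abstract compares reliability measurements.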

On the performance of an integrated communication and localization system: an analytical framework

Sep 08, 2023

Abstract: Quantifying the performance bound of an integrated localization and communication (ILAC) system and the trade-off between communication and localization performance is critical. In this letter, we consider an ILAC system that can perform communication and localization via time-domain or frequency-domain resource allocation. We develop an analytical framework to derive the closed-form expression of the capacity loss versus localization Cramér-Rao lower bound (CRB) loss via time-domain and frequency-domain resource allocation. Simulation results validate the analytical model and demonstrate that frequency-domain resource allocation is preferable in scenarios with a smaller number of antennas at the next generation nodeB (gNB) and a larger distance between user equipment (UE) and gNB, while time-domain resource allocation is preferable in scenarios with a larger number of antennas and smaller distance between UE and the gNB.

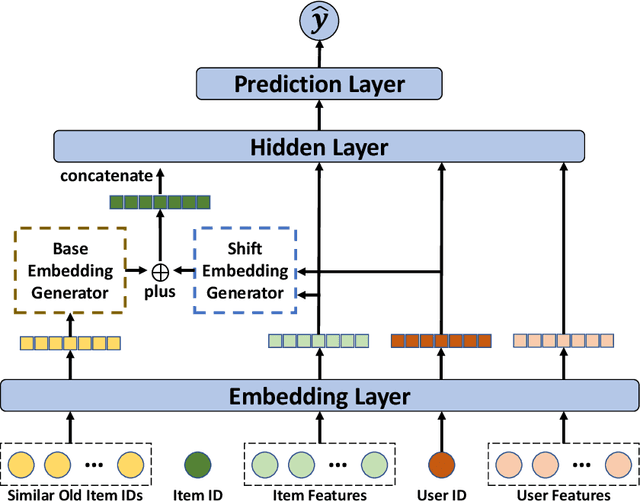

CoMeta: Enhancing Meta Embeddings with Collaborative Information in Cold-start Problem of Recommendation

Mar 14, 2023

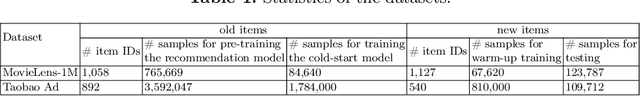

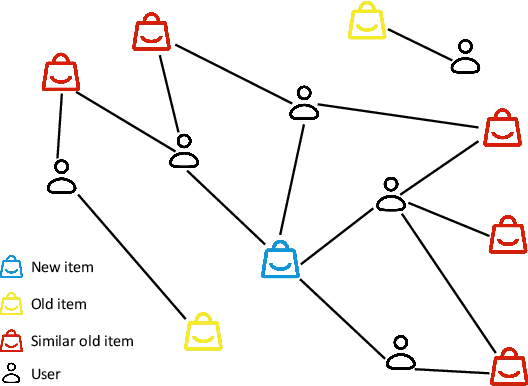

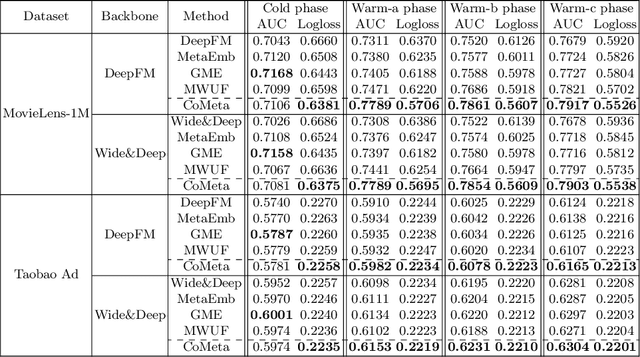

Abstract: The cold-start problem is challenging for existing recommendation models. Specifically, the ID embeddings of new items with only a few interactions are trained inadequately, which leads to poor recommendation performance. Some recent studies introduce meta learning to solve the cold-start problem by generating meta embeddings for new items as their initial ID embeddings. However, we argue that the capability of these methods is limited, because they mainly use item attribute features, which contain only limited information, and ignore the useful collaborative information contained in the ID embeddings of users and old items. To tackle this issue, we propose CoMeta, which enhances the meta embeddings with collaborative information. CoMeta consists of two submodules: B-EG and S-EG. For a new item, B-EG computes a similarity-based weighted sum of the ID embeddings of old items as its base embedding, and S-EG generates its shift embedding from both its attribute features and the average ID embedding of the users who interacted with it. The final meta embedding is obtained by adding the base embedding and the shift embedding. We conduct extensive experiments on two public datasets. The experimental results demonstrate both the effectiveness and the compatibility of CoMeta.
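The composition described in the abstract (base embedding plus shift embedding) can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not specify the similarity measure or the shift generator, so the cosine similarity over attribute vectors, the softmax weighting, and the single linear map `shift_weights` are hypothetical stand-ins, as are all function names.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (assumed similarity measure)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def softmax(scores):
    """Numerically stable softmax, used here to turn similarities into weights."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mean_vector(vectors):
    """Element-wise average of a non-empty list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def base_embedding(new_attrs, old_attrs, old_id_embs):
    """B-EG stand-in: similarity-weighted sum of the old items' ID embeddings."""
    weights = softmax([cosine(new_attrs, a) for a in old_attrs])
    return [sum(w * e for w, e in zip(weights, col)) for col in zip(*old_id_embs)]


def shift_embedding(new_attrs, user_id_embs, shift_weights):
    """S-EG stand-in: a linear map over [attributes ; average user ID embedding].

    In the paper this generator is learned; shift_weights is a hypothetical
    fixed matrix with one row per output dimension.
    """
    features = list(new_attrs) + mean_vector(user_id_embs)
    return [sum(w * f for w, f in zip(row, features)) for row in shift_weights]


def meta_embedding(base, shift):
    """Final meta embedding: element-wise sum of base and shift embeddings."""
    return [b + s for b, s in zip(base, shift)]


# Toy example with two old items and two interacting users.
base = base_embedding([1.0, 0.0], [[1.0, 0.0], [1.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]])
shift = shift_embedding([1.0, 0.0], [[0.0, 2.0], [2.0, 0.0]],
                        [[0.1, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.2]])
meta = meta_embedding(base, shift)
```

In the toy example both old items are equally similar to the new item, so the base embedding is simply their average, and the shift then adjusts it using the item's attributes and its users' embeddings.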

On the Performance of Data Compression in Clustered Fog Radio Access Networks

Jul 01, 2022

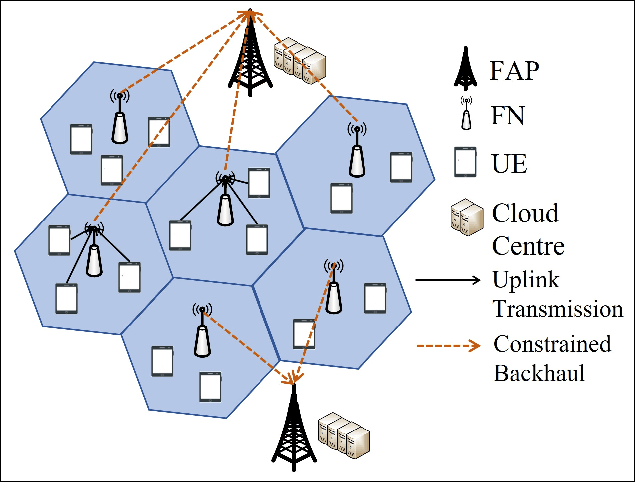

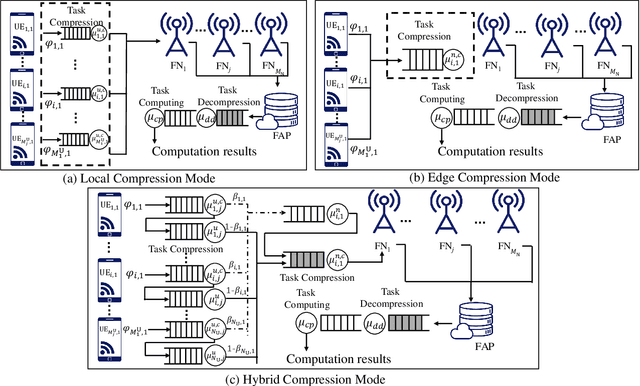

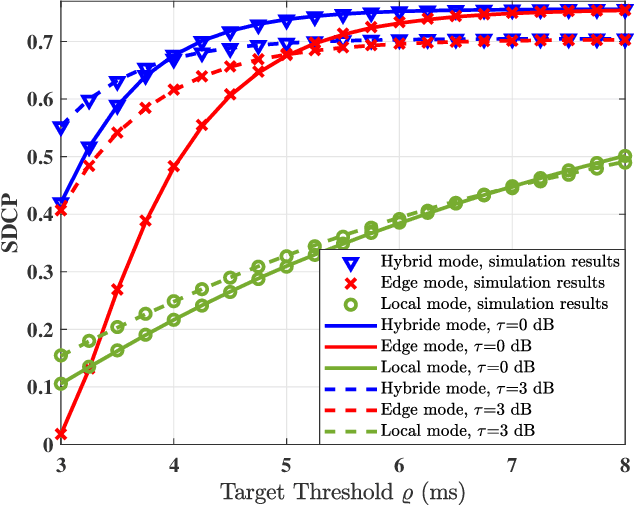

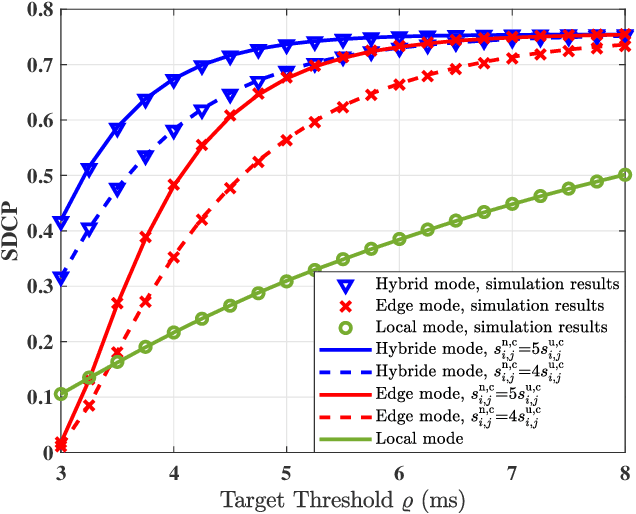

Abstract: The fog-radio-access-network (F-RAN) has been proposed to meet strict latency requirements by offloading computation tasks generated at user equipments (UEs) to the edge, which reduces the processing latency. However, offloading introduces task transmission latency, which may become the bottleneck in meeting latency requirements. Data compression (DC) is considered a promising technique for reducing the transmission latency. By compressing computation tasks before transmission, the transmission delay is reduced because less data is transmitted, and the original computation task can be recovered by data decompression (DD) at the edge nodes or the centre cloud. Nevertheless, DC and DD introduce extra processing latency, and the latency performance of the large-scale DC-enabled F-RAN has not been investigated. Therefore, in this work, the successful data compression probability (SDCP) is defined to analyse the latency performance of the F-RAN. Moreover, to analyse the effect of the compression offloading ratio (COR), a novel hybrid compression mode is proposed based on queueing theory. On this basis, a closed-form expression for the SDCP in the large-scale DC-enabled F-RAN is derived by combining the Matérn cluster process with the M/G/1 queueing model, and is validated by Monte Carlo simulations. Based on the derived SDCP results, the effects of the COR on the SDCP are analysed numerically. The results show that the SDCP with the optimal COR can be enhanced by up to 0.3 and 0.55 compared with compressing all computing tasks at the edge and at the UE, respectively. Moreover, for systems requiring minimal latency, the proposed hybrid compression mode can alleviate the requirement on the backhaul capacity.
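For readers unfamiliar with the M/G/1 model named in the abstract, the sketch below evaluates the standard Pollaczek-Khinchine formula for the mean queueing delay of an M/G/1 queue. It is not the paper's SDCP derivation, which is not given here; the function names and the example rates are illustrative assumptions.

```python
def mg1_mean_wait(arrival_rate, mean_service, second_moment_service):
    """Mean time spent waiting in queue for an M/G/1 system.

    Pollaczek-Khinchine: W_q = lambda * E[S^2] / (2 * (1 - rho)),
    with utilisation rho = lambda * E[S], which must be below 1.
    """
    rho = arrival_rate * mean_service
    if not 0.0 <= rho < 1.0:
        raise ValueError("queue is unstable: utilisation must satisfy 0 <= rho < 1")
    return arrival_rate * second_moment_service / (2.0 * (1.0 - rho))


def mg1_mean_sojourn(arrival_rate, mean_service, second_moment_service):
    """Mean total time in the system: queueing delay plus one service time."""
    wait = mg1_mean_wait(arrival_rate, mean_service, second_moment_service)
    return wait + mean_service


# Exponential service with rate mu = 2 (E[S] = 1/mu, E[S^2] = 2/mu^2) and
# arrival rate 1; this special case reduces to the M/M/1 sojourn time 1/(mu - lambda).
exp_sojourn = mg1_mean_sojourn(1.0, 0.5, 0.5)

# Deterministic service of 0.5 time units (E[S^2] = E[S]^2) at the same load.
det_wait = mg1_mean_wait(1.0, 0.5, 0.25)
```

Comparing the two cases shows why the service-time distribution matters: at equal load, the deterministic server halves the mean queueing delay relative to the exponential one.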
