Qingyu Li

SAM 3D for 3D Object Reconstruction from Remote Sensing Images

Dec 27, 2025

Abstract:Monocular 3D building reconstruction from remote sensing imagery is essential for scalable urban modeling, yet existing methods often require task-specific architectures and intensive supervision. This paper presents the first systematic evaluation of SAM 3D, a general-purpose image-to-3D foundation model, for monocular remote sensing building reconstruction. We benchmark SAM 3D against TRELLIS on samples from the NYC Urban Dataset, employing Fréchet Inception Distance (FID) and CLIP-based Maximum Mean Discrepancy (CMMD) as evaluation metrics. Experimental results demonstrate that SAM 3D produces more coherent roof geometry and sharper boundaries compared to TRELLIS. We further extend SAM 3D to urban scene reconstruction through a segment-reconstruct-compose pipeline, demonstrating its potential for urban scene modeling. We also analyze practical limitations and discuss future research directions. These findings provide practical guidance for deploying foundation models in urban 3D reconstruction and motivate future integration of scene-level structural priors.
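For readers unfamiliar with the FID metric named in the abstract, the sketch below computes the Fréchet distance between two Gaussians fitted to feature sets. It is a minimal illustration and not the paper's evaluation code: the function name `frechet_distance` is hypothetical, the features are assumed to be precomputed (FID conventionally takes them from an Inception network), and the trace of the matrix square root is obtained from eigenvalues rather than from a dedicated matrix square-root routine.

```python
import numpy as np

def frechet_distance(feats_a, feats_b):
    """Frechet distance between Gaussians fitted to two (n, d) feature arrays."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # tr(sqrt(cov_a @ cov_b)) equals the sum of square roots of the
    # eigenvalues of the product, which are real and non-negative for
    # covariance matrices; clip guards against tiny negative round-off.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_sqrt)
```

Lower values indicate that the two feature distributions are closer; identical feature sets give a distance of approximately zero.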

Kling-Omni Technical Report

Dec 18, 2025

Abstract:We present Kling-Omni, a generalist generative framework designed to synthesize high-fidelity videos directly from multimodal visual language inputs. Adopting an end-to-end perspective, Kling-Omni bridges the functional separation among diverse video generation, editing, and intelligent reasoning tasks, integrating them into a holistic system. Unlike disjointed pipeline approaches, Kling-Omni supports a diverse range of user inputs, including text instructions, reference images, and video contexts, processing them into a unified multimodal representation to deliver cinematic-quality and highly-intelligent video content creation. To support these capabilities, we constructed a comprehensive data system that serves as the foundation for multimodal video creation. The framework is further empowered by efficient large-scale pre-training strategies and infrastructure optimizations for inference. Comprehensive evaluations reveal that Kling-Omni demonstrates exceptional capabilities in in-context generation, reasoning-based editing, and multimodal instruction following. Moving beyond a content creation tool, we believe Kling-Omni is a pivotal advancement toward multimodal world simulators capable of perceiving, reasoning, generating and interacting with the dynamic and complex worlds.

FilmWeaver: Weaving Consistent Multi-Shot Videos with Cache-Guided Autoregressive Diffusion

Dec 12, 2025

Abstract:Current video generation models perform well at single-shot synthesis but struggle with multi-shot videos, facing critical challenges in maintaining character and background consistency across shots and flexibly generating videos of arbitrary length and shot count. To address these limitations, we introduce FilmWeaver, a novel framework designed to generate consistent, multi-shot videos of arbitrary length. First, it employs an autoregressive diffusion paradigm to achieve arbitrary-length video generation. To address the challenge of consistency, our key insight is to decouple the problem into inter-shot consistency and intra-shot coherence. We achieve this through a dual-level cache mechanism: a shot memory caches keyframes from preceding shots to maintain character and scene identity, while a temporal memory retains a history of frames from the current shot to ensure smooth, continuous motion. The proposed framework allows for flexible, multi-round user interaction to create multi-shot videos. Furthermore, due to this decoupled design, our method demonstrates high versatility by supporting downstream tasks such as multi-concept injection and video extension. To facilitate the training of our consistency-aware method, we also developed a comprehensive pipeline to construct a high-quality multi-shot video dataset. Extensive experimental results demonstrate that our method surpasses existing approaches on metrics for both consistency and aesthetic quality, opening up new possibilities for creating more consistent, controllable, and narrative-driven video content. Project Page: https://filmweaver.github.io
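The dual-level cache described in the abstract can be pictured as two bounded buffers. The sketch below is only a data-structure illustration under stated assumptions: the class name `DualLevelCache`, the capacities, the choice to clear the temporal memory at a shot boundary, and the way a keyframe is handed in are all hypothetical and are not taken from the FilmWeaver implementation.

```python
from collections import deque

class DualLevelCache:
    """Toy two-level memory: keyframes across shots, recent frames within a shot."""

    def __init__(self, max_keyframes=4, max_history=8):
        # Shot memory: keyframes from preceding shots (inter-shot consistency).
        self.shot_memory = deque(maxlen=max_keyframes)
        # Temporal memory: recent frames of the current shot (intra-shot coherence).
        self.temporal_memory = deque(maxlen=max_history)

    def add_frame(self, frame):
        """Record a newly generated frame of the current shot."""
        self.temporal_memory.append(frame)

    def end_shot(self, keyframe):
        """Store a keyframe for the finished shot and reset the frame history (assumed policy)."""
        self.shot_memory.append(keyframe)
        self.temporal_memory.clear()

    def context(self):
        """Return (keyframes, recent frames) that a generator could condition on."""
        return list(self.shot_memory), list(self.temporal_memory)
```

In an autoregressive loop, `context()` would be queried before each generation step, so that both earlier shots and the latest frames of the current shot are available as conditioning.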

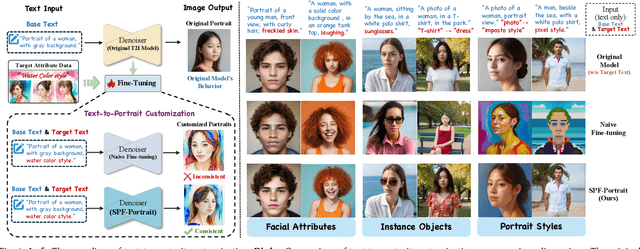

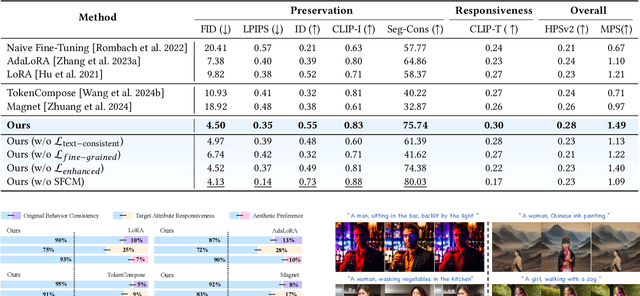

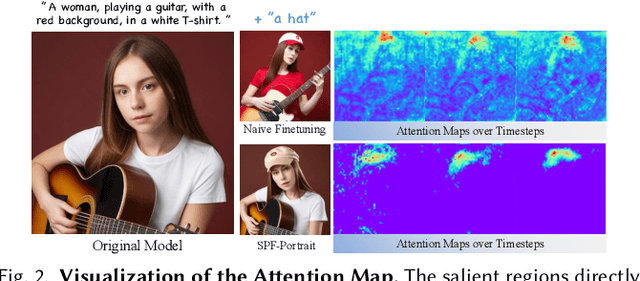

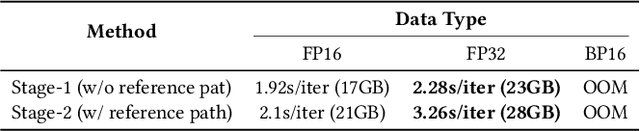

SPF-Portrait: Towards Pure Portrait Customization with Semantic Pollution-Free Fine-tuning

Apr 01, 2025

Abstract:While fine-tuning pre-trained Text-to-Image (T2I) models on portrait datasets enables attribute customization, existing methods suffer from Semantic Pollution that compromises the original model's behavior and prevents incremental learning. To address this, we propose SPF-Portrait, a pioneering work to purely understand customized semantics while eliminating semantic pollution in text-driven portrait customization. In our SPF-Portrait, we propose a dual-path pipeline that introduces the original model as a reference for the conventional fine-tuning path. Through contrastive learning, we ensure adaptation to target attributes and purposefully align other unrelated attributes with the original portrait. We introduce a novel Semantic-Aware Fine Control Map, which represents the precise response regions of the target semantics, to spatially guide the alignment process between the contrastive paths. This alignment process not only effectively preserves the performance of the original model but also avoids over-alignment. Furthermore, we propose a novel response enhancement mechanism to reinforce the performance of target attributes, while mitigating representation discrepancy inherent in direct cross-modal supervision. Extensive experiments demonstrate that SPF-Portrait achieves state-of-the-art performance.

HumanAesExpert: Advancing a Multi-Modality Foundation Model for Human Image Aesthetic Assessment

Mar 31, 2025

Abstract:Image Aesthetic Assessment (IAA) is a long-standing and challenging research task. However, its subset, Human Image Aesthetic Assessment (HIAA), has been scarcely explored, even though HIAA is widely used in social media, AI workflows, and related domains. To bridge this research gap, our work pioneers a holistic implementation framework tailored for HIAA. Specifically, we introduce HumanBeauty, the first dataset purpose-built for HIAA, which comprises 108k high-quality human images with manual annotations. To achieve comprehensive and fine-grained HIAA, 50K human images are manually collected through a rigorous curation process and annotated leveraging our trailblazing 12-dimensional aesthetic standard, while the remaining 58K with overall aesthetic labels are systematically filtered from public datasets. Based on the HumanBeauty database, we propose HumanAesExpert, a powerful Vision Language Model for aesthetic evaluation of human images. We innovatively design an Expert head to incorporate human knowledge of aesthetic sub-dimensions while jointly utilizing the Language Modeling (LM) and Regression head. This approach empowers our model to achieve superior proficiency in both overall and fine-grained HIAA. Furthermore, we introduce a MetaVoter, which aggregates scores from all three heads, to effectively balance the capabilities of each head, thereby realizing improved assessment precision. Extensive experiments demonstrate that our HumanAesExpert models deliver significantly better performance in HIAA than other state-of-the-art models. Our datasets, models, and codes are publicly released to advance the HIAA community. Project webpage: https://humanaesexpert.github.io/HumanAesExpert/

Global OpenBuildingMap -- Unveiling the Mystery of Global Buildings

Apr 22, 2024

Abstract:Understanding how buildings are distributed globally is crucial to revealing the human footprint on our home planet. This built environment affects local climate, land surface albedo, resource distribution, and many other key factors that influence well-being and human health. Despite this, quantitative and comprehensive data on the distribution and properties of buildings worldwide is lacking. To this end, by using a big data analytics approach and nearly 800,000 satellite images, we generated the highest resolution and highest accuracy building map ever created: the Global OpenBuildingMap (Global OBM). A joint analysis of building maps and solar potentials indicates that rooftop solar energy can supply the global energy consumption need at a reasonable cost. Specifically, if solar panels were placed on the roofs of all buildings, they could supply 1.1-3.3 times -- depending on the efficiency of the solar device -- the global energy consumption in 2020, which is the year with the highest consumption on record. We also identified a clear geospatial correlation between building areas and key socioeconomic variables, which indicates our global building map can serve as an important input to modeling global socioeconomic needs and drivers.

AIO2: Online Correction of Object Labels for Deep Learning with Incomplete Annotation in Remote Sensing Image Segmentation

Mar 03, 2024

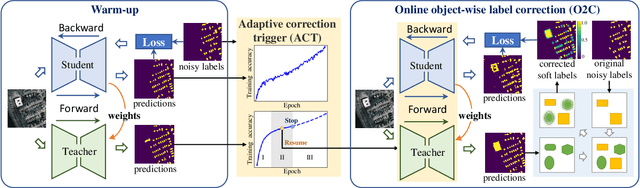

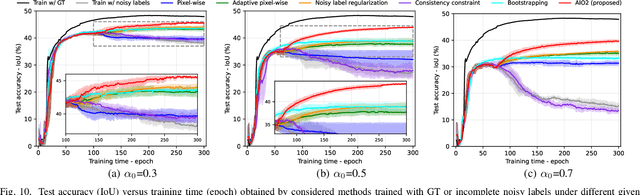

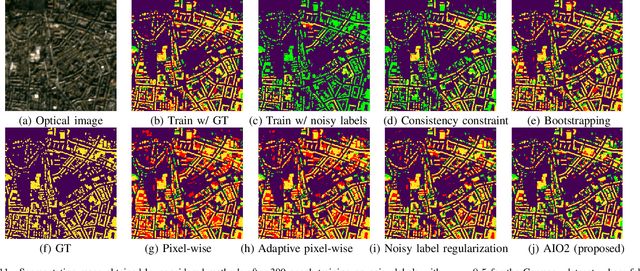

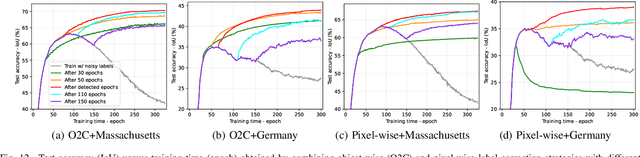

Abstract:While the volume of remote sensing data is increasing daily, deep learning in Earth Observation faces a lack of accurate annotations for supervised optimization. Crowdsourcing projects such as OpenStreetMap distribute the annotation load to their community. However, such annotation inevitably generates noise due to insufficient control of label quality, a lack of annotators, and frequent changes of the Earth's surface as a result of natural disasters and urban development, among many other factors. We present Adaptively trIggered Online Object-wise correction (AIO2) to address annotation noise induced by incomplete label sets. AIO2 features an Adaptive Correction Trigger (ACT) module that avoids label correction when the model training under- or overfits, and an Online Object-wise Correction (O2C) methodology that employs spatial information for automated label modification. AIO2 utilizes a mean teacher model to enhance training robustness with noisy labels, both to stabilize the training accuracy curve for fitting in ACT and to provide pseudo labels for correction in O2C. Moreover, O2C is implemented online without the need to store updated labels every training epoch. We validate our approach on two building footprint segmentation datasets with different spatial resolutions. Experimental results with varying degrees of building label noise demonstrate the robustness of AIO2. Source code will be available at https://github.com/zhu-xlab/AIO2.git.
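The mean teacher model mentioned in the abstract keeps a teacher whose weights are an exponential moving average (EMA) of the student's weights. The sketch below shows that update in isolation. It is not taken from the AIO2 repository: the function name `ema_update`, the dictionary-of-arrays weight representation, and the decay value are assumptions made for illustration.

```python
import numpy as np

def ema_update(teacher, student, decay=0.99):
    """Return new teacher weights as an EMA of the student weights.

    teacher, student: dicts mapping parameter names to NumPy arrays
    (a stand-in for real model parameters; assumed representation).
    """
    return {
        name: decay * teacher[name] + (1.0 - decay) * student[name]
        for name in teacher
    }
```

Because the teacher changes slowly, its predictions fluctuate less from step to step than the student's, which is why a teacher of this kind is commonly used as a source of pseudo labels when training with noisy annotations.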

Semi-Supervised Building Footprint Generation with Feature and Output Consistency Training

May 17, 2022

Abstract:Accurate and reliable building footprint maps are vital to urban planning and monitoring, and most existing approaches fall back on convolutional neural networks (CNNs) for building footprint generation. However, one limitation of these methods is that they require strong supervisory information from massive annotated samples for network learning. State-of-the-art semi-supervised semantic segmentation networks with consistency training can help to deal with this issue by leveraging a large amount of unlabeled data, which encourages the consistency of model output on data perturbation. Considering that rich information is also encoded in feature maps, we propose to integrate the consistency of both features and outputs in the end-to-end network training of unlabeled samples, which imposes additional constraints. Prior semi-supervised semantic segmentation networks have established the cluster assumption, in which the decision boundary should lie in the vicinity of low sample density. In this work, we observe that for building footprint generation, the low-density regions are more apparent at the intermediate feature representations within the encoder than at the encoder's input or output. Therefore, we propose an instruction to assign the perturbation to the intermediate feature representations within the encoder, which considers the spatial resolution of input remote sensing imagery and the mean size of individual buildings in the study area. The proposed method is evaluated on three datasets with different resolutions: Planet dataset (3 m/pixel), Massachusetts dataset (1 m/pixel), and Inria dataset (0.3 m/pixel). Experimental results show that the proposed approach extracts more complete building structures and alleviates omission errors.
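The idea of penalizing disagreement on both features and outputs for an unlabeled sample can be sketched as a two-term loss. This is only an illustration under stated assumptions: the abstract does not specify the distance measure or the weighting, so the mean squared error, the weights `w_feat` and `w_out`, and the function name `consistency_loss` are hypothetical choices.

```python
import numpy as np

def consistency_loss(feat_clean, feat_pert, out_clean, out_pert,
                     w_feat=1.0, w_out=1.0):
    """Weighted sum of feature-level and output-level consistency terms.

    Each pair holds the network's response to the same unlabeled sample
    without and with perturbation. MSE is an assumed distance measure.
    """
    feat_term = np.mean((feat_clean - feat_pert) ** 2)
    out_term = np.mean((out_clean - out_pert) ** 2)
    return float(w_feat * feat_term + w_out * out_term)
```

During semi-supervised training, a term of this kind would be added to the supervised loss computed on the labeled samples.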

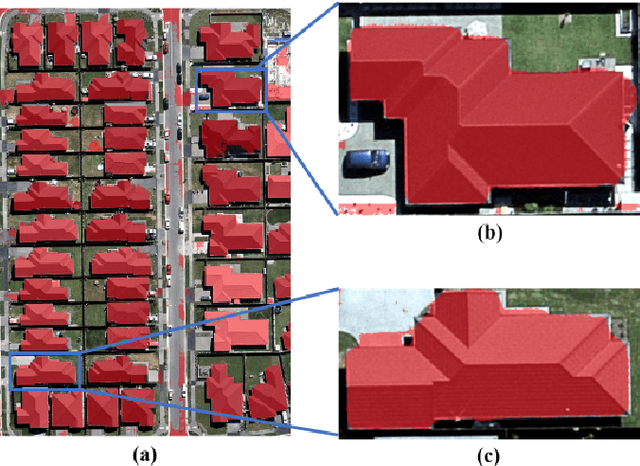

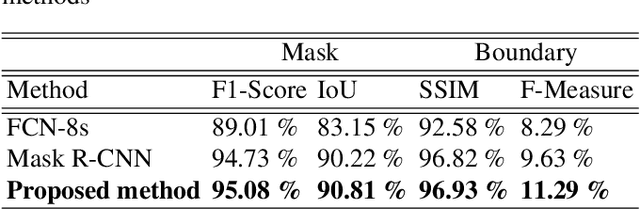

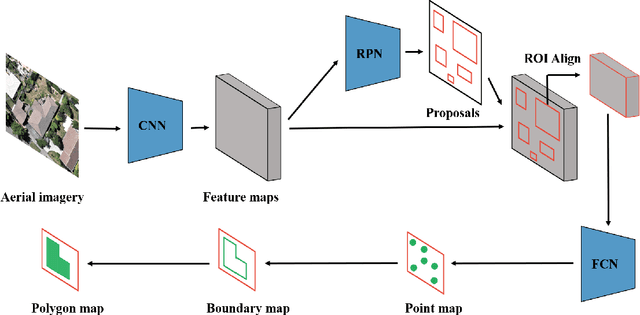

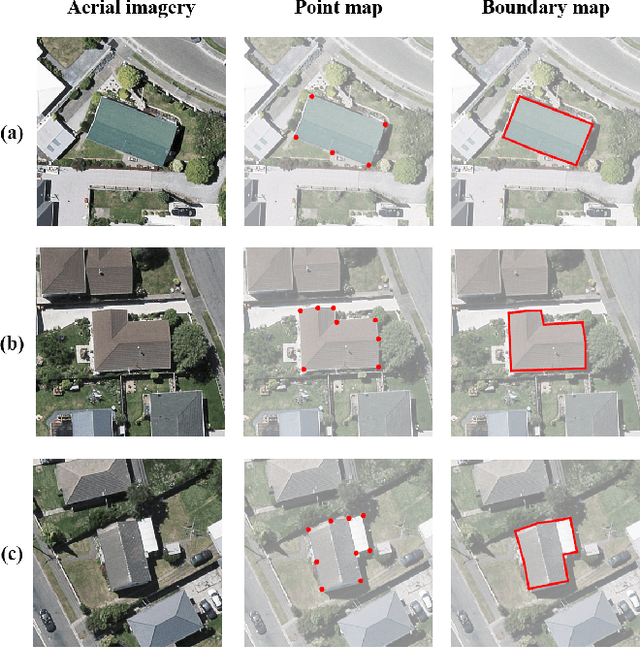

Instance segmentation of buildings using keypoints

Jun 06, 2020

Abstract:Building segmentation is of great importance in the task of remote sensing imagery interpretation. However, the existing semantic segmentation and instance segmentation methods often lead to segmentation masks with blurred boundaries. In this paper, we propose a novel instance segmentation network for building segmentation in high-resolution remote sensing images. More specifically, we consider segmenting an individual building as detecting several keypoints. The detected keypoints are subsequently reformulated as a closed polygon, which is the semantic boundary of the building. By doing so, the sharp boundary of the building could be preserved. Experiments are conducted on selected Aerial Imagery for Roof Segmentation (AIRS) dataset, and our method achieves better performance in both quantitative and qualitative results with comparison to the state-of-the-art methods. Our network is a bottom-up instance segmentation method that could well preserve geometric details.
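Turning detected keypoints into a closed polygon can be illustrated with a deliberately simple ordering rule. The sketch below sorts keypoints by angle around their centroid, which only works for convex or star-shaped footprints; it is a stand-in chosen for illustration, not the grouping procedure used in the paper, and the function names are hypothetical.

```python
import math

def keypoints_to_polygon(points):
    """Order (x, y) keypoints counter-clockwise around their centroid and close the ring."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    ordered = sorted(points, key=lambda p: math.atan2(p[1] - cy, p[0] - cx))
    return ordered + [ordered[0]]  # repeat the first vertex to close the polygon

def polygon_area(ring):
    """Area of a closed ring via the shoelace formula."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(ring, ring[1:]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0
```

Because the polygon edges are straight segments between keypoints, the building outline stays sharp, unlike a boundary traced from a per-pixel mask.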

Building Footprint Generation by Integrating Convolution Neural Network with Feature Pairwise Conditional Random Field (FPCRF)

Feb 11, 2020

Abstract:Building footprint maps are vital to many remote sensing applications, such as 3D building modeling, urban planning, and disaster management. Due to the complexity of buildings, the accurate and reliable generation of the building footprint from remote sensing imagery is still a challenging task. In this work, an end-to-end building footprint generation approach that integrates a convolution neural network (CNN) and a graph model is proposed. The CNN serves as the feature extractor, while the graph model takes spatial correlation into consideration. Moreover, we propose to implement the feature pairwise conditional random field (FPCRF) as a graph model to preserve sharp boundaries and fine-grained segmentation. Experiments are conducted on four different datasets: (1) Planetscope satellite imagery of the cities of Munich, Paris, Rome, and Zurich; (2) ISPRS benchmark data from the city of Potsdam; (3) Dstl Kaggle dataset; and (4) Inria Aerial Image Labeling data of Austin, Chicago, Kitsap County, Western Tyrol, and Vienna. It is found that the proposed end-to-end building footprint generation framework with the FPCRF as the graph model further improves the accuracy of building footprint generation over using only a CNN, which is the current state of the art.
