Pramod K. Varshney

Transmitter Subspace-Aware Target Detection in Two-Channel Passive Radars with Inter-Receiver Collaboration

Sep 16, 2025

Abstract:We address target detection in a single delay-Doppler cell using spatially distributed two-channel passive radars. An unknown illuminator of opportunity (IO) is assumed to emit a waveform lying in a known low-dimensional subspace (e.g., OFDM). Each receiver transforms its reference and surveillance signals onto the IO subspace after noise-whitening, to obtain cross-correlation (CC) measurements. To save bandwidth, receivers collaboratively exchange and linearly combine the CC outputs, and only a subset transmits them to a fusion center (FC) over a multiple-access channel (MAC). Collaboration weights are designed using the moments of the FC measurement to enhance detection performance.

Explainable AI for Radar Resource Management: Modified LIME in Deep Reinforcement Learning

Jun 26, 2025

Abstract:Deep reinforcement learning has been extensively studied in decision-making processes and has demonstrated superior performance over conventional approaches in various fields, including radar resource management (RRM). However, a notable limitation of neural networks is their "black box" nature, and recent research has increasingly focused on explainable AI (XAI) techniques to describe the rationale behind neural network decisions. One promising XAI method is local interpretable model-agnostic explanations (LIME). However, the sampling process in LIME ignores the correlations between features. In this paper, we propose a modified LIME approach that integrates deep learning (DL) into the sampling process, which we refer to as DL-LIME. We employ DL-LIME within deep reinforcement learning for radar resource management. Numerical results show that DL-LIME outperforms conventional LIME in terms of both fidelity and task performance. DL-LIME also provides insights into which factors are more important in decision making for radar resource management.

Learning-Based Resource Management in Integrated Sensing and Communication Systems

Jun 25, 2025

Abstract:In this paper, we tackle the task of adaptive time allocation in integrated sensing and communication systems equipped with radar and communication units. The dual-functional radar-communication system's task involves allocating dwell times for tracking multiple targets and utilizing the remaining time for data transmission towards estimated target locations. We introduce a novel constrained deep reinforcement learning (CDRL) approach, designed to optimize resource allocation between tracking and communication under time budget constraints, thereby enhancing target communication quality. Our numerical results demonstrate the efficiency of our proposed CDRL framework, confirming its ability to maximize communication quality in highly dynamic environments while adhering to time constraints.

One-bit Compressed Sensing using Generative Models

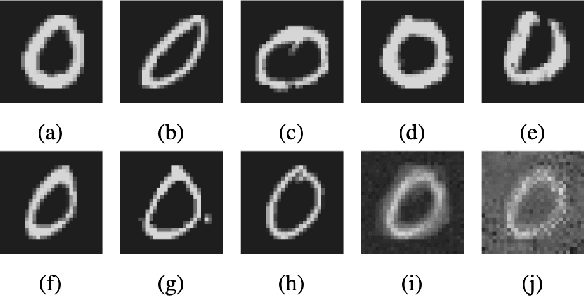

Feb 18, 2025

Abstract:This paper addresses the classical problem of one-bit compressed sensing using a deep learning-based reconstruction algorithm that leverages a trained generative model to enhance the signal reconstruction performance. The generator, a pre-trained neural network, learns to map from a low-dimensional latent space to a higher-dimensional set of sparse vectors. This generator is then used to reconstruct sparse vectors from their one-bit measurements by searching over its range. The presented algorithm provides excellent reconstruction performance because the generative model can learn additional structural information about the signal beyond sparsity. Furthermore, we provide theoretical guarantees on the reconstruction accuracy and sample complexity of the algorithm. Through numerical experiments using three publicly available image datasets, MNIST, Fashion-MNIST, and Omniglot, we demonstrate the superior performance of the algorithm compared to other existing algorithms and show that our algorithm can recover both the amplitude and the direction of the signal from one-bit measurements.
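For readers unfamiliar with the measurement model, the following is a minimal sketch of one-bit compressed sensing, assuming a Gaussian sensing matrix and noiseless sign measurements. It is not the generative-model algorithm described in the abstract: the estimator here is a generic back-projection baseline that recovers only the signal direction, and all names (`one_bit_measure`, `back_project`, the dimensions `n`, `m`, `k`) are illustrative choices.

```python
import math
import random


def one_bit_measure(A, x):
    """Return sign(Ax) with entries in {-1, +1} (zero is mapped to +1)."""
    return [1 if sum(a * v for a, v in zip(row, x)) >= 0 else -1 for row in A]


def back_project(A, y):
    """Unit-norm direction estimate proportional to A^T y (a simple baseline)."""
    n = len(A[0])
    est = [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(n)]
    norm = math.sqrt(sum(e * e for e in est))
    return [e / norm for e in est]


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv)


rng = random.Random(0)
n, m, k = 50, 500, 3  # signal length, number of measurements, sparsity (assumed)

# k-sparse signal with nonzero magnitudes in [1, 2) and random signs
x = [0.0] * n
for j in rng.sample(range(n), k):
    x[j] = rng.choice([-1.0, 1.0]) * (1.0 + rng.random())

A = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(m)]
y = one_bit_measure(A, x)   # only the signs of the projections are kept
x_hat = back_project(A, y)  # direction-only estimate
similarity = cosine(x, x_hat)
```

The cosine similarity between `x` and `x_hat` indicates how well the direction is recovered from signs alone; this baseline returns a unit-norm vector and makes no attempt to estimate amplitude.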

Harnessing the Power of Noise: A Survey of Techniques and Applications

Oct 08, 2024

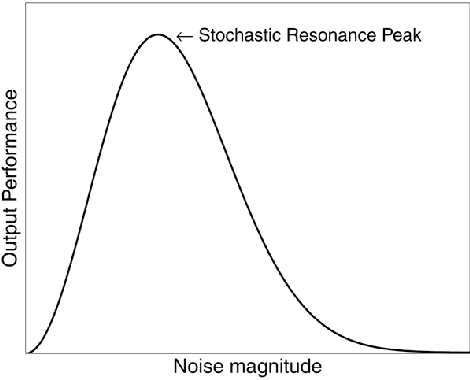

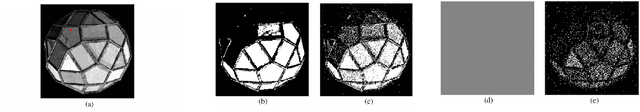

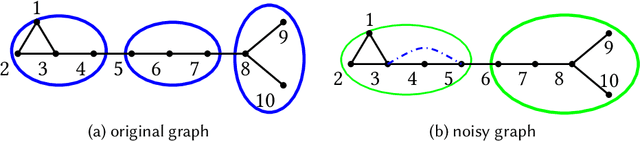

Abstract:Noise, traditionally considered a nuisance in computational systems, is reconsidered for its unexpected and counter-intuitive benefits across a wide spectrum of domains, including nonlinear information processing, signal processing, image processing, machine learning, network science, and natural language processing. Through a comprehensive review of both historical and contemporary research, this survey presents a dual perspective on noise, acknowledging its potential to both disrupt and enhance performance. Particularly, we highlight how noise-enhanced training strategies can lead to models that better generalize from noisy data, positioning noise not just as a challenge to overcome but as a strategic tool for improvement. This work calls for a shift in how we perceive noise, proposing that it can be a spark for innovation and advancement in the information era.

Interpretable Data Fusion for Distributed Learning: A Representative Approach via Gradient Matching

May 06, 2024

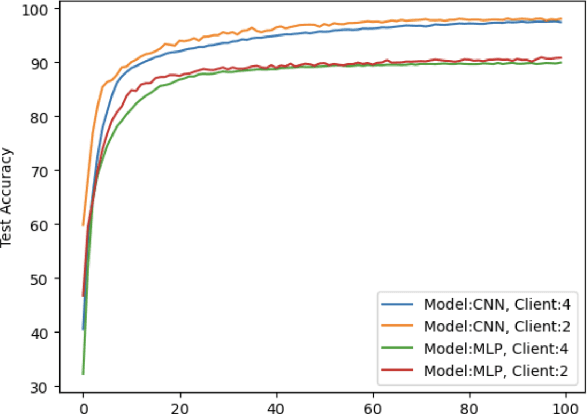

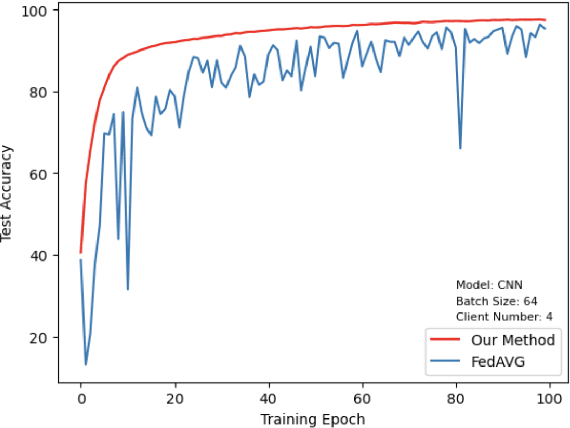

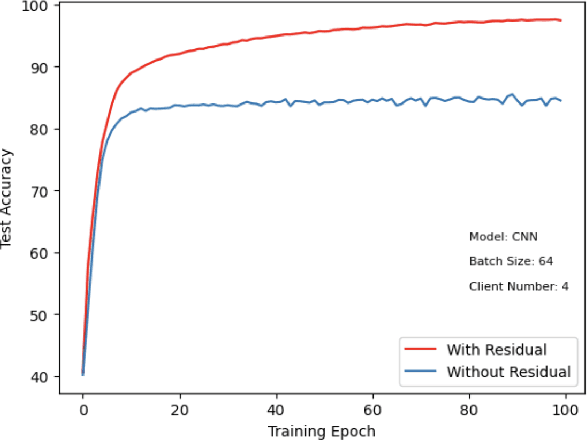

Abstract:This paper introduces a representative-based approach for distributed learning that transforms multiple raw data points into a virtual representation. Unlike traditional distributed learning methods such as Federated Learning, which do not offer human interpretability, our method makes complex machine learning processes accessible and comprehensible. It achieves this by condensing extensive datasets into digestible formats, thus fostering intuitive human-machine interactions. Additionally, this approach maintains privacy and communication efficiency, and it matches the training performance of models using raw data. Simulation results show that our approach is competitive with or outperforms traditional Federated Learning in accuracy and convergence, especially in scenarios with complex models and a higher number of clients. This framework marks a step forward in integrating human intuition with machine intelligence, which potentially enhances human-machine learning interfaces and collaborative efforts.

Anomaly Detection via Learning-Based Sequential Controlled Sensing

Nov 30, 2023

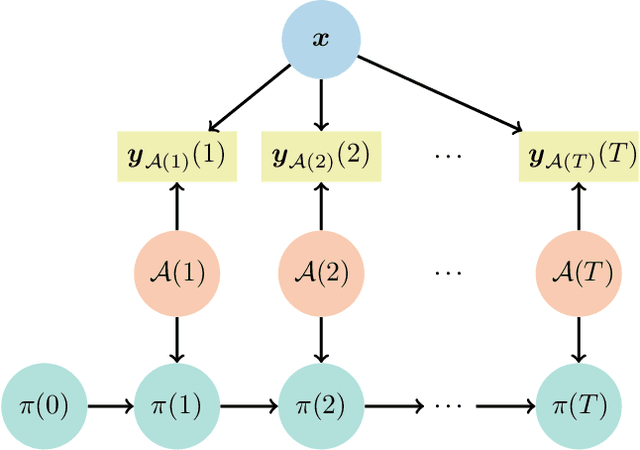

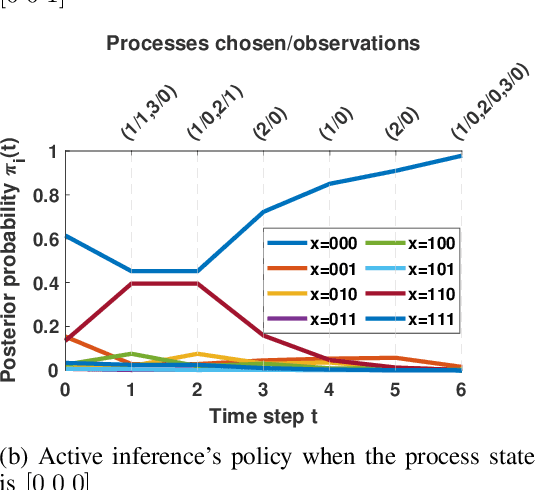

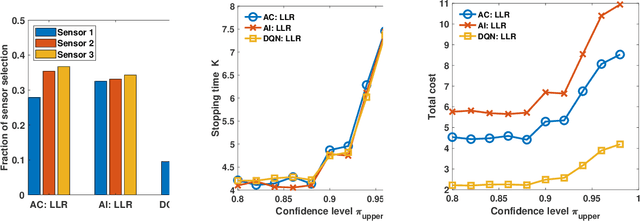

Abstract:In this paper, we address the problem of detecting anomalies among a given set of binary processes via learning-based controlled sensing. Each process is parameterized by a binary random variable indicating whether the process is anomalous. To identify the anomalies, the decision-making agent is allowed to observe a subset of the processes at each time instant. Also, probing each process has an associated cost. Our objective is to design a sequential selection policy that dynamically determines which processes to observe at each time, with the goal of minimizing both the decision delay and the total sensing cost. We cast this problem as a sequential hypothesis testing problem within the framework of Markov decision processes. This formulation utilizes both a Bayesian log-likelihood ratio-based reward and an entropy-based reward. The problem is then solved using two approaches: 1) a deep reinforcement learning-based approach where we design both deep Q-learning and policy gradient actor-critic algorithms; and 2) a deep active inference-based approach. Using numerical experiments, we demonstrate the efficacy of our algorithms and show that our algorithms adapt to any unknown statistical dependence pattern of the processes.

On Distributed and Asynchronous Sampling of Gaussian Processes for Sequential Binary Hypothesis Testing

Sep 14, 2023

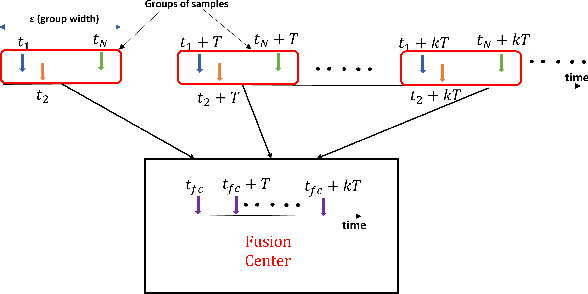

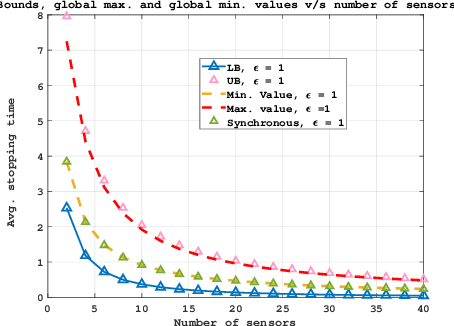

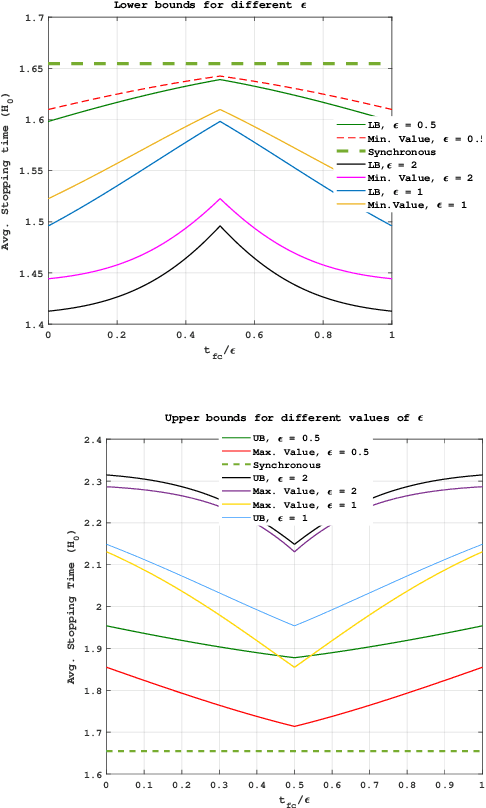

Abstract:In this work, we consider a binary sequential hypothesis testing problem with distributed and asynchronous measurements. The aim is to analyze the effect of sampling times of jointly wide-sense stationary (WSS) Gaussian observation processes at distributed sensors on the expected stopping time of the sequential test at the fusion center (FC). The distributed system is such that the sensors and the FC sample observations periodically, where the sampling times are not necessarily synchronous, i.e., the sampling times at different sensors and the FC may be different from each other. The sampling times, however, are restricted to be within a time window and a sample obtained within the window is assumed to be uncorrelated with samples outside the window. We also assume that correlations may exist only between the observations sampled at the FC and those at the sensors in a pairwise manner (sensor pairs not including the FC have independent observations). The effect of asynchronous sampling on the SPRT performance is analyzed by obtaining bounds for the expected stopping time. We illustrate the validity of the theoretical results with numerical results.
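As background for the SPRT mentioned above, here is a minimal sketch of Wald's sequential probability ratio test for a Gaussian mean shift. It assumes independent, synchronously sampled observations at a single node, which is much simpler than the distributed, asynchronous, correlated setting the abstract analyzes. The function name `sprt` and its parameters are illustrative, and the thresholds use Wald's standard approximations.

```python
import math


def sprt(samples, mu0, mu1, sigma, alpha, beta):
    """Wald's SPRT for H0: mean = mu0 versus H1: mean = mu1 (known sigma).

    alpha and beta are the target false-alarm and miss probabilities.
    Returns (decision, stopping_time); decision is "undecided" if the
    samples run out before either threshold is crossed.
    """
    upper = math.log((1.0 - beta) / alpha)   # cross above: decide H1
    lower = math.log(beta / (1.0 - alpha))   # cross below: decide H0
    llr = 0.0
    n = 0
    for n, x in enumerate(samples, start=1):
        # Log-likelihood ratio increment for one Gaussian observation
        llr += (mu1 - mu0) / sigma ** 2 * (x - (mu0 + mu1) / 2.0)
        if llr >= upper:
            return "H1", n
        if llr <= lower:
            return "H0", n
    return "undecided", n


# Deterministic toy inputs so the stopping times are easy to verify by hand
result_h1 = sprt([1.0] * 20, mu0=0.0, mu1=1.0, sigma=1.0, alpha=0.01, beta=0.01)
result_h0 = sprt([0.0] * 20, mu0=0.0, mu1=1.0, sigma=1.0, alpha=0.01, beta=0.01)
result_none = sprt([0.5] * 5, mu0=0.0, mu1=1.0, sigma=1.0, alpha=0.01, beta=0.01)
```

The stopping time returned by `sprt` is the quantity whose expectation the abstract bounds; in this toy version each observation moves the log-likelihood ratio by a fixed amount, so the test stops after the same number of samples under either hypothesis.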

On Gibbs Sampling Architecture for Labeled Random Finite Sets Multi-Object Tracking

Jun 27, 2023

Abstract:Gibbs sampling is one of the most popular Markov chain Monte Carlo algorithms because of its simplicity, scalability, and wide applicability within many fields of statistics, science, and engineering. In the labeled random finite sets literature, Gibbs sampling procedures have recently been applied to efficiently truncate the single-sensor and multi-sensor $\delta$-generalized labeled multi-Bernoulli posterior density as well as the multi-sensor adaptive labeled multi-Bernoulli birth distribution. However, only a limited discussion has been provided regarding key Gibbs sampler architecture details including the Markov chain Monte Carlo sample generation technique and early termination criteria. This paper begins with a brief background on Markov chain Monte Carlo methods and a review of the Gibbs sampler implementations proposed for labeled random finite sets filters. Next, we propose a short chain, multi-simulation sample generation technique that is well suited for these applications and enables a parallel processing implementation. Additionally, we present two heuristic early termination criteria that achieve similar sampling performance with substantially fewer Markov chain observations. Finally, the benefits of the proposed Gibbs samplers are demonstrated via two Monte Carlo simulations.
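To illustrate the general idea of drawing samples from many short, independent chains rather than one long chain, the sketch below runs a textbook Gibbs sampler on a toy bivariate Gaussian and keeps only the final state of each chain. This is an assumption-laden illustration, not the labeled random finite sets sampler or the termination criteria from the paper; the target distribution, chain length, and function names are all hypothetical choices.

```python
import math
import random


def gibbs_chain(rho, steps, rng):
    """Run one short Gibbs chain on a zero-mean, unit-variance bivariate
    Gaussian with correlation rho and return its final state."""
    x, y = 0.0, 0.0
    cond_std = math.sqrt(1.0 - rho * rho)
    for _ in range(steps):
        x = rng.gauss(rho * y, cond_std)  # sample x given y
        y = rng.gauss(rho * x, cond_std)  # sample y given x
    return x, y


def multi_chain_samples(rho, n_chains, steps, seed=0):
    """Collect one sample from each of n_chains independent short chains."""
    rng = random.Random(seed)
    return [gibbs_chain(rho, steps, rng) for _ in range(n_chains)]


def correlation(pairs):
    """Empirical Pearson correlation of a list of (x, y) pairs."""
    n = len(pairs)
    mx = sum(p[0] for p in pairs) / n
    my = sum(p[1] for p in pairs) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in pairs)
    sxx = sum((p[0] - mx) ** 2 for p in pairs)
    syy = sum((p[1] - my) ** 2 for p in pairs)
    return sxy / math.sqrt(sxx * syy)


samples = multi_chain_samples(rho=0.8, n_chains=400, steps=20)
corr = correlation(samples)
```

Because the chains do not share state, each call to `gibbs_chain` could be dispatched to a separate worker, which is the property that makes a multi-chain layout attractive for parallel processing.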

Distributed Quantized Detection of Sparse Signals Under Byzantine Attacks

Apr 27, 2023

Abstract:This paper investigates distributed detection of sparse stochastic signals with quantized measurements under Byzantine attacks. Under this type of attack, sensors in the network might send falsified data to degrade system performance. The Bernoulli-Gaussian (BG) distribution in terms of the sparsity degree of the stochastic signal is utilized for modeling the sparsity of signals. Several detectors with improved detection performance are proposed by incorporating the estimated attack parameters into the detection process. First, we propose the generalized likelihood ratio test with reference sensors (GLRTRS) and the locally most powerful test with reference sensors (LMPTRS) detectors with adaptive thresholds, given that the sparsity degree and the attack parameters are unknown. Our simulation results show that the LMPTRS and GLRTRS detectors outperform the LMPT and GLRT detectors proposed for an attack-free environment and are more robust against attacks. The proposed detectors can achieve detection performance close to that of the benchmark likelihood ratio test (LRT) detector, which has perfect knowledge of the attack parameters and sparsity degree. When the fraction of Byzantine nodes is assumed to be known, we can further improve the system's detection performance. We propose the enhanced LMPTRS (E-LMPTRS) and enhanced GLRTRS (E-GLRTRS) detectors by filtering out potential malicious sensors with the knowledge of the fraction of Byzantine nodes in the network. Simulation results show the superiority of the proposed enhanced detectors over the LMPTRS and GLRTRS detectors.
