Baocheng Geng

Full-Frequency Temporal Patching and Structured Masking for Enhanced Audio Classification

Aug 28, 2025

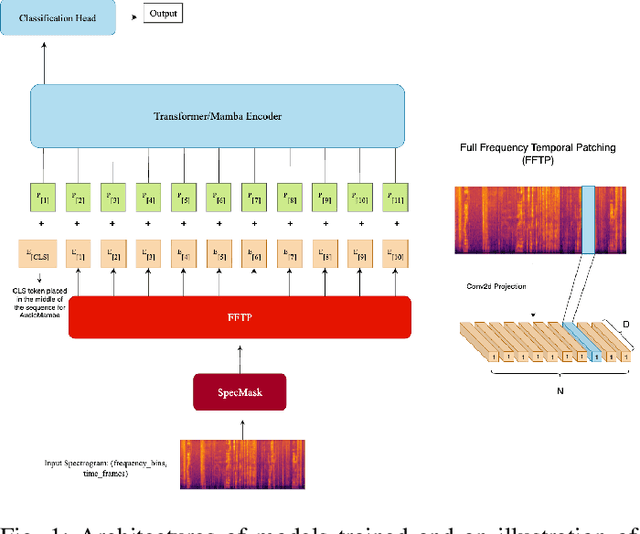

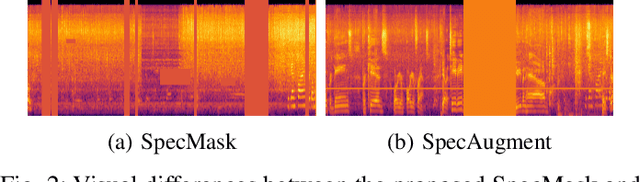

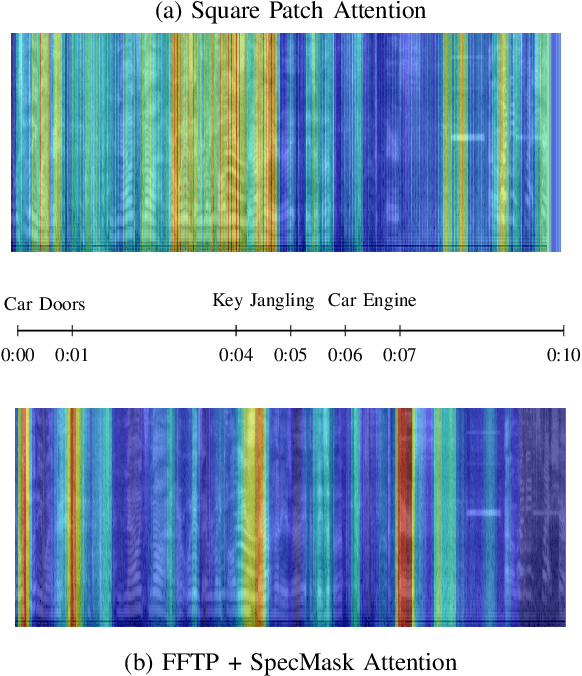

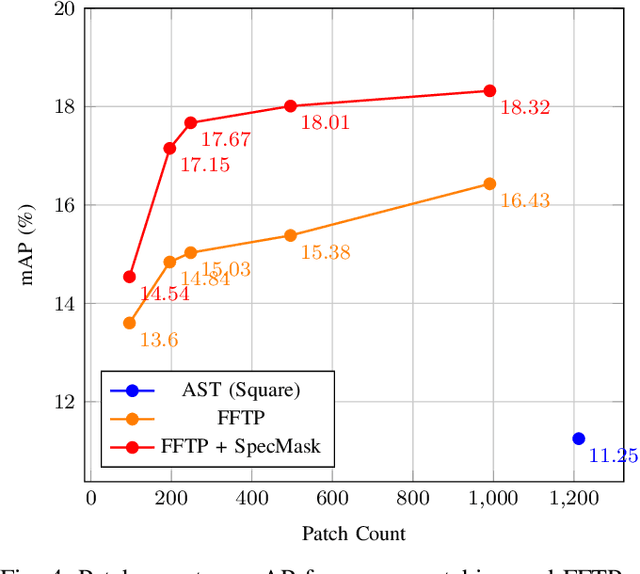

Abstract: Transformers and State-Space Models (SSMs) have advanced audio classification by modeling spectrograms as sequences of patches. However, existing models such as the Audio Spectrogram Transformer (AST) and Audio Mamba (AuM) adopt square patching from computer vision, which disrupts continuous frequency patterns and produces an excessive number of patches, slowing training and increasing computation. We propose Full-Frequency Temporal Patching (FFTP), a patching strategy that better matches the time-frequency asymmetry of spectrograms by spanning full frequency bands with localized temporal context, preserving harmonic structure and significantly reducing patch count and computation. We also introduce SpecMask, a patch-aligned spectrogram augmentation that combines full-frequency and localized time-frequency masks under a fixed masking budget, enhancing temporal robustness while preserving spectral continuity. When applied to both AST and AuM, our patching method with SpecMask improves mAP by up to +6.76 on AudioSet-18k and accuracy by up to +8.46 on SpeechCommandsV2, while reducing computation by up to 83.26%, demonstrating both performance and efficiency gains.
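
To make the patch-count argument concrete, the following is a minimal sketch contrasting square patches with patches that span the full frequency axis. The spectrogram size (128 x 1024), the patch size (16), the non-overlapping layout, and the function names are all illustrative assumptions, not the configuration reported in the paper.

```python
import numpy as np

def square_patches(spec, size=16):
    """Split a (freq, time) array into non-overlapping size x size patches.

    Assumption: no overlap between patches; edges that do not fill a
    whole patch are trimmed.
    """
    f, t = spec.shape
    f_trim, t_trim = f - f % size, t - t % size
    x = spec[:f_trim, :t_trim]
    x = x.reshape(f_trim // size, size, t_trim // size, size)
    # Bring the two grid axes together, then flatten the grid.
    return x.transpose(0, 2, 1, 3).reshape(-1, size, size)

def full_frequency_patches(spec, width=16):
    """Split a (freq, time) array into patches covering every frequency bin
    and `width` consecutive time frames (hypothetical width)."""
    f, t = spec.shape
    t_trim = t - t % width
    x = spec[:, :t_trim].reshape(f, t_trim // width, width)
    # Result has shape (num_patches, freq, width).
    return x.transpose(1, 0, 2)

# Illustrative input: 128 mel bins, 1024 time frames.
spec = np.arange(128 * 1024, dtype=np.float32).reshape(128, 1024)
sq = square_patches(spec, size=16)
ff = full_frequency_patches(spec, width=16)
print(sq.shape, ff.shape)
```

Under these assumed settings the square layout yields 512 patches and the full-frequency layout yields 64, each of which keeps all 128 frequency bins together.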

PFedDST: Personalized Federated Learning with Decentralized Selection Training

Feb 11, 2025

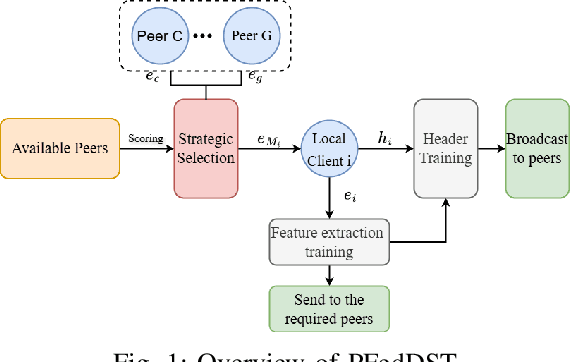

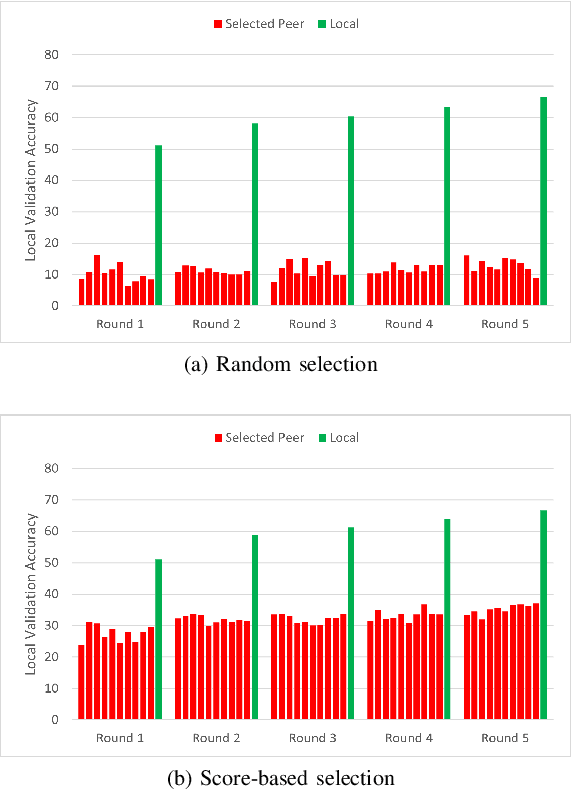

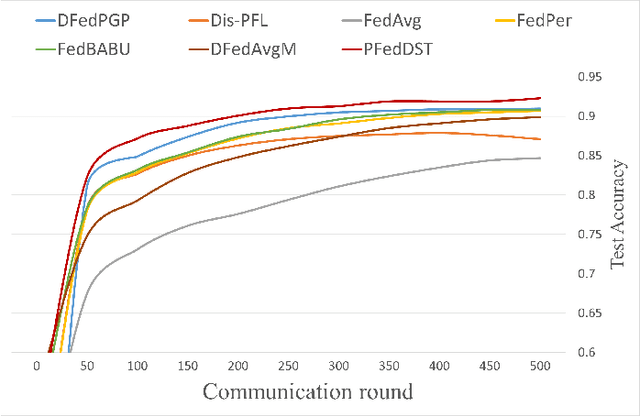

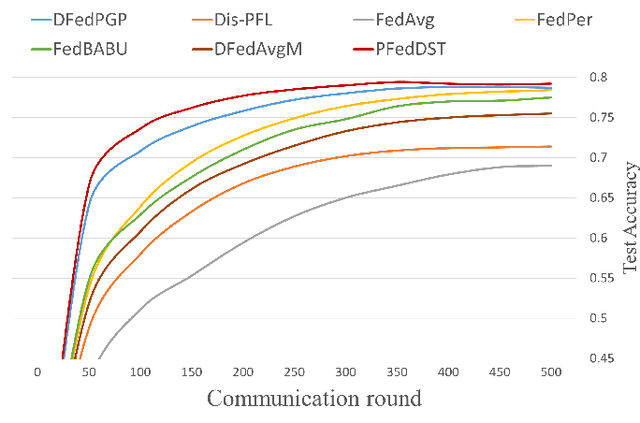

Abstract: Distributed Learning (DL) enables the training of machine learning models across multiple devices, yet it faces challenges like non-IID data distributions and device capability disparities, which can impede training efficiency. Communication bottlenecks further complicate traditional Federated Learning (FL) setups. To mitigate these issues, we introduce the Personalized Federated Learning with Decentralized Selection Training (PFedDST) framework. PFedDST enhances model training by allowing devices to strategically evaluate and select peers based on a comprehensive communication score. This score integrates loss, task similarity, and selection frequency, ensuring optimal peer connections. This selection strategy is tailored to increase local personalization and promote beneficial peer collaborations to strengthen the stability and efficiency of the training process. Our experiments demonstrate that PFedDST not only enhances model accuracy but also accelerates convergence. This approach outperforms state-of-the-art methods in handling data heterogeneity, delivering both faster and more effective training in diverse and decentralized systems.
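
The abstract names three ingredients of the communication score (loss, task similarity, selection frequency) but not how they are combined. The sketch below shows one possible peer-selection step under loudly stated assumptions: a linear combination, cosine similarity between parameter vectors as the task-similarity term, and negative signs on loss and selection count. None of these choices, nor the function names, are taken from the paper.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine similarity between two vectors; 0.0 if either has zero norm."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0

def communication_scores(own_vec, peer_vecs, peer_losses, select_counts,
                         weights=(1.0, 1.0, 1.0)):
    """Hypothetical score: reward similarity, penalize loss and over-selection.

    The weights and the signs are assumptions made for illustration only.
    """
    w_loss, w_sim, w_freq = weights
    scores = []
    for vec, loss, count in zip(peer_vecs, peer_losses, select_counts):
        sim = cosine_similarity(own_vec, vec)
        scores.append(w_sim * sim - w_loss * loss - w_freq * count)
    return np.array(scores)

def select_peers(scores, k):
    """Return the indices of the k highest-scoring peers."""
    return np.argsort(-scores, kind="stable")[:k]

own = np.array([1.0, 0.0])
peers = [np.array([1.0, 0.0]), np.array([0.0, 1.0]), np.array([1.0, 0.0])]
scores = communication_scores(own, peers, [0.5, 0.5, 0.5], [0, 0, 3])
chosen = select_peers(scores, k=2)
print(scores, chosen)
```

In this toy run the third peer is as similar as the first but has already been selected three times, so the assumed frequency penalty pushes it out of the top two.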

Measuring Heterogeneity in Machine Learning with Distributed Energy Distance

Jan 27, 2025

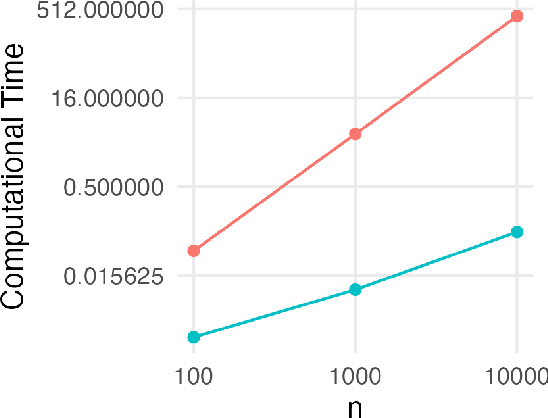

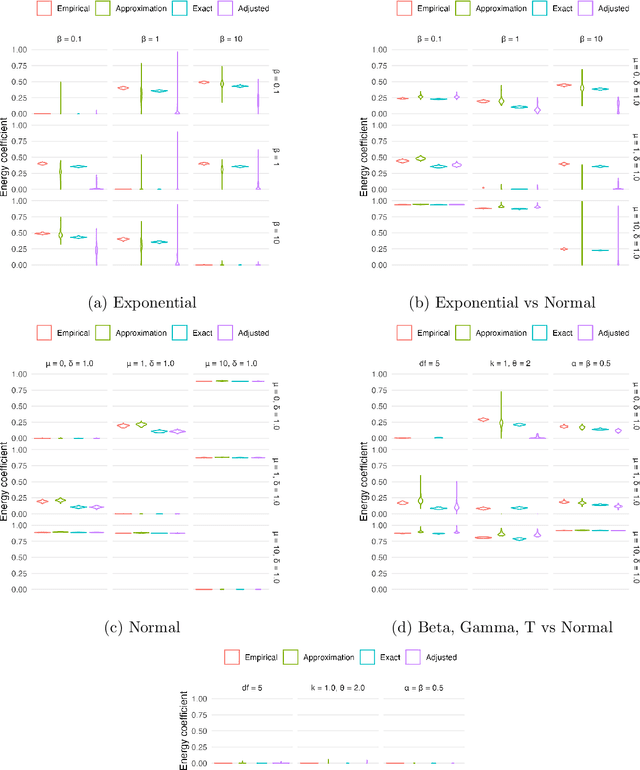

Abstract: In distributed and federated learning, heterogeneity across data sources remains a major obstacle to effective model aggregation and convergence. We focus on feature heterogeneity and introduce energy distance as a sensitive measure for quantifying distributional discrepancies. While we show that energy distance is robust for detecting data distribution shifts, its direct use in large-scale systems can be prohibitively expensive. To address this, we develop Taylor approximations that preserve key theoretical quantitative properties while reducing computational overhead. Through simulation studies, we show how accurately capturing feature discrepancies boosts convergence in distributed learning. Finally, we propose a novel application of energy distance to assign penalty weights for aligning predictions across heterogeneous nodes, ultimately enhancing coordination in federated and distributed settings.
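
For reference, the squared energy distance between two distributions is commonly written as 2E‖X − Y‖ − E‖X − X′‖ − E‖Y − Y′‖. The sketch below is a direct plug-in estimate of that quantity from two samples; its cost grows with the product of the sample sizes, which illustrates why a cheaper approximation is attractive. It is not the Taylor approximation developed in the paper, and the function names are illustrative.

```python
import numpy as np

def mean_pairwise_distance(a, b):
    """Average Euclidean distance over all pairs (row of a, row of b)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).mean()

def energy_distance_sq(x, y):
    """Plug-in estimate of the squared energy distance between samples.

    x and y are arrays of shape (n_samples, n_features). All pairs are
    used, including each point paired with itself.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return (2.0 * mean_pairwise_distance(x, y)
            - mean_pairwise_distance(x, x)
            - mean_pairwise_distance(y, y))

# Two point masses one unit apart, and a sample compared with itself.
a = np.array([[0.0], [0.0]])
b = np.array([[1.0], [1.0]])
print(energy_distance_sq(a, b), energy_distance_sq(a, a))
```

Comparing a sample with itself gives zero, and the value grows as the two feature distributions move apart.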

Interpretable Data Fusion for Distributed Learning: A Representative Approach via Gradient Matching

May 06, 2024

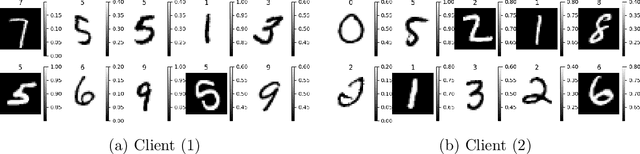

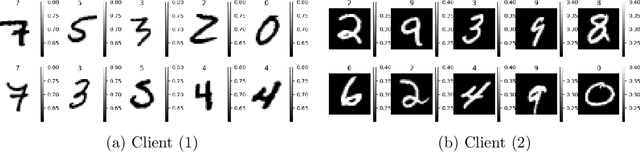

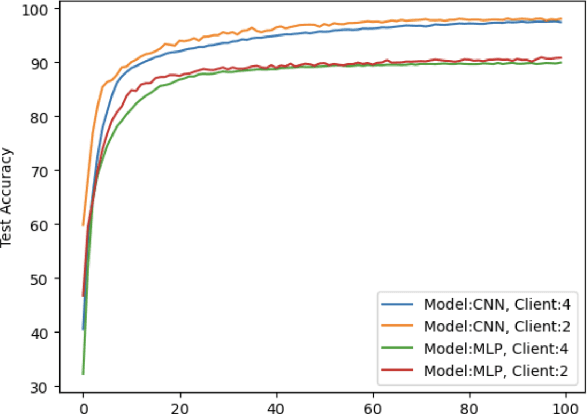

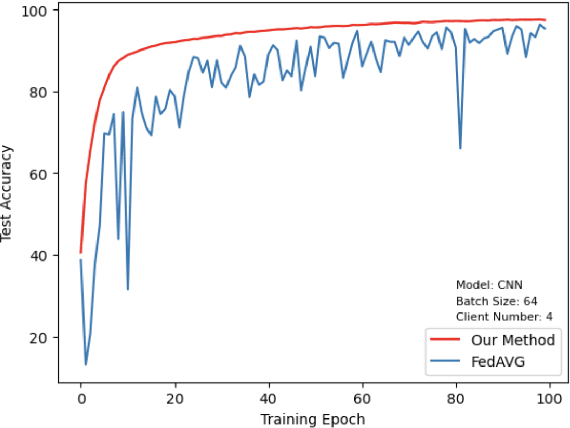

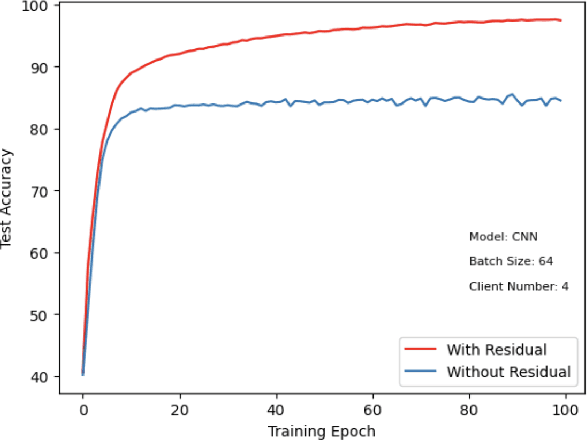

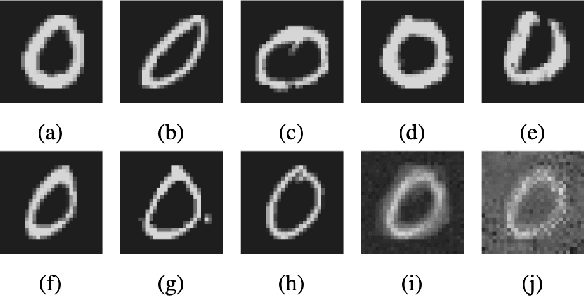

Abstract: This paper introduces a representative-based approach for distributed learning that transforms multiple raw data points into a virtual representation. Unlike traditional distributed learning methods such as Federated Learning, which do not offer human interpretability, our method makes complex machine learning processes accessible and comprehensible. It achieves this by condensing extensive datasets into digestible formats, thus fostering intuitive human-machine interactions. Additionally, this approach maintains privacy and communication efficiency, and it matches the training performance of models using raw data. Simulation results show that our approach is competitive with or outperforms traditional Federated Learning in accuracy and convergence, especially in scenarios with complex models and a higher number of clients. This framework marks a step forward in integrating human intuition with machine intelligence, which potentially enhances human-machine learning interfaces and collaborative efforts.

On Distributed and Asynchronous Sampling of Gaussian Processes for Sequential Binary Hypothesis Testing

Sep 14, 2023

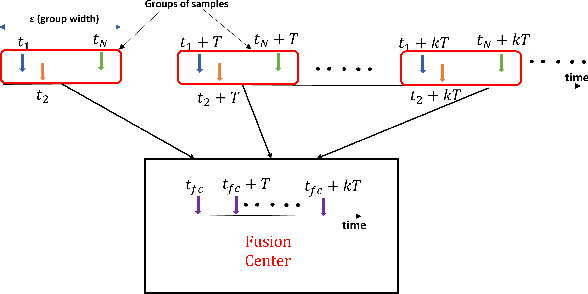

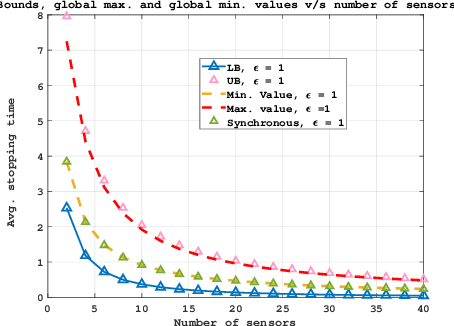

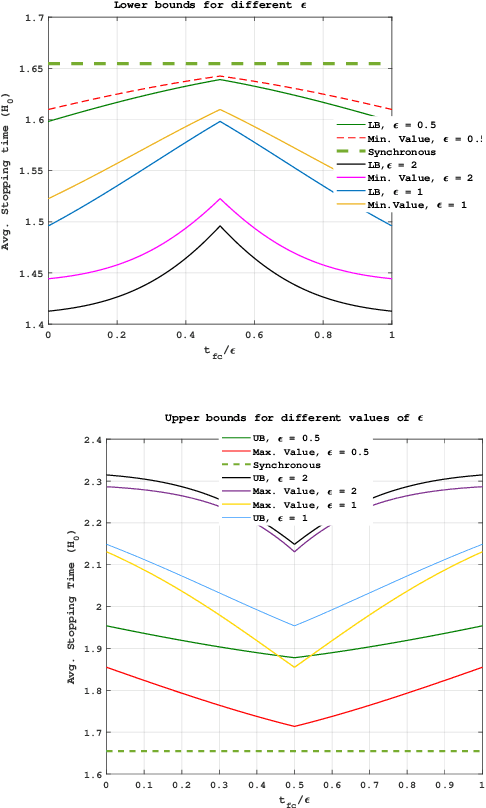

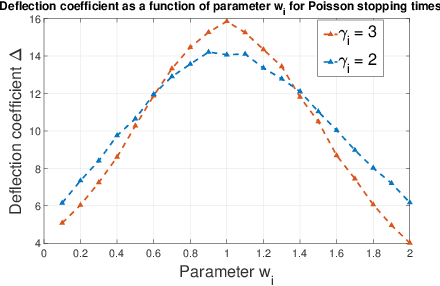

Abstract: In this work, we consider a binary sequential hypothesis testing problem with distributed and asynchronous measurements. The aim is to analyze the effect of sampling times of jointly wide-sense stationary (WSS) Gaussian observation processes at distributed sensors on the expected stopping time of the sequential test at the fusion center (FC). The distributed system is such that the sensors and the FC sample observations periodically, where the sampling times are not necessarily synchronous, i.e., the sampling times at different sensors and the FC may be different from each other. The sampling times, however, are restricted to be within a time window, and a sample obtained within the window is assumed to be uncorrelated with samples outside the window. We also assume that correlations may exist only between the observations sampled at the FC and those at the sensors in a pairwise manner (sensor pairs not including the FC have independent observations). The effect of asynchronous sampling on the performance of the sequential probability ratio test (SPRT) is analyzed by obtaining bounds for the expected stopping time. We illustrate the validity of the theoretical results with numerical results.
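
As background for the stopping-time analysis, the sketch below shows a textbook SPRT for a much simpler setting than the one in the paper: independent Gaussian samples at a single node, testing mean 0 against mean `mu1` with known standard deviation, using Wald's approximate thresholds. The independence assumption, the parameter names, and the default error rates are illustrative choices; the correlated, asynchronous model studied in the paper is not reproduced here.

```python
import math

def sprt_gaussian(samples, mu1, sigma, alpha=0.01, beta=0.01):
    """Wald's SPRT for H0: mean 0 versus H1: mean mu1 (i.i.d. Gaussian).

    alpha and beta are the target false-alarm and miss probabilities.
    Returns (decision, number_of_samples_used).
    """
    upper = math.log((1 - beta) / alpha)   # cross this: decide H1
    lower = math.log(beta / (1 - alpha))   # cross this: decide H0
    llr = 0.0
    for n, x in enumerate(samples, start=1):
        # Log-likelihood ratio of one sample under N(mu1, sigma^2) vs N(0, sigma^2).
        llr += (mu1 / sigma ** 2) * (x - mu1 / 2)
        if llr >= upper:
            return "H1", n
        if llr <= lower:
            return "H0", n
    return "undecided", len(samples)

print(sprt_gaussian([1.0] * 20, mu1=1.0, sigma=1.0))
print(sprt_gaussian([0.0] * 20, mu1=1.0, sigma=1.0))
```

The second element of the returned pair is the stopping time for that sample path, whose expectation is the quantity the paper bounds.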

Distributed Quantized Detection of Sparse Signals Under Byzantine Attacks

Apr 27, 2023

Abstract: This paper investigates distributed detection of sparse stochastic signals with quantized measurements under Byzantine attacks. Under this type of attack, sensors in the network might send falsified data to degrade system performance. The Bernoulli-Gaussian (BG) distribution in terms of the sparsity degree of the stochastic signal is utilized for modeling the sparsity of signals. Several detectors with improved detection performance are proposed by incorporating the estimated attack parameters into the detection process. First, we propose the generalized likelihood ratio test with reference sensors (GLRTRS) and the locally most powerful test with reference sensors (LMPTRS) detectors with adaptive thresholds, given that the sparsity degree and the attack parameters are unknown. Our simulation results show that the LMPTRS and GLRTRS detectors outperform the LMPT and GLRT detectors proposed for an attack-free environment and are more robust against attacks. The proposed detectors achieve detection performance close to that of the benchmark likelihood ratio test (LRT) detector, which has perfect knowledge of the attack parameters and sparsity degree. When the fraction of Byzantine nodes is assumed to be known, we can further improve the system's detection performance. We propose the enhanced LMPTRS (E-LMPTRS) and enhanced GLRTRS (E-GLRTRS) detectors, which filter out potentially malicious sensors using knowledge of the fraction of Byzantine nodes in the network. Simulation results show the superiority of the proposed enhanced detectors over the LMPTRS and GLRTRS detectors.

Sequential Processing of Observations in Human Decision-Making Systems

Jan 25, 2023

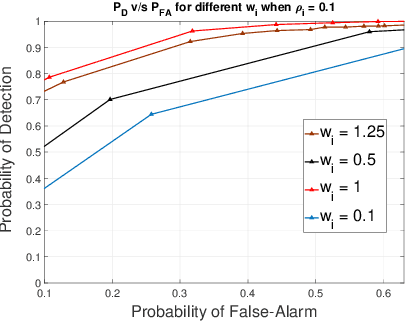

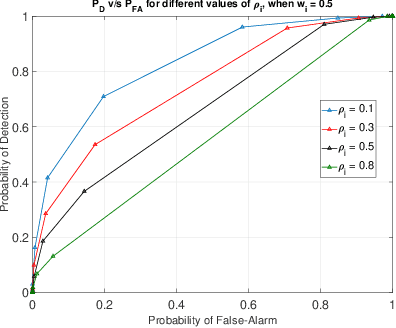

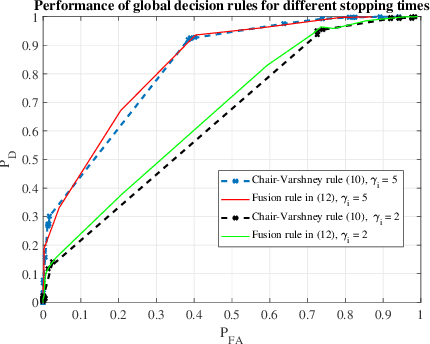

Abstract: In this work, we consider a binary hypothesis testing problem involving a group of human decision-makers. Due to the nature of human behavior, each human decision-maker observes the phenomenon of interest sequentially up to a random length of time. The humans use a belief model to accumulate the log-likelihood ratios until they cease observing the phenomenon. The belief model is used to characterize the perception of the human decision-maker towards observations at different instants of time, i.e., some decision-makers may assign greater importance to earlier observations than to later ones, and vice versa. The global decision-maker is a machine that fuses human decisions using the Chair-Varshney rule with different weights for the human decisions, where the weights are determined by the number of observations that were used by the humans to arrive at their respective decisions.
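
The Chair-Varshney rule fuses binary local decisions by summing log-likelihood weights that depend on each decision-maker's detection and false-alarm probabilities. The sketch below implements the standard form of the rule with those probabilities supplied directly as inputs; how the paper derives the weights from the number of observations each human used is not reproduced, and the numbers in the example are made up for illustration.

```python
import math

def chair_varshney(decisions, pd, pf, prior_h1=0.5):
    """Fuse binary decisions with the Chair-Varshney log-likelihood rule.

    decisions: local decisions (1 for H1, 0 for H0)
    pd, pf:    per-agent detection and false-alarm probabilities
               (assumed known here)
    Returns (global_decision, fusion_statistic).
    """
    stat = math.log(prior_h1 / (1 - prior_h1))
    for u, d, f in zip(decisions, pd, pf):
        if u == 1:
            stat += math.log(d / f)
        else:
            stat += math.log((1 - d) / (1 - f))
    return (1 if stat > 0 else 0), stat

# One reliable agent says H1; two weak agents say H0.
decision, stat = chair_varshney([1, 0, 0], pd=[0.9, 0.6, 0.6], pf=[0.1, 0.4, 0.4])
print(decision, stat)
```

In this example a simple majority vote would pick H0, while the weighted rule sides with the single more reliable decision-maker.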

Human-machine Hierarchical Networks for Decision Making under Byzantine Attacks

Jan 25, 2023

Abstract: This paper proposes a belief-updating scheme in a human-machine collaborative decision-making network to combat Byzantine attacks. A hierarchical framework is used to realize the network where local decisions from physical sensors act as reference decisions to improve the quality of human sensor decisions. During the decision-making process, the belief that each physical sensor is malicious is updated. The case when humans have side information available is investigated, and its impact is analyzed. Simulation results substantiate that the proposed scheme can significantly improve the quality of human sensor decisions, even when most physical sensors are malicious. Moreover, the performance of the proposed method does not necessarily depend on the knowledge of the actual fraction of malicious physical sensors. Consequently, the proposed scheme can effectively defend against Byzantine attacks and improve the quality of human sensors' decisions so that the performance of the human-machine collaborative system is enhanced.

Human-Machine Collaboration for Smart Decision Making: Current Trends and Future Opportunities

Jan 18, 2023

Abstract: The modeling of decision-making and control systems that include heterogeneous smart sensing devices (machines) as well as human agents as participants has recently become an important research area, owing to a wide variety of applications including autonomous driving, smart manufacturing, the internet of things, national security, and healthcare. To accomplish complex missions under uncertainty, it is imperative that we build novel human-machine collaboration structures to integrate the cognitive strengths of humans with the computational capabilities of machines in an intelligent manner. In this paper, we present an overview of the existing works on human decision making and human-machine collaboration within the scope of signal processing and information fusion. We review several application areas and research domains relevant to human-machine collaborative decision making. We also discuss current challenges and future directions in this problem domain.

Loss Attitude Aware Energy Management for Signal Detection

Jan 18, 2023

Abstract: This work considers a Bayesian signal processing problem where increasing the power of the probing signal may cause risks or undesired consequences. We employ a market-based approach to solve energy management problems for signal detection while balancing multiple objectives. In particular, the optimal amount of resource consumption is determined so as to maximize a profit-loss based expected utility function. Next, we study the human behavior of resource consumption while taking individuals' behavioral disparity into account. Unlike rational decision makers, who consume the amount of resource that maximizes the expected utility function, human decision makers act to maximize their subjective utilities. We employ prospect theory to model humans' loss aversion towards a risky event. The amount of resource consumption that maximizes the humans' subjective utility is derived to characterize the actual behavior of humans. It is shown that loss attitudes may lead the human to behave quite differently from a rational decision maker.
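
To illustrate how loss aversion separates a subjective utility from an expected utility, the sketch below uses the prospect-theory value function with the commonly cited Tversky-Kahneman parameter estimates (0.88 for both curvature exponents, 2.25 for the loss-aversion coefficient). Probability weighting is omitted, and the gamble, the function names, and the parameter choice are illustrative assumptions; the utility function used in the paper is not reproduced.

```python
def pt_value(x, alpha=0.88, beta=0.88, lam=2.25):
    """Prospect-theory value of an outcome x relative to a reference of 0.

    Gains are valued as x**alpha; losses are valued as -lam * (-x)**beta,
    so a loss weighs more than a gain of the same size when lam > 1.
    """
    return x ** alpha if x >= 0 else -lam * (-x) ** beta

def expected_value(outcomes, probs):
    """Expected value, as a risk-neutral rational agent would compute it."""
    return sum(p * x for x, p in zip(outcomes, probs))

def subjective_value(outcomes, probs):
    """Probability-weighted prospect-theory value (no probability distortion)."""
    return sum(p * pt_value(x) for x, p in zip(outcomes, probs))

# A fair gamble: win 100 or lose 100 with equal probability.
outcomes, probs = [100.0, -100.0], [0.5, 0.5]
print(expected_value(outcomes, probs), subjective_value(outcomes, probs))
```

The gamble has zero expected value, yet its subjective value under these parameters is negative, so a loss-averse decision maker would decline a bet that a risk-neutral one is indifferent to.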
