Pavan Chandra Konda

Multi-scale gigapixel microscopy using a multi-camera array microscope

Nov 30, 2022

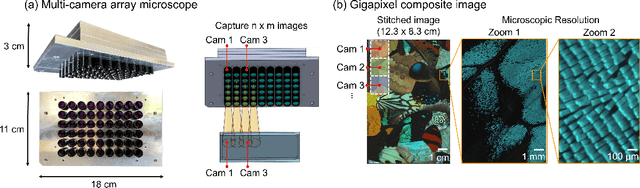

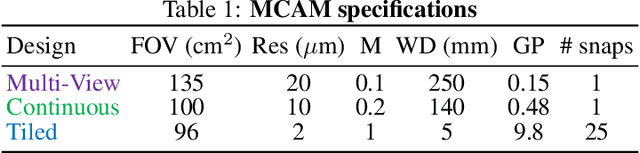

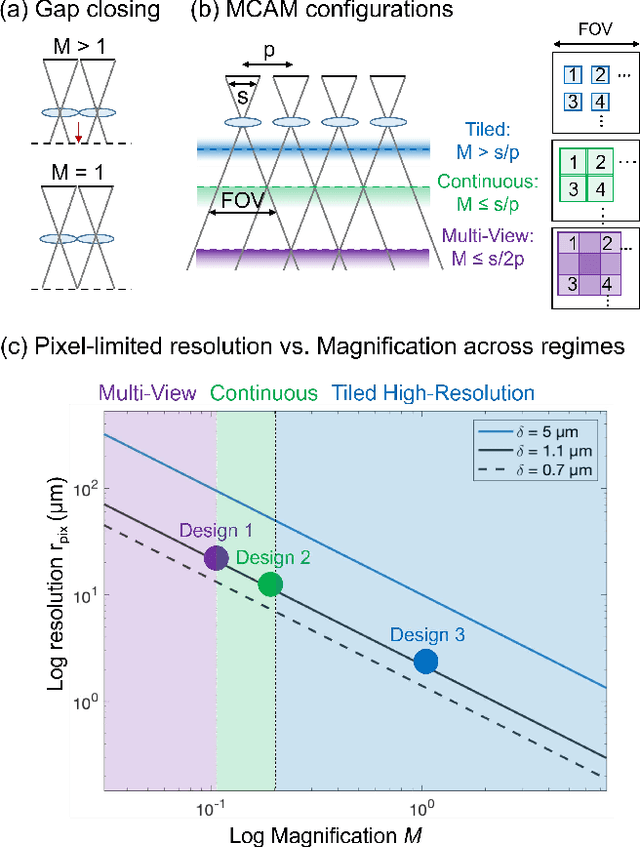

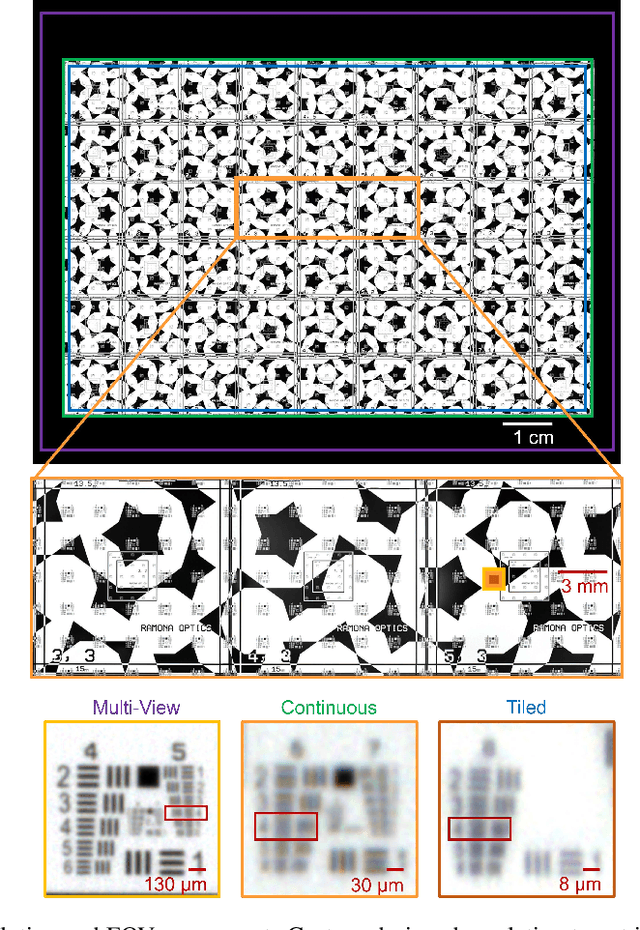

Abstract: This article experimentally examines different configurations of a novel multi-camera array microscope (MCAM) imaging technology. The MCAM is based upon a densely packed array of "micro-cameras" that jointly image a large field-of-view at high resolution. Each micro-camera within the array images a unique area of the sample of interest, and the data acquired by all 54 micro-cameras are then digitally combined into composite frames whose total pixel counts significantly exceed those of standard microscope systems. We present results from three distinct MCAM configurations for different use cases. First, we demonstrate a configuration that simultaneously images and estimates the 3D object depth across a 100 × 135 mm² field-of-view (FOV) at approximately 20 μm resolution, which results in 0.15 gigapixels (GP) per snapshot. Second, we demonstrate an MCAM configuration that records video across a continuous 83 × 123 mm² FOV with two-fold increased resolution (0.48 GP per frame). Finally, we report a third, high-resolution configuration (2 μm resolution) that can rapidly produce 9.8 GP composites of large histopathology specimens.
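The gigapixel figures above can be sanity-checked with a back-of-the-envelope sampling estimate. The sketch below assumes Nyquist-style sampling (an effective pixel pitch of half the stated resolution). It also takes "two-fold increased resolution" to mean roughly 10 μm. It ignores sensor layout and any overlap between micro-camera fields, so it only indicates the order of magnitude. The function name is hypothetical.

```python
def nyquist_gigapixels(width_mm: float, height_mm: float, resolution_um: float) -> float:
    """Pixels (in GP) needed to sample a width x height FOV at a given resolution.

    Assumption: effective pixel pitch = resolution / 2 (Nyquist sampling);
    overlap between neighbouring micro-cameras is ignored.
    """
    pixel_pitch_um = resolution_um / 2.0
    pixels_x = width_mm * 1000.0 / pixel_pitch_um
    pixels_y = height_mm * 1000.0 / pixel_pitch_um
    return pixels_x * pixels_y / 1e9


# Configuration 1: 100 x 135 mm^2 at ~20 um resolution
print(round(nyquist_gigapixels(100, 135, 20), 3))  # 0.135
# Configuration 2: 83 x 123 mm^2 at an assumed ~10 um resolution
print(round(nyquist_gigapixels(83, 123, 10), 3))   # 0.408
```

Both estimates land somewhat below the reported 0.15 GP and 0.48 GP. That is the expected direction for a minimum-sampling estimate, because it does not account for how the physical sensors tile the field.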

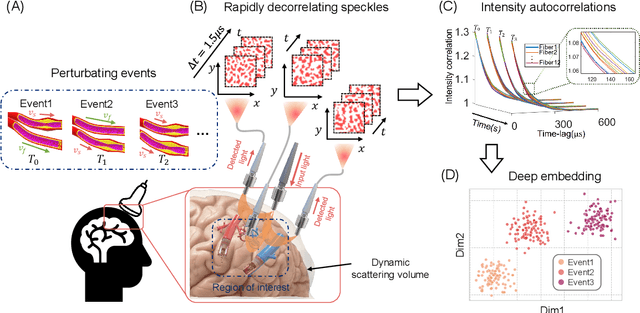

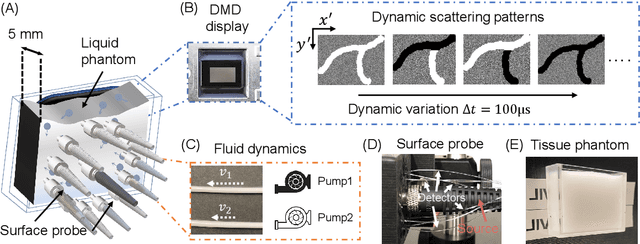

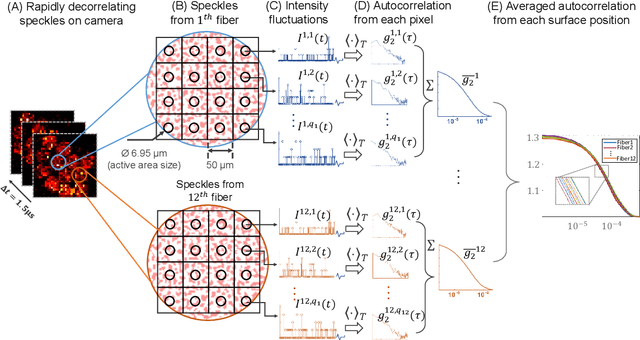

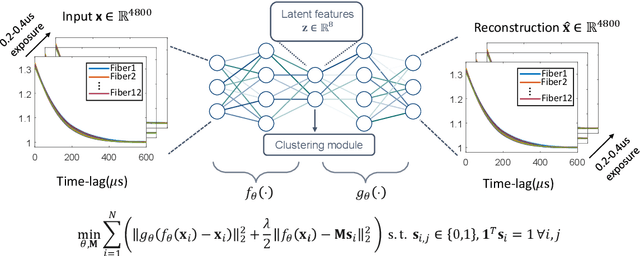

Transient motion classification through turbid volumes via parallelized single-photon detection and deep contrastive embedding

Apr 04, 2022

Abstract: Fast noninvasive probing of spatially varying decorrelating events, such as cerebral blood flow beneath the human skull, is an essential task in various scientific and clinical settings. One of the primary optical techniques used is diffuse correlation spectroscopy (DCS), whose classical implementation uses one or a few single-photon detectors, resulting in poor spatial localization accuracy and relatively low temporal resolution. Here, we propose a technique termed Classifying Rapid decorrelation Events via Parallelized single photon dEtection (CREPE). CREPE is a new form of DCS that can probe and classify different decorrelating movements hidden underneath a turbid volume with high sensitivity, using parallelized speckle detection from a $32\times32$ pixel single-photon avalanche diode (SPAD) array. We evaluate our setup by classifying different spatiotemporal decorrelation patterns hidden beneath a 5 mm tissue-like phantom made with rapidly decorrelating dynamic scattering media. Twelve multi-mode fibers collect scattered light from different positions on the surface of the tissue phantom. To validate our setup, we generate perturbed decorrelation patterns with both a digital micromirror device (DMD) modulated at multi-kilohertz rates and a vessel phantom containing flowing fluid. Combined with a deep contrastive learning algorithm that outperforms classic unsupervised learning methods, our approach can accurately detect and classify different transient decorrelation events (lasting 0.1-0.4 s) underneath turbid scattering media, without any data labeling. This approach could potentially be applied to noninvasively monitor deep tissue motion patterns, for example to identify normal or abnormal cerebral blood flow events, at multi-hertz rates within a compact, static detection probe.
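DCS works from the normalized intensity autocorrelation g2(τ) of detected speckle. A SPAD array such as the one above provides many independent speckle channels, and averaging g2 across them improves the signal-to-noise ratio. The sketch below shows this pixel-averaged estimator on binned photon counts. The binning, the symmetric normalization, and the function name are assumptions. CREPE's actual pipeline then passes such measurements to a contrastive network, which is not shown here.

```python
import numpy as np


def g2_from_counts(counts: np.ndarray, max_lag: int) -> np.ndarray:
    """Pixel-averaged normalized intensity autocorrelation g2(tau).

    counts: array of shape (n_pixels, n_time_bins) holding photon counts per bin.
    Returns g2 for lags 1..max_lag, in units of the bin width.
    """
    counts = np.asarray(counts, dtype=float)
    n_bins = counts.shape[1]
    g2 = np.empty(max_lag)
    for lag in range(1, max_lag + 1):
        a = counts[:, : n_bins - lag]  # n(t)
        b = counts[:, lag:]            # n(t + tau)
        # <n(t) n(t+tau)> / (<n(t)> <n(t+tau)>), evaluated separately per pixel
        per_pixel = (a * b).mean(axis=1) / (a.mean(axis=1) * b.mean(axis=1))
        g2[lag - 1] = per_pixel.mean()  # average over pixels to reduce noise
    return g2
```

For uncorrelated, constant-rate light (Poisson counts), g2 stays near 1 at every lag. A decorrelating speckle field instead shows g2 > 1 at short lags that decays toward 1. The decay rate is what carries the motion information.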

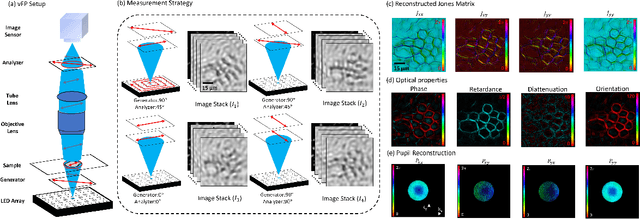

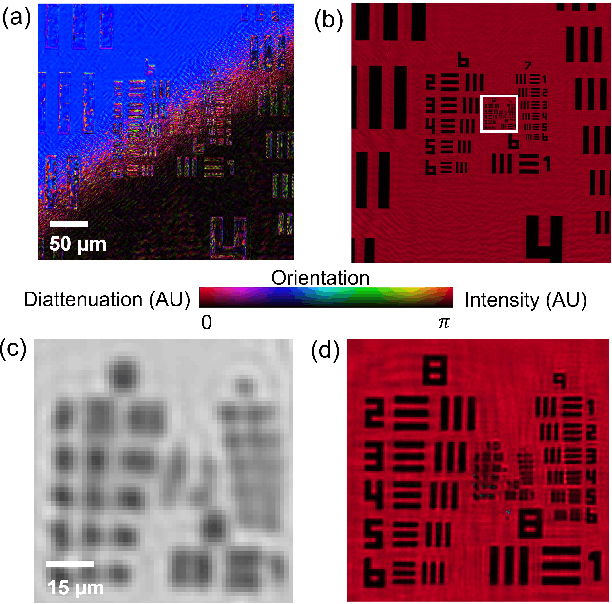

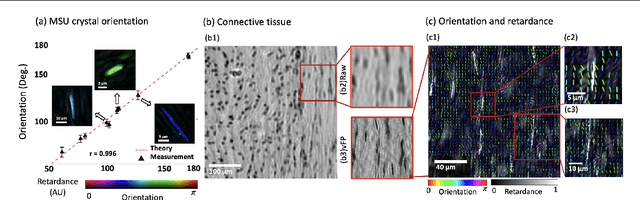

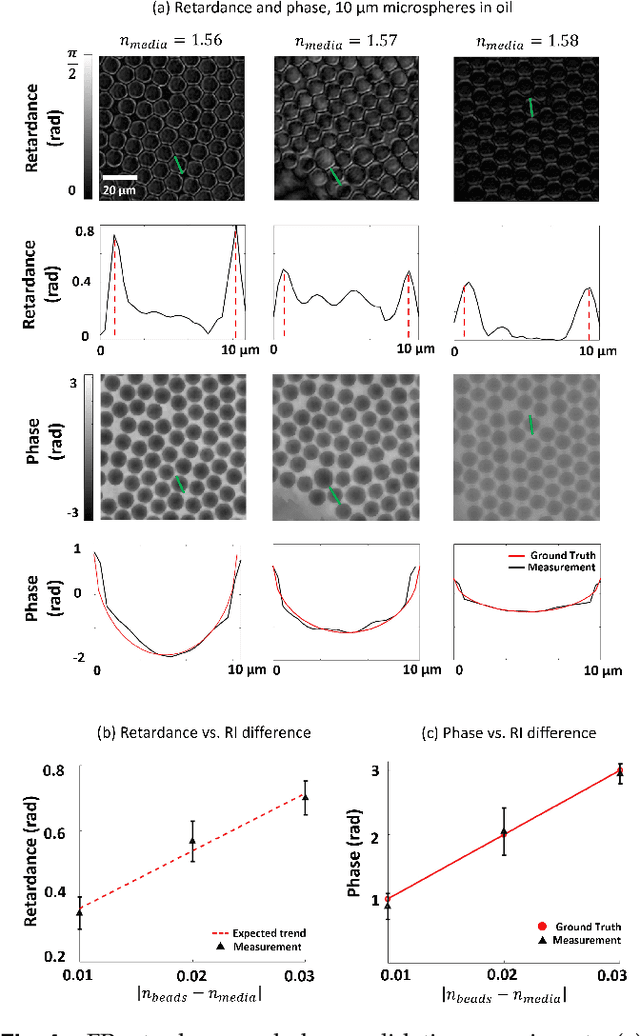

Quantitative Jones matrix imaging using vectorial Fourier ptychography

Oct 15, 2021

Abstract: This paper presents a microscopic imaging technique that uses variable-angle illumination to recover the complex polarimetric properties of a specimen at high resolution and over a large field-of-view. The approach extends Fourier ptychography, a synthetic-aperture imaging approach that improves resolution using phaseless measurements, to additionally account for the vectorial nature of light. After images are acquired using a standard microscope outfitted with an LED illumination array and two polarizers, our vectorial Fourier ptychography (vFP) algorithm solves for the complex 2x2 Jones matrix of the anisotropic specimen at each resolved spatial location. We introduce a new sequential Gauss-Newton-based solver that also jointly estimates and removes polarization-dependent imaging-system aberrations. We demonstrate effective vFP performance by generating large-area (29 mm$^2$), high-resolution (1.24 $\mu$m full-pitch) reconstructions of sample absorption, phase, orientation, diattenuation, and retardance for a variety of calibration samples and biological specimens.
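In vFP, the unknown at each resolved location is a complex 2x2 Jones matrix, probed through a polarizer and an analyzer. The sketch below is a single-pixel illustration using textbook Jones calculus. It ignores the microscope pupil, the angle-varied LED illumination, and the aberrations that vFP models. The retarder parameterization, the angle conventions, and the function names are assumptions, not the paper's formulation.

```python
import numpy as np


def linear_retarder(theta: float, delta: float) -> np.ndarray:
    """Jones matrix of a linear retarder: eigen-axis at angle theta, retardance delta."""
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s], [s, c]])
    core = np.diag([np.exp(-0.5j * delta), np.exp(0.5j * delta)])
    return rot @ core @ rot.T  # R(theta) D R(-theta), since R(-theta) == R(theta).T


def polarized_intensity(jones: np.ndarray, pol_angle: float, ana_angle: float) -> float:
    """Detected intensity for polarizer -> sample (Jones matrix) -> analyzer."""
    e_in = np.array([np.cos(pol_angle), np.sin(pol_angle)])          # polarized input field
    analyzer = np.array([np.cos(ana_angle), np.sin(ana_angle)])      # analyzer transmission axis
    e_out = jones @ e_in                                             # field after the specimen
    return float(np.abs(analyzer @ e_out) ** 2)


# A half-wave retarder at 45 degrees between crossed polarizers transmits fully,
# whereas an isotropic (identity) sample between crossed polarizers is dark.
hwp = linear_retarder(np.pi / 4, np.pi)
print(polarized_intensity(hwp, 0.0, np.pi / 2))
print(polarized_intensity(np.eye(2), 0.0, np.pi / 2))
```

Varying the polarizer and analyzer angles yields intensity measurements that depend on all four Jones-matrix entries. This is why the two polarizers are needed to recover orientation, diattenuation, and retardance.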

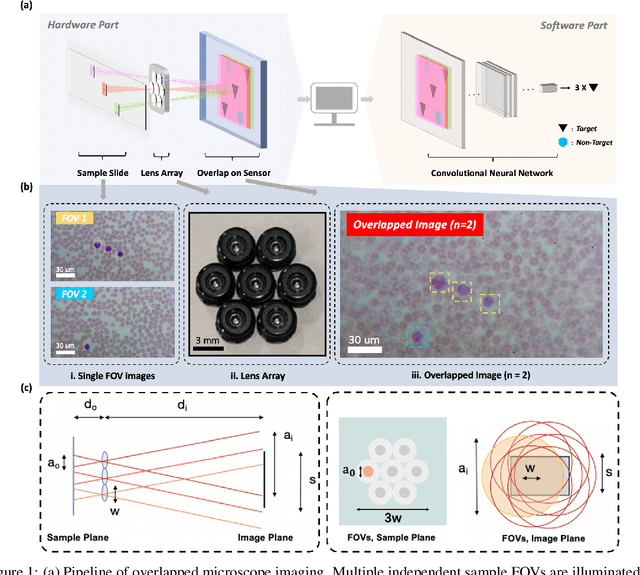

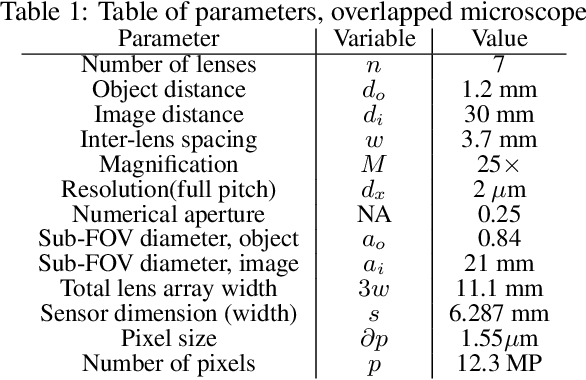

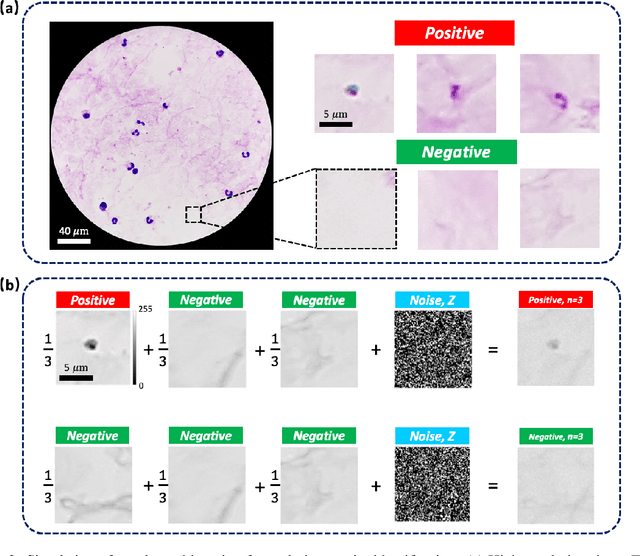

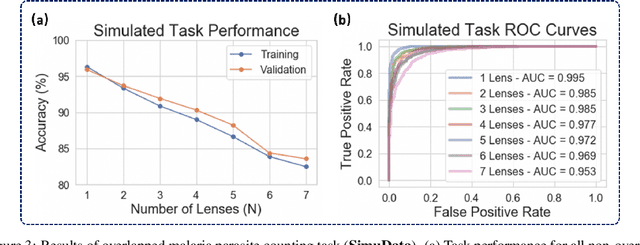

Increasing a microscope's effective field of view via overlapped imaging and machine learning

Oct 10, 2021

Abstract: This work demonstrates a multi-lens microscopic imaging system that overlaps multiple independent fields of view on a single sensor for high-efficiency automated specimen analysis. Automatic detection, classification, and counting of various morphological features of interest are now crucial components of both biomedical research and disease diagnosis. While convolutional neural networks (CNNs) have dramatically improved the accuracy of counting cells and sub-cellular features in acquired digital image data, overall throughput is still typically limited by the space-bandwidth product (SBP) of conventional microscopes. Here, we show in both simulation and experiment that overlapped imaging and co-designed analysis software can accurately detect diagnostically relevant features for several applications, including counting white blood cells and malaria parasites. This yields a multi-fold increase in detection and processing throughput with minimal reduction in accuracy.
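The core idea of overlapped imaging is that k lenses project k different fields of view onto one sensor. Because the light is incoherent, their intensities simply add. The toy simulation below illustrates this with sparse point-like "cells". It assumes ideal, equal-brightness lenses, and the helper names are hypothetical. It shows why a feature counter run on the overlapped image sees the features of all k fields in a single exposure.

```python
import numpy as np


def random_sparse_field(rng: np.random.Generator, shape: tuple, n_cells: int) -> np.ndarray:
    """Hypothetical helper: an image with n_cells unit-intensity point 'cells'."""
    field = np.zeros(shape)
    idx = rng.choice(field.size, size=n_cells, replace=False)
    field.flat[idx] = 1.0
    return field


def overlap_fields(fields: np.ndarray) -> np.ndarray:
    """Superimpose k incoherent images (shape (k, H, W)) on one sensor by adding intensities."""
    return np.asarray(fields, dtype=float).sum(axis=0)


rng = np.random.default_rng(1)
fields = np.stack([random_sparse_field(rng, (32, 32), 5) for _ in range(3)])
overlapped = overlap_fields(fields)
# Total signal from all three fields is present in one image; overlapping
# features can coincide, which is the trade-off the co-designed software must handle.
print(overlapped.sum(), np.count_nonzero(overlapped))
```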

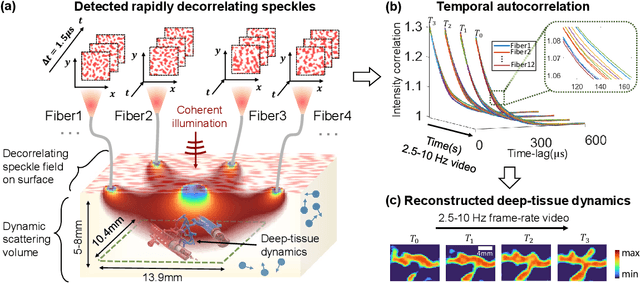

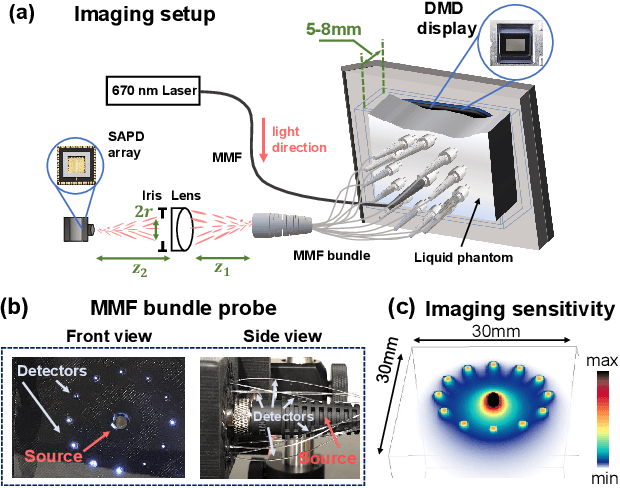

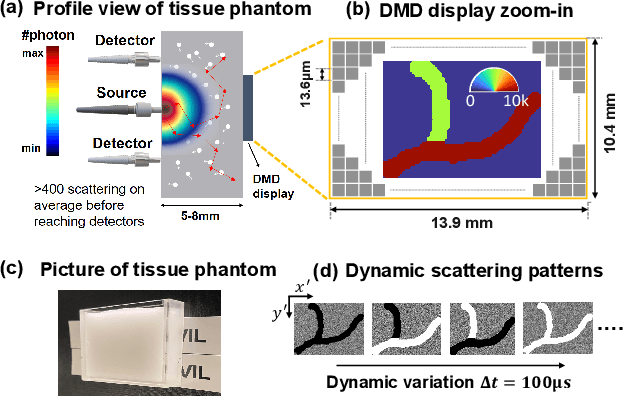

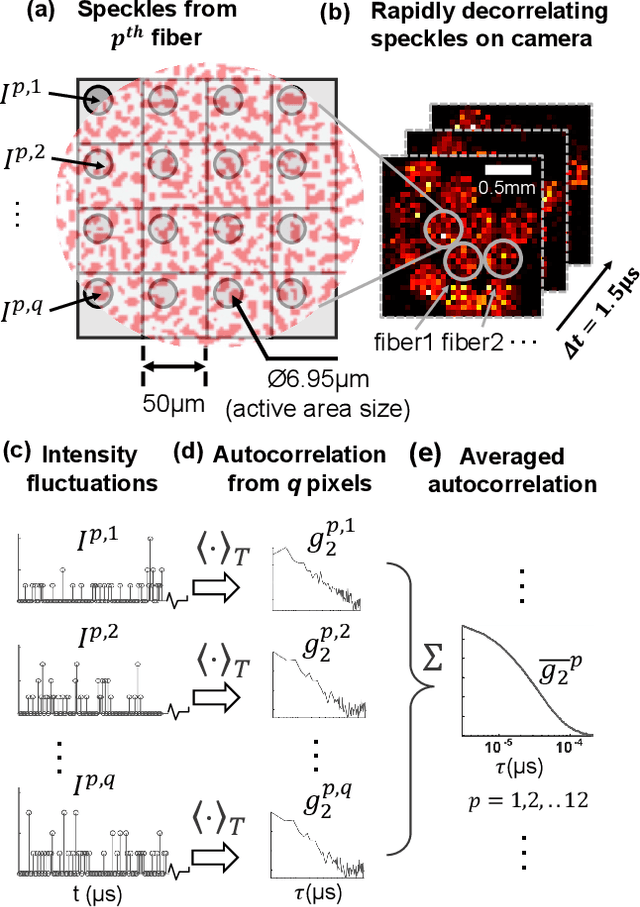

Imaging dynamics beneath turbid media via parallelized single-photon detection

Jul 22, 2021

Abstract: Noninvasive optical imaging through dynamic scattering media has numerous important biomedical applications but remains challenging. While standard methods form images based upon optical absorption or fluorescent emission, it is also well established that the temporal correlation of scattered coherent light diffuses through tissue much like optical intensity. Few works to date, however, have experimentally measured and processed such data to demonstrate deep-tissue imaging of decorrelation dynamics. In this work, we use a single-photon avalanche diode (SPAD) array camera with over one thousand detectors to simultaneously detect speckle fluctuations at the single-photon level. These fluctuations are collected from 12 different locations on a phantom tissue surface and delivered via a customized fiber bundle array. We then apply a deep neural network to convert the acquired single-photon measurements into video of scattering dynamics beneath rapidly decorrelating liquid tissue phantoms. We demonstrate the ability to record video of dynamic events occurring 5-8 mm beneath a decorrelating tissue phantom with mm-scale resolution and at a 2.5-10 Hz frame rate.
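The statement that temporal correlation "diffuses through tissue much like optical intensity" refers to the correlation diffusion equation of standard DCS theory. For reference, a common homogeneous-medium form is given below, together with the Siegert relation linking the measured intensity autocorrelation g2 to the field autocorrelation. This is textbook notation, not necessarily the formulation used in this work.

The symbols are:
- v: speed of light in the medium
- μa: absorption coefficient
- μs': reduced scattering coefficient
- k0: optical wavenumber
- α: fraction of dynamic scatterers
- ⟨Δr²(τ)⟩: mean-square scatterer displacement
- S: source
- β: a coherence factor set by the detection geometry

```latex
\left[ D\,\nabla^{2} - v\,\mu_a - \frac{\alpha}{3}\, v\,\mu_s'\, k_0^{2}\, \langle \Delta r^{2}(\tau) \rangle \right] G_1(\mathbf{r}, \tau) = -\,v\, S(\mathbf{r}), \qquad D = \frac{v}{3\mu_s'}

g_2(\mathbf{r}, \tau) = 1 + \beta \left| \frac{G_1(\mathbf{r}, \tau)}{G_1(\mathbf{r}, 0)} \right|^{2}
```

At τ = 0 the displacement term vanishes, and the equation reduces to the photon diffusion equation for fluence. Motion beneath the surface enters only through the extra τ-dependent loss term, which is what the speckle measurements are sensitive to.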

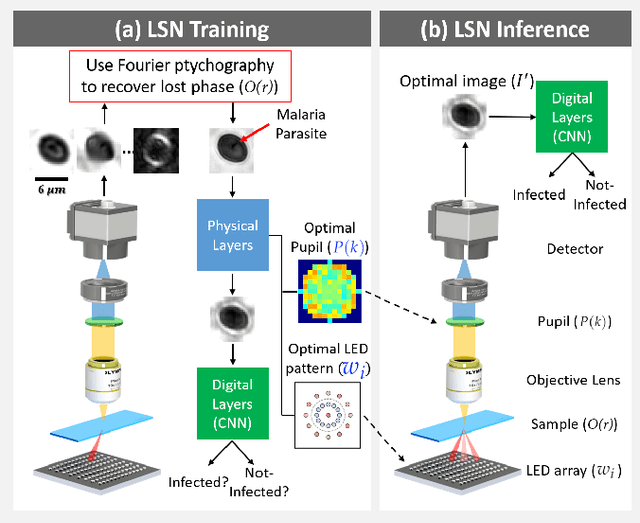

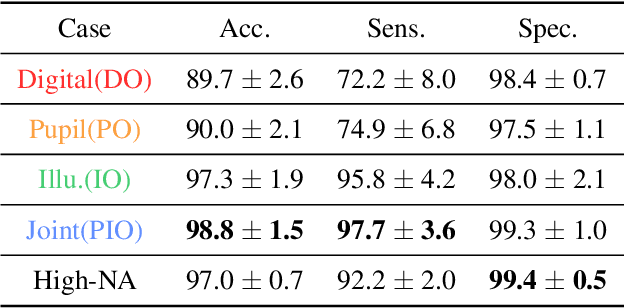

Multi-element microscope optimization by a learned sensing network with composite physical layers

Jun 27, 2020

Abstract: Standard microscopes offer a variety of settings to help improve the visibility of different specimens to the user. Increasingly, however, digital microscopes capture images for automated interpretation by computer algorithms (e.g., for feature classification, detection, or segmentation), often without any human involvement. In this work, we investigate an approach to jointly optimize multiple microscope settings, together with a classification network, to improve performance on such automated tasks. Using experimentally imaged blood smears for automated malaria parasite detection, we explore the interplay between optimizing programmable illumination and pupil transmission. We show that multi-element "learned sensing" outperforms its single-element counterpart. While not necessarily ideal for human interpretation, the resulting low-resolution microscope images (20X-comparable) give a machine learning network sufficient contrast to match the classification performance of corresponding high-resolution imagery (100X-comparable). This points toward accurate automation over large fields-of-view.
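A "composite physical layer" combines more than one optical element in a differentiable forward model. Here the two elements are the LED illumination pattern and the pupil transmission. The sketch below is a simplified version, not the paper's layer design. Each LED is modeled as an integer shift of the object spectrum, an idealization of a tilted plane wave. The shifted spectrum is filtered by a complex pupil, and the resulting intensity images from mutually incoherent LEDs are summed with brightness weights. In learned sensing, `led_weights` and `pupil` would be the trainable parameters. All names are hypothetical.

```python
import numpy as np


def composite_physical_layers(obj, led_shifts, led_weights, pupil):
    """Image formed under several weighted LEDs through a programmable pupil.

    obj: complex 2D transmission function of the sample.
    led_shifts: list of integer (dy, dx) spectrum shifts, one per LED
        (a tilted plane wave shifts the object spectrum; integer shifts are a simplification).
    led_weights: non-negative LED brightnesses.
    pupil: complex 2D pupil transmission on the (unshifted) FFT frequency grid.
    """
    spectrum = np.fft.fft2(obj)
    image = np.zeros(obj.shape)
    for (dy, dx), w in zip(led_shifts, led_weights):
        shifted = np.roll(spectrum, shift=(dy, dx), axis=(0, 1))  # illumination angle
        field = np.fft.ifft2(pupil * shifted)                      # pupil filtering
        image += w * np.abs(field) ** 2                             # incoherent sum over LEDs
    return image


rng = np.random.default_rng(0)
obj = rng.uniform(0.5, 1.0, (16, 16)) * np.exp(1j * rng.uniform(0, 1, (16, 16)))
# With an all-pass pupil, each LED only adds a phase ramp, so every
# per-LED intensity equals |obj|^2 and the result is sum(weights) * |obj|^2.
img = composite_physical_layers(obj, [(0, 0), (1, 2), (-3, 0)], [0.2, 0.5, 0.3], np.ones((16, 16)))
```

Restricting the pupil, for example with a low-pass mask, is what makes the LED angle matter. Different shifts then move different spatial frequencies into the passband, which is the degree of freedom a jointly trained classifier can exploit.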

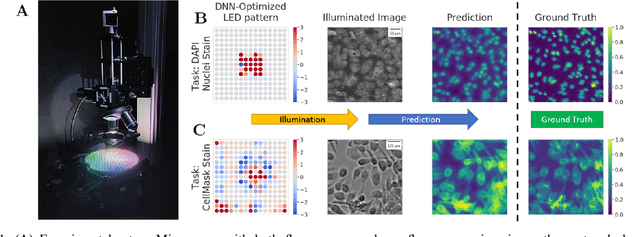

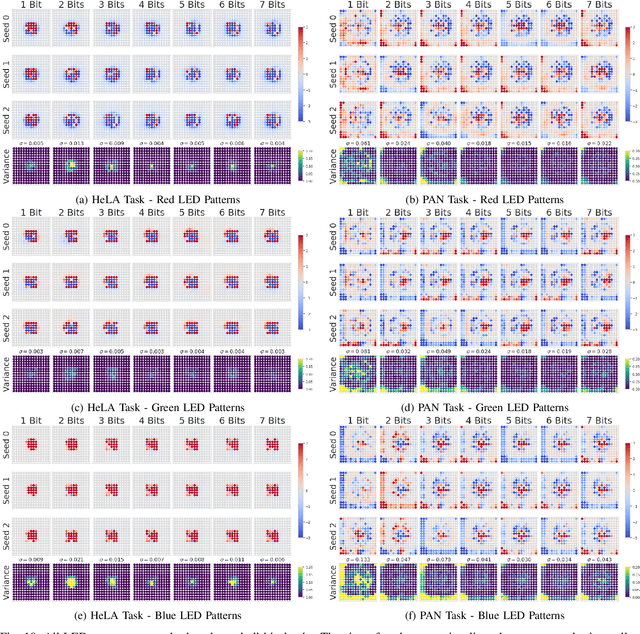

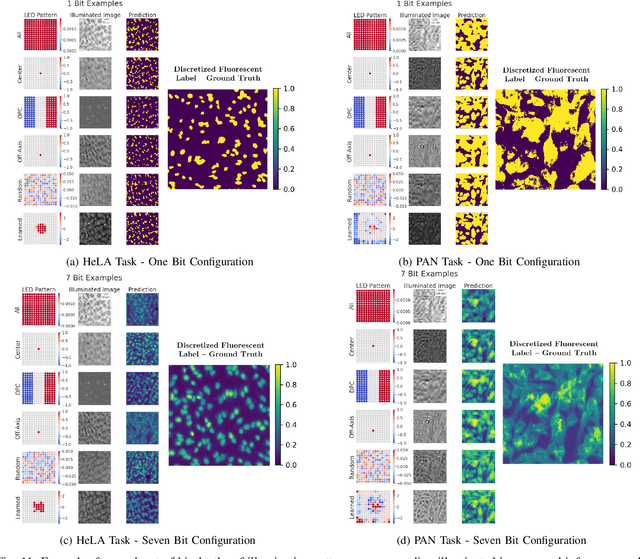

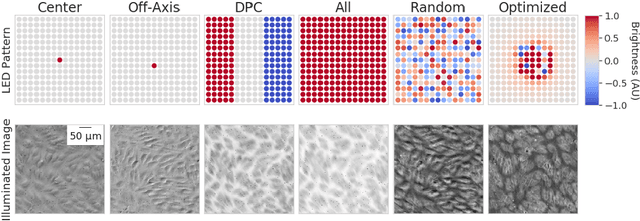

Physics-enhanced machine learning for virtual fluorescence microscopy

Apr 21, 2020

Abstract: This paper introduces a new method of data-driven microscope design for virtual fluorescence microscopy. Our results show that by including a model of illumination within the first layers of a deep convolutional neural network, it is possible to learn task-specific LED patterns that substantially improve the ability to infer fluorescence image information from unstained transmission microscopy images. We validated our method on two different experimental setups, with different magnifications and different sample types, and observed a consistent improvement in performance compared to conventional illumination methods. Additionally, to understand the importance of learned illumination for the inference task, we varied the dynamic range of the fluorescent image targets (from one to seven bits). We showed that the margin of improvement for learned patterns increased with the information content of the target. This work demonstrates the power of programmable optical elements to enable better machine learning performance and to provide physical insight into the next generation of machine-controlled imaging systems.
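Modeling illumination "within the first layers" of the network typically means the following. The image under an LED pattern is treated as a weighted sum of images captured under each single LED, so the weights can be learned by gradient descent. The sketch below is a deliberately simplified stand-in. The CNN is dropped, and the non-negative LED weights are fit by projected gradient descent so that the illuminated image matches a target directly. In the paper, the weights are trained jointly with the network. The function names, learning rate, and step count are assumptions.

```python
import numpy as np


def illuminate(stack: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Physical layer: image under an LED pattern = weighted sum of single-LED images."""
    return np.tensordot(weights, stack, axes=1)  # (K,) x (K, H, W) -> (H, W)


def fit_led_weights(stack: np.ndarray, target: np.ndarray, lr: float = 0.3, steps: int = 2000) -> np.ndarray:
    """Toy optimization of LED brightnesses by projected gradient descent on MSE.

    stack: (K, H, W) images, one per LED; target: (H, W) image to approximate.
    Weights are clipped at zero because an LED cannot emit negative light.
    """
    k = stack.shape[0]
    weights = np.full(k, 1.0 / k)  # start from uniform illumination
    for _ in range(steps):
        residual = illuminate(stack, weights) - target
        # d(mean squared error)/d(weights): correlate each LED image with the residual
        grad = 2.0 * np.tensordot(stack, residual, axes=([1, 2], [0, 1])) / residual.size
        weights = np.clip(weights - lr * grad, 0.0, None)
    return weights


rng = np.random.default_rng(0)
stack = rng.uniform(0.0, 1.0, (4, 16, 16))
true_w = np.array([0.2, 0.5, 0.0, 0.3])
learned = fit_led_weights(stack, illuminate(stack, true_w))  # recovers true_w closely
```

Replacing the direct image-matching loss with the loss of a downstream network is what turns this into "learned sensing". The gradient then flows through the network back into the same `weights` parameter.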
