Olivier Debeir

Collective perception for tracking people with a robot swarm

Oct 09, 2024

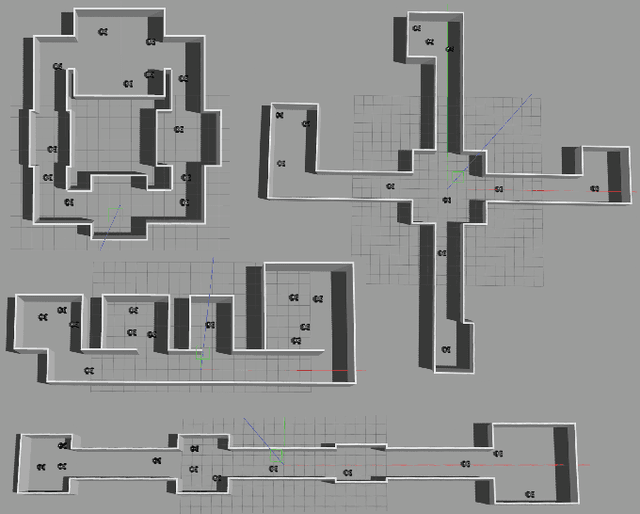

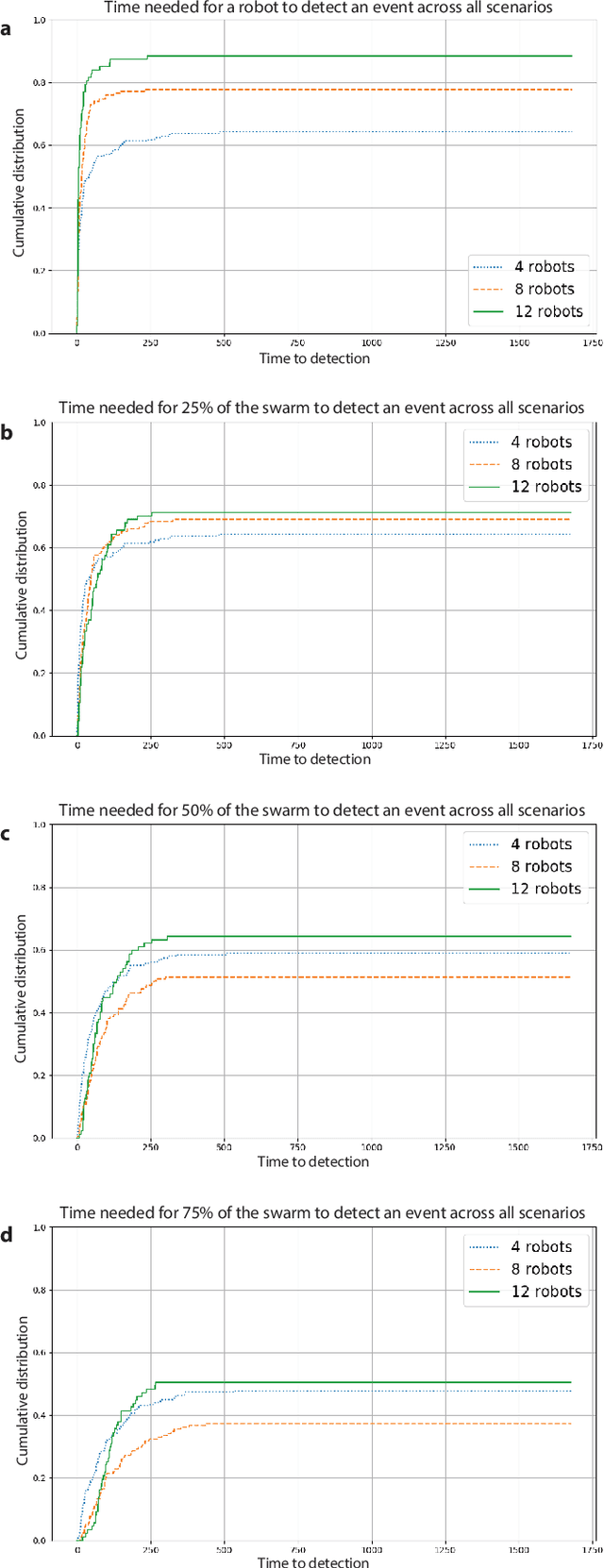

Abstract: Swarm perception refers to the ability of a robot swarm to utilize the perception capabilities of each individual robot, forming a collective understanding of the environment. Their distributed nature enables robot swarms to continuously monitor dynamic environments by maintaining a constant presence throughout the space. In this study, we present a preliminary experiment on the collective tracking of people using a robot swarm. The experiment was conducted in simulation across four different office environments, with swarms of varying sizes. The robots were provided with images sampled from a dataset of real-world office environment pictures. We measured the time distribution required for a robot to detect a person changing location and to propagate this information to increasing fractions of the swarm. The results indicate that robot swarms show significant promise in monitoring dynamic environments.

Deep Learning for Reaction-Diffusion Glioma Growth Modelling: Towards a Fully Personalised Model?

Nov 26, 2021

Abstract: Reaction-diffusion models have been proposed for decades to capture the growth of gliomas, the most common primary brain tumours. However, severe limitations regarding the estimation of the initial conditions and parameter values of such models have restrained their clinical use as a personalised tool. In this work, we investigate the ability of deep convolutional neural networks (DCNNs) to address the pitfalls commonly encountered in the field. Based on 1,200 synthetic tumours grown over real brain geometries derived from magnetic resonance (MR) data of 6 healthy subjects, we demonstrate the ability of DCNNs to reconstruct a whole tumour cell density distribution from only two imaging contours at a single time point. With an additional imaging contour extracted at a prior time point, we also demonstrate the ability of DCNNs to accurately estimate the individual diffusivity and proliferation parameters of the model. From this knowledge, the spatio-temporal evolution of the tumour cell density distribution at later time points can ultimately be precisely captured using the model. We finally show the applicability of our approach to MR data of a real glioblastoma patient. This approach may open the perspective of a clinical application of reaction-diffusion growth models for tumour prognosis and treatment planning.
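
To make the roles of the diffusivity and proliferation parameters concrete, here is a minimal sketch of a reaction-diffusion growth model. It assumes the commonly used logistic (Fisher-KPP) form, dc/dt = D d²c/dx² + rho c (1 - c), on a 1D grid with zero-flux boundaries. The abstract does not state the exact equation, geometry, or solver used in the paper, so the function names, the explicit Euler scheme, and all parameter values below are illustrative assumptions.

```python
def reaction_diffusion_step(c, D, rho, dx, dt):
    """One explicit Euler step of dc/dt = D * d2c/dx2 + rho * c * (1 - c).

    c   : list of normalised cell densities in [0, 1] (assumed 1D grid)
    D   : diffusivity, rho : proliferation rate (illustrative values only)
    """
    n = len(c)
    new = [0.0] * n
    for i in range(n):
        # Mirror the neighbour at the ends to impose a zero-flux boundary.
        left = c[i - 1] if i > 0 else c[i + 1]
        right = c[i + 1] if i < n - 1 else c[i - 1]
        laplacian = (left - 2.0 * c[i] + right) / dx ** 2
        new[i] = c[i] + dt * (D * laplacian + rho * c[i] * (1.0 - c[i]))
    return new


def simulate(c0, D, rho, dx, dt, steps):
    """Repeat the explicit step; stability needs D * dt / dx**2 <= 0.5."""
    c = list(c0)
    for _ in range(steps):
        c = reaction_diffusion_step(c, D, rho, dx, dt)
    return c


# Hypothetical seed: a small tumour in the middle of a 101-point domain.
c0 = [0.0] * 101
c0[50] = 0.5
profile = simulate(c0, D=0.1, rho=0.05, dx=1.0, dt=0.5, steps=200)
```

In this sketch a larger `D` spreads the profile further from the seed, while a larger `rho` makes the density saturate faster; these are the two quantities the abstract describes estimating from imaging contours.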

XCycles Backprojection Acoustic Super-Resolution

May 19, 2021

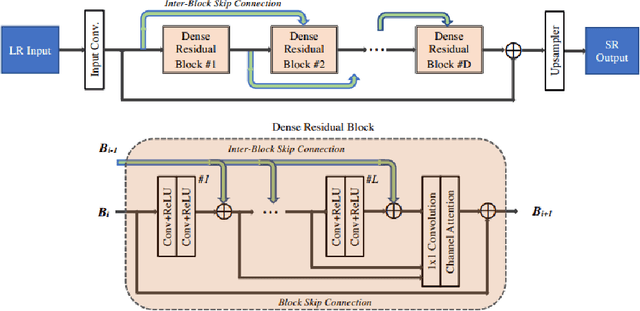

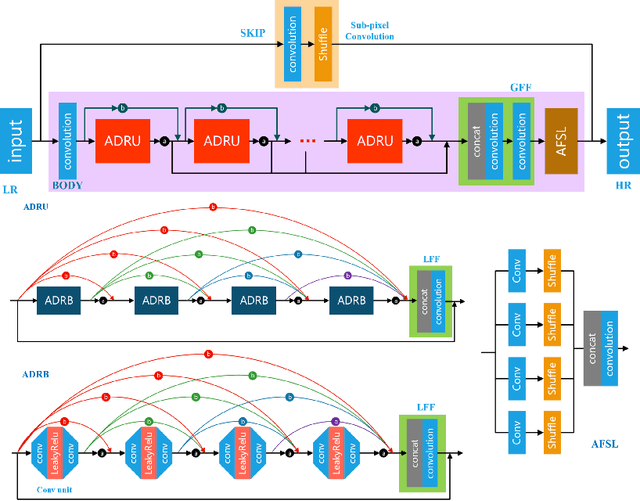

Abstract: The computer vision community has paid much attention to the development of visible image super-resolution (SR) using deep neural networks (DNNs) and has achieved impressive results. The advancement of non-visible light sensors, such as acoustic imaging sensors, has attracted much attention, as they allow people to visualize the intensity of sound waves beyond the visible spectrum. However, because of the limitations imposed on acquiring acoustic data, new methods for improving the resolution of acoustic images are necessary. At this time, there is no acoustic imaging dataset designed for the SR problem. This work proposes a novel backprojection model architecture for the acoustic image super-resolution problem, together with the Acoustic Map Imaging VUB-ULB Dataset (AMIVU). The dataset provides a large set of simulated and real captured images at different resolutions. The proposed XCycles BackProjection model (XCBP), in contrast to the feedforward model approach, fully uses the iterative correction procedure in each cycle to reconstruct the residual error correction for the encoded features in both low- and high-resolution space. Evaluated on the dataset, the proposed approach substantially outperformed the classical interpolation operators and recent state-of-the-art feedforward models. It also drastically reduced the sub-sampling error produced during data acquisition.
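
For readers unfamiliar with back-projection, the following sketch shows the classical iterative back-projection idea on a 1D signal: the residual between the observed low-resolution data and the downsampled estimate is projected back to correct the high-resolution estimate. This is not the XCBP network, which applies learned corrections to encoded features. The averaging downsampler, the repetition upsampler, the `step` size, and all names are assumptions chosen for illustration.

```python
def downsample(signal, factor):
    """Average consecutive blocks of `factor` samples (assumed degradation)."""
    return [sum(signal[i:i + factor]) / factor
            for i in range(0, len(signal), factor)]


def upsample(signal, factor):
    """Repeat every sample `factor` times (nearest-neighbour upsampling)."""
    return [v for v in signal for _ in range(factor)]


def iterative_back_projection(low_res, initial, factor, step=0.5, iterations=20):
    """Refine `initial` so that its downsampled version matches `low_res`."""
    estimate = list(initial)
    for _ in range(iterations):
        simulated = downsample(estimate, factor)
        # Residual error measured in low-resolution space.
        residual = [obs - sim for obs, sim in zip(low_res, simulated)]
        # Project the residual back to high-resolution space and correct.
        correction = upsample(residual, factor)
        estimate = [e + step * c for e, c in zip(estimate, correction)]
    return estimate


low_res = [1.0, 3.0, 2.0]
initial = [0.0] * 6  # deliberately poor starting estimate
refined = iterative_back_projection(low_res, initial, factor=2)
```

With these choices the low-resolution residual halves at every iteration, so the refined estimate becomes consistent with the observation after a few cycles.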

Initial condition assessment for reaction-diffusion glioma growth models: A translational MRI/histology (in)validation study

Feb 02, 2021

Abstract: Diffuse gliomas are highly infiltrative tumors whose early diagnosis and follow-up usually rely on magnetic resonance imaging (MRI). However, the limited sensitivity of this technique makes it impossible to directly assess the extent of the glioma cell invasion, leading to sub-optimal treatment planning. Reaction-diffusion growth models have been proposed for decades to extrapolate glioma cell infiltration beyond margins visible on MRI and predict its spatial-temporal evolution. These models nevertheless require an initial condition, that is, the tumor cell density values at every location of the brain at diagnosis time. Several works have proposed to relate the tumor cell density function to abnormality outlines visible on MRI, but the underlying assumptions have never been verified so far. In this work we propose to verify these assumptions by stereotactic histological analysis of a non-operated brain with glioblastoma using a tailored 3D-printed slicer. Cell density maps are computed from histological slides using a deep learning approach. The density maps are then registered to a postmortem MR image and related to an MR-derived geodesic distance map to the tumor core. The relation between the edema outlines visible on T2 FLAIR MRI and the distance to the core is also investigated. Our results suggest that (i) the previously suggested exponential decrease of the tumor cell density with the distance to the tumor core is not unreasonable but (ii) the edema outlines may in general not correspond to a cell density iso-contour and (iii) the commonly adopted tumor cell density value at these outlines is likely overestimated. These findings highlight the limitations of using conventional MRI to derive glioma cell density maps and point out the need to validate other methods to initialize reaction-diffusion growth models and make them usable in clinical practice.
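
The exponential assumption in finding (i) can be written as c(d) = c0 exp(-d / lambda), where d is the distance to the tumor core. The sketch below evaluates that profile and recovers its two parameters with a log-linear least-squares fit. The parameter names, the units, and the synthetic values are assumptions for illustration; they are not measurements or methods from the study.

```python
import math


def exponential_density(distance, core_density, decay_length):
    """Assumed profile c(d) = c0 * exp(-d / lambda)."""
    return core_density * math.exp(-distance / decay_length)


def fit_exponential(distances, densities):
    """Least-squares fit of log(c) = log(c0) - d / lambda.

    Returns (core_density, decay_length). Densities must be positive.
    """
    logs = [math.log(v) for v in densities]
    n = len(distances)
    mean_d = sum(distances) / n
    mean_log = sum(logs) / n
    sxx = sum((d - mean_d) ** 2 for d in distances)
    sxy = sum((d - mean_d) * (y - mean_log) for d, y in zip(distances, logs))
    slope = sxy / sxx
    intercept = mean_log - slope * mean_d
    return math.exp(intercept), -1.0 / slope


# Synthetic, noise-free samples with hypothetical c0 = 0.8 and lambda = 5 (mm).
distances = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]
densities = [exponential_density(d, 0.8, 5.0) for d in distances]
fitted_c0, fitted_lambda = fit_exponential(distances, densities)
```

A fit of this kind only tests whether an exponential is a reasonable description; it says nothing about which density value corresponds to an MRI-visible outline, which is the question raised by findings (ii) and (iii).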

AIM 2020 Challenge on Real Image Super-Resolution: Methods and Results

Sep 25, 2020

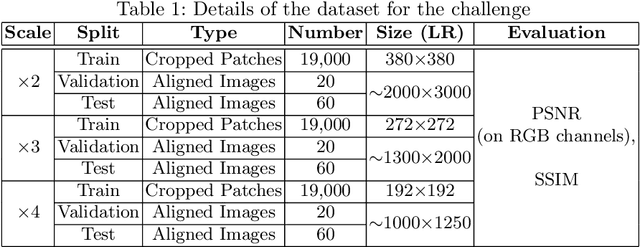

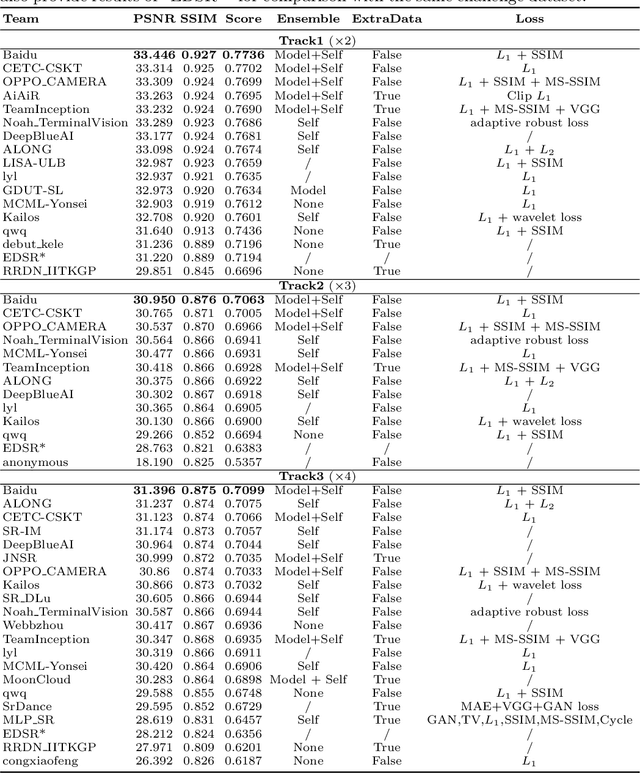

Abstract: This paper introduces the real image Super-Resolution (SR) challenge that was part of the Advances in Image Manipulation (AIM) workshop, held in conjunction with ECCV 2020. This challenge involves three tracks to super-resolve an input image for $\times$2, $\times$3 and $\times$4 scaling factors, respectively. The goal is to attract more attention to realistic image degradation for the SR task, which is much more complicated and challenging, and contributes to real-world image super-resolution applications. In total, 452 participants registered for the three tracks, and 24 teams submitted their results. These results gauge the state-of-the-art approaches for real image SR in terms of PSNR and SSIM.
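
As a reminder of what the first of these two metrics measures, here is a minimal sketch of PSNR for 8-bit grayscale images stored as nested lists. It uses the standard definition PSNR = 10 log10(MAX² / MSE); the challenge's exact evaluation protocol (colour space, border cropping) is not given in the abstract, so none of that is modelled here, and the function name is an assumption.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    ref_pixels = [p for row in reference for p in row]
    est_pixels = [p for row in estimate for p in row]
    if len(ref_pixels) != len(est_pixels):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - e) ** 2 for r, e in zip(ref_pixels, est_pixels)) / len(ref_pixels)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [[10, 20], [30, 40]]
off_by_one = [[11, 21], [31, 41]]
score = psnr(reference, off_by_one)  # MSE is 1, so this equals 20 * log10(255)
```

Higher PSNR means a smaller mean squared error against the ground truth. SSIM, the second metric, compares local structure instead and is not sketched here.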

Robust Perceptual Night Vision in Thermal Colorization

Mar 04, 2020

Abstract: Transforming a thermal infrared image into a robust perceptual colour Visible image is an ill-posed problem due to the differences in their spectral domains and in the objects' representations. Objects appear in one spectrum but not necessarily in the other, and the thermal signature of a single object may have different colours in its Visible representation. This makes a direct mapping from thermal to Visible images impossible and necessitates a solution that preserves texture captured in the thermal spectrum while predicting the possible colour for certain objects. In this work, a deep learning method to map the thermal signature from the thermal image's spectrum to a Visible representation in their low-frequency space is proposed. A pan-sharpening method is then used to merge the predicted low-frequency representation with the high-frequency representation extracted from the thermal image. The proposed model generates colour values consistent with the Visible ground truth when the object does not vary much in its appearance and generates averaged grey values in other cases. The proposed method shows robust perceptual night vision images in preserving the object's appearance and image context compared with the existing state-of-the-art.
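
The merge of a predicted low-frequency representation with high-frequency detail from the thermal image can be illustrated with a simple additive scheme. The sketch below works on a single-channel 1D signal and uses a box filter as the low-pass operator; both are simplifying assumptions, and the abstract does not say which pan-sharpening method or filter the paper uses. All function names are hypothetical.

```python
def box_blur(signal, radius):
    """Low-pass filter: mean over a window of up to 2 * radius + 1 samples."""
    n = len(signal)
    out = []
    for i in range(n):
        lo = max(0, i - radius)
        hi = min(n, i + radius + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def merge_low_high(predicted_low, thermal, radius):
    """Add the thermal signal's high-frequency detail to a predicted base."""
    thermal_low = box_blur(thermal, radius)
    # High-frequency detail is whatever the low-pass filter removed.
    detail = [t - l for t, l in zip(thermal, thermal_low)]
    return [p + d for p, d in zip(predicted_low, detail)]


thermal = [1.0, 4.0, 2.0, 8.0, 3.0, 5.0]
# Stand-in for a network output: the thermal base shifted by a constant.
predicted_low = [v + 10.0 for v in box_blur(thermal, 1)]
merged = merge_low_high(predicted_low, thermal, 1)
```

In this toy case the merged signal is the thermal signal shifted by 10: the texture comes from the thermal input, while the overall level comes from the predicted low-frequency part.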

Characterization of Posidonia Oceanica Seagrass Aerenchyma through Whole Slide Imaging: A Pilot Study

Mar 11, 2019

Abstract: Characterizing the tissue morphology and anatomy of seagrasses is essential to predicting their acoustic behavior. In this pilot study, we use histology techniques and whole slide imaging (WSI) to describe the composition and topology of the aerenchyma of an entire leaf blade in an automatic way, combining the advantages of X-ray microtomography and optical microscopy. Paraffin blocks are prepared in such a way that microtome slices contain an arbitrarily large number of cross sections distributed along the full length of a blade. The sample organization in the paraffin block, coupled with whole slide image analysis, allows high-throughput data extraction and an exhaustive characterization along the whole blade length. The core of the work is a set of image processing algorithms that can distinguish cells and air lacunae (or voids) from fiber strand, epidermis, mesophyll and vascular system. A set of specific features is developed to adequately describe the convexity of cells and voids where standard descriptors fail. The features scrutinize the local curvature of the object borders to allow an accurate discrimination between void and cell through machine learning. The algorithm makes it possible to reconstruct the cells and cell membrane features that are relevant to tissue density, compressibility and rigidity. The size distribution of the different cell types and gas spaces, the total biomass and the total void volume fraction are then extracted from the high-resolution slices to provide a complete characterization of the tissue along the leaf from its base to the apex.

Multimodal Sensor Fusion In Single Thermal image Super-Resolution

Dec 21, 2018

Abstract: With the fast growth in the visual surveillance and security sectors, thermal infrared images have become increasingly necessary in a large variety of industrial applications. This is true even though IR sensors are still more expensive than their RGB counterparts of the same resolution. In this paper, we propose a deep learning solution to enhance the thermal image resolution. The following results are given: (I) Introduction of a multimodal, visual-thermal fusion model that addresses thermal image super-resolution by integrating high-frequency information from the visual image. (II) Investigation of different network architecture schemes in the literature, their up-sampling methods, learning procedures, and their optimization functions, showing their beneficial contribution to the super-resolution problem. (III) A benchmark ULB17-VT dataset that contains thermal images and their visual image counterparts is presented. (IV) Presentation of a qualitative evaluation of a large test set with 58 samples and 22 raters, which shows that our proposed model outperforms state-of-the-art methods.
