Nico Hochgeschwender

Before Autonomy Takes Control: Software Testing in Robotics

Feb 02, 2026

Abstract:Robotic systems are complex and safety-critical software systems. As such, they need to be tested thoroughly. Unfortunately, robot software is intrinsically hard to test compared to traditional software, mainly since the software needs to closely interact with hardware, account for uncertainty in its operational environment, handle disturbances, and act highly autonomously. Moreover, given the large space in which robots operate, anticipating possible failures when designing tests is challenging. This paper presents a mapping study by considering robotics testing papers and relating them to software testing theory. We consider 247 robotics testing papers and map them to software testing, discuss the state of the art of software testing in robotics with an illustrated example, and discuss current challenges. This forms the basis for introducing both the robotics and software engineering communities to software testing challenges. Finally, we identify open questions and lessons learned.

Planning Robot Placement for Object Grasping

May 26, 2024

Abstract:When performing manipulation-based activities such as picking objects, a mobile robot needs to position its base at a location that supports successful execution. To address this problem, prominent approaches typically rely on costly grasp planners to provide grasp poses for a target object, which are then analysed to identify the best robot placements for achieving each grasp pose. In this paper, we propose instead to first find robot placements that would not result in collision with the environment and from where picking up the object is feasible, then evaluate them to find the best placement candidate. Our approach takes into account the robot's reachability, as well as RGB-D images and occupancy grid maps of the environment for identifying suitable robot poses. The proposed algorithm is embedded in a service robotic workflow, in which a person points to select the target object for grasping. We evaluate our approach with a series of grasping experiments, against an existing baseline implementation that sends the robot to a fixed navigation goal. The experimental results show how the approach allows the robot to grasp the target object from locations that are very challenging to the baseline implementation.

A Multimodal Handover Failure Detection Dataset and Baselines

Feb 28, 2024

Abstract:An object handover between a robot and a human is a coordinated action which is prone to failure for reasons such as miscommunication, incorrect actions and unexpected object properties. Existing works on handover failure detection and prevention focus on preventing failures due to object slip or external disturbances. However, there is a lack of datasets and evaluation methods that consider unpreventable failures caused by the human participant. To address this deficit, we present the multimodal Handover Failure Detection dataset, which consists of failures induced by the human participant, such as ignoring the robot or not releasing the object. We also present two baseline methods for handover failure detection: (i) a video classification method using 3D CNNs and (ii) a temporal action segmentation approach which jointly classifies the human action, robot action and overall outcome of the action. The results show that video is an important modality, but using force-torque data and gripper position helps improve failure detection and action segmentation accuracy.

b-it-bots RoboCup@Work Team Description Paper 2023

Dec 29, 2023

Abstract:This paper presents the b-it-bots RoboCup@Work team and its current hardware and functional architecture for the KUKA youBot robot. We describe the underlying software framework and the developed capabilities required for operating in industrial environments including features such as reliable and precise navigation, flexible manipulation, robust object recognition and task planning. New developments include an approach to grasp vertical objects, placement of objects by considering the empty space on a workstation, and the process of porting our code to ROS2.

Maximum Likelihood Uncertainty Estimation: Robustness to Outliers

Feb 03, 2022

Abstract:We benchmark the robustness of maximum likelihood based uncertainty estimation methods to outliers in training data for regression tasks. Outliers or noisy labels in training data result in degraded performance as well as incorrect estimation of uncertainty. We propose the use of a heavy-tailed distribution (Laplace distribution) to improve the robustness to outliers. This property is evaluated using standard regression benchmarks and on a high-dimensional regression task of monocular depth estimation, both containing outliers. In particular, heavy-tailed distribution based maximum likelihood provides better uncertainty estimates, better separation in uncertainty for out-of-distribution data, as well as better detection of adversarial attacks in the presence of outliers.
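
As a rough illustration of why a Laplace likelihood is less sensitive to outliers, the standard per-sample negative log-likelihoods can be compared. The symbols $\mu$, $\sigma$ and $b$ (predicted mean, Gaussian standard deviation, Laplace scale) are notation assumed here; the abstract does not state the paper's exact parameterisation.

$$
\mathcal{L}_{\text{Gauss}}(y) = \frac{1}{2}\log\left(2\pi\sigma^{2}\right) + \frac{(y-\mu)^{2}}{2\sigma^{2}},
\qquad
\mathcal{L}_{\text{Laplace}}(y) = \log(2b) + \frac{|y-\mu|}{b}
$$

The Gaussian penalty grows quadratically in the residual $|y-\mu|$, whereas the Laplace penalty grows only linearly, so a single outlying label contributes far less to the total loss.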

Property-Based Testing in Simulation for Verifying Robot Action Execution in Tabletop Manipulation

Aug 19, 2021

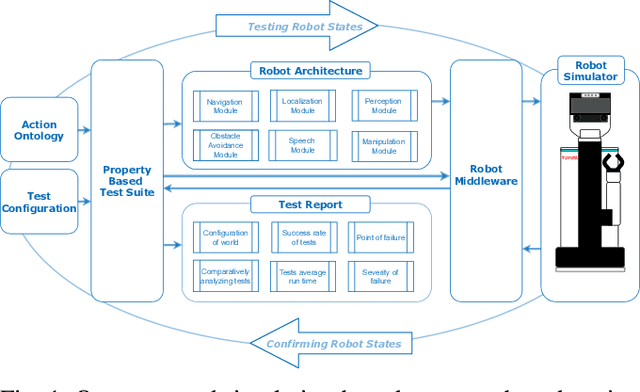

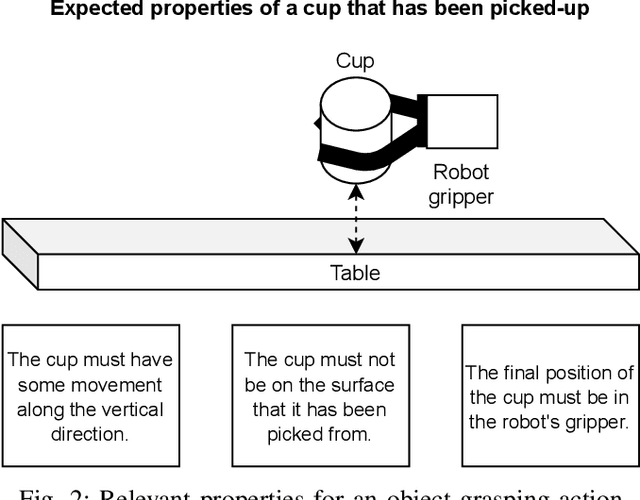

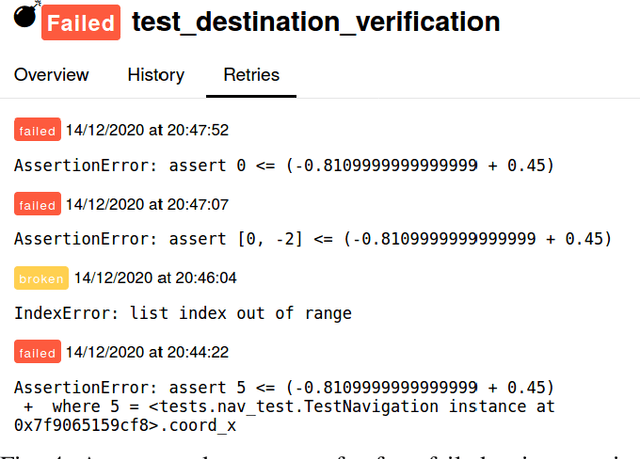

Abstract:An important prerequisite for the reliability and robustness of a service robot is ensuring the robot's correct behavior when it performs various tasks of interest. Extensive testing is one established approach for ensuring behavioural correctness; this becomes even more important with the integration of learning-based methods into robot software architectures, as there are often no theoretical guarantees about the performance of such methods in varying scenarios. In this paper, we aim towards evaluating the correctness of robot behaviors in tabletop manipulation through automatic generation of simulated test scenarios in which a robot assesses its performance using property-based testing. In particular, key properties of interest for various robot actions are encoded in an action ontology and are then verified and validated within a simulated environment. We evaluate our framework with a Toyota Human Support Robot (HSR) which is tested in a Gazebo simulation. We show that our framework can correctly and consistently identify various failed actions in a variety of randomised tabletop manipulation scenarios, in addition to providing deeper insights into the type and location of failures for each designed property.
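
The idea of checking an action property over randomly generated tabletop scenarios can be sketched in a few lines. This is a minimal, standard-library-only illustration and not the framework from the paper: `Table`, `Pose`, `simulated_place`, `object_on_table` and `run_property_test` are hypothetical names, and the "simulation" is a stub that merely perturbs the target pose instead of calling Gazebo.

```python
import random
from dataclasses import dataclass


@dataclass
class Table:
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    height: float


@dataclass
class Pose:
    x: float
    y: float
    z: float


def simulated_place(table, target, rng, noise):
    """Hypothetical stand-in for executing a place action in simulation.

    The final object pose is the target pose plus uniform planar noise.
    """
    return Pose(
        target.x + rng.uniform(-noise, noise),
        target.y + rng.uniform(-noise, noise),
        table.height,
    )


def object_on_table(table, pose, tol=1e-3):
    """Property: the object rests on the table surface, within its bounds."""
    return (
        table.x_min <= pose.x <= table.x_max
        and table.y_min <= pose.y <= table.y_max
        and abs(pose.z - table.height) <= tol
    )


def run_property_test(n_scenarios, noise, seed=0):
    """Generate random scenarios and collect those that violate the property."""
    rng = random.Random(seed)
    failures = []
    for i in range(n_scenarios):
        # Randomised table geometry (assumed ranges, in metres).
        table = Table(0.0, rng.uniform(0.5, 1.5), 0.0, rng.uniform(0.5, 1.0),
                      rng.uniform(0.4, 0.9))
        # Random target pose on the table surface.
        target = Pose(rng.uniform(table.x_min, table.x_max),
                      rng.uniform(table.y_min, table.y_max),
                      table.height)
        final = simulated_place(table, target, rng, noise)
        if not object_on_table(table, final):
            failures.append((i, table, target, final))
    return failures


if __name__ == "__main__":
    print(len(run_property_test(200, noise=0.0)), "failures without noise")
    print(len(run_property_test(200, noise=0.5)), "failures with 0.5 m noise")
```

Each recorded failure keeps the scenario index, table, target and final pose, which loosely mirrors the abstract's point about reporting the type and location of failures. A dedicated library such as Hypothesis could replace the hand-written scenario generator.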

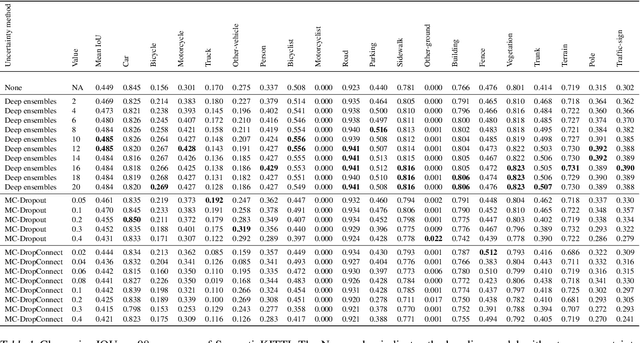

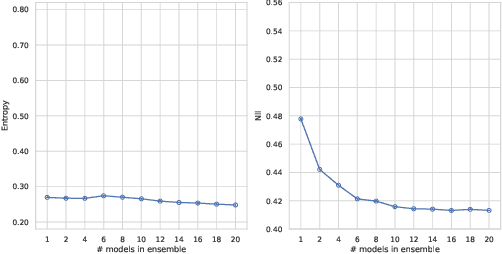

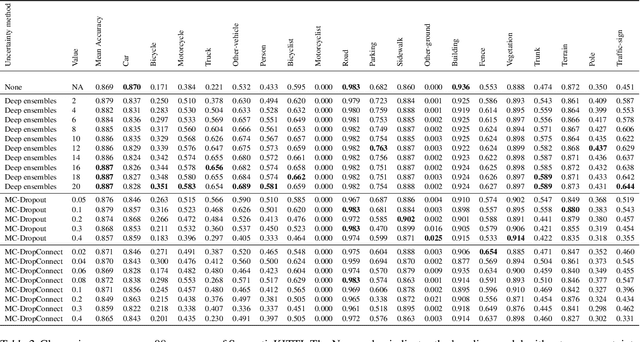

Evaluating Uncertainty Estimation Methods on 3D Semantic Segmentation of Point Clouds

Jul 03, 2020

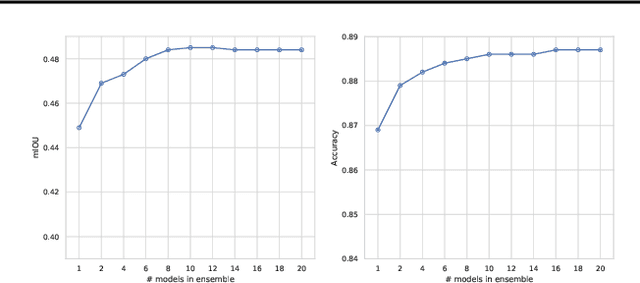

Abstract:Deep learning models are extensively used in various safety-critical applications. Hence these models, along with being accurate, need to be highly reliable. One way of achieving this is by quantifying uncertainty. Bayesian methods for uncertainty quantification (UQ) have been extensively studied for deep learning models applied to images, but have been less explored for 3D modalities such as point clouds, which are often used for robots and autonomous systems. In this work, we evaluate three uncertainty quantification methods, namely Deep Ensembles, MC-Dropout and MC-DropConnect, on the DarkNet21Seg 3D semantic segmentation model and comprehensively analyze the impact of various parameters, such as the number of models in ensembles or forward passes and the drop probability values, on task performance and uncertainty estimate quality. We find that Deep Ensembles outperform other methods in both performance and uncertainty metrics. Deep Ensembles outperform other methods by a margin of 2.4% in terms of mIOU and 1.3% in terms of accuracy, while providing reliable uncertainty for decision making.

Towards Persistent Storage and Retrieval of Domain Models using Graph Database Technology

Feb 19, 2016

Abstract:We employ graph database technology to persistently store and retrieve robot domain models.

Proceedings of the Fifth International Workshop on Domain-Specific Languages and Models for Robotic Systems

Nov 26, 2014

Abstract:The Fifth International Workshop on Domain-Specific Languages and Models for Robotic Systems (DSLRob'14) was held in conjunction with the 2014 International Conference on Simulation, Modeling, and Programming for Autonomous Robots (SIMPAR 2014), October 2014 in Bergamo, Italy. The main topics of the workshop were Domain-Specific Languages (DSLs) and Model-driven Software Development (MDSD) for robotics. A domain-specific language is a programming language dedicated to a particular problem domain that offers specific notations and abstractions that increase programmer productivity within that domain. Model-driven software development offers a high-level way for domain users to specify the functionality of their system at the right level of abstraction. DSLs and models have historically been used for programming complex systems. However, recently they have garnered interest as a separate field of study. Robotic systems blend hardware and software in a holistic way that intrinsically raises many crosscutting concerns (concurrency, uncertainty, time constraints, ...), for which reason traditional general-purpose languages often lead to a poor fit between the language features and the implementation requirements. DSLs and models offer a powerful, systematic way to overcome this problem, enabling the programmer to quickly and precisely implement novel software solutions to complex problems within the robotics domain.

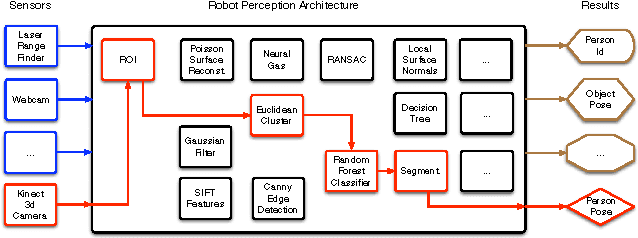

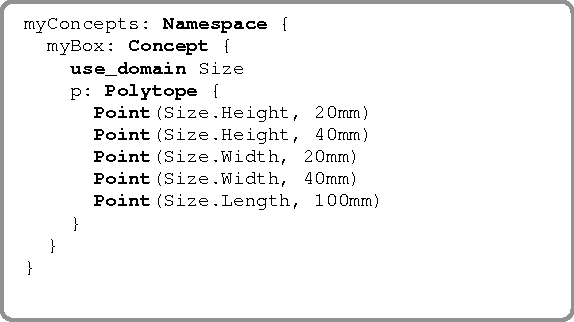

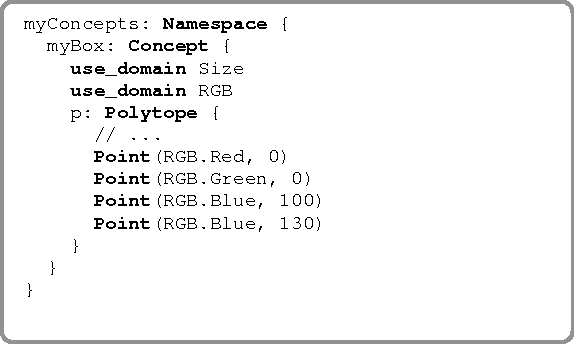

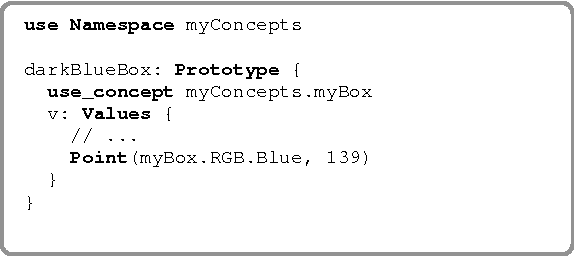

Towards a Robot Perception Specification Language

Aug 13, 2014

Abstract:In this paper we present our work in progress towards a domain-specific language called Robot Perception Specification Language (RPSL). RPSL provides means to specify the expected result (task knowledge) of a Robot Perception Architecture in a declarative and framework-independent manner.
