Paul G. Plöger

Enhancing Video-Based Robot Failure Detection Using Task Knowledge

Aug 26, 2025

Abstract: Robust robotic task execution hinges on the reliable detection of execution failures in order to trigger safe operation modes, recovery strategies, or task replanning. However, many failure detection methods struggle to provide meaningful performance when applied to a variety of real-world scenarios. In this paper, we propose a video-based failure detection approach that uses spatio-temporal knowledge in the form of the actions the robot performs and task-relevant objects within the field of view. Both pieces of information are available in most robotic scenarios and can thus be readily obtained. We demonstrate the effectiveness of our approach on three datasets that we amend, in part, with additional annotations of the aforementioned task-relevant knowledge. In light of the results, we also propose a data augmentation method that improves performance by applying variable frame rates to different parts of the video. We observe an improvement from 77.9 to 80.0 in F1 score on the ARMBench dataset without additional computational expense and an additional increase to 81.4 with test-time augmentation. The results emphasize the importance of spatio-temporal information during failure detection and suggest further investigation of suitable heuristics in future implementations. Code and annotations are available.
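The variable-frame-rate augmentation mentioned in this abstract can be pictured with a small sketch. This is not the authors' implementation: the function name `variable_rate_indices`, the equal-length segment split, and the random choice of one stride per segment are all assumptions made for illustration.

```python
import random
from typing import List, Sequence


def variable_rate_indices(
    num_frames: int,
    num_segments: int,
    strides: Sequence[int],
    rng: random.Random,
) -> List[int]:
    """Select frame indices, subsampling each video segment with its own stride.

    Hypothetical helper: the video is split into `num_segments` roughly equal
    parts, and each part is sampled at a stride drawn at random from `strides`.
    """
    if num_frames <= 0 or num_segments <= 0:
        return []
    # Integer segment boundaries covering [0, num_frames).
    bounds = [i * num_frames // num_segments for i in range(num_segments + 1)]
    indices: List[int] = []
    for start, end in zip(bounds[:-1], bounds[1:]):
        stride = rng.choice(list(strides))  # one frame rate per segment
        indices.extend(range(start, end, stride))
    return indices


# Example: a 30-frame clip split into 3 parts, each sampled at stride 1, 2, or 3.
frame_ids = variable_rate_indices(30, 3, [1, 2, 3], random.Random(0))
```

The returned indices are strictly increasing, so they can be used directly to slice a frame array before it is passed to a video model.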

A Neuromorphic Approach to Obstacle Avoidance in Robot Manipulation

Apr 08, 2024

Abstract: Neuromorphic computing mimics computational principles of the brain in silico and motivates research into event-based vision and spiking neural networks (SNNs). Event cameras (ECs) exclusively capture local intensity changes and offer superior power consumption, response latencies, and dynamic ranges. SNNs replicate biological neuronal dynamics and have demonstrated potential as alternatives to conventional artificial neural networks (ANNs), such as in reducing energy expenditure and inference time in visual classification. Nevertheless, these novel paradigms remain scarcely explored outside the domain of aerial robots. To investigate the utility of brain-inspired sensing and data processing, we developed a neuromorphic approach to obstacle avoidance on a camera-equipped manipulator. Our approach adapts high-level trajectory plans with reactive maneuvers by processing emulated event data in a convolutional SNN, decoding neural activations into avoidance motions, and adjusting plans using a dynamic motion primitive. We conducted experiments with a Kinova Gen3 arm performing simple reaching tasks that involve obstacles in sets of distinct task scenarios and in comparison to a non-adaptive baseline. Our neuromorphic approach facilitated reliable avoidance of imminent collisions in simulated and real-world experiments, where the baseline consistently failed. Trajectory adaptations had low impacts on safety and predictability criteria. Among the notable SNN properties were the correlation of computations with the magnitude of perceived motions and a robustness to different event emulation methods. Tests with a DAVIS346 EC showed similar performance, validating our experimental event emulation. Our results motivate incorporating SNN learning, utilizing neuromorphic processors, and further exploring the potential of neuromorphic methods.

A Multimodal Handover Failure Detection Dataset and Baselines

Feb 28, 2024

Abstract: An object handover between a robot and a human is a coordinated action which is prone to failure for reasons such as miscommunication, incorrect actions and unexpected object properties. Existing works on handover failure detection and prevention focus on preventing failures due to object slip or external disturbances. However, there is a lack of datasets and evaluation methods that consider unpreventable failures caused by the human participant. To address this deficit, we present the multimodal Handover Failure Detection dataset, which consists of failures induced by the human participant, such as ignoring the robot or not releasing the object. We also present two baseline methods for handover failure detection: (i) a video classification method using 3D CNNs and (ii) a temporal action segmentation approach which jointly classifies the human action, robot action and overall outcome of the action. The results show that video is an important modality, but using force-torque data and gripper position helps improve failure detection and action segmentation accuracy.

b-it-bots RoboCup@Work Team Description Paper 2023

Dec 29, 2023

Abstract: This paper presents the b-it-bots RoboCup@Work team and its current hardware and functional architecture for the KUKA youBot robot. We describe the underlying software framework and the developed capabilities required for operating in industrial environments including features such as reliable and precise navigation, flexible manipulation, robust object recognition and task planning. New developments include an approach to grasp vertical objects, placement of objects by considering the empty space on a workstation, and the process of porting our code to ROS2.

Data-Driven Robot Fault Detection and Diagnosis Using Generative Models: A Modified SFDD Algorithm

Nov 23, 2023

Abstract: This paper presents a modification of the data-driven sensor-based fault detection and diagnosis (SFDD) algorithm for online robot monitoring. Our version of the algorithm uses a collection of generative models, in particular restricted Boltzmann machines, each of which represents the distribution of sliding window correlations between a pair of correlated measurements. We use such models in a residual generation scheme, where high residuals generate conflict sets that are then used in a subsequent diagnosis step. As a proof of concept, the framework is evaluated on a mobile logistics robot for the problem of recognising disconnected wheels. The evaluation demonstrates the feasibility of the framework (on the faulty data set, the models obtained 88.6% precision and 75.6% recall rates), but also shows that the monitoring results are influenced by the choice of distribution model and the model parameters as a whole.
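As a rough illustration of the quantities this abstract refers to, the sketch below computes sliding-window Pearson correlations between two measurement streams and a simple residual against a nominal correlation. It deliberately replaces the restricted Boltzmann machines with a fixed nominal value, so it is a simplification rather than the modified SFDD algorithm; all function names are hypothetical.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length windows (0.0 if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def sliding_correlations(
    x: Sequence[float], y: Sequence[float], window: int
) -> List[float]:
    """Correlation of x and y over every window of `window` consecutive samples."""
    if len(x) != len(y):
        raise ValueError("measurement streams must have equal length")
    return [
        pearson(x[i : i + window], y[i : i + window])
        for i in range(len(x) - window + 1)
    ]


def residuals(correlations: Sequence[float], nominal: float) -> List[float]:
    """Assumed residual: absolute deviation from a nominal correlation value.

    In the paper a learned generative model plays this role; a constant is
    used here only to keep the sketch short.
    """
    return [abs(c - nominal) for c in correlations]


# Example: two wheel-speed-like signals that normally move together.
left = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
right = [2 * v + 1 for v in left]
window_corr = sliding_correlations(left, right, window=3)
window_res = residuals(window_corr, nominal=1.0)
```

A monitor built on this idea would flag windows whose residual exceeds a threshold; how that threshold is chosen is outside what the abstract states.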

Adaptive Compliant Robot Control with Failure Recovery for Object Press-Fitting

Jul 17, 2023

Abstract: Loading of shipping containers for dairy products often includes a press-fit task, which involves manually stacking milk cartons in a container without using pallets or packaging. Automating this task with a mobile manipulator can reduce worker strain, and also enhance the efficiency and safety of the container loading process. This paper proposes an approach called Adaptive Compliant Control with Integrated Failure Recovery (ACCIFR), which enables a mobile manipulator to reliably perform the press-fit task. We base the approach on a demonstration learning-based compliant control framework, into which we integrate a monitoring and failure recovery mechanism for successful task execution. Concretely, we monitor the execution through distance and force feedback, detect collisions while the robot is performing the press-fit task, and use wrench measurements to classify the direction of collision; this information informs the subsequent recovery process. We evaluate the method on a miniature container setup, considering variations in the (i) starting position of the end effector, (ii) goal configuration, and (iii) object grasping position. The results demonstrate that the proposed approach outperforms the baseline demonstration-based learning framework regarding adaptability to environmental variations and the ability to recover from collision failures, making it a promising solution for practical press-fit applications.

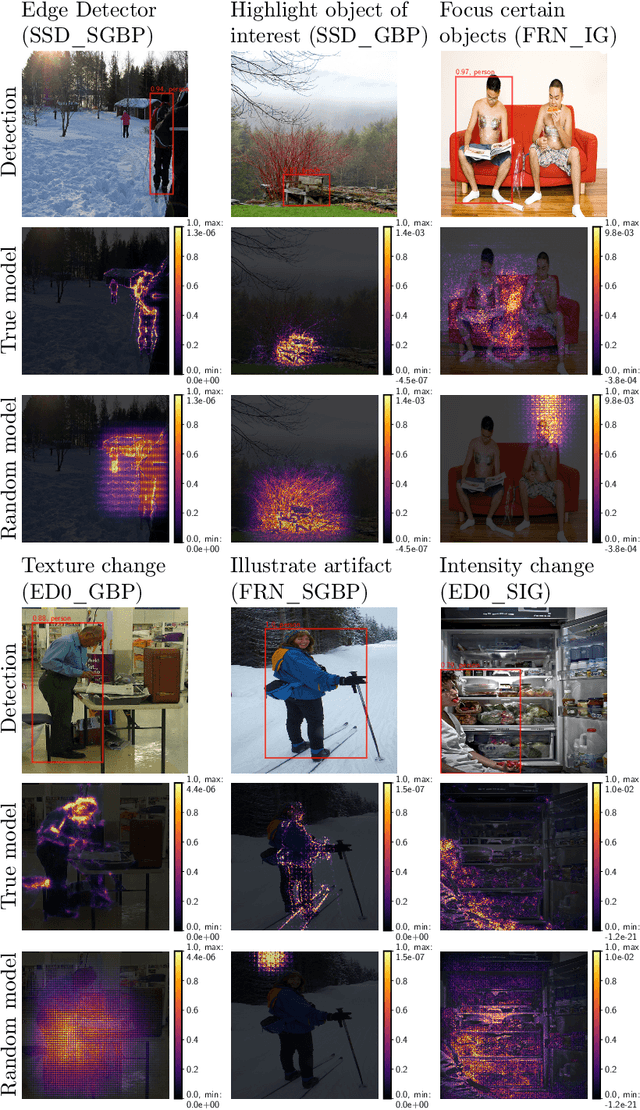

Sanity Checks for Saliency Methods Explaining Object Detectors

Jun 04, 2023

Abstract: Saliency methods are frequently used to explain Deep Neural Network-based models. Adebayo et al.'s work on evaluating saliency methods for classification models illustrates that certain explanation methods fail the model and data randomization tests. However, on extending the tests to various state-of-the-art object detectors, we illustrate that the ability to explain a model is more dependent on the model itself than on the explanation method. We perform sanity checks for object detection and define new qualitative criteria to evaluate the saliency explanations, both for object classification and bounding box decisions, using Guided Backpropagation, Integrated Gradients, and their Smoothgrad versions, together with Faster R-CNN, SSD, and EfficientDet-D0, trained on COCO. In addition, the sensitivity of the explanation method to model parameters and data labels varies class-wise, which motivates performing the sanity checks for each class. We find that EfficientDet-D0 is the most interpretable method independent of the saliency method, and it passes the sanity checks with few problems.

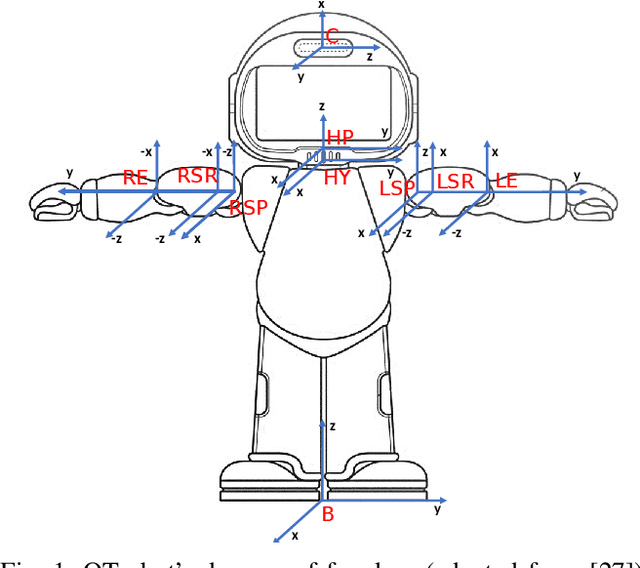

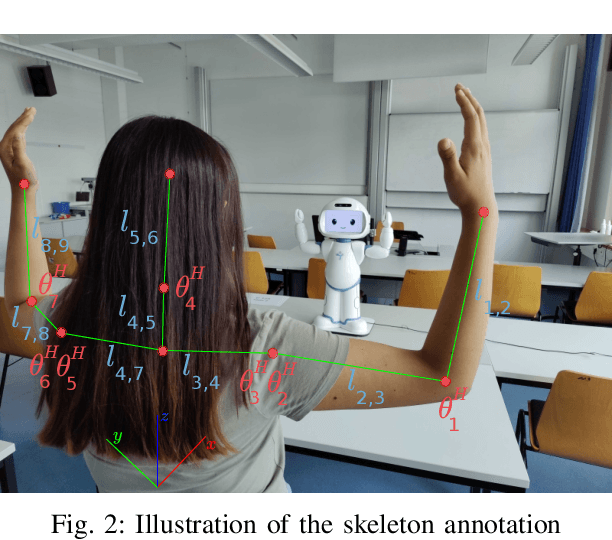

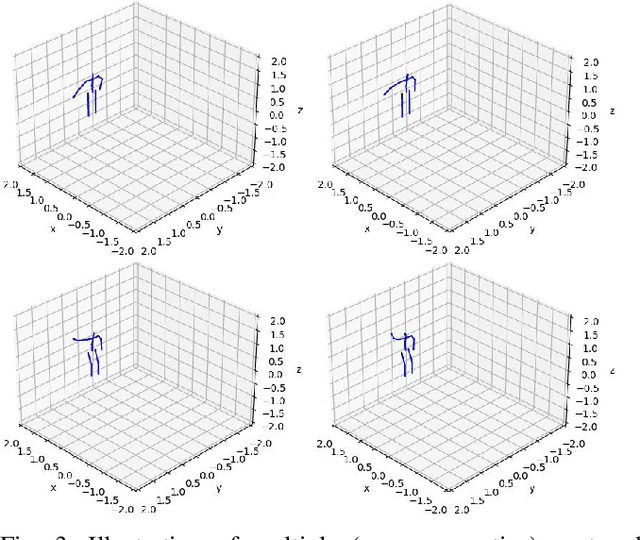

Learning Human Body Motions from Skeleton-Based Observations for Robot-Assisted Therapy

Jul 25, 2022

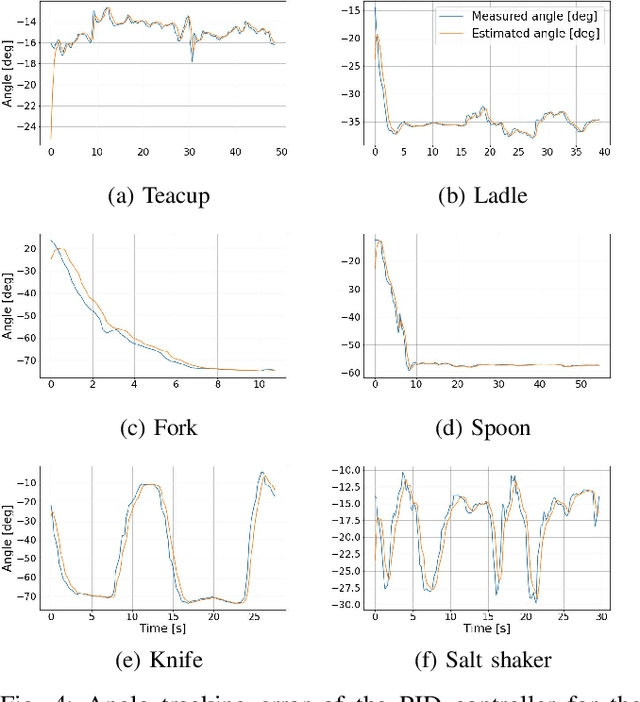

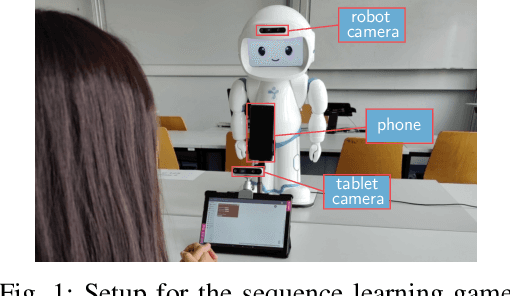

Abstract: Robots applied in therapeutic scenarios, for instance in the therapy of individuals with Autism Spectrum Disorder, are sometimes used for imitation learning activities in which a person needs to repeat motions performed by the robot. To simplify the task of incorporating new types of motions that a robot can perform, it is desirable that the robot has the ability to learn motions by observing demonstrations from a human, such as a therapist. In this paper, we investigate an approach for acquiring motions from skeleton observations of a human, which are collected by a robot-centric RGB-D camera. Given a sequence of observations of various joints, the joint positions are mapped to match the configuration of a robot before being executed by a PID position controller. We evaluate the method, in particular the reproduction error, by performing a study with QTrobot in which the robot acquired different upper-body dance moves from multiple participants. The results indicate the method's overall feasibility, but also indicate that the reproduction quality is affected by noise in the skeleton observations.
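For readers unfamiliar with the PID position controller named in this abstract, a generic discrete-time version is sketched below. It is a textbook formulation, not the controller used on QTrobot; the class name `PID`, the gains, and the time step are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete PID controller for a single joint (illustrative only)."""

    kp: float  # proportional gain
    ki: float  # integral gain
    kd: float  # derivative gain
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, target: float, measured: float, dt: float) -> float:
        """Return the control command for one time step of length `dt`."""
        error = target - measured
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Example: drive one joint towards a mapped target angle of 1.0 rad.
controller = PID(kp=2.0, ki=0.5, kd=0.1)
command = controller.step(target=1.0, measured=0.0, dt=0.1)
```

In a motion reproduction setting, one such controller per joint would track the sequence of mapped joint positions; gain tuning is robot-specific and not covered by the abstract.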

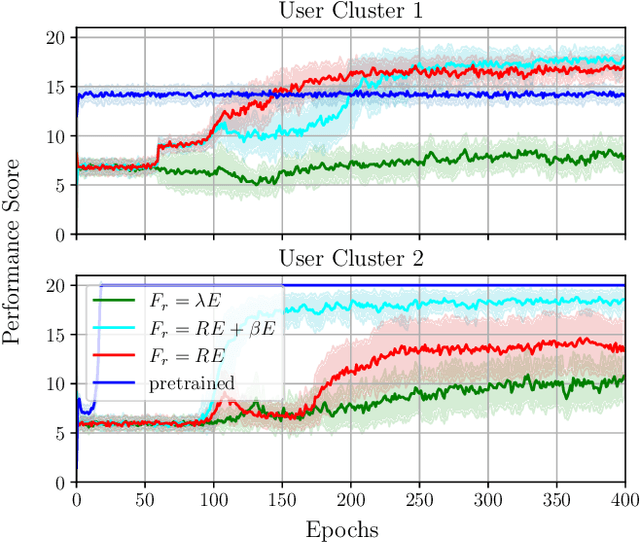

Personalised Robot Behaviour Modelling for Robot-Assisted Therapy in the Context of Autism Spectrum Disorder

Jul 25, 2022

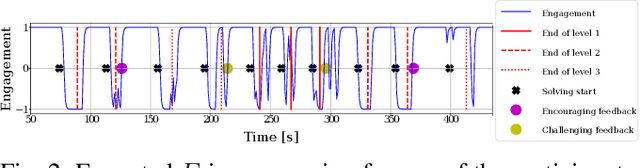

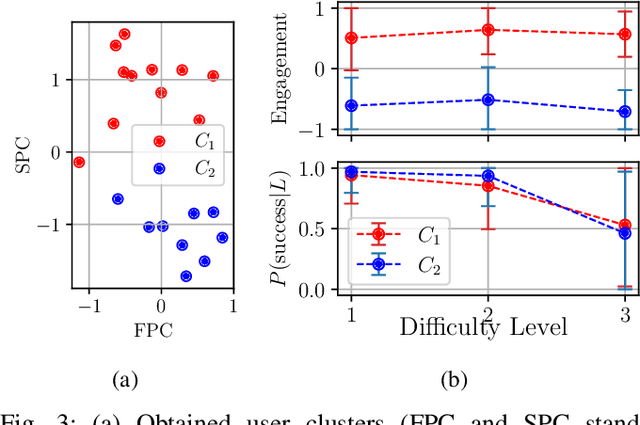

Abstract: In robot-assisted therapy for individuals with Autism Spectrum Disorder, the workload of therapists during a therapeutic session is increased if they have to control the robot manually. To allow therapists to focus on the interaction with the person instead, the robot should be more autonomous, namely it should be able to interpret the person's state and continuously adapt its actions according to their behaviour. In this paper, we develop a personalised robot behaviour model that can be used in the robot decision-making process during an activity; this behaviour model is trained with the help of a user model that has been learned from real interaction data. We use Q-learning for this task; the results demonstrate that the policy requires about 10,000 iterations to converge. We thus investigate policy transfer for improving the convergence speed; we show that this is a feasible solution, but an inappropriate initial policy can lead to a suboptimal final return.
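The Q-learning update underlying the behaviour model in this abstract can be written in a few lines. The sketch below shows the standard tabular rule only; the state and action labels, the learning rate, and the discount factor are placeholders, not values from the paper.

```python
from typing import Dict, Hashable, Sequence, Tuple

QTable = Dict[Tuple[Hashable, Hashable], float]


def q_update(
    q: QTable,
    state: Hashable,
    action: Hashable,
    reward: float,
    next_state: Hashable,
    actions: Sequence[Hashable],
    alpha: float = 0.5,
    gamma: float = 0.9,
) -> float:
    """Apply one tabular Q-learning update and return the new Q(state, action).

    Unseen (state, action) pairs are treated as having value 0.0.
    """
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    td_target = reward + gamma * best_next
    old_value = q.get((state, action), 0.0)
    q[(state, action)] = old_value + alpha * (td_target - old_value)
    return q[(state, action)]


# Example with placeholder labels: reward 1.0 for a "feedback" action.
q_table: QTable = {}
new_value = q_update(
    q_table, "engaged", "feedback", 1.0, "engaged", ["feedback", "wait"]
)
```

Policy transfer, in this picture, amounts to starting from a copy of a previously learned table, for example `q_table = dict(source_table)` with a hypothetical `source_table`, instead of from an empty one.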

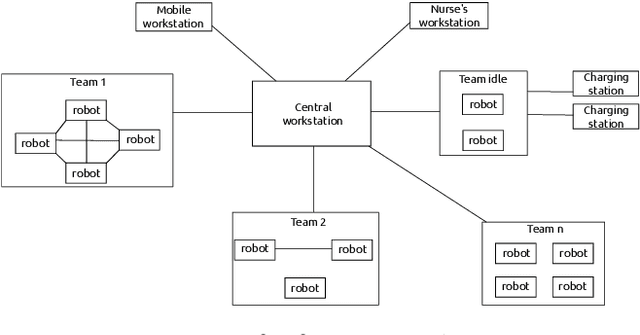

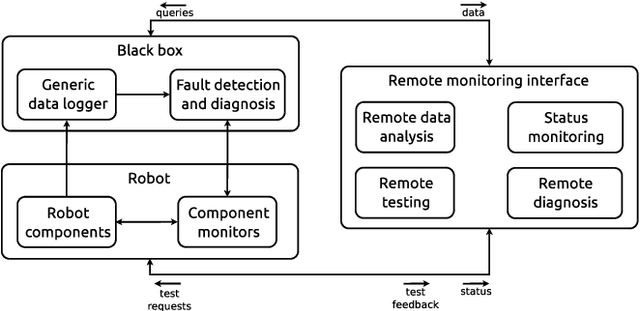

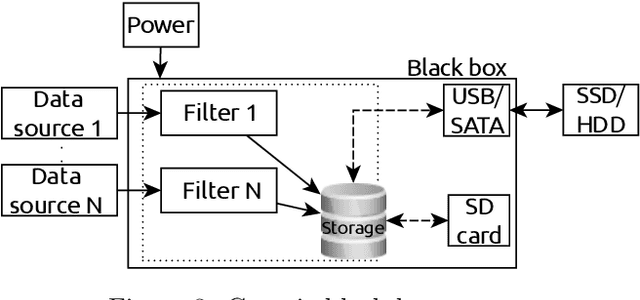

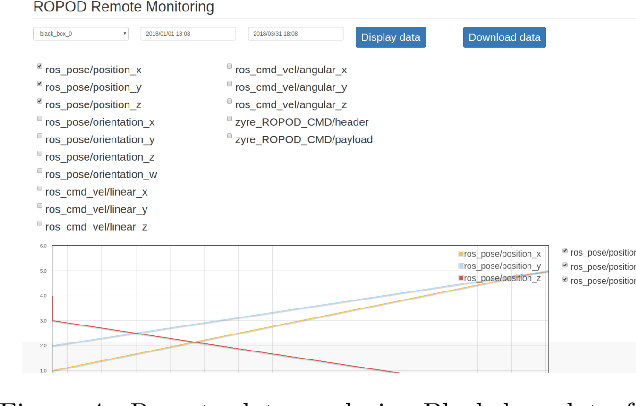

Deploying Robots in Everyday Environments: Towards Dependable and Practical Robotic Systems

Jun 25, 2022

Abstract: Robot deployment in realistic dynamic environments is a challenging problem despite the fact that robots can be quite skilled at a large number of isolated tasks. One reason for this is that robots are rarely equipped with powerful introspection capabilities, which means that they cannot always deal with failures in a reasonable manner; in addition, manual diagnosis is often a tedious task that requires technicians to have a considerable set of robotics skills. In this paper, we discuss our ongoing efforts - in the context of the ROPOD project - to address some of these problems. In particular, we (i) present our early efforts at developing a robotic black box and consider some factors that complicate its design, (ii) explain our component and system monitoring concept, and (iii) describe the necessity for remote monitoring and experimentation as well as our initial attempts at performing those. Our preliminary work opens a range of promising directions for making robots more usable and reliable in practice - not only in the context of ROPOD, but in a more general sense as well.
